APPLIES TO: Azure Data Factory, Azure Synapse Analytics

Tip

Try out Data Factory in Microsoft Fabric, an all-in-one analytics solution for enterprises. Microsoft Fabric covers everything from data movement to data science, real-time analytics, business intelligence, and reporting. Learn how to start a new trial for free!

Mapping data flows are visually designed data transformations in Azure Data Factory. Data flows allow data engineers to develop data transformation logic without writing code. The resulting data flows are executed as activities within Azure Data Factory pipelines that use scaled-out Apache Spark clusters. Data flow activities can be operationalized using existing Azure Data Factory scheduling, control flow, and monitoring capabilities.

Mapping data flows provide an entirely visual experience with no coding required. Your data flows run on ADF-managed execution clusters for scaled-out data processing. Azure Data Factory handles all the code translation, path optimization, and execution of your data flow jobs.

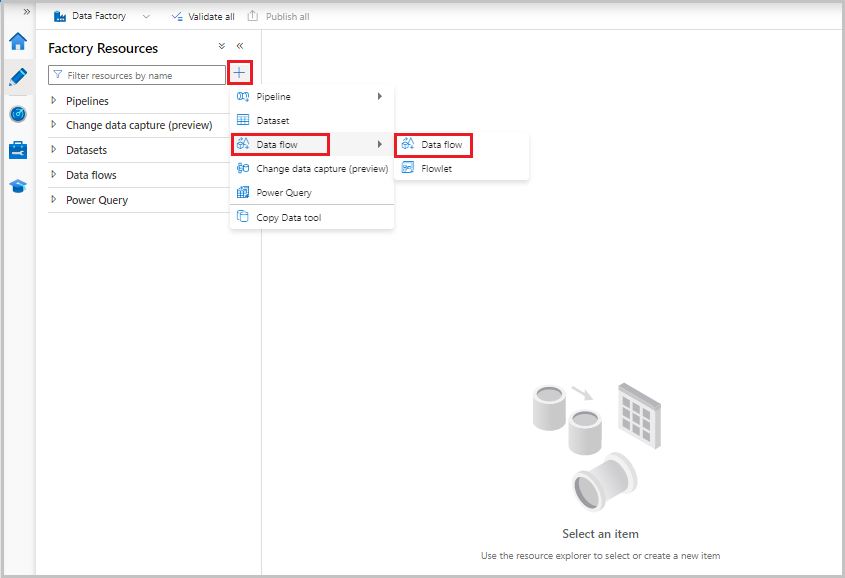

Like pipelines and datasets, data flows are created from the Factory Resources pane. To create a data flow, select the plus sign next to Factory Resources, and then select Data Flow.

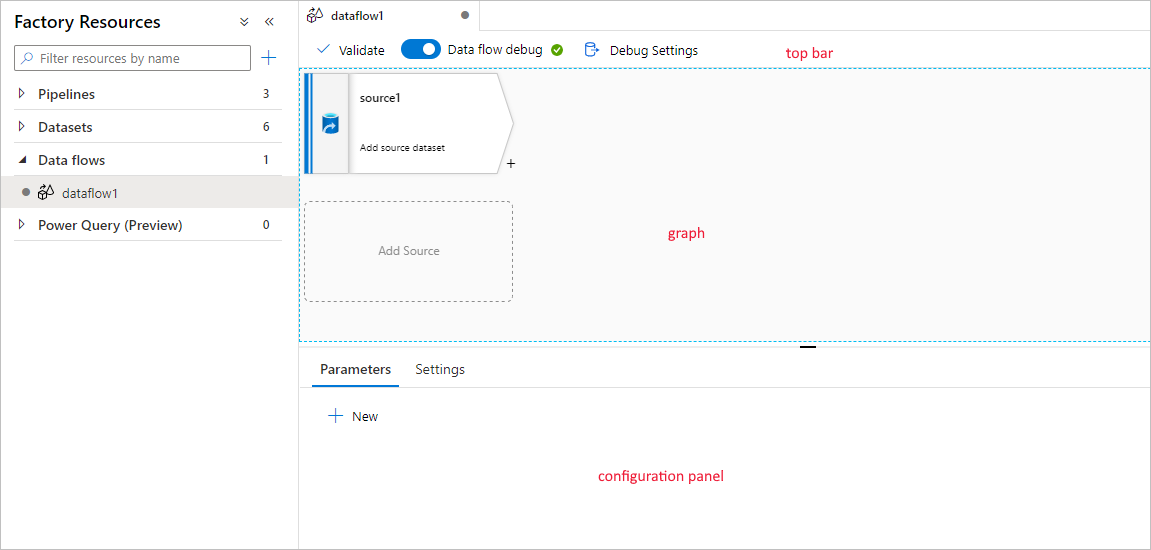

This action takes you to the data flow canvas, where you can create your transformation logic. Select Add source to start configuring your source transformation. For more information, see Source transformation.

Mapping data flow has a unique authoring canvas designed to make building transformation logic easy. The data flow canvas is separated into three parts: the top bar, the graph, and the configuration panel.

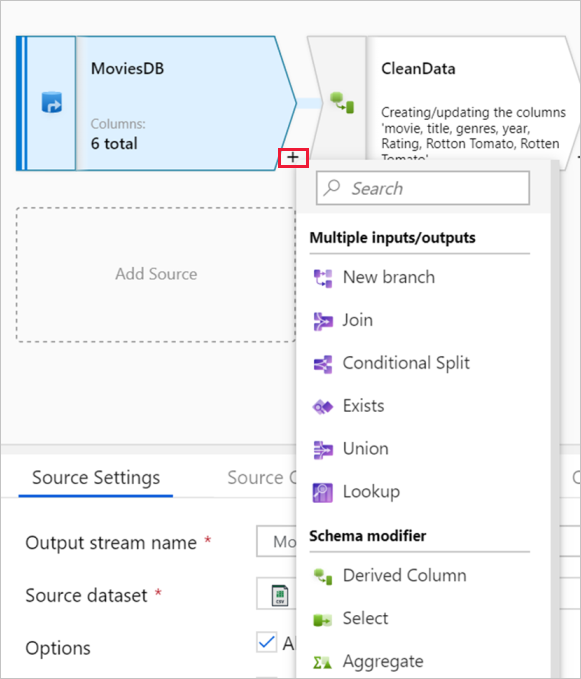

The graph displays the transformation stream. It shows the lineage of source data as it flows into one or more sinks. A sink can be any data destination where you want to land the results of your transformed data. To add a new source, select Add source. To add a new transformation, select the plus sign on the lower right of an existing transformation. Learn more on how to manage the data flow graph.
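To make the idea of lineage concrete, the sketch below models a data flow graph as a plain adjacency map and walks upstream from a sink to find the sources that feed it. It is only a conceptual illustration: the stream names (`MoviesSource`, `RatingsSource`, `JoinRatings`, `FilterYear`, `CuratedSink`) and the helper `upstream_sources` are hypothetical and are not part of Azure Data Factory.

```python
from typing import Dict, List, Set

# Hypothetical graph: each transformation maps to the streams it reads from.
# Sources are the nodes with no inputs.
GRAPH: Dict[str, List[str]] = {
    "MoviesSource": [],
    "RatingsSource": [],
    "JoinRatings": ["MoviesSource", "RatingsSource"],
    "FilterYear": ["JoinRatings"],
    "CuratedSink": ["FilterYear"],
}


def upstream_sources(graph: Dict[str, List[str]], node: str) -> Set[str]:
    """Return every source (a node with no inputs) that feeds into `node`."""
    inputs = graph[node]
    if not inputs:
        return {node}
    found: Set[str] = set()
    for parent in inputs:
        found |= upstream_sources(graph, parent)
    return found


if __name__ == "__main__":
    # Trace the lineage of the sink back to its sources.
    print(sorted(upstream_sources(GRAPH, "CuratedSink")))
```

Reading the graph from sink to source like this mirrors how the canvas is usually inspected when you want to know which inputs contribute to a given output.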

The configuration panel shows the settings specific to the currently selected transformation. If no transformation is selected, it shows the settings for the data flow as a whole. In the overall data flow configuration, you can add parameters via the Parameters tab. For more information, see Mapping data flow parameters.

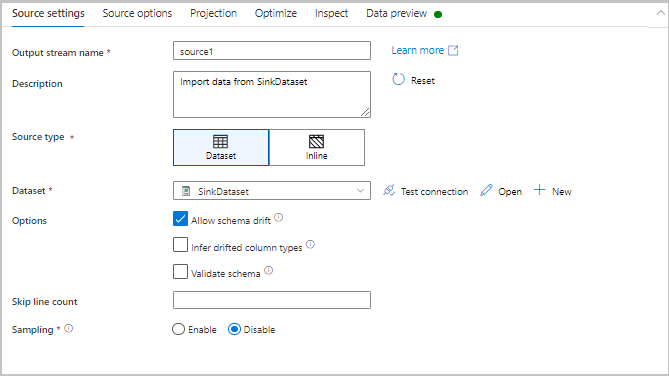

Each transformation contains at least four configuration tabs.

The first tab in each transformation's configuration pane contains the settings specific to that transformation. For more information, see that transformation's documentation page.

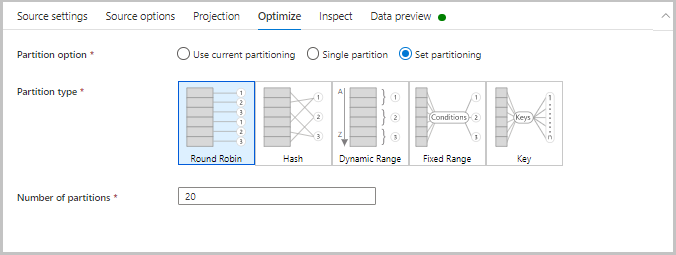

The Optimize tab contains settings to configure partitioning schemes. To learn more about how to optimize your data flows, see the mapping data flow performance guide.

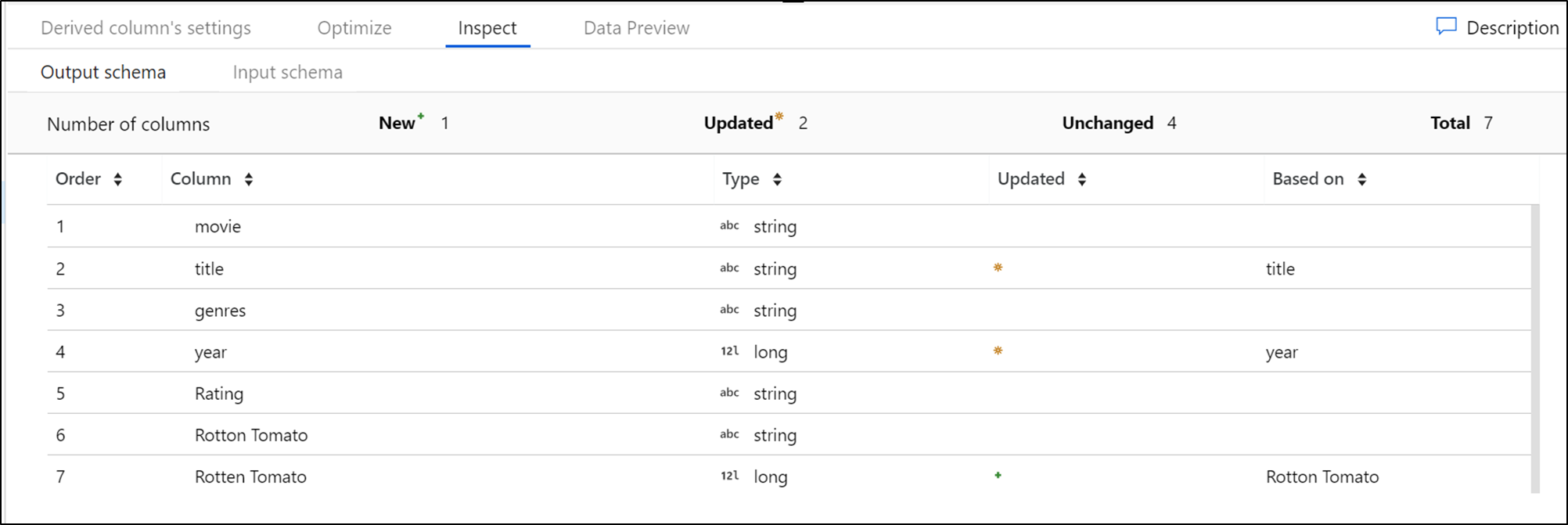
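A partitioning scheme decides which partition each row lands in. As a rough intuition, the sketch below contrasts two common schemes, round robin and hash partitioning, in plain Python. It is a conceptual illustration only and does not show how Azure Data Factory or Spark implements partitioning; the function names are hypothetical, and `zlib.crc32` is used purely as a stand-in hash function.

```python
import zlib
from typing import Any, Dict, List, Sequence

Row = Dict[str, Any]


def round_robin_partition(rows: Sequence[Row], num_partitions: int) -> List[List[Row]]:
    """Spread rows evenly by assigning them to partitions in turn."""
    partitions: List[List[Row]] = [[] for _ in range(num_partitions)]
    for index, row in enumerate(rows):
        partitions[index % num_partitions].append(row)
    return partitions


def hash_partition(rows: Sequence[Row], key: str, num_partitions: int) -> List[List[Row]]:
    """Send rows with the same key value to the same partition."""
    partitions: List[List[Row]] = [[] for _ in range(num_partitions)]
    for row in rows:
        # crc32 is a deterministic stand-in hash for illustration.
        bucket = zlib.crc32(str(row[key]).encode("utf-8")) % num_partitions
        partitions[bucket].append(row)
    return partitions


if __name__ == "__main__":
    rows = [{"customer": c, "amount": a}
            for c, a in [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("b", 5)]]
    print([len(p) for p in round_robin_partition(rows, 2)])
    print([[r["customer"] for r in p] for p in hash_partition(rows, "customer", 2)])
```

Round robin gives evenly sized partitions regardless of content, while hash partitioning keeps rows that share a key together, which can matter for joins and aggregations at the cost of possible skew.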

The Inspect tab provides a view into the metadata of the data stream that you're transforming. You can see column counts, the columns changed, the columns added, data types, the column order, and column references. Inspect is a read-only view of your metadata. You don't need to have debug mode enabled to see metadata in the Inspect pane.

As you change the shape of your data through transformations, you can see the metadata changes flow in the Inspect pane. If there isn't a defined schema in your source transformation, then metadata isn't visible in the Inspect pane. Lack of metadata is common in schema drift scenarios.

If debug mode is on, the Data Preview tab gives you an interactive snapshot of the data at each transform. For more information, see Data preview in debug mode.

The top bar contains actions that affect the whole data flow, like saving and validation. You can view the underlying JSON code and data flow script of your transformation logic as well. For more information, learn about the data flow script.
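As a small illustration of working with the script view, the sketch below lists the output stream names in a data flow script. It assumes the script follows the convention of naming each transformation's output with `~> StreamName`. The sample script and the helper `output_stream_names` are hypothetical and written for illustration; they are not copied from a real data flow.

```python
import re
from typing import List

# Hypothetical script: a source, a filter, and a sink, each naming its
# output stream after the `~>` arrow.
SAMPLE_SCRIPT = """
source(output(movieId as string, title as string),
    allowSchemaDrift: true,
    validateSchema: false) ~> MoviesSource
MoviesSource filter(title != '') ~> DropUntitled
DropUntitled sink(allowSchemaDrift: true,
    validateSchema: false) ~> CuratedSink
"""


def output_stream_names(script: str) -> List[str]:
    """Return the stream names that follow each `~>` arrow, in script order."""
    return re.findall(r"~>\s*(\w+)", script)


if __name__ == "__main__":
    print(output_stream_names(SAMPLE_SCRIPT))
```

A regular expression is enough for a quick listing like this; it is not a parser for the script language and would need more care for anything beyond simple inspection.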

View the mapping data flow transformation overview to get a list of available transformations.

Mapping data flows are operationalized within ADF pipelines using the data flow activity. You only need to specify which integration runtime to use and pass in parameter values. For more information, learn about the Azure integration runtime.
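The two inputs mentioned above, the integration runtime and the parameter values, can be pictured as fields in the activity's JSON definition. The sketch below builds a minimal definition as a Python dictionary. The activity, data flow, and integration runtime names and the `minYear` parameter are hypothetical; the property layout is an assumption based on the general shape of a data flow activity, so check the Data Flow activity reference for the exact properties and for how parameter values must be encoded.

```python
import json
from typing import Any, Dict


def build_data_flow_activity(
    activity_name: str,
    data_flow_name: str,
    integration_runtime_name: str,
    parameters: Dict[str, Any],
) -> Dict[str, Any]:
    """Assemble a minimal data flow activity definition (assumed layout)."""
    return {
        "name": activity_name,
        "type": "ExecuteDataFlow",
        "typeProperties": {
            # Which data flow to run, plus the parameter values to pass in.
            "dataflow": {
                "referenceName": data_flow_name,
                "type": "DataFlowReference",
                "parameters": parameters,
            },
            # Which integration runtime executes the data flow.
            "integrationRuntime": {
                "referenceName": integration_runtime_name,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


if __name__ == "__main__":
    activity = build_data_flow_activity(
        activity_name="RunCurateMovies",        # hypothetical
        data_flow_name="CurateMovies",          # hypothetical
        integration_runtime_name="MyAzureIR",   # hypothetical
        parameters={"minYear": 2000},           # hypothetical parameter
    )
    print(json.dumps(activity, indent=2))
```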

Debug mode allows you to interactively see the results of each transformation step while you build and debug your data flows. The debug session can be used both when building your data flow logic and when running pipeline debug runs with data flow activities. To learn more, see the debug mode documentation.

Mapping data flow integrates with existing Azure Data Factory monitoring capabilities. To learn how to understand data flow monitoring output, see monitoring mapping data flows.

The Azure Data Factory team has created a performance tuning guide to help you optimize the execution time of your data flows after building your business logic.
