Execute Pipeline activity in Azure Data Factory and Synapse Analytics

APPLIES TO: Azure Data Factory, Azure Synapse Analytics

The Execute Pipeline activity allows a Data Factory or Synapse pipeline to invoke another pipeline.

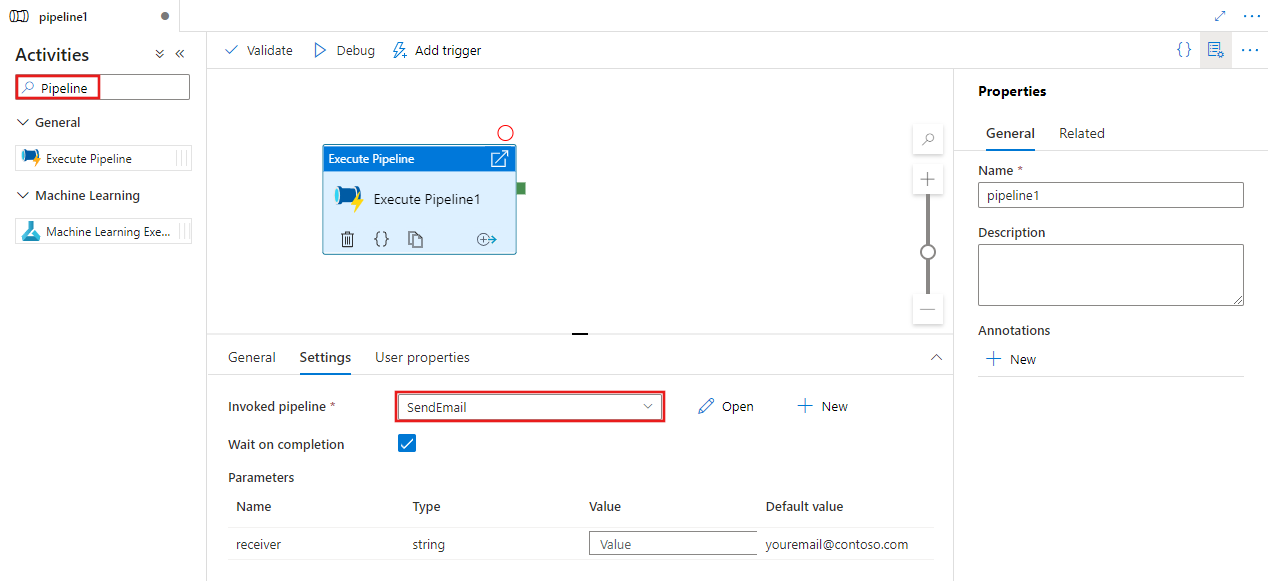

Create an Execute Pipeline activity with UI

To use an Execute Pipeline activity in a pipeline, complete the following steps:

1. Search for "pipeline" in the pipeline Activities pane, and drag an Execute Pipeline activity onto the pipeline canvas.
2. Select the new Execute Pipeline activity on the canvas if it isn't already selected, then select its Settings tab to edit its details.
3. Select an existing pipeline, or create a new one by using the New button. Then select any other options and configure the parameters that the invoked pipeline requires.

Syntax

{
    "name": "MyPipeline",
    "properties": {
        "activities": [
            {
                "name": "ExecutePipelineActivity",
                "type": "ExecutePipeline",
                "typeProperties": {
                    "parameters": {
                        "mySourceDatasetFolderPath": {
                            "value": "@pipeline().parameters.mySourceDatasetFolderPath",
                            "type": "Expression"
                        }
                    },
                    "pipeline": {
                        "referenceName": "<InvokedPipelineName>",
                        "type": "PipelineReference"
                    },
                    "waitOnCompletion": true
                }
            }
        ],
        "parameters": {
            "mySourceDatasetFolderPath": {
                "type": "String"
            }
        }
    }
}

Type properties

| Property | Description | Allowed values | Required |
|---|---|---|---|
| name | Name of the Execute Pipeline activity. | String | Yes |
| type | Must be set to: ExecutePipeline. | String | Yes |
| pipeline | Pipeline reference to the dependent pipeline that this pipeline invokes. A pipeline reference object has two properties: referenceName and type. The referenceName property specifies the name of the referenced pipeline. The type property must be set to PipelineReference. | PipelineReference | Yes |
| parameters | Parameters to be passed to the invoked pipeline. | A JSON object that maps parameter names to argument values | No |
| waitOnCompletion | Defines whether activity execution waits for the dependent pipeline execution to finish. Default is true. | Boolean | No |
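
As an illustration of the optional properties, the following fragment sketches an activity that omits parameters and sets waitOnCompletion to false, so the calling pipeline would continue without waiting for the invoked pipeline to finish. The activity name and description are hypothetical, and `<InvokedPipelineName>` is a placeholder for a pipeline of your own.

```json
{
    "name": "FireAndForgetExecutePipeline",
    "description": "Hypothetical example: starts the invoked pipeline and does not wait for it to finish.",
    "type": "ExecutePipeline",
    "typeProperties": {
        "pipeline": {
            "referenceName": "<InvokedPipelineName>",
            "type": "PipelineReference"
        },
        "waitOnCompletion": false
    }
}
```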

Sample

This scenario has two pipelines:

- Master pipeline - This pipeline has one Execute Pipeline activity that calls the invoked pipeline. The master pipeline takes two parameters: masterSourceBlobContainer, masterSinkBlobContainer.
- Invoked pipeline - This pipeline has one Copy activity that copies data from an Azure Blob source to an Azure Blob sink. The invoked pipeline takes two parameters: sourceBlobContainer, sinkBlobContainer.

Master pipeline definition

{
    "name": "masterPipeline",
    "properties": {
        "activities": [
            {
                "type": "ExecutePipeline",
                "typeProperties": {
                    "pipeline": {
                        "referenceName": "invokedPipeline",
                        "type": "PipelineReference"
                    },
                    "parameters": {
                        "sourceBlobContainer": {
                            "value": "@pipeline().parameters.masterSourceBlobContainer",
                            "type": "Expression"
                        },
                        "sinkBlobContainer": {
                            "value": "@pipeline().parameters.masterSinkBlobContainer",
                            "type": "Expression"
                        }
                    },
                    "waitOnCompletion": true
                },
                "name": "MyExecutePipelineActivity"
            }
        ],
        "parameters": {
            "masterSourceBlobContainer": {
                "type": "String"
            },
            "masterSinkBlobContainer": {
                "type": "String"
            }
        }
    }
}

Invoked pipeline definition

{
    "name": "invokedPipeline",
    "properties": {
        "activities": [
            {
                "type": "Copy",
                "typeProperties": {
                    "source": {
                        "type": "BlobSource"
                    },
                    "sink": {
                        "type": "BlobSink"
                    }
                },
                "name": "CopyBlobtoBlob",
                "inputs": [
                    {
                        "referenceName": "SourceBlobDataset",
                        "type": "DatasetReference"
                    }
                ],
                "outputs": [
                    {
                        "referenceName": "sinkBlobDataset",
                        "type": "DatasetReference"
                    }
                ]
            }
        ],
        "parameters": {
            "sourceBlobContainer": {
                "type": "String"
            },
            "sinkBlobContainer": {
                "type": "String"
            }
        }
    }
}

Linked service

{
    "name": "BlobStorageLinkedService",
    "properties": {
        "type": "AzureStorage",
        "typeProperties": {
            "connectionString": "DefaultEndpointsProtocol=https;AccountName=*****;AccountKey=*****"
        }
    }
}

Source dataset

{
    "name": "SourceBlobDataset",
    "properties": {
        "type": "AzureBlob",
        "typeProperties": {
            "folderPath": {
                "value": "@pipeline().parameters.sourceBlobContainer",
                "type": "Expression"
            },
            "fileName": "salesforce.txt"
        },
        "linkedServiceName": {
            "referenceName": "BlobStorageLinkedService",
            "type": "LinkedServiceReference"
        }
    }
}

Sink dataset

{
    "name": "sinkBlobDataset",
    "properties": {
        "type": "AzureBlob",
        "typeProperties": {
            "folderPath": {
                "value": "@pipeline().parameters.sinkBlobContainer",
                "type": "Expression"
            }
        },
        "linkedServiceName": {
            "referenceName": "BlobStorageLinkedService",
            "type": "LinkedServiceReference"
        }
    }
}

Running the pipeline

To run the master pipeline in this example, the following values are passed for the masterSourceBlobContainer and masterSinkBlobContainer parameters:

{
    "masterSourceBlobContainer": "executetest",
    "masterSinkBlobContainer": "executesink"
}

The master pipeline forwards these values to the invoked pipeline as shown in the following example:

{
    "type": "ExecutePipeline",
    "typeProperties": {
        "pipeline": {
            "referenceName": "invokedPipeline",
            "type": "PipelineReference"
        },
        "parameters": {
            "sourceBlobContainer": {
                "value": "@pipeline().parameters.masterSourceBlobContainer",
                "type": "Expression"
            },
            "sinkBlobContainer": {
                "value": "@pipeline().parameters.masterSinkBlobContainer",
                "type": "Expression"
            }
        },
        ....
}
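
Given the run values for masterSourceBlobContainer and masterSinkBlobContainer shown earlier, the two expressions would resolve so that the invoked pipeline receives the parameter values below. This is a worked illustration derived from the sample definitions, not captured run output.

```json
{
    "sourceBlobContainer": "executetest",
    "sinkBlobContainer": "executesink"
}
```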

Warning

The Execute Pipeline activity passes an array parameter to the child pipeline as a string, because the payload is passed from the parent pipeline to the child as a string. You can see this by checking the input passed to the child pipeline. Please check this section for more details.
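
One possible way to handle this in the child pipeline, offered as an assumption rather than a documented fix, is to convert the received string back into an array with the `json()` expression function at the point where an array is needed. The sketch below shows a ForEach activity doing so. The names `ForEachContainer` and `containerList` are hypothetical, and the inner activities are left empty for brevity, so a real ForEach would need at least one activity added before it validates.

```json
{
    "name": "ForEachContainer",
    "description": "Hypothetical sketch: parses the string received from the parent pipeline back into an array.",
    "type": "ForEach",
    "typeProperties": {
        "items": {
            "value": "@json(pipeline().parameters.containerList)",
            "type": "Expression"
        },
        "activities": []
    }
}
```

Whether the conversion is needed depends on how the child pipeline consumes the parameter, so check the input recorded for the child run before relying on it.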

Related content

See other supported control flow activities: