Applies to: SQL Server on Linux

Starting with SQL Server 2017 (14.x), SQL Server is supported on both Linux and Windows. As with Windows-based SQL Server deployments, SQL Server databases and instances need to be highly available under Linux. This article covers the basics needed to understand Pacemaker with Corosync, and how to plan and deploy it for SQL Server configurations.

HA add-on/extension basics

All currently supported distributions ship a high availability add-on/extension that is based on the Pacemaker clustering stack. This stack incorporates two key components: Pacemaker and Corosync. All the components of the stack are:

- Pacemaker. The core clustering component that coordinates everything across the clustered machines.

- Corosync. A framework and set of APIs that provides features such as quorum and the ability to restart failed processes.

- libQB. Provides features such as logging.

- Resource agent. Specific functionality provided so that an application can integrate with Pacemaker.

- Fence agent. Scripts and functionality that help isolate nodes and deal with them if they're having issues.

Note

The cluster stack is commonly referred to as Pacemaker in the Linux world.

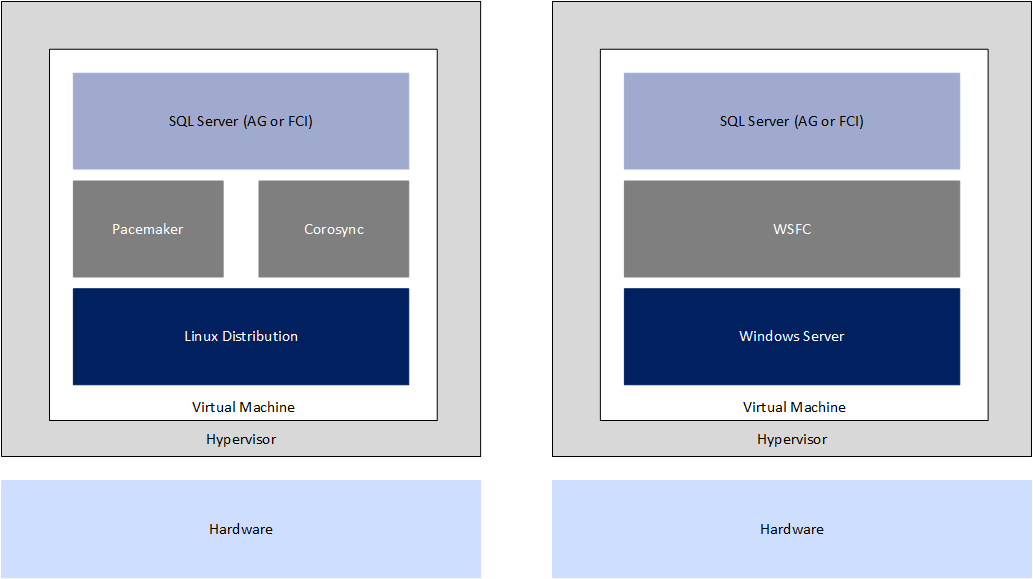

This solution is in some ways similar to deploying clustered configurations on Windows, but in many ways different. In Windows, the availability form of clustering, called a Windows Server failover cluster (WSFC), is built into the operating system, and the feature that enables the creation of a WSFC, failover clustering, is disabled by default. In Windows, AGs and FCIs are built on top of a WSFC and share tight integration because of the specific resource DLL that SQL Server provides. This tightly coupled solution is possible largely because it all comes from one vendor.

On Linux, while each supported distribution has Pacemaker available, each distribution can customize it and have slightly different implementations and versions. Some of the differences are reflected in the instructions in this article. The clustering layer is open source, so even though it ships with the distributions, it isn't tightly integrated in the same way a WSFC is under Windows. This is why Microsoft provides mssql-server-ha, so that SQL Server and the Pacemaker stack can provide an experience for AGs and FCIs that is close to, but not exactly the same as, the one under Windows.

For full documentation on Pacemaker, including a more in-depth explanation of what everything is along with full reference information, see the high availability documentation for RHEL and SLES.

Note

Starting with SQL Server 2025 (17.x), SUSE Linux Enterprise Server (SLES) isn't supported.

For more information about the whole stack, also see the official Pacemaker documentation page on the ClusterLabs site.

Pacemaker concepts and terminology

This section documents the common concepts and terminology for a Pacemaker implementation.

Node

A node is a server participating in the cluster. A Pacemaker cluster natively supports up to 16 nodes. This number can be exceeded if Corosync isn't running on the additional nodes, but Corosync is required for SQL Server. Therefore, the maximum number of nodes a cluster can have for any SQL Server-based configuration is 16. This is the Pacemaker limit, and it has nothing to do with the maximum limits for AGs or FCIs imposed by SQL Server.

Resource

Both a WSFC and a Pacemaker cluster have the concept of a resource. A resource is specific functionality that runs in the context of the cluster, such as a disk or an IP address. For example, under Pacemaker both FCI and AG resources can be created. This is similar to a WSFC, where you see a SQL Server resource for an FCI or an AG resource when configuring an AG, but it isn't exactly the same because of the underlying differences in how SQL Server integrates with Pacemaker.

Pacemaker has standard and clone resources. Clone resources are ones that run simultaneously on all nodes. An example would be an IP address that runs on multiple nodes for load balancing purposes. Any resource that is created for FCIs uses a standard resource, since only one node can host an FCI at any given time.

Note

This article contains references to the term slave, a term Microsoft no longer uses. When the term is removed from the software, we'll remove it from this article.

When an AG is created, it requires a specialized form of a clone resource called a multi-state resource. While an AG has only one primary replica, the AG itself runs across all nodes that it is configured to work on, and can potentially allow things such as read-only access. Because this is a "live" use of the node, the resources have the concept of two states: Promoted (formerly Master) and Unpromoted (formerly Slave). For more information, see Multi-state resources: Resources that have multiple modes.
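To make the two states concrete, here is a minimal Python sketch that models the instances of a multi-state resource and checks that exactly one of them is Promoted. It is an illustration only: `ReplicaInstance`, `validate_ag_clone`, and the node names are hypothetical and aren't part of Pacemaker or mssql-server-ha.

```python
from dataclasses import dataclass

VALID_ROLES = ("Promoted", "Unpromoted")


@dataclass
class ReplicaInstance:
    """One instance of the multi-state resource on one node (hypothetical model)."""
    node: str
    role: str


def validate_ag_clone(instances):
    """Return True when every role is valid and exactly one instance is Promoted."""
    roles = [instance.role for instance in instances]
    if any(role not in VALID_ROLES for role in roles):
        return False
    return roles.count("Promoted") == 1


# Hypothetical three-node AG: one primary replica, two secondaries.
ag_instances = [
    ReplicaInstance("node1", "Promoted"),
    ReplicaInstance("node2", "Unpromoted"),
    ReplicaInstance("node3", "Unpromoted"),
]
print(validate_ag_clone(ag_instances))
```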

Resource groups/sets

Similar to roles in a WSFC, a Pacemaker cluster has the concept of a resource group. A resource group (called a set in SLES) is a collection of resources that function together and can fail over from one node to another as a single unit. Resource groups can't contain resources that are configured as Promoted or Unpromoted, so they can't be used for AGs. While a resource group can be used for FCIs, it isn't generally a recommended configuration.

Constraints

WSFCs have various parameters for resources, as well as things like dependencies, which tell the WSFC about a parent/child relationship between two different resources. A dependency is just a rule telling the WSFC which resource needs to be online first.

A Pacemaker cluster doesn't have the concept of dependencies, but it does have constraints. There are three kinds of constraints: colocation, location, and ordering.

- A colocation constraint enforces whether or not two resources should run on the same node.

- A location constraint tells the Pacemaker cluster where a resource can (or can't) run.

- An ordering constraint tells the Pacemaker cluster the order in which the resources should start.

Note

Colocation constraints aren't required for resources in a resource group, since all of those are seen as a single unit.
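The three constraint kinds can be sketched as plain data plus two checks. This is a simplified Python model and not how Pacemaker evaluates constraints internally: the class names, the resource names `ag_cluster` and `virtualip`, and the node names are assumptions made for illustration, and location rules are reduced to "must avoid this node".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Colocation:
    resource: str
    with_resource: str  # both resources must run on the same node


@dataclass(frozen=True)
class Location:
    resource: str
    avoid_node: str  # the resource must not run on this node


@dataclass(frozen=True)
class Ordering:
    first: str
    then: str  # 'first' must start before 'then'


def placement_ok(placement, colocations, locations):
    """Check a resource-to-node mapping against colocation and location rules."""
    for rule in colocations:
        if placement[rule.resource] != placement[rule.with_resource]:
            return False
    for rule in locations:
        if placement[rule.resource] == rule.avoid_node:
            return False
    return True


def start_order_ok(start_order, orderings):
    """Check that every 'first' resource appears before its 'then' resource."""
    position = {name: index for index, name in enumerate(start_order)}
    return all(position[rule.first] < position[rule.then] for rule in orderings)


# Hypothetical rules: the IP address follows the AG and starts after it.
colocations = [Colocation("virtualip", "ag_cluster")]
locations = [Location("ag_cluster", "node3")]
orderings = [Ordering("ag_cluster", "virtualip")]

placement = {"ag_cluster": "node1", "virtualip": "node1"}
print(placement_ok(placement, colocations, locations))
print(start_order_ok(["ag_cluster", "virtualip"], orderings))
```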

Quorum, fence agents, and STONITH

Quorum under Pacemaker is similar in concept to quorum in a WSFC. The whole purpose of a cluster's quorum mechanism is to ensure that the cluster stays up and running. Both a WSFC and the HA add-ons for the Linux distributions have the concept of voting, where each node counts towards quorum. You want a majority of the votes up; otherwise, in a worst-case scenario, the cluster is shut down.

Unlike a WSFC, there's no witness resource to work with quorum. As with a WSFC, the goal is to keep the number of voters odd. Quorum configuration has different considerations for AGs than for FCIs.
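The majority rule, and the reason an odd number of voters is preferred, can be shown with a little arithmetic. The following Python sketch assumes one vote per node and a plain majority; it ignores any special quorum options a distribution might offer, and the function names are illustrative only.

```python
def votes_needed(total_votes: int) -> int:
    """Smallest number of votes that is a strict majority."""
    return total_votes // 2 + 1


def has_quorum(total_votes: int, votes_online: int) -> bool:
    """True when the votes that are up form a majority."""
    return votes_online >= votes_needed(total_votes)


def tolerated_failures(total_votes: int) -> int:
    """How many voters can be lost while the rest still hold a majority."""
    return total_votes - votes_needed(total_votes)


for nodes in (2, 3, 4, 5):
    print(nodes, votes_needed(nodes), tolerated_failures(nodes))
```

Under these assumptions, a four-node cluster tolerates the same single failure as a three-node cluster, so the extra even-numbered voter adds no resilience.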

WSFCs monitor the status of the participating nodes and handle them when a problem occurs. Later versions of WSFCs offer features such as quarantining a node that is misbehaving or unavailable (the node isn't on, network communication is down, and so on). On the Linux side, this type of functionality is provided by a fence agent. The concept is sometimes referred to as fencing. However, these fence agents are generally specific to the deployment, and are often provided by hardware vendors and some software vendors, such as those who provide hypervisors. For example, VMware provides a fence agent that can be used for Linux VMs virtualized using vSphere.

Quorum and fencing tie into another concept called STONITH, or Shoot the Other Node in the Head. STONITH is required to have a supported Pacemaker cluster on all Linux distributions. For more information, see Fencing in a Red Hat High Availability Cluster (RHEL).

corosync.conf

The corosync.conf file contains the configuration of the cluster. It's located in /etc/corosync. In the course of normal day-to-day operations, this file should never have to be edited if the cluster is set up properly.

Cluster log location

Log locations for Pacemaker clusters differ depending on the distribution.

- RHEL and SLES: /var/log/cluster/corosync.log

- Ubuntu: /var/log/corosync/corosync.log
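For scripts that collect logs across mixed environments, the defaults listed above can be captured in a small lookup. This Python sketch only encodes the paths given in this article; the function name and the lowercase distribution keys are assumptions chosen for illustration.

```python
# Default Corosync log paths as listed in this article.
DEFAULT_COROSYNC_LOGS = {
    "rhel": "/var/log/cluster/corosync.log",
    "sles": "/var/log/cluster/corosync.log",
    "ubuntu": "/var/log/corosync/corosync.log",
}


def default_log_path(distribution: str) -> str:
    """Return the default log path for a distribution name (case-insensitive)."""
    key = distribution.strip().lower()
    if key not in DEFAULT_COROSYNC_LOGS:
        raise ValueError(f"No default log path known for: {distribution!r}")
    return DEFAULT_COROSYNC_LOGS[key]


print(default_log_path("Ubuntu"))
```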

To change the default logging location, modify corosync.conf.
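Before changing the location, it can help to confirm what the file currently specifies. The Python sketch below reads the `logfile` setting from the `logging` section of a corosync.conf-style text. The sample content, including the cluster name, is made up for illustration, and the parsing is deliberately naive: it assumes the `logging` section contains no nested subsections.

```python
import re

# Hypothetical sample; a real file lives at /etc/corosync/corosync.conf.
SAMPLE_CONF = """
totem {
    version: 2
    cluster_name: samplecluster
}
logging {
    to_logfile: yes
    logfile: /var/log/cluster/corosync.log
    to_syslog: yes
}
"""


def find_logfile(conf_text):
    """Return the 'logfile' value from the logging section, or None if absent."""
    match = re.search(r"logging\s*\{([^}]*)\}", conf_text)
    if match is None:
        return None
    for line in match.group(1).splitlines():
        key, separator, value = line.partition(":")
        if separator and key.strip() == "logfile":
            return value.strip()
    return None


print(find_logfile(SAMPLE_CONF))
```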

Plan Pacemaker clusters for SQL Server

This section discusses the important planning points for a Pacemaker cluster.

Virtualize Linux-based Pacemaker clusters for SQL Server

Using virtual machines to deploy Linux-based SQL Server deployments for AGs and FCIs is covered by the same rules as for their Windows-based counterparts. Microsoft provides a base set of rules for the supportability of virtualized SQL Server deployments in Support policy for Microsoft SQL Server products that are running in a hardware virtualization environment. Different hypervisors, such as Microsoft's Hyper-V and VMware's ESXi, might have different variances on top of that because of differences in the platforms themselves.

When it comes to AGs and FCIs under virtualization, ensure that anti-affinity is set for the nodes of a given Pacemaker cluster. When configured for high availability in an AG or FCI configuration, the VMs hosting SQL Server should never run on the same hypervisor host. For example, if a two-node FCI is deployed, there need to be at least three hypervisor hosts so that one of the VMs hosting a node has somewhere to go if a host fails, especially if features like Live Migration or vMotion are in use.

For Hyper-V documentation, see Using Guest Clustering for High Availability.
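The anti-affinity rule and the "one spare host" guideline described above can be expressed as two simple checks. This Python sketch is a planning aid under stated assumptions, not a hypervisor API: the VM and host names are hypothetical, and the placement mapping would have to come from your own inventory.

```python
def anti_affinity_violations(vm_to_host):
    """Return hosts that run more than one cluster VM, with the VMs on each."""
    vms_by_host = {}
    for vm, host in vm_to_host.items():
        vms_by_host.setdefault(host, []).append(vm)
    return {host: sorted(vms) for host, vms in vms_by_host.items() if len(vms) > 1}


def has_spare_host(vm_to_host, all_hosts):
    """True when at least one hypervisor host runs none of the cluster VMs."""
    used_hosts = set(vm_to_host.values())
    return len(set(all_hosts) - used_hosts) >= 1


# Hypothetical two-node FCI spread across three hypervisor hosts.
cluster_vms = {"sqlvm1": "hv1", "sqlvm2": "hv2"}
hypervisor_hosts = ["hv1", "hv2", "hv3"]

print(anti_affinity_violations(cluster_vms))
print(has_spare_host(cluster_vms, hypervisor_hosts))
```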

Networking

Unlike a WSFC, Pacemaker doesn't require a dedicated name or at least one dedicated IP address for the Pacemaker cluster itself. AGs and FCIs require IP addresses (see the documentation for each for more information), but not names, since there's no network name resource. SLES does allow the configuration of an IP address for administration purposes, but it isn't required, as can be seen in Create the Pacemaker cluster.

Like a WSFC, Pacemaker prefers redundant networking, meaning distinct network cards (NICs, or pNICs for physical) that have individual IP addresses. In terms of the cluster configuration, each IP address has what is known as its own ring. However, as with WSFCs today, many implementations are virtualized or run in the public cloud, where only a single virtualized NIC (vNIC) is presented to the server. If all pNICs and vNICs are connected to the same physical or virtual switch, there's no true redundancy at the network layer, so configuring multiple NICs is something of an illusion for the virtual machine. Network redundancy is usually built into the hypervisor for virtualized deployments, and is definitely built into the public cloud.
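The point about shared switches can be checked mechanically when planning rings. The following Python sketch treats a node's networking as redundant only if its NICs reach at least two distinct switches. The NIC and switch names are hypothetical, and the mapping is assumed to come from your own network inventory.

```python
def distinct_switch_count(nic_to_switch):
    """Count the distinct switches behind a node's NICs."""
    return len(set(nic_to_switch.values()))


def network_is_redundant(nic_to_switch):
    """True only when the NICs are spread over at least two switches."""
    return distinct_switch_count(nic_to_switch) >= 2


# Two NICs on one switch: two rings on paper, one point of failure in practice.
shared_switch = {"eth0": "switch-a", "eth1": "switch-a"}
separate_switches = {"eth0": "switch-a", "eth1": "switch-b"}

print(network_is_redundant(shared_switch))
print(network_is_redundant(separate_switches))
```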

One difference between Pacemaker and a WSFC when using multiple NICs is that Pacemaker allows multiple IP addresses on the same subnet, whereas a WSFC doesn't. For more information on multiple subnets and Linux clusters, see the article Configure multiple-subnet Always On availability groups and failover cluster instances.
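When reviewing an address plan, it's useful to know whether two addresses fall in the same subnet, since that configuration works under Pacemaker but not in a WSFC. This Python sketch uses the standard library `ipaddress` module; the addresses are made-up examples and the function name is illustrative.

```python
import ipaddress


def same_subnet(address_a: str, address_b: str) -> bool:
    """Compare two addresses given in CIDR form, such as '10.0.0.11/24'."""
    network_a = ipaddress.ip_interface(address_a).network
    network_b = ipaddress.ip_interface(address_b).network
    return network_a == network_b


# Hypothetical addresses for two NICs on one node.
print(same_subnet("10.0.0.11/24", "10.0.0.12/24"))
print(same_subnet("10.0.0.11/24", "10.0.1.11/24"))
```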

Quorum and STONITH

Quorum configuration and requirements are related to AG-specific or FCI-specific deployments of SQL Server.

STONITH is required for a supported Pacemaker cluster. Use the documentation from the distribution to configure STONITH. For example, see Storage-based Fencing for SLES ... There's also a STONITH agent for VMware vCenter for ESXi-based solutions. For more information, see Stonith Plugin Agent for VMware VM VCenter SOAP Fencing (Unofficial).

Interoperability

This section documents how a Linux-based cluster can interact with a WSFC or with other distributions of Linux.

WSFC

Currently, there's no direct way for a WSFC and a Pacemaker cluster to work together. This means that it isn't possible to create an AG or FCI that works across a WSFC and Pacemaker. However, there are two interoperability solutions, both of which are designed for AGs. The only way an FCI can participate in a cross-platform configuration is as an instance in one of these two scenarios:

- An AG with a cluster type of None. For more information, see the Windows availability groups documentation.

- A distributed AG, which is a special type of availability group that allows two different AGs to be configured as their own availability group. For more information on distributed AGs, see Distributed availability groups.

Other Linux distributions

On Linux, all nodes of a Pacemaker cluster must be on the same distribution. For example, this means that a RHEL node can't be part of a Pacemaker cluster that has a SLES node. The main reason was stated earlier: the distributions might have different versions and functionality, so things might not work properly. Mixing distributions has the same story as mixing WSFCs and Linux: use a cluster type of None or distributed AGs.
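As a planning aid, the same-distribution rule can be checked against an inventory before a cluster is built. This Python sketch is illustrative only: the node names are hypothetical, and it compares distribution names without looking at versions.

```python
def distributions_in_use(node_to_distribution):
    """Return the set of distribution names across the planned nodes (lowercased)."""
    return {name.strip().lower() for name in node_to_distribution.values()}


def is_single_distribution(node_to_distribution):
    """True when every planned node uses the same distribution."""
    return len(distributions_in_use(node_to_distribution)) == 1


# Hypothetical inventories for two planned clusters.
uniform_cluster = {"node1": "RHEL", "node2": "RHEL", "node3": "RHEL"}
mixed_cluster = {"node1": "RHEL", "node2": "SLES"}

print(is_single_distribution(uniform_cluster))
print(is_single_distribution(mixed_cluster))
```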