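Quickstart: Add 1:1 video calling to your app

Get started with Azure Communication Services by using the Communication Services Calling SDK to add 1:1 video calling to your app. You'll learn how to start and answer a video call by using the Azure Communication Services Calling SDK for JavaScript.

Sample code

If you'd like to skip ahead to the end, you can download this quickstart as a sample on GitHub.

Note

You can access outbound calling to an Azure Communication Services user through the Azure Communication Services UI Library. The UI Library lets developers add a VoIP-enabled call client to their application with only a few lines of code.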

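Prerequisites

Get an Azure account with an active subscription. Create an account for free.

You need Node.js 18. You can install it by using the msi installer.

Create an active Communication Services resource. Create a Communication Services resource. You need to record your connection string for this quickstart.

Create a user access token to instantiate the call client. Learn how to create and manage user access tokens. You can also use the Azure CLI and run the following command with your connection string to create a user and an access token.

az communication identity token issue --scope voip --connection-string "yourConnectionString"

For more information, see Use Azure CLI to create and manage access tokens.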
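User access tokens expire, so a token pasted into the app may already be stale. Assuming the token is a JWT whose payload carries an exp claim (seconds since the Unix epoch), the sketch below reads its expiry locally. decodeBase64Url, getTokenExpiry, and isTokenExpiring are hypothetical helpers, not Azure SDK APIs. They rely on the atob and TextDecoder globals available in browsers and in Node.js 18, and they do not verify the signature, so treat the result as informational only.

```javascript
// Hypothetical helpers (not part of any Azure SDK) for inspecting a JWT-shaped token.
// They decode the payload only; the signature is NOT verified.

// Decodes one base64url segment (JWT alphabet, padding stripped) into a UTF-8 string.
function decodeBase64Url(segment) {
  let base64 = segment.replace(/-/g, '+').replace(/_/g, '/');
  while (base64.length % 4 !== 0) {
    base64 += '='; // Restore the padding that base64url omits.
  }
  const bytes = Uint8Array.from(atob(base64), (ch) => ch.charCodeAt(0));
  return new TextDecoder().decode(bytes);
}

// Returns the token's expiry as a Date, read from the exp claim (seconds since the epoch).
function getTokenExpiry(token) {
  const parts = token.trim().split('.');
  if (parts.length !== 3) {
    throw new Error('Expected a token of the form header.payload.signature');
  }
  const payload = JSON.parse(decodeBase64Url(parts[1]));
  if (typeof payload.exp !== 'number') {
    throw new Error('Token payload has no numeric exp claim');
  }
  return new Date(payload.exp * 1000);
}

// True if the token expires within marginMs milliseconds of `now` (default: 5 minutes).
function isTokenExpiring(token, now = new Date(), marginMs = 5 * 60 * 1000) {
  return getTokenExpiry(token).getTime() - now.getTime() <= marginMs;
}
```

For example, the Initialize Call Agent click handler shown later in this quickstart could warn the user when isTokenExpiring(userAccessToken.value) returns true, before it creates the AzureCommunicationTokenCredential.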

Setting up

Create a new Node.js application

Open your terminal or command window, create a new directory for your app, and navigate to it.

mkdir calling-quickstart && cd calling-quickstart

Run npm init -y to create a package.json file with default settings.

npm init -y

Install the package

Use the npm install command to install the Azure Communication Services Common and Calling SDKs for JavaScript.

npm install @azure/communication-common --save

npm install @azure/communication-calling --save

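Set up the app framework

This quickstart uses webpack to bundle the application assets. Run the following command to install the copy-webpack-plugin, webpack, webpack-cli, and webpack-dev-server npm packages and list them as development dependencies in your package.json: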

npm install copy-webpack-plugin@^11.0.0 webpack@^5.88.2 webpack-cli@^5.1.4 webpack-dev-server@^4.15.1 --save-dev

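Here's the code:

Create an index.html file in the root directory of your project. You'll use this file to configure a basic layout that allows the user to place a 1:1 video call.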

<!-- index.html -->

<!DOCTYPE html>

<html>

<head>

<title>Azure Communication Services - Calling Web SDK</title>

<link rel="stylesheet" type="text/css" href="styles.css"/>

</head>

<body>

<h4>Azure Communication Services - Calling Web SDK</h4>

<input id="user-access-token"

type="text"

placeholder="User access token"

style="margin-bottom:1em; width: 500px;"/>

<button id="initialize-call-agent" type="button">Initialize Call Agent</button>

<br>

<br>

<input id="callee-acs-user-id"

type="text"

placeholder="Enter callee's Azure Communication Services user identity in format: '8:acs:resourceId_userId'"

style="margin-bottom:1em; width: 500px; display: block;"/>

<button id="start-call-button" type="button" disabled="true">Start Call</button>

<button id="hangup-call-button" type="button" disabled="true">Hang up Call</button>

<button id="accept-call-button" type="button" disabled="true">Accept Call</button>

<button id="start-video-button" type="button" disabled="true">Start Video</button>

<button id="stop-video-button" type="button" disabled="true">Stop Video</button>

<br>

<br>

<div id="connectedLabel" style="color: #13bb13;" hidden>Call is connected!</div>

<br>

<div id="remoteVideosGallery" style="width: 40%;" hidden>Remote participants' video streams:</div>

<br>

<div id="localVideoContainer" style="width: 30%;" hidden>Local video stream:</div>

<!-- points to the bundle generated from index.js -->

<script src="./main.js"></script>

</body>

</html>
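The callee input's placeholder asks for an identity in the form 8:acs:resourceId_userId. A lightweight client-side check can catch an obviously malformed value before it reaches callAgent.startCall. normalizeCalleeId below is a hypothetical helper: it only checks the '8:acs:' prefix shown in the placeholder and that something follows it, because the exact structure of the resource and user segments is an assumption here. The service remains the authority on whether an identity is valid; loosen the check if your identities use a different form.

```javascript
// Hypothetical pre-flight check for the callee input. It only enforces the
// "8:acs:" prefix shown in the placeholder; it does not prove the ID exists.
const ACS_USER_PREFIX = '8:acs:';

// Returns the trimmed ID, or null if the value is not shaped like an ACS user ID.
function normalizeCalleeId(rawValue) {
  const value = (rawValue ?? '').trim();
  if (!value.startsWith(ACS_USER_PREFIX) || value.length === ACS_USER_PREFIX.length) {
    return null;
  }
  return value;
}
```

In index.js, the Start Call click handler could pass calleeAcsUserId.value through normalizeCalleeId and skip startCall (showing a message instead) when the result is null.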

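The following classes and interfaces handle some of the major features of the Azure Communication Services Calling SDK:

| Name | Description |
|---|---|
| CallClient | The main entry point to the Calling SDK. |
| AzureCommunicationTokenCredential | Implements the CommunicationTokenCredential interface, which is used to instantiate callAgent. |
| CallAgent | Used to start and manage calls. |
| DeviceManager | Used to manage media devices. |
| Call | Used to represent a call. |
| LocalVideoStream | Used to create a local video stream for a camera device on the local system. |
| RemoteParticipant | Used to represent a remote participant in the call. |
| RemoteVideoStream | Used to represent a remote video stream from a remote participant. |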

Create an index.js file in the root directory of your project to contain the application logic for this quickstart. Add the following code to index.js:

// Make sure to install the necessary dependencies

const { CallClient, VideoStreamRenderer, LocalVideoStream } = require('@azure/communication-calling');

const { AzureCommunicationTokenCredential } = require('@azure/communication-common');

const { AzureLogger, setLogLevel } = require("@azure/logger");

// Set the log level and output

setLogLevel('verbose');

AzureLogger.log = (...args) => {

console.log(...args);

};

// Calling web sdk objects

let callAgent;

let deviceManager;

let call;

let incomingCall;

let localVideoStream;

let localVideoStreamRenderer;

// UI widgets

let userAccessToken = document.getElementById('user-access-token');

let calleeAcsUserId = document.getElementById('callee-acs-user-id');

let initializeCallAgentButton = document.getElementById('initialize-call-agent');

let startCallButton = document.getElementById('start-call-button');

let hangUpCallButton = document.getElementById('hangup-call-button');

let acceptCallButton = document.getElementById('accept-call-button');

let startVideoButton = document.getElementById('start-video-button');

let stopVideoButton = document.getElementById('stop-video-button');

let connectedLabel = document.getElementById('connectedLabel');

let remoteVideosGallery = document.getElementById('remoteVideosGallery');

let localVideoContainer = document.getElementById('localVideoContainer');

/**

* Using the CallClient, initialize a CallAgent instance with an AzureCommunicationTokenCredential, which enables us to make outgoing calls and receive incoming calls.

* You can then use the CallClient.getDeviceManager() API to get the DeviceManager instance.

*/

initializeCallAgentButton.onclick = async () => {

try {

const callClient = new CallClient();

const tokenCredential = new AzureCommunicationTokenCredential(userAccessToken.value.trim());

callAgent = await callClient.createCallAgent(tokenCredential);

// Set up a camera device to use.

deviceManager = await callClient.getDeviceManager();

await deviceManager.askDevicePermission({ video: true });

await deviceManager.askDevicePermission({ audio: true });

// Listen for an incoming call to accept.

callAgent.on('incomingCall', async (args) => {

try {

incomingCall = args.incomingCall;

acceptCallButton.disabled = false;

startCallButton.disabled = true;

} catch (error) {

console.error(error);

}

});

startCallButton.disabled = false;

initializeCallAgentButton.disabled = true;

} catch(error) {

console.error(error);

}

}

/**

* Place a 1:1 outgoing video call to a user

* Add an event listener to initiate a call when the `startCallButton` is clicked:

* First you have to enumerate local cameras using the deviceManager `getCameras` API.

* In this quickstart we're using the first camera in the collection. Once the desired camera is selected, a

* LocalVideoStream instance will be constructed and passed within `videoOptions` as an item within the

* localVideoStream array to the call method. Once your call connects it will automatically start sending a video stream to the other participant.

*/

startCallButton.onclick = async () => {

try {

const localVideoStream = await createLocalVideoStream();

const videoOptions = localVideoStream ? { localVideoStreams: [localVideoStream] } : undefined;

call = callAgent.startCall([{ communicationUserId: calleeAcsUserId.value.trim() }], { videoOptions });

// Subscribe to the call's properties and events.

subscribeToCall(call);

} catch (error) {

console.error(error);

}

}

/**

* Accepting an incoming call with video

* Add an event listener to accept a call when the `acceptCallButton` is clicked:

* After subscribing to the `CallAgent.on('incomingCall')` event, you can accept the incoming call.

* You can pass the local video stream which you want to use to accept the call with.

*/

acceptCallButton.onclick = async () => {

try {

const localVideoStream = await createLocalVideoStream();

const videoOptions = localVideoStream ? { localVideoStreams: [localVideoStream] } : undefined;

call = await incomingCall.accept({ videoOptions });

// Subscribe to the call's properties and events.

subscribeToCall(call);

} catch (error) {

console.error(error);

}

}

/**

* Subscribe to a call obj.

* Listen for property changes and collection updates.

*/

const subscribeToCall = (call) => {

try {

// Inspect the initial call.id value.

console.log(`Call Id: ${call.id}`);

//Subscribe to call's 'idChanged' event for value changes.

call.on('idChanged', () => {

console.log(`Call Id changed: ${call.id}`);

});

// Inspect the initial call.state value.

console.log(`Call state: ${call.state}`);

// Subscribe to call's 'stateChanged' event for value changes.

call.on('stateChanged', async () => {

console.log(`Call state changed: ${call.state}`);

if(call.state === 'Connected') {

connectedLabel.hidden = false;

acceptCallButton.disabled = true;

startCallButton.disabled = true;

hangUpCallButton.disabled = false;

startVideoButton.disabled = false;

stopVideoButton.disabled = false;

remoteVideosGallery.hidden = false;

} else if (call.state === 'Disconnected') {

connectedLabel.hidden = true;

startCallButton.disabled = false;

hangUpCallButton.disabled = true;

startVideoButton.disabled = true;

stopVideoButton.disabled = true;

console.log(`Call ended, call end reason={code=${call.callEndReason.code}, subCode=${call.callEndReason.subCode}}`);

}

});

call.on('isLocalVideoStartedChanged', () => {

console.log(`isLocalVideoStarted changed: ${call.isLocalVideoStarted}`);

});

console.log(`isLocalVideoStarted: ${call.isLocalVideoStarted}`);

call.localVideoStreams.forEach(async (lvs) => {

localVideoStream = lvs;

await displayLocalVideoStream();

});

call.on('localVideoStreamsUpdated', e => {

e.added.forEach(async (lvs) => {

localVideoStream = lvs;

await displayLocalVideoStream();

});

e.removed.forEach(lvs => {

removeLocalVideoStream();

});

});

// Inspect the call's current remote participants and subscribe to them.

call.remoteParticipants.forEach(remoteParticipant => {

subscribeToRemoteParticipant(remoteParticipant);

});

// Subscribe to the call's 'remoteParticipantsUpdated' event to be

// notified when new participants are added to the call or removed from the call.

call.on('remoteParticipantsUpdated', e => {

// Subscribe to new remote participants that are added to the call.

e.added.forEach(remoteParticipant => {

subscribeToRemoteParticipant(remoteParticipant)

});

// Unsubscribe from participants that are removed from the call

e.removed.forEach(remoteParticipant => {

console.log('Remote participant removed from the call.');

});

});

} catch (error) {

console.error(error);

}

}

/**

* Subscribe to a remote participant obj.

* Listen for property changes and collection updates.

*/

const subscribeToRemoteParticipant = (remoteParticipant) => {

try {

// Inspect the initial remoteParticipant.state value.

console.log(`Remote participant state: ${remoteParticipant.state}`);

// Subscribe to remoteParticipant's 'stateChanged' event for value changes.

remoteParticipant.on('stateChanged', () => {

console.log(`Remote participant state changed: ${remoteParticipant.state}`);

});

// Inspect the remoteParticipant's current videoStreams and subscribe to them.

remoteParticipant.videoStreams.forEach(remoteVideoStream => {

subscribeToRemoteVideoStream(remoteVideoStream)

});

// Subscribe to the remoteParticipant's 'videoStreamsUpdated' event to be

// notified when the remoteParticipant adds new videoStreams and removes video streams.

remoteParticipant.on('videoStreamsUpdated', e => {

// Subscribe to new remote participant's video streams that were added.

e.added.forEach(remoteVideoStream => {

subscribeToRemoteVideoStream(remoteVideoStream)

});

// Unsubscribe from remote participant's video streams that were removed.

e.removed.forEach(remoteVideoStream => {

console.log('Remote participant video stream was removed.');

})

});

} catch (error) {

console.error(error);

}

}

/**

* Subscribe to a remote participant's remote video stream obj.

* You have to subscribe to the 'isAvailableChanged' event to render the remoteVideoStream. If the 'isAvailable' property

* changes to 'true', a remote participant is sending a stream. Whenever availability of a remote stream changes

* you can choose to destroy the whole 'Renderer', a specific 'RendererView' or keep them, but this will result in displaying blank video frame.

*/

const subscribeToRemoteVideoStream = async (remoteVideoStream) => {

let renderer = new VideoStreamRenderer(remoteVideoStream);

let view;

let remoteVideoContainer = document.createElement('div');

remoteVideoContainer.className = 'remote-video-container';

let loadingSpinner = document.createElement('div');

loadingSpinner.className = 'loading-spinner';

remoteVideoStream.on('isReceivingChanged', () => {

try {

if (remoteVideoStream.isAvailable) {

const isReceiving = remoteVideoStream.isReceiving;

const isLoadingSpinnerActive = remoteVideoContainer.contains(loadingSpinner);

if (!isReceiving && !isLoadingSpinnerActive) {

remoteVideoContainer.appendChild(loadingSpinner);

} else if (isReceiving && isLoadingSpinnerActive) {

remoteVideoContainer.removeChild(loadingSpinner);

}

}

} catch (e) {

console.error(e);

}

});

const createView = async () => {

// Create a renderer view for the remote video stream.

view = await renderer.createView();

// Attach the renderer view to the UI.

remoteVideoContainer.appendChild(view.target);

remoteVideosGallery.appendChild(remoteVideoContainer);

}

// Remote participant has switched video on/off

remoteVideoStream.on('isAvailableChanged', async () => {

try {

if (remoteVideoStream.isAvailable) {

await createView();

} else {

view.dispose();

remoteVideosGallery.removeChild(remoteVideoContainer);

}

} catch (e) {

console.error(e);

}

});

// Remote participant has video on initially.

if (remoteVideoStream.isAvailable) {

try {

await createView();

} catch (e) {

console.error(e);

}

}

}

/**

* Start your local video stream.

* This will send your local video stream to remote participants so they can view it.

*/

startVideoButton.onclick = async () => {

try {

const localVideoStream = await createLocalVideoStream();

await call.startVideo(localVideoStream);

} catch (error) {

console.error(error);

}

}

/**

* Stop your local video stream.

* This will stop your local video stream from being sent to remote participants.

*/

stopVideoButton.onclick = async () => {

try {

await call.stopVideo(localVideoStream);

} catch (error) {

console.error(error);

}

}

/**

* Create a LocalVideoStream from the first camera that the DeviceManager reports.

* Returns undefined (and logs an error) if no camera is found on the system.

*/

const createLocalVideoStream = async () => {

const camera = (await deviceManager.getCameras())[0];

if (camera) {

return new LocalVideoStream(camera);

} else {

console.error(`No camera device found on the system`);

}

}

/**

* Display your local video stream preview in your UI. To render a LocalVideoStream, create a

* VideoStreamRenderer, create a view with its asynchronous createView() method, and then attach

* view.target to a UI element.

*/

const displayLocalVideoStream = async () => {

try {

localVideoStreamRenderer = new VideoStreamRenderer(localVideoStream);

const view = await localVideoStreamRenderer.createView();

localVideoContainer.hidden = false;

localVideoContainer.appendChild(view.target);

} catch (error) {

console.error(error);

}

}

/**

* Remove your local video stream preview from your UI

*/

const removeLocalVideoStream = async () => {

try {

localVideoStreamRenderer.dispose();

localVideoContainer.hidden = true;

} catch (error) {

console.error(error);

}

}

/**

* End current call

*/

hangUpCallButton.addEventListener("click", async () => {

// end the current call

await call.hangUp();

});
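The 'stateChanged' handler in index.js sets several button and label flags in each branch. If you extend the sample, keeping those flags in one place can make the UI easier to follow. The sketch below is a hypothetical refactoring, not part of the SDK: uiStateForCall maps the 'Connected' and 'Disconnected' states that the handler checks to the same flags it sets, and returns null for any other state, which leaves the UI unchanged just as the handler does. applyUiState is likewise hypothetical; it looks elements up by the IDs used in index.html.

```javascript
// Hypothetical helper: the UI flags that the 'stateChanged' handler in index.js
// applies for each call state it reacts to. Keys are element IDs from index.html.
// Returns null for other states, meaning "leave the UI as it is".
function uiStateForCall(callState) {
  switch (callState) {
    case 'Connected':
      return {
        hidden: { connectedLabel: false, remoteVideosGallery: false },
        disabled: {
          'accept-call-button': true,
          'start-call-button': true,
          'hangup-call-button': false,
          'start-video-button': false,
          'stop-video-button': false,
        },
      };
    case 'Disconnected':
      return {
        hidden: { connectedLabel: true },
        disabled: {
          'start-call-button': false,
          'hangup-call-button': true,
          'start-video-button': true,
          'stop-video-button': true,
        },
      };
    default:
      return null;
  }
}

// Applies a UI state to the page; `doc` defaults to the browser's document.
function applyUiState(state, doc = globalThis.document) {
  if (!state) return;
  for (const [id, isHidden] of Object.entries(state.hidden)) {
    doc.getElementById(id).hidden = isHidden;
  }
  for (const [id, isDisabled] of Object.entries(state.disabled)) {
    doc.getElementById(id).disabled = isDisabled;
  }
}
```

Inside the handler, the if/else branches would then reduce to applyUiState(uiStateForCall(call.state)), with the end-reason logging kept for the 'Disconnected' case.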

Create a styles.css file in the root directory of your project to contain the application styles for this quickstart. Add the following code to styles.css:

/**

* CSS for styling the loading spinner over the remote video stream

*/

.remote-video-container {

position: relative;

}

.loading-spinner {

border: 12px solid #f3f3f3;

border-radius: 50%;

border-top: 12px solid #ca5010;

width: 100px;

height: 100px;

-webkit-animation: spin 2s linear infinite; /* Safari */

animation: spin 2s linear infinite;

position: absolute;

margin: auto;

top: 0;

bottom: 0;

left: 0;

right: 0;

transform: translate(-50%, -50%);

}

@keyframes spin {

0% { transform: rotate(0deg); }

100% { transform: rotate(360deg); }

}

/* Safari */

@-webkit-keyframes spin {

0% { -webkit-transform: rotate(0deg); }

100% { -webkit-transform: rotate(360deg); }

}
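The .loading-spinner styles above are used by the 'isReceivingChanged' handler in index.js: while a remote stream is available but no frames are arriving, the handler appends the spinner, and once frames arrive it removes it. That decision can be isolated as a pure function, which makes it easy to test. spinnerAction is a hypothetical name; its inputs mirror remoteVideoStream.isAvailable, remoteVideoStream.isReceiving, and whether the container currently contains the spinner.

```javascript
// Hypothetical pure version of the decision made in the 'isReceivingChanged'
// handler. Returns 'show', 'hide', or 'none' (leave the DOM unchanged).
function spinnerAction({ isAvailable, isReceiving, spinnerShown }) {
  if (!isAvailable) return 'none'; // The handler does nothing while video is off.
  if (!isReceiving && !spinnerShown) return 'show'; // Available but no frames yet.
  if (isReceiving && spinnerShown) return 'hide'; // Frames arrived; drop the spinner.
  return 'none'; // Already in the right state.
}
```

In the handler, 'show' corresponds to remoteVideoContainer.appendChild(loadingSpinner) and 'hide' to remoteVideoContainer.removeChild(loadingSpinner).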

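Add the webpack local server code

Create a webpack.config.js file in the root directory of your project to contain the local server logic for this quickstart. Add the following code to webpack.config.js: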

const path = require('path');

const CopyPlugin = require("copy-webpack-plugin");

module.exports = {

mode: 'development',

entry: './index.js',

output: {

filename: 'main.js',

path: path.resolve(__dirname, 'dist'),

},

devServer: {

static: {

directory: path.join(__dirname, './')

},

},

plugins: [

new CopyPlugin({

patterns: [

'./index.html'

]

}),

]

};

Run the code

Use webpack-dev-server to build and run your app. Run the following command to bundle the application host on a local web server:

npx webpack serve --config webpack.config.js

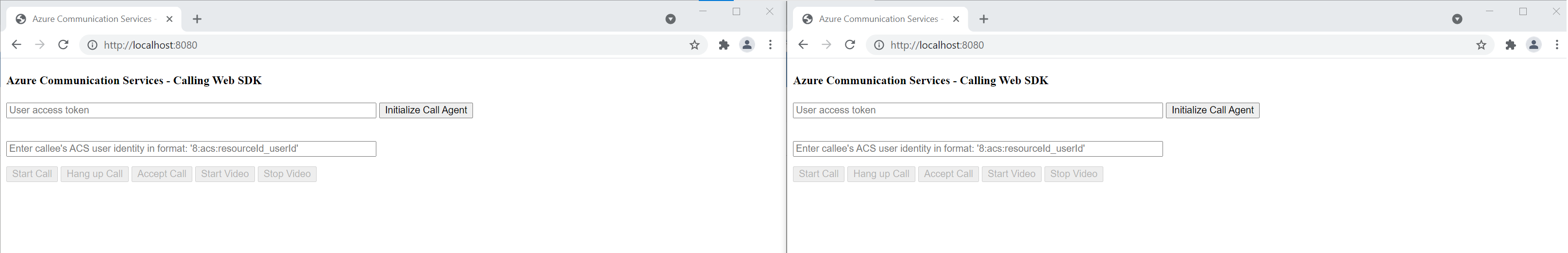

Open your browser and navigate to http://localhost:8080/ in two tabs. You should see the following screen:

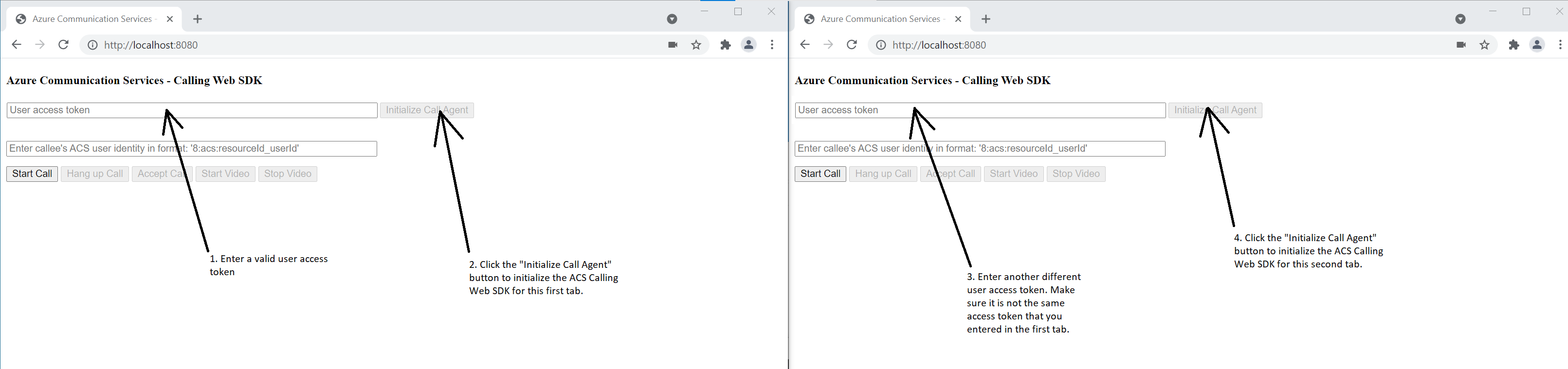

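In the first tab, enter a valid user access token. In the second tab, enter a different valid user access token.

If you don't already have tokens to use, see the user access tokens documentation.

In both tabs, select the Initialize Call Agent button. You should see the following screen: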

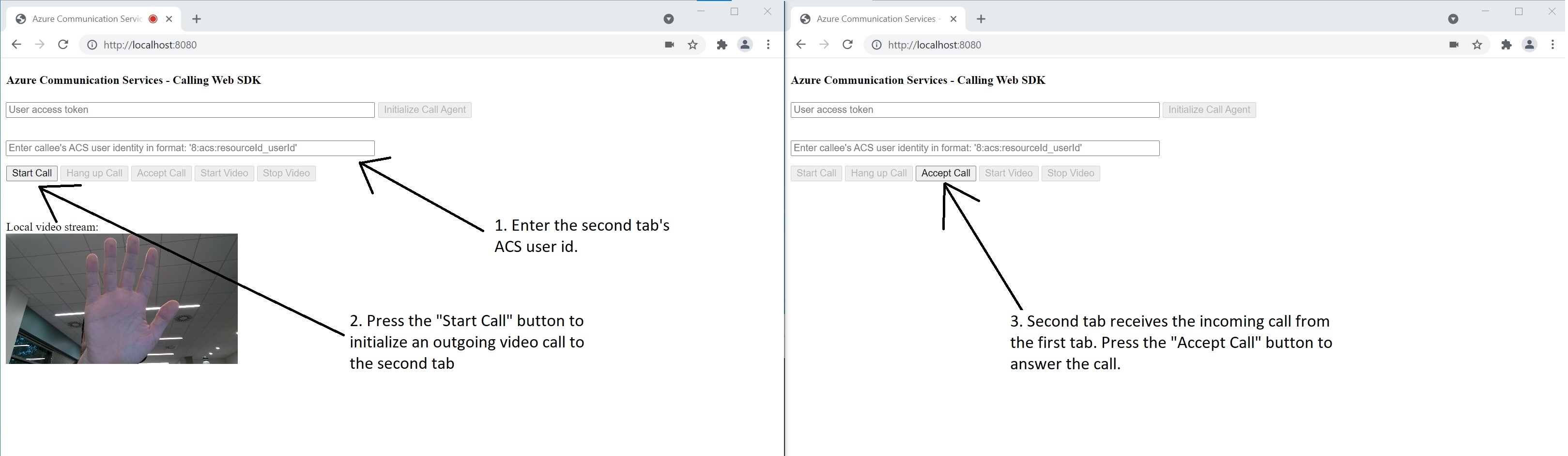

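In the first tab, enter the Azure Communication Services user identity of the second tab, and select the Start Call button. The first tab starts an outgoing call to the second tab, and the second tab's Accept Call button becomes enabled: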

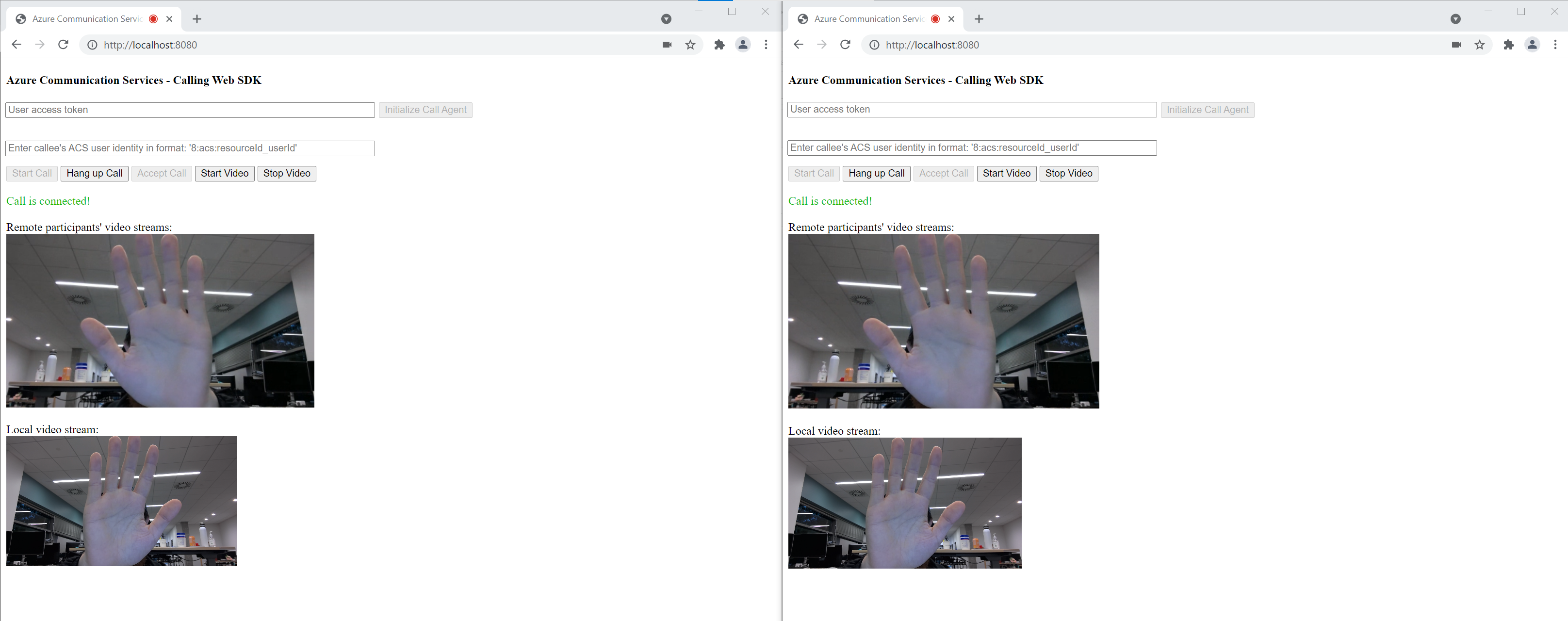

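From the second tab, select the Accept Call button. The call starts and connects. You should see the following screen:

Both tabs are now in a 1:1 video call. Each tab can hear the other's audio and see the other's video stream.

Get started with Azure Communication Services by using the Communication Services calling client library to add video calling to your app. Learn how to include 1:1 video calling, and how to create or join group calls. Additionally, you can start, answer, and join a video call by using the Azure Communication Services Calling SDK for Android.

If you'd like to get started with the sample code, you can download the sample app.

Prerequisites

An Azure account with an active subscription. Create an account for free.

Android Studio, for creating your Android application.

A deployed Communication Services resource. Create a Communication Services resource. You need to record your connection string for this quickstart.

A user access token for your Azure Communication Services resource. You can also use the Azure CLI and run the following command with your connection string to create a user and an access token.

az communication identity token issue --scope voip --connection-string "yourConnectionString"

For more information, see Use Azure CLI to create and manage access tokens.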

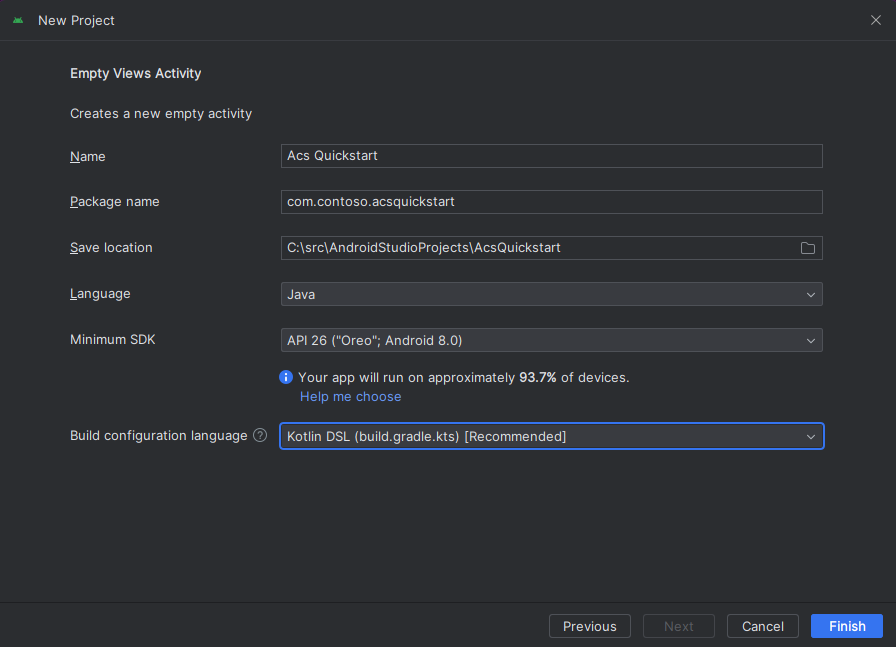

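Create an Android app with an empty activity

From Android Studio, select Start a new Android Studio project.

![Screenshot showing the Start a new Android Studio Project button selected in Android Studio.](media/android/studio-new-project.png)

Under Phone and Tablet, select the Empty Activity project template.

![Screenshot showing the Empty Activity option selected in the Project Template screen.](media/android/studio-blank-activity.png)

For Minimum SDK, select API 26: Android 8.0 (Oreo) or later. See SDK support versions.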

Install the package

Locate your project-level build.gradle, and add mavenCentral() to the list of repositories under buildscript and allprojects:

buildscript {

repositories {

...

mavenCentral()

...

}

}

allprojects {

repositories {

...

mavenCentral()

...

}

}

Then, in your module-level build.gradle, add the following lines to the dependencies and android sections:

android {

...

packagingOptions {

pickFirst 'META-INF/*'

}

compileOptions {

sourceCompatibility JavaVersion.VERSION_1_8

targetCompatibility JavaVersion.VERSION_1_8

}

}

dependencies {

...

implementation 'com.azure.android:azure-communication-calling:2.0.0'

...

}

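Add permissions to the application manifest

To request the permissions needed to make a call, you must first declare them in the application manifest (app/src/main/AndroidManifest.xml). Replace the content of the file with the following code: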

<?xml version="1.0" encoding="utf-8"?>

<manifest xmlns:android="http://schemas.android.com/apk/res/android"

package="com.contoso.acsquickstart">

<uses-permission android:name="android.permission.INTERNET" />

<uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />

<uses-permission android:name="android.permission.ACCESS_WIFI_STATE" />

<uses-permission android:name="android.permission.RECORD_AUDIO" />

<uses-permission android:name="android.permission.CAMERA" />

<application

android:allowBackup="true"

android:icon="@mipmap/ic_launcher"

android:label="@string/app_name"

android:roundIcon="@mipmap/ic_launcher_round"

android:supportsRtl="true"

android:theme="@style/AppTheme">

<!--Our Calling SDK depends on the Apache HTTP SDK.

When targeting Android SDK 28+, this library needs to be explicitly referenced.

See https://developer.android.com/about/versions/pie/android-9.0-changes-28#apache-p-->

<uses-library android:name="org.apache.http.legacy" android:required="false"/>

<activity android:name=".MainActivity">

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

</intent-filter>

</activity>

</application>

</manifest>

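Set up the layout for the app

You need a text input for the callee ID or group call ID, a button for placing the call, and another button for hanging up the call.

You also need two buttons to turn the local video on and off, and two containers for the local and remote video streams. You can add these through the designer or by editing the layout XML.

Go to app/src/main/res/layout/activity_main.xml, and replace the content of the file with the following code: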

<?xml version="1.0" encoding="utf-8"?>

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".MainActivity">

<LinearLayout

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:orientation="vertical">

<EditText

android:id="@+id/call_id"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:ems="10"

android:gravity="center"

android:hint="Callee ID"

android:inputType="textPersonName"

app:layout_constraintBottom_toTopOf="@+id/call_button"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent"

app:layout_constraintTop_toTopOf="parent"

app:layout_constraintVertical_bias="0.064" />

<LinearLayout

android:layout_width="match_parent"

android:layout_height="wrap_content">

<Button

android:id="@+id/call_button"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginBottom="16dp"

android:gravity="center"

android:text="Call"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent" />

<Button

android:id="@+id/show_preview"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginBottom="16dp"

android:gravity="center"

android:text="Show Video"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent" />

<Button

android:id="@+id/hide_preview"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginBottom="16dp"

android:gravity="center"

android:text="Hide Video"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent" />

<Button

android:id="@+id/hang_up"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginBottom="16dp"

android:gravity="center"

android:text="Hang Up"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent" />

</LinearLayout>

<ScrollView

android:layout_width="match_parent"

android:layout_height="wrap_content">

<GridLayout

android:id="@+id/remotevideocontainer"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:columnCount="2"

android:rowCount="2"

android:padding="10dp"></GridLayout>

</ScrollView>

</LinearLayout>

<FrameLayout

android:layout_width="match_parent"

android:layout_height="match_parent">

<LinearLayout

android:id="@+id/localvideocontainer"

android:layout_width="180dp"

android:layout_height="300dp"

android:layout_gravity="right|bottom"

android:orientation="vertical"

android:padding="10dp">

<Button

android:id="@+id/switch_source"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:gravity="center"

android:text="Switch Source"

android:visibility="invisible" />

</LinearLayout>

</FrameLayout>

</androidx.constraintlayout.widget.ConstraintLayout>

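Create the main activity scaffolding and bindings

With the layout created, you can add the bindings and the basic scaffolding of the activity. The activity handles requesting runtime permissions, creating the call agent, and placing the call when the button is pressed.

The onCreate method is overridden to invoke getAllPermissions, createAgent, and handleIncomingCall, and to add the bindings for the buttons. This event occurs only once, when the activity is created. For more information on onCreate, see the guide Understand the Activity Lifecycle.

Go to the MainActivity.java file, and replace the content with the following code. If your app's package name differs from the one on the first line, change the package declaration to match it.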

package com.example.videocallingquickstart;

import androidx.appcompat.app.AppCompatActivity;

import androidx.core.app.ActivityCompat;

import android.Manifest;

import android.content.pm.PackageManager;

import android.media.AudioManager;

import android.os.Bundle;

import android.util.DisplayMetrics;

import android.util.Log;

import android.view.View;

import android.widget.Button;

import android.widget.EditText;

import android.widget.GridLayout;

import android.widget.Toast;

import android.widget.LinearLayout;

import android.content.Context;

import com.azure.android.communication.calling.CallState;

import com.azure.android.communication.calling.CallingCommunicationException;

import com.azure.android.communication.calling.ParticipantsUpdatedListener;

import com.azure.android.communication.calling.PropertyChangedEvent;

import com.azure.android.communication.calling.PropertyChangedListener;

import com.azure.android.communication.calling.StartCallOptions;

import com.azure.android.communication.calling.VideoDeviceInfo;

import com.azure.android.communication.common.CommunicationIdentifier;

import com.azure.android.communication.common.CommunicationTokenCredential;

import com.azure.android.communication.calling.CallAgent;

import com.azure.android.communication.calling.CallClient;

import com.azure.android.communication.calling.DeviceManager;

import com.azure.android.communication.calling.VideoOptions;

import com.azure.android.communication.calling.LocalVideoStream;

import com.azure.android.communication.calling.VideoStreamRenderer;

import com.azure.android.communication.calling.VideoStreamRendererView;

import com.azure.android.communication.calling.CreateViewOptions;

import com.azure.android.communication.calling.ScalingMode;

import com.azure.android.communication.calling.IncomingCall;

import com.azure.android.communication.calling.Call;

import com.azure.android.communication.calling.AcceptCallOptions;

import com.azure.android.communication.calling.ParticipantsUpdatedEvent;

import com.azure.android.communication.calling.RemoteParticipant;

import com.azure.android.communication.calling.RemoteVideoStream;

import com.azure.android.communication.calling.RemoteVideoStreamsEvent;

import com.azure.android.communication.calling.RendererListener;

import com.azure.android.communication.common.CommunicationUserIdentifier;

import com.azure.android.communication.common.MicrosoftTeamsUserIdentifier;

import com.azure.android.communication.common.PhoneNumberIdentifier;

import com.azure.android.communication.common.UnknownIdentifier;

import java.util.ArrayList;

import java.util.HashSet;

import java.util.List;

import java.util.HashMap;

import java.util.Map;

import java.util.concurrent.ExecutionException;

import java.util.concurrent.Executors;

public class MainActivity extends AppCompatActivity {

private CallAgent callAgent;

private VideoDeviceInfo currentCamera;

private LocalVideoStream currentVideoStream;

private DeviceManager deviceManager;

private IncomingCall incomingCall;

private Call call;

VideoStreamRenderer previewRenderer;

VideoStreamRendererView preview;

final Map<Integer, StreamData> streamData = new HashMap<>();

private boolean renderRemoteVideo = true;

private ParticipantsUpdatedListener remoteParticipantUpdatedListener;

private PropertyChangedListener onStateChangedListener;

final HashSet<String> joinedParticipants = new HashSet<>();

Button switchSourceButton;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

getAllPermissions();

createAgent();

handleIncomingCall();

Button callButton = findViewById(R.id.call_button);

callButton.setOnClickListener(l -> startCall());

Button hangupButton = findViewById(R.id.hang_up);

hangupButton.setOnClickListener(l -> hangUp());

Button startVideo = findViewById(R.id.show_preview);

startVideo.setOnClickListener(l -> turnOnLocalVideo());

Button stopVideo = findViewById(R.id.hide_preview);

stopVideo.setOnClickListener(l -> turnOffLocalVideo());

switchSourceButton = findViewById(R.id.switch_source);

switchSourceButton.setOnClickListener(l -> switchSource());

setVolumeControlStream(AudioManager.STREAM_VOICE_CALL);

}

/**

* Request each required permission if the app doesn't already have it.

*/

private void getAllPermissions() {

// See section on requesting permissions

}

/**

* Create the call agent for placing calls

*/

private void createAgent() {

// See section on creating the call agent

}

/**

* Handle incoming calls

*/

private void handleIncomingCall() {

// See section on answering incoming call

}

/**

* Place a call to the callee ID provided in the `call_id` text input.

*/

private void startCall() {

// See section on starting the call

}

/**

* End calls

*/

private void hangUp() {

// See section on ending the call

}

/**

* Mid-call operations

*/

public void turnOnLocalVideo() {

// See section

}

public void turnOffLocalVideo() {

// See section

}

/**

* Change the active camera to the next available one.

*/

public void switchSource() {

// See section

}

}

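Request permissions at runtime

For Android 6.0 and later (API level 23) with a targetSdkVersion of 23 or later, permissions are granted at runtime instead of when the app is installed. To support this, getAllPermissions calls ActivityCompat.checkSelfPermission for each required permission, and then calls ActivityCompat.requestPermissions for any that haven't been granted yet. Each permission requested this way must also be declared in the manifest; otherwise, Android denies the request.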

/**

* Request each required permission if the app doesn't already have it.

*/

private void getAllPermissions() {

String[] requiredPermissions = new String[]{Manifest.permission.RECORD_AUDIO, Manifest.permission.CAMERA, Manifest.permission.WRITE_EXTERNAL_STORAGE, Manifest.permission.READ_PHONE_STATE};

ArrayList<String> permissionsToAskFor = new ArrayList<>();

for (String permission : requiredPermissions) {

if (ActivityCompat.checkSelfPermission(this, permission) != PackageManager.PERMISSION_GRANTED) {

permissionsToAskFor.add(permission);

}

}

if (!permissionsToAskFor.isEmpty()) {

ActivityCompat.requestPermissions(this, permissionsToAskFor.toArray(new String[0]), 1);

}

}
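Android grants a runtime permission only if the app also declares it with a uses-permission element in the manifest; a request for an undeclared permission is denied without prompting the user. The requiredPermissions array above includes WRITE_EXTERNAL_STORAGE and READ_PHONE_STATE, which the AndroidManifest.xml earlier in this quickstart doesn't declare, so either declare them as well or remove them from the array, depending on whether your app needs them. The Node.js sketch below is a hypothetical build-time check for this kind of mismatch. Its regular expression assumes each element begins with <uses-permission android:name="...", as in this quickstart's manifest; it is not a general XML parser.

```javascript
// Hypothetical helper: list permissions that code requests at runtime but that
// the manifest XML does not declare. Uses a simple regex, not a full XML parser.
function findUndeclaredPermissions(manifestXml, requestedPermissions) {
  const declared = new Set();
  const pattern = /<uses-permission\s+android:name="([^"]+)"/g;
  for (const match of manifestXml.matchAll(pattern)) {
    declared.add(match[1]);
  }
  // Keep the requested order so the report mirrors the Java array.
  return requestedPermissions.filter((name) => !declared.has(name));
}
```

Fed the manifest from this quickstart and the four names in requiredPermissions (Manifest.permission constants resolve to strings such as "android.permission.CAMERA"), it reports WRITE_EXTERNAL_STORAGE and READ_PHONE_STATE.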

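Note

When you design your app, consider when these permissions should be requested. Permissions should be requested as they're needed, not ahead of time. For more information, see the Android permissions guide.

Object model

The following classes and interfaces handle some of the major features of the Azure Communication Services Calling SDK:

| Name | Description |
|---|---|
| CallClient | The main entry point to the Calling SDK. |
| CallAgent | Used to start and manage calls. |
| CommunicationTokenCredential | Used as the token credential to instantiate the CallAgent. |
| CommunicationIdentifier | Represents the different types of participants that can be part of a call. |

Create an agent from the user access token

You need a user token to create an authenticated call agent. Generally, this token is generated from a service with authentication specific to the application. For more information on user access tokens, see User access tokens.

For the quickstart, replace <USER_ACCESS_TOKEN> with a user access token generated for your Azure Communication Services resource.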

/**

* Create the call agent for placing calls

*/

private void createAgent() {

Context context = this.getApplicationContext();

String userToken = "<USER_ACCESS_TOKEN>";

try {

CommunicationTokenCredential credential = new CommunicationTokenCredential(userToken);

CallClient callClient = new CallClient();

deviceManager = callClient.getDeviceManager(context).get();

callAgent = callClient.createCallAgent(getApplicationContext(), credential).get();

} catch (Exception ex) {

Toast.makeText(context, "Failed to create call agent.", Toast.LENGTH_SHORT).show();

}

}

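Start a video call by using the call agent

You can place a call by using the call agent. All you need to provide is a list of callee IDs and the call options.

To place a video call, enumerate the local cameras by using the deviceManager.getCameras API. After you select the desired camera, use it to construct a LocalVideoStream instance. Then pass that stream, as an item in a LocalVideoStream array, to the VideoOptions constructor, set the resulting videoOptions on the StartCallOptions, and pass those options to the startCall method. Once the call connects, it automatically starts sending a video stream from the selected camera to the other participant.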

private void startCall() {

Context context = this.getApplicationContext();

EditText callIdView = findViewById(R.id.call_id);

String callId = callIdView.getText().toString();

ArrayList<CommunicationIdentifier> participants = new ArrayList<CommunicationIdentifier>();

List<VideoDeviceInfo> cameras = deviceManager.getCameras();

StartCallOptions options = new StartCallOptions();

if(!cameras.isEmpty()) {

currentCamera = getNextAvailableCamera(null);

currentVideoStream = new LocalVideoStream(currentCamera, context);

LocalVideoStream[] videoStreams = new LocalVideoStream[1];

videoStreams[0] = currentVideoStream;

VideoOptions videoOptions = new VideoOptions(videoStreams);

options.setVideoOptions(videoOptions);

showPreview(currentVideoStream);

}

participants.add(new CommunicationUserIdentifier(callId));

call = callAgent.startCall(

context,

participants,

options);

//Subscribe to events on updates of call state and remote participants

remoteParticipantUpdatedListener = this::handleRemoteParticipantsUpdate;

onStateChangedListener = this::handleCallOnStateChanged;

call.addOnRemoteParticipantsUpdatedListener(remoteParticipantUpdatedListener);

call.addOnStateChangedListener(onStateChangedListener);

}

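In this quickstart, you rely on the getNextAvailableCamera function to pick the camera that the call uses. The function takes the current camera as input and iterates through the list returned by deviceManager.getCameras to get the next available camera. If the argument is null, the function picks the first device in the list. If no camera is available when you select [Start Call], an audio call starts instead. However, if the remote participant answers with video, you can still see the remote video stream.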

private VideoDeviceInfo getNextAvailableCamera(VideoDeviceInfo camera) {

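List<VideoDeviceInfo> cameras = deviceManager.getCameras();

// No camera available: return null so the caller can fall back to audio only.

// This also avoids a division by zero in the modulo below.

if (cameras.isEmpty()) {

return null;

}

int currentIndex = 0;

if (camera == null) {

return cameras.get(0);

}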

for (int i = 0; i < cameras.size(); i++) {

if (camera.getId().equals(cameras.get(i).getId())) {

currentIndex = i;

break;

}

}

int newIndex = (currentIndex + 1) % cameras.size();

return cameras.get(newIndex);

}
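The camera selection above is easier to test once it's separated from the SDK. The following plain-Java sketch is an illustration only and isn't SDK code. Cameras are modeled as ID strings, and CameraCycler.nextCamera is a hypothetical name. The function returns the first camera when the current one is null or no longer in the list, wraps around after the last camera, and returns null when there are no cameras.

```java
import java.util.List;

// Hypothetical, SDK-free version of getNextAvailableCamera; cameras are modeled as ID strings.
final class CameraCycler {
    private CameraCycler() {}

    // Returns the camera after `current`, wrapping around at the end of the list.
    // Returns the first camera if `current` is null or no longer listed, and null if the list is empty.
    static String nextCamera(List<String> cameras, String current) {
        if (cameras.isEmpty()) {
            return null; // no camera: the caller falls back to an audio-only call
        }
        int index = (current == null) ? -1 : cameras.indexOf(current);
        // index is -1 for a null or unknown camera, which maps to position 0.
        return cameras.get((index + 1) % cameras.size());
    }
}
```

With two cameras, calling nextCamera repeatedly with its own previous result alternates between them. This is the same behavior switchSource relies on.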

After you construct the LocalVideoStream instance, you can create a renderer to display it in the UI.

private void showPreview(LocalVideoStream stream) {

previewRenderer = new VideoStreamRenderer(stream, this);

LinearLayout layout = findViewById(R.id.localvideocontainer);

preview = previewRenderer.createView(new CreateViewOptions(ScalingMode.FIT));

preview.setTag(0);

runOnUiThread(() -> {

layout.addView(preview);

switchSourceButton.setVisibility(View.VISIBLE);

});

}

To let the user switch the local video source, use switchSource. This method picks the next available camera and makes it the source of the local stream.

public void switchSource() {

if (currentVideoStream != null) {

try {

currentCamera = getNextAvailableCamera(currentCamera);

currentVideoStream.switchSource(currentCamera).get();

} catch (InterruptedException e) {

e.printStackTrace();

} catch (ExecutionException e) {

e.printStackTrace();

}

}

}

Accept an incoming call

To receive incoming calls, subscribe with addOnIncomingCallListener on callAgent.

private void handleIncomingCall() {

callAgent.addOnIncomingCallListener((incomingCall) -> {

this.incomingCall = incomingCall;

Executors.newCachedThreadPool().submit(this::answerIncomingCall);

});

}
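Because accept(...).get() blocks until the accept operation completes, the listener above hands the work to a background thread instead of running it on the thread that delivers the event. The snippet creates a new cached thread pool for each incoming call. An alternative is to keep one executor for the activity and shut it down in onDestroy. The following plain-Java sketch shows that alternative. BlockingWork and runInBackground are hypothetical names, and the submitted lambda stands in for answerIncomingCall.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical holder for one background executor shared by blocking call operations.
final class BlockingWork {
    private BlockingWork() {}

    // A single worker thread also keeps two accept attempts from running at the same time.
    static final ExecutorService EXECUTOR = Executors.newSingleThreadExecutor();

    // Runs a blocking operation (for example, one that calls accept(...).get()) off the event thread.
    static <T> Future<T> runInBackground(Callable<T> blockingOperation) {
        return EXECUTOR.submit(blockingOperation);
    }

    // Call from onDestroy() so the worker thread doesn't outlive the activity.
    static void shutdown() {
        EXECUTOR.shutdown();
    }
}
```

With this helper, the listener body could become BlockingWork.EXECUTOR.submit(this::answerIncomingCall);. That line uses the Runnable overload of submit because answerIncomingCall returns void.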

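To accept a call with the video camera on, enumerate the local cameras by using the deviceManager.getCameras API. Pick a camera and construct a LocalVideoStream instance. Pass the stream into acceptCallOptions, and then call the accept method on the incomingCall object.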

private void answerIncomingCall() {

Context context = this.getApplicationContext();

if (incomingCall == null){

return;

}

AcceptCallOptions acceptCallOptions = new AcceptCallOptions();

List<VideoDeviceInfo> cameras = deviceManager.getCameras();

if(!cameras.isEmpty()) {

currentCamera = getNextAvailableCamera(null);

currentVideoStream = new LocalVideoStream(currentCamera, context);

LocalVideoStream[] videoStreams = new LocalVideoStream[1];

videoStreams[0] = currentVideoStream;

VideoOptions videoOptions = new VideoOptions(videoStreams);

acceptCallOptions.setVideoOptions(videoOptions);

showPreview(currentVideoStream);

}

try {

call = incomingCall.accept(context, acceptCallOptions).get();

} catch (InterruptedException | ExecutionException e) {

e.printStackTrace();

// Accepting failed, so there is no call object to subscribe to.

return;

}

//Subscribe to events on updates of call state and remote participants

remoteParticipantUpdatedListener = this::handleRemoteParticipantsUpdate;

onStateChangedListener = this::handleCallOnStateChanged;

call.addOnRemoteParticipantsUpdatedListener(remoteParticipantUpdatedListener);

call.addOnStateChangedListener(onStateChangedListener);

}

Remote participants and remote video streams

When you start a call or answer an incoming call, subscribe to the addOnRemoteParticipantsUpdatedListener event to handle remote participants.

remoteParticipantUpdatedListener = this::handleRemoteParticipantsUpdate;

call.addOnRemoteParticipantsUpdatedListener(remoteParticipantUpdatedListener);

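When you use event listeners defined within the same class, bind each listener to a variable. Pass that variable as the argument to both the add-listener and the remove-listener methods.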

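If you pass the listener expression directly as an argument instead, you lose the reference to that listener. Each evaluation of a lambda or method reference, such as this::handleRemoteParticipantsUpdate, can produce a new object rather than reuse the listener you added earlier. Without a reference to the original instance, you can't remove it.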
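You can demonstrate this effect without the SDK. In the following sketch, EventSource is a hypothetical stand-in for an SDK object such as Call. It keeps listeners in a list and removes them with List.remove, which compares by equals. Lambdas and method references don't override equals, so only the exact object that was added can be removed. That's why the listener is stored in a field first.

```java
import java.util.List;
import java.util.function.Consumer;

// Hypothetical event source standing in for an SDK object such as Call.
record EventSource<T>(List<Consumer<T>> listeners) {

    void addListener(Consumer<T> listener) {
        listeners.add(listener);
    }

    // List.remove(Object) uses equals(), which for lambdas is effectively identity,
    // so this succeeds only for the same instance that was added.
    boolean removeListener(Consumer<T> listener) {
        return listeners.remove(listener);
    }

    void fire(T event) {
        // Iterate over a copy so a listener can unsubscribe while handling an event.
        for (Consumer<T> listener : List.copyOf(listeners)) {
            listener.accept(event);
        }
    }
}
```

Here, the local variable listener plays the role of the remoteParticipantUpdatedListener field in the activity.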

Remote video stream updates

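For a 1:1 call, you need to handle added participants. When the remote participant is removed, the call ends. For added participants, subscribe to addOnVideoStreamsUpdatedListener to handle video stream updates.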

public void handleRemoteParticipantsUpdate(ParticipantsUpdatedEvent args) {

handleAddedParticipants(args.getAddedParticipants());

}

private void handleAddedParticipants(List<RemoteParticipant> participants) {

for (RemoteParticipant remoteParticipant : participants) {

if(!joinedParticipants.contains(getId(remoteParticipant))) {

joinedParticipants.add(getId(remoteParticipant));

if (renderRemoteVideo) {

for (RemoteVideoStream stream : remoteParticipant.getVideoStreams()) {

StreamData data = new StreamData(stream, null, null);

streamData.put(stream.getId(), data);

startRenderingVideo(data);

}

}

remoteParticipant.addOnVideoStreamsUpdatedListener(videoStreamsEventArgs -> videoStreamsUpdated(videoStreamsEventArgs));

}

}

}

private void videoStreamsUpdated(RemoteVideoStreamsEvent videoStreamsEventArgs) {

for(RemoteVideoStream stream : videoStreamsEventArgs.getAddedRemoteVideoStreams()) {

StreamData data = new StreamData(stream, null, null);

streamData.put(stream.getId(), data);

if (renderRemoteVideo) {

startRenderingVideo(data);

}

}

for(RemoteVideoStream stream : videoStreamsEventArgs.getRemovedRemoteVideoStreams()) {

stopRenderingVideo(stream);

}

}

public String getId(final RemoteParticipant remoteParticipant) {

final CommunicationIdentifier identifier = remoteParticipant.getIdentifier();

if (identifier instanceof PhoneNumberIdentifier) {

return ((PhoneNumberIdentifier) identifier).getPhoneNumber();

} else if (identifier instanceof MicrosoftTeamsUserIdentifier) {

return ((MicrosoftTeamsUserIdentifier) identifier).getUserId();

} else if (identifier instanceof CommunicationUserIdentifier) {

return ((CommunicationUserIdentifier) identifier).getId();

} else {

return ((UnknownIdentifier) identifier).getId();

}

}
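Without the rendering calls, the participant handling above comes down to bookkeeping: attach listeners only the first time a participant ID is seen, track added streams by ID, and drop streams when they're removed. The following sketch shows that bookkeeping with plain collections. ParticipantTracker and its methods are hypothetical, the string values stand in for StreamData, and IDs are plain strings and ints rather than SDK types. Removing the map entry when a stream goes away keeps stale entries from accumulating.

```java
import java.util.Map;
import java.util.Set;

// Hypothetical tracker for the state kept by handleAddedParticipants and videoStreamsUpdated.
record ParticipantTracker(Set<String> joinedParticipants, Map<Integer, String> activeStreams) {

    // Returns true only the first time an ID is seen, so listeners are attached once per participant.
    boolean markJoined(String participantId) {
        return joinedParticipants.add(participantId);
    }

    // Records a newly added remote stream; the value stands in for renderer state (StreamData).
    void streamAdded(int streamId, String ownerId) {
        activeStreams.put(streamId, ownerId);
    }

    // Forgets a removed stream.
    void streamRemoved(int streamId) {
        activeStreams.remove(streamId);
    }
}
```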

Render remote videos

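To start rendering a remote view, create a renderer for the remote video stream and attach its view to the layout. To stop rendering, dispose the renderer.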

void startRenderingVideo(StreamData data){

if (data.renderer != null) {

return;

}

GridLayout layout = ((GridLayout)findViewById(R.id.remotevideocontainer));

data.renderer = new VideoStreamRenderer(data.stream, this);

data.renderer.addRendererListener(new RendererListener() {

@Override

public void onFirstFrameRendered() {

String text = data.renderer.getSize().toString();

Log.i("MainActivity", "Video rendering at: " + text);

}

@Override

public void onRendererFailedToStart() {

String text = "Video failed to render";

Log.i("MainActivity", text);

}

});

data.rendererView = data.renderer.createView(new CreateViewOptions(ScalingMode.FIT));

data.rendererView.setTag(data.stream.getId());

runOnUiThread(() -> {

GridLayout.LayoutParams params = new GridLayout.LayoutParams(layout.getLayoutParams());

DisplayMetrics displayMetrics = new DisplayMetrics();

getWindowManager().getDefaultDisplay().getMetrics(displayMetrics);

params.height = (int)(displayMetrics.heightPixels / 2.5);

params.width = displayMetrics.widthPixels / 2;

layout.addView(data.rendererView, params);

});

}

void stopRenderingVideo(RemoteVideoStream stream) {

StreamData data = streamData.get(stream.getId());

if (data == null || data.renderer == null) {

return;

}

runOnUiThread(() -> {

GridLayout layout = findViewById(R.id.remotevideocontainer);

for(int i = 0; i < layout.getChildCount(); ++ i) {

View childView = layout.getChildAt(i);

if ((int)childView.getTag() == data.stream.getId()) {

layout.removeViewAt(i);

}

}

});

data.rendererView = null;

// Dispose renderer

data.renderer.dispose();

data.renderer = null;

}

static class StreamData {

RemoteVideoStream stream;

VideoStreamRenderer renderer;

VideoStreamRendererView rendererView;

StreamData(RemoteVideoStream stream, VideoStreamRenderer renderer, VideoStreamRendererView rendererView) {

this.stream = stream;

this.renderer = renderer;

this.rendererView = rendererView;

}

}
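In startRenderingVideo, each remote tile is half the display width wide and the display height divided by 2.5 tall, truncated to whole pixels. For example, on a hypothetical 1080 × 2400 px display, a tile is 540 × 960 px. The following sketch shows the arithmetic on its own. TileSize is a hypothetical name.

```java
// Hypothetical helper reproducing the tile sizing in startRenderingVideo.
record TileSize(int width, int height) {

    static TileSize forDisplay(int widthPixels, int heightPixels) {
        // Same formulas as the snippet: integer division for the width,
        // and height / 2.5 truncated toward zero by the (int) cast.
        return new TileSize(widthPixels / 2, (int) (heightPixels / 2.5));
    }
}
```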

Call state updates

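The call state can change from connected to disconnected. When the call connects, you handle the remote participant. When the call disconnects, you dispose previewRenderer to stop local video.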

private void handleCallOnStateChanged(PropertyChangedEvent args) {

if (call.getState() == CallState.CONNECTED) {

runOnUiThread(() -> Toast.makeText(this, "Call is CONNECTED", Toast.LENGTH_SHORT).show());

handleCallState();

}

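if (call.getState() == CallState.DISCONNECTED) {

runOnUiThread(() -> Toast.makeText(this, "Call is DISCONNECTED", Toast.LENGTH_SHORT).show());

if (previewRenderer != null) {

previewRenderer.dispose();

previewRenderer = null;

}

// State callbacks aren't guaranteed to arrive on the UI thread, so update views there.

runOnUiThread(() -> switchSourceButton.setVisibility(View.INVISIBLE));

}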

}
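handleCallOnStateChanged reacts to only two states and ignores the rest. Writing the decision as a pure function makes the mapping explicit and testable. The following sketch rests on some assumptions. CallPhase is a hypothetical enum that holds the two CallState values the handler uses plus OTHER for everything else, because the SDK's CallState also has values such as CONNECTING. UiAction names the reactions in the handler.

```java
// Hypothetical subset of the SDK's CallState, with OTHER covering every state the handler ignores.
enum CallPhase { CONNECTED, DISCONNECTED, OTHER }

// Hypothetical names for what handleCallOnStateChanged does in each case.
enum UiAction { SHOW_CONNECTED_AND_RENDER_REMOTE, SHOW_DISCONNECTED_AND_RELEASE_PREVIEW, NONE }

final class CallStateUi {
    private CallStateUi() {}

    // Exhaustive switch expression: adding a CallPhase value forces this mapping to be updated.
    static UiAction actionFor(CallPhase phase) {
        return switch (phase) {
            case CONNECTED -> UiAction.SHOW_CONNECTED_AND_RENDER_REMOTE;
            case DISCONNECTED -> UiAction.SHOW_DISCONNECTED_AND_RELEASE_PREVIEW;
            case OTHER -> UiAction.NONE;
        };
    }
}
```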

End a call

End the call by calling hangUp() on the call instance. Dispose previewRenderer to stop local video.

private void hangUp() {

if (call == null) {

return; // No active call to end.

}

try {

call.hangUp().get();

switchSourceButton.setVisibility(View.INVISIBLE);

} catch (ExecutionException | InterruptedException e) {

e.printStackTrace();

}

if (previewRenderer != null) {

previewRenderer.dispose();

previewRenderer = null;

}

}
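Both hangUp and the DISCONNECTED branch of handleCallOnStateChanged release previewRenderer, so cleanup can run twice for one call. A guard that runs the release action at most once makes this safe, even if the two paths race on different threads. The following plain-Java sketch shows such a guard. DisposeOnce is hypothetical, and the Runnable stands in for previewRenderer::dispose.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical wrapper that runs a release action at most once, even across threads.
record DisposeOnce(Runnable release, AtomicBoolean released) {

    DisposeOnce(Runnable release) {
        this(release, new AtomicBoolean(false));
    }

    // Returns true if this call performed the release, false if it had already happened.
    boolean dispose() {
        if (released.compareAndSet(false, true)) {
            release.run();
            return true;
        }
        return false;
    }
}
```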

Hide and show local video

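After the call starts, you can stop rendering and streaming local video with turnOffLocalVideo(). This method removes the view that wraps the local renderer, disposes the preview renderer, and stops sending the current stream. To resume streaming and render the local preview again, use turnOnLocalVideo(), which shows the video preview and starts streaming.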

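public void turnOnLocalVideo() {

// startVideo requires an active call.

if (call == null) {

return;

}

List<VideoDeviceInfo> cameras = deviceManager.getCameras();

if(!cameras.isEmpty()) {

try {

// If the call started audio only, no camera has been selected yet.

if (currentCamera == null) {

currentCamera = getNextAvailableCamera(null);

}

currentVideoStream = new LocalVideoStream(currentCamera, this);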

showPreview(currentVideoStream);

call.startVideo(this, currentVideoStream).get();

switchSourceButton.setVisibility(View.VISIBLE);

} catch (CallingCommunicationException acsException) {

acsException.printStackTrace();

} catch (ExecutionException | InterruptedException e) {

e.printStackTrace();

}

}

}

public void turnOffLocalVideo() {

try {

LinearLayout container = findViewById(R.id.localvideocontainer);

for (int i = 0; i < container.getChildCount(); ++i) {

Object tag = container.getChildAt(i).getTag();

if (tag != null && (int)tag == 0) {

container.removeViewAt(i);

}

}

switchSourceButton.setVisibility(View.INVISIBLE);

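if (previewRenderer != null) {

previewRenderer.dispose();

previewRenderer = null;

}

// stopVideo requires an active call and a stream that is being sent.

if (call != null && currentVideoStream != null) {

call.stopVideo(this, currentVideoStream).get();

}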

} catch (CallingCommunicationException acsException) {

acsException.printStackTrace();

} catch (ExecutionException | InterruptedException e) {

e.printStackTrace();

}

}
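When you remove child views by tag, as turnOffLocalVideo and stopRenderingVideo do, iterating from the last index down is the safer pattern. Removing index i shifts every later child one slot to the left, so a forward loop that then increments i skips the child that moved into slot i. In this quickstart the tags are usually unique, so the forward loops work. The backward form stays correct even if several children share a tag. The following plain-Java sketch uses a list of tags as a stand-in for the layout's children. TaggedViews is hypothetical, and removing from the list corresponds to ViewGroup.removeViewAt.

```java
import java.util.List;
import java.util.Objects;

// Hypothetical helper: childTags holds each child's tag, standing in for a ViewGroup's children.
final class TaggedViews {
    private TaggedViews() {}

    // Removes every child whose tag equals wantedTag. Iterating backwards means removing
    // index i never shifts an unvisited child into a slot the loop has already passed.
    static int removeByTag(List<Object> childTags, Object wantedTag) {
        int removed = 0;
        for (int i = childTags.size() - 1; i >= 0; i--) {
            if (Objects.equals(childTags.get(i), wantedTag)) {
                childTags.remove(i); // remove(int) removes by index
                removed++;
            }
        }
        return removed;
    }
}
```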

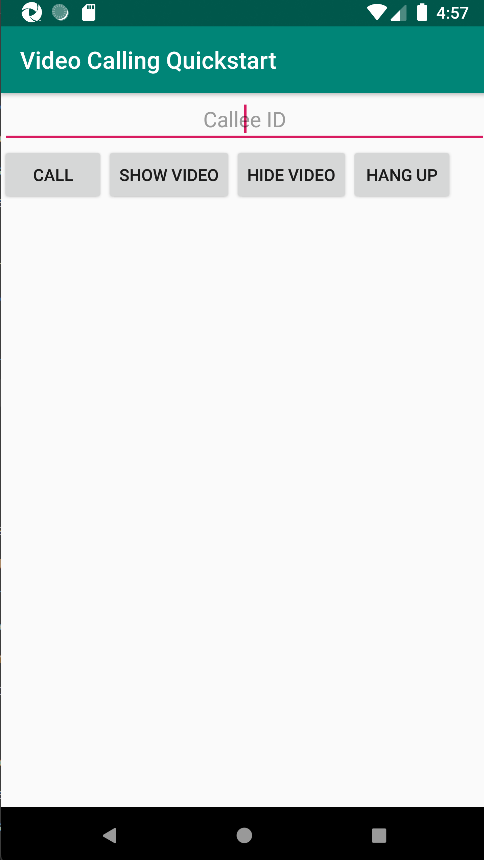

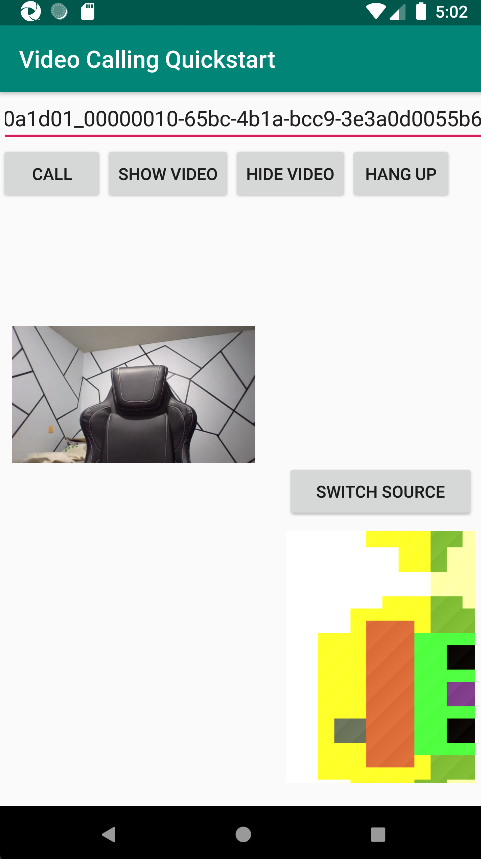

Run the code

You can now launch the app by using the [Run App] button on the Android Studio toolbar.

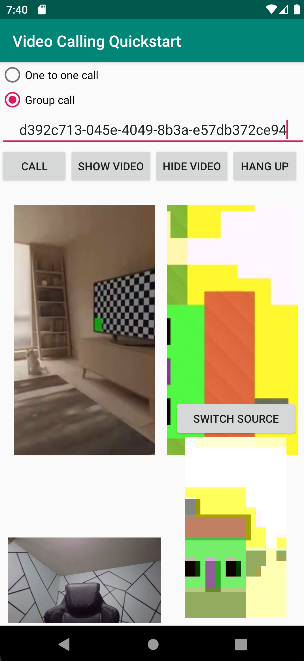

| Completed application | 1:1 call |
|---|---|

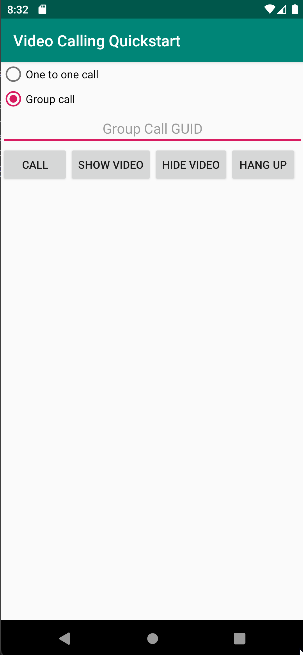

Add group calling functionality

Now you can update the app to let the user choose between a 1:1 call and a group call.

Update the layout

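Use radio buttons to select whether the SDK creates a 1:1 call or joins a group call. The radio buttons go at the top, so the first section of app/src/main/res/layout/activity_main.xml ends up as follows.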

<?xml version="1.0" encoding="utf-8"?>

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".MainActivity">

<LinearLayout

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:orientation="vertical">

<RadioGroup

android:layout_width="match_parent"

android:layout_height="wrap_content">

<RadioButton

android:id="@+id/one_to_one_call"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:text="One to one call" />

<RadioButton

android:id="@+id/group_call"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:text="Group call" />

</RadioGroup>

<EditText

android:id="@+id/call_id"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:ems="10"

android:gravity="center"

android:hint="Callee ID"

android:inputType="textPersonName"

app:layout_constraintBottom_toTopOf="@+id/call_button"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent"

app:layout_constraintTop_toTopOf="parent"

app:layout_constraintVertical_bias="0.064" />

.

.

.

</androidx.constraintlayout.widget.ConstraintLayout>

Update MainActivity.java

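Now you can update the elements and logic that decide when to create a 1:1 call and when to join a group call. Update the first part of the code to add the imports, fields, and other setup.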

Imports:

import android.widget.RadioButton;

import com.azure.android.communication.calling.GroupCallLocator;

import com.azure.android.communication.calling.JoinCallOptions;

import java.util.UUID;

Fields:

RadioButton oneToOneCall, groupCall;

Update onCreate():

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

getAllPermissions();

createAgent();

handleIncomingCall();

Button callButton = findViewById(R.id.call_button);

callButton.setOnClickListener(l -> startCall());

Button hangupButton = findViewById(R.id.hang_up);

hangupButton.setOnClickListener(l -> hangUp());

Button startVideo = findViewById(R.id.show_preview);

startVideo.setOnClickListener(l -> turnOnLocalVideo());

Button stopVideo = findViewById(R.id.hide_preview);

stopVideo.setOnClickListener(l -> turnOffLocalVideo());

switchSourceButton = findViewById(R.id.switch_source);

switchSourceButton.setOnClickListener(l -> switchSource());

setVolumeControlStream(AudioManager.STREAM_VOICE_CALL);

oneToOneCall = findViewById(R.id.one_to_one_call);

oneToOneCall.setOnClickListener(this::onCallTypeSelected);

oneToOneCall.setChecked(true);

groupCall = findViewById(R.id.group_call);

groupCall.setOnClickListener(this::onCallTypeSelected);

}

Update startCall():

private void startCall() {

Context context = this.getApplicationContext();

EditText callIdView = findViewById(R.id.call_id);

String callId = callIdView.getText().toString();

ArrayList<CommunicationIdentifier> participants = new ArrayList<CommunicationIdentifier>();

List<VideoDeviceInfo> cameras = deviceManager.getCameras();

if(oneToOneCall.isChecked()){

StartCallOptions options = new StartCallOptions();

if(!cameras.isEmpty()) {

currentCamera = getNextAvailableCamera(null);

currentVideoStream = new LocalVideoStream(currentCamera, context);

LocalVideoStream[] videoStreams = new LocalVideoStream[1];

videoStreams[0] = currentVideoStream;

VideoOptions videoOptions = new VideoOptions(videoStreams);

options.setVideoOptions(videoOptions);

showPreview(currentVideoStream);

}

participants.add(new CommunicationUserIdentifier(callId));

call = callAgent.startCall(

context,

participants,

options);

}

else{

JoinCallOptions options = new JoinCallOptions();

if(!cameras.isEmpty()) {

currentCamera = getNextAvailableCamera(null);

currentVideoStream = new LocalVideoStream(currentCamera, context);

LocalVideoStream[] videoStreams = new LocalVideoStream[1];

videoStreams[0] = currentVideoStream;

VideoOptions videoOptions = new VideoOptions(videoStreams);

options.setVideoOptions(videoOptions);

showPreview(currentVideoStream);

}

GroupCallLocator groupCallLocator = new GroupCallLocator(UUID.fromString(callId));

call = callAgent.join(

context,

groupCallLocator,

options);

}

remoteParticipantUpdatedListener = this::handleRemoteParticipantsUpdate;

onStateChangedListener = this::handleCallOnStateChanged;

call.addOnRemoteParticipantsUpdatedListener(remoteParticipantUpdatedListener);

call.addOnStateChangedListener(onStateChangedListener);

}
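In the group branch, UUID.fromString throws IllegalArgumentException when the text field doesn't hold a GUID. Because startCall runs on the UI thread, the exception crashes the app. UUID.fromString may also accept non-canonical strings with short groups, such as 1-1-1-1-1. Validating the input first lets the app show a message instead. The following minimal sketch does that. GroupIds.parse is a hypothetical helper, and accepting only the canonical 8-4-4-4-12 hexadecimal form is an assumption about what your app should allow.

```java
import java.util.Optional;
import java.util.UUID;
import java.util.regex.Pattern;

// Hypothetical validator for the "Group Call GUID" text field.
final class GroupIds {
    private GroupIds() {}

    // Canonical 8-4-4-4-12 hexadecimal form, for example 29228d3e-040e-4656-a70e-890ab4e173e5.
    private static final Pattern CANONICAL_GUID = Pattern.compile(
            "[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}");

    // Returns the parsed UUID, or Optional.empty() if the input isn't a canonical GUID.
    static Optional<UUID> parse(String input) {
        String trimmed = (input == null) ? "" : input.trim();
        if (!CANONICAL_GUID.matcher(trimmed).matches()) {
            return Optional.empty();
        }
        return Optional.of(UUID.fromString(trimmed));
    }
}
```

In startCall, you could call GroupIds.parse(callId) before constructing GroupCallLocator, then show a Toast and return if the result is empty.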

Add onCallTypeSelected():

public void onCallTypeSelected(View view) {

boolean checked = ((RadioButton) view).isChecked();

EditText callIdView = findViewById(R.id.call_id);

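// R.id fields can be non-final (the default since Android Gradle Plugin 8.0),

// and a switch needs constant case labels, so compare with if/else instead.

int id = view.getId();

if (checked && id == R.id.one_to_one_call) {

callIdView.setHint("Callee id");

} else if (checked && id == R.id.group_call) {

callIdView.setHint("Group Call GUID");

}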

}

Run the upgraded app

You can now launch the app by using the [Run App] button on the Android Studio toolbar.

| Screen update | Group call |
|---|---|

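Get started with Azure Communication Services by using the Communication Services Calling SDK to add one-to-one video calling to your app. You learn how to start and answer a video call by using the Azure Communication Services Calling SDK for iOS.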

Sample code

If you want to skip ahead to the end, you can download this quickstart as a sample on GitHub.

Prerequisites

Obtain an Azure account with an active subscription. Create an account for free.

A Mac running Xcode, along with a valid developer certificate installed into your Keychain.

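Create an active Communication Services resource. Create a Communication Services resource. You need to record your connection string for this quickstart.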

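A user access token for your Azure Communication Services resource. You can also use the Azure CLI and run the following command with your connection string to create a user and an access token.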

az communication identity token issue --scope voip --connection-string "yourConnectionString"

For details, see Use Azure CLI to Create and Manage Access Tokens.

Setup

Create the Xcode project

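In Xcode, create a new iOS project and select the [Single View App] template. This tutorial uses the SwiftUI framework, so set [Language] to [Swift] and [User Interface] to [SwiftUI]. You won't create tests during this quickstart, so you can clear [Include Tests] if you like.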

![Screenshot that shows the New Project window within Xcode.](media/ios/xcode-new-ios-project.png)

Install CocoaPods

Use this guide to install CocoaPods on your Mac.

Install the package and dependencies with CocoaPods

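To create a Podfile for your application, open the terminal, navigate to the project folder, and run pod init. Add the following code to the Podfile and save it. See the SDK supported versions.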

platform :ios, '13.0'

use_frameworks!

target 'VideoCallingQuickstart' do

pod 'AzureCommunicationCalling', '~> 1.0.0'

end

Run pod install.

Open the .xcworkspace with Xcode.

Use the XCFramework directly

If you aren't using CocoaPods as a dependency manager, you can download AzureCommunicationCalling.xcframework directly from our release page.

Note that AzureCommunicationCalling depends on AzureCommunicationCommon, so you must install AzureCommunicationCommon in your project as well.

Note

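Although AzureCommunicationCommon is a pure Swift package, you can't install it with Swift Package Manager for use with AzureCommunicationCalling. AzureCommunicationCalling is an Objective-C framework, and Swift Package Manager intentionally doesn't support the Swift-to-Objective-C interface header, so a copy installed through Swift Package Manager doesn't work with AzureCommunicationCalling. Install it through another dependency manager, or generate an xcframework from the AzureCommunicationCommon sources and import it into your project.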

Request access to the microphone and camera

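To access the device's microphone and camera, update your app's Information Property List with NSMicrophoneUsageDescription and NSCameraUsageDescription. Set each associated value to a string that the system includes in the dialog it uses to request access from the user.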

Right-click the Info.plist entry of the project tree and select [Open As] > [Source Code]. Add the following lines in the top-level <dict> section, and then save the file.

<key>NSMicrophoneUsageDescription</key>

<string>Need microphone access for VOIP calling.</string>

<key>NSCameraUsageDescription</key>

<string>Need camera access for video calling</string>

Set up the app framework

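Open your project's ContentView.swift file and add import declarations at the top of the file to import the AzureCommunicationCalling library and AVFoundation. AVFoundation is used to request audio permission from code.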

import AzureCommunicationCalling

import AVFoundation

Object model

The following classes and interfaces handle some of the major features of the Azure Communication Services Calling SDK for iOS.

| Name | Description |
|---|---|
| CallClient | CallClient is the main entry point to the Calling SDK. |
| CallAgent | CallAgent is used to start and manage calls. |
| CommunicationTokenCredential | CommunicationTokenCredential is used as the token credential to instantiate the CallAgent. |
| CommunicationIdentifier | CommunicationIdentifier represents the identity of the user, which can be one of the following options: CommunicationUserIdentifier, PhoneNumberIdentifier, or CallingApplication. |

Create the call agent

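Replace the implementation of the ContentView struct with some simple UI controls that let a user start and end a call. In this quickstart, we attach business logic to these controls.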

struct ContentView: View {

@State var callee: String = ""

@State var callClient: CallClient?

@State var callAgent: CallAgent?

@State var call: Call?

@State var deviceManager: DeviceManager?

@State var localVideoStream:[LocalVideoStream]?

@State var incomingCall: IncomingCall?

@State var sendingVideo:Bool = false

@State var errorMessage:String = "Unknown"

@State var remoteVideoStreamData:[Int32:RemoteVideoStreamData] = [:]

@State var previewRenderer:VideoStreamRenderer? = nil

@State var previewView:RendererView? = nil

@State var remoteRenderer:VideoStreamRenderer? = nil

@State var remoteViews:[RendererView] = []

@State var remoteParticipant: RemoteParticipant?

@State var remoteVideoSize:String = "Unknown"

@State var isIncomingCall:Bool = false

@State var callObserver:CallObserver?

@State var remoteParticipantObserver:RemoteParticipantObserver?

var body: some View {

NavigationView {

ZStack{

Form {

Section {

TextField("Who would you like to call?", text: $callee)

Button(action: startCall) {

Text("Start Call")

}.disabled(callAgent == nil)

Button(action: endCall) {

Text("End Call")

}.disabled(call == nil)

Button(action: toggleLocalVideo) {

HStack {

Text(sendingVideo ? "Turn Off Video" : "Turn On Video")

}

}

}

}

// Show incoming call banner

if (isIncomingCall) {

HStack() {

VStack {

Text("Incoming call")

.padding(10)

.frame(maxWidth: .infinity, alignment: .topLeading)

}

Button(action: answerIncomingCall) {

HStack {

Text("Answer")

}

.frame(width:80)

.padding(.vertical, 10)

.background(Color(.green))

}

Button(action: declineIncomingCall) {

HStack {

Text("Decline")

}

.frame(width:80)

.padding(.vertical, 10)

.background(Color(.red))

}

}

.frame(maxWidth: .infinity, alignment: .topLeading)

.padding(10)

.background(Color.gray)

}

ZStack{

VStack{

ForEach(remoteViews, id:\.self) { renderer in

ZStack{

VStack{

RemoteVideoView(view: renderer)

.frame(maxWidth: .infinity, maxHeight: .infinity)

.background(Color(.lightGray))

}

}

Button(action: endCall) {

Text("End Call")

}.disabled(call == nil)

Button(action: toggleLocalVideo) {

HStack {

Text(sendingVideo ? "Turn Off Video" : "Turn On Video")

}

}

}

}.frame(maxWidth: .infinity, maxHeight: .infinity, alignment: .topLeading)

VStack{

if(sendingVideo)

{

VStack{

PreviewVideoStream(view: previewView!)

.frame(width: 135, height: 240)

.background(Color(.lightGray))

}

}

}.frame(maxWidth:.infinity, maxHeight:.infinity,alignment: .bottomTrailing)

}

}

.navigationBarTitle("Video Calling Quickstart")

}.onAppear{

// Authenticate the client

// Initialize the CallAgent and access Device Manager

// Ask for permissions

}

}

}

//Functions and Observers

struct PreviewVideoStream: UIViewRepresentable {

let view:RendererView

func makeUIView(context: Context) -> UIView {

return view

}

func updateUIView(_ uiView: UIView, context: Context) {}

}

struct RemoteVideoView: UIViewRepresentable {

let view:RendererView

func makeUIView(context: Context) -> UIView {

return view

}

func updateUIView(_ uiView: UIView, context: Context) {}

}

struct ContentView_Previews: PreviewProvider {

static var previews: some View {

ContentView()

}

}

Authenticate the client

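To initialize a CallAgent instance, you need a user access token, which enables it to make and receive calls. If you don't have a token available, refer to the user access token documentation.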

Once you have a token, add the following code to the onAppear callback in ContentView.swift. Replace <USER ACCESS TOKEN> with a valid user access token for your resource:

var userCredential: CommunicationTokenCredential?

do {

userCredential = try CommunicationTokenCredential(token: "<USER ACCESS TOKEN>")

} catch {

print("ERROR: It was not possible to create user credential.")

return

}

Initialize the CallAgent and access the device manager

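To create a CallAgent instance from a CallClient, use the callClient.createCallAgent method, which asynchronously returns a CallAgent object once it's initialized. DeviceManager lets you enumerate the local devices that can be used in a call to transmit audio and video streams. It also lets you request permission from the user to access the microphone and camera.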

self.callClient = CallClient()

self.callClient?.createCallAgent(userCredential: userCredential!) { (agent, error) in

if error != nil {

print("ERROR: It was not possible to create a call agent.")

return

}

else {

self.callAgent = agent

print("Call agent successfully created.")

self.callAgent!.delegate = incomingCallHandler

self.callClient?.getDeviceManager { (deviceManager, error) in

if (error == nil) {

print("Got device manager instance")

self.deviceManager = deviceManager

} else {

print("Failed to get device manager instance")

}

}

}

}

Request permissions

Add the following code to the onAppear callback to request permissions for audio and video.

AVAudioSession.sharedInstance().requestRecordPermission { (granted) in

if granted {

AVCaptureDevice.requestAccess(for: .video) { (videoGranted) in

/* NO OPERATION */

}

}

}

Configure the audio session

You can use an AVAudioSession object to configure your app's audio session. Here's an example that enables Bluetooth audio devices for your app:

func configureAudioSession() -> Error? {

// Retrieve the audio session.

let audioSession: AVAudioSession = AVAudioSession.sharedInstance()

// set options to allow bluetooth device

let options: AVAudioSession.CategoryOptions = .allowBluetooth

var configError: Error?

do {

// Set the audio session category.

try audioSession.setCategory(.playAndRecord, options: options)

print("configureAudioSession successfully")

} catch {

print("configureAudioSession failed")

configError = error

}

return configError

}

Display local video

Before you start a call, you can manage video-related settings. In this quickstart, we implement toggling local video before or during a call.

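First, use deviceManager to access the local cameras. After you select the desired camera, construct a LocalVideoStream, create a VideoStreamRenderer for it, and attach the renderer's view to previewView. During the call, use startVideo or stopVideo to start or stop sending the LocalVideoStream to remote participants. This function also works when you handle incoming calls.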

func toggleLocalVideo() {

// toggling video before call starts

if (call == nil)

{

if(!sendingVideo)

{

// Reuse the DeviceManager obtained in onAppear. Creating a new CallClient here

// would replace the client that owns the CallAgent.

guard let deviceManager = deviceManager, let camera = deviceManager.cameras.first else {

return

}

let scalingMode = ScalingMode.fit

// Keep exactly one local stream so that localVideoStream!.first is the active one.

self.localVideoStream = [LocalVideoStream(camera: camera)]

previewRenderer = try! VideoStreamRenderer(localVideoStream: localVideoStream!.first!)

previewView = try! previewRenderer!.createView(withOptions: CreateViewOptions(scalingMode:scalingMode))

self.sendingVideo = true

}

else{

self.sendingVideo = false

self.previewView = nil

self.previewRenderer!.dispose()

self.previewRenderer = nil

}

}

// toggle local video during the call

else{

if (sendingVideo) {

call!.stopVideo(stream: localVideoStream!.first!) { (error) in

if (error != nil) {

print("cannot stop video")

}

else {

self.sendingVideo = false

self.previewView = nil

self.previewRenderer!.dispose()

self.previewRenderer = nil

}

}

}

else {

guard let deviceManager = deviceManager, let camera = deviceManager.cameras.first else {

return

}

let scalingMode = ScalingMode.fit

// Keep exactly one local stream so that localVideoStream!.first is the active one.

self.localVideoStream = [LocalVideoStream(camera: camera)]

previewRenderer = try! VideoStreamRenderer(localVideoStream: localVideoStream!.first!)

previewView = try! previewRenderer!.createView(withOptions: CreateViewOptions(scalingMode:scalingMode))

call!.startVideo(stream:(localVideoStream?.first)!) { (error) in

if (error != nil) {

print("cannot start video")

}

else {

self.sendingVideo = true

}

}

}

}

}

Place an outgoing call

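The startCall method is set as the action that runs when the user taps the [Start Call] button. In this quickstart, outgoing calls are audio-only by default. To start a call with video, set VideoOptions with a LocalVideoStream and pass it in startCallOptions to set the initial options for the call.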

func startCall() {

let startCallOptions = StartCallOptions()

if(sendingVideo)

{

if (self.localVideoStream == nil) {

self.localVideoStream = [LocalVideoStream]()

}

let videoOptions = VideoOptions(localVideoStreams: localVideoStream!)

startCallOptions.videoOptions = videoOptions

}

let callees:[CommunicationIdentifier] = [CommunicationUserIdentifier(self.callee)]

self.callAgent?.startCall(participants: callees, options: startCallOptions) { (call, error) in

setCallAndObserver(call: call, error: error)

}

}

CallObserver and RemoteParticipantObserver manage mid-call events and remote participants. We set the observers in the setCallAndObserver function.

func setCallAndObserver(call: Call!, error: Error?) {

if error == nil {

self.call = call

self.callObserver = CallObserver(self)

self.call!.delegate = self.callObserver

self.remoteParticipantObserver = RemoteParticipantObserver(self)

} else {

print("Failed to get call object")

}

}

Answer an incoming call

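To answer an incoming call, implement an IncomingCallHandler that displays the incoming call banner so the user can answer or decline the call. Put the following implementation in IncomingCallHandler.swift. The delegate method name didRecieveIncomingCall is spelled this way in the SDK's CallAgentDelegate protocol, so keep the spelling as shown.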

final class IncomingCallHandler: NSObject, CallAgentDelegate, IncomingCallDelegate {

public var contentView: ContentView?

private var incomingCall: IncomingCall?

private static var instance: IncomingCallHandler?

static func getOrCreateInstance() -> IncomingCallHandler {

if let c = instance {

return c

}

instance = IncomingCallHandler()

return instance!

}

private override init() {}

public func callAgent(_ callAgent: CallAgent, didRecieveIncomingCall incomingCall: IncomingCall) {

self.incomingCall = incomingCall

self.incomingCall?.delegate = self

contentView?.showIncomingCallBanner(self.incomingCall!)

}

public func callAgent(_ callAgent: CallAgent, didUpdateCalls args: CallsUpdatedEventArgs) {

if let removedCall = args.removedCalls.first {

contentView?.callRemoved(removedCall)

self.incomingCall = nil

}

}

}

Add the following code to the onAppear callback in ContentView.swift to create an instance of IncomingCallHandler:

let incomingCallHandler = IncomingCallHandler.getOrCreateInstance()

incomingCallHandler.contentView = self

After the CallAgent is created successfully, set the handler as the CallAgent's delegate:

self.callAgent!.delegate = incomingCallHandler

When there's an incoming call, IncomingCallHandler calls the showIncomingCallBanner function to display the answer and decline buttons.

func showIncomingCallBanner(_ incomingCall: IncomingCall?) {

isIncomingCall = true

self.incomingCall = incomingCall

}

The following code implements the actions attached to answer and decline. To answer the call with video, turn on local video and set the videoOptions of AcceptCallOptions with localVideoStream.

func answerIncomingCall() {

isIncomingCall = false

let options = AcceptCallOptions()

if (self.incomingCall != nil) {

guard let deviceManager = deviceManager else {

return

}

if (self.localVideoStream == nil) {

self.localVideoStream = [LocalVideoStream]()

}

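if(sendingVideo)

{

// Reuse the preview stream created by toggleLocalVideo; create one only if none exists.

if self.localVideoStream!.isEmpty, let camera = deviceManager.cameras.first {

localVideoStream!.append(LocalVideoStream(camera: camera))

}

let videoOptions = VideoOptions(localVideoStreams: localVideoStream!)

options.videoOptions = videoOptions

}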

self.incomingCall!.accept(options: options) { (call, error) in

setCallAndObserver(call: call, error: error)

}

}

}

func declineIncomingCall() {

self.incomingCall!.reject { (error) in }

isIncomingCall = false

}

Remote participant video streams

We can create a RemoteVideoStreamData class to handle rendering the video streams of remote participants.

public class RemoteVideoStreamData : NSObject, RendererDelegate {

public func videoStreamRenderer(didFailToStart renderer: VideoStreamRenderer) {

owner.errorMessage = "Renderer failed to start"

}

private var owner:ContentView

let stream:RemoteVideoStream

var renderer:VideoStreamRenderer? {

didSet {

if renderer != nil {

renderer!.delegate = self

}

}

}

var views:[RendererView] = []

init(view:ContentView, stream:RemoteVideoStream) {

owner = view

self.stream = stream

}

public func videoStreamRenderer(didRenderFirstFrame renderer: VideoStreamRenderer) {

let size:StreamSize = renderer.size

owner.remoteVideoSize = String(size.width) + " X " + String(size.height)

}

}

Subscribe to events

We can implement a CallObserver class to subscribe to a collection of events and be notified when values change during the call.

public class CallObserver: NSObject, CallDelegate, IncomingCallDelegate {

private var owner: ContentView

init(_ view:ContentView) {

owner = view

}

public func call(_ call: Call, didChangeState args: PropertyChangedEventArgs) {

if(call.state == CallState.connected) {

initialCallParticipant()

}

}

// render remote video streams when remote participant changes

public func call(_ call: Call, didUpdateRemoteParticipant args: ParticipantsUpdatedEventArgs) {

for participant in args.addedParticipants {

participant.delegate = owner.remoteParticipantObserver

for stream in participant.videoStreams {

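// Only the first remote stream is rendered in this 1:1 sample. Use break rather than

// return so that remoteParticipant below is still set for the added participant.

if !owner.remoteVideoStreamData.isEmpty {

break

}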

let data:RemoteVideoStreamData = RemoteVideoStreamData(view: owner, stream: stream)

let scalingMode = ScalingMode.fit

data.renderer = try! VideoStreamRenderer(remoteVideoStream: stream)

let view:RendererView = try! data.renderer!.createView(withOptions: CreateViewOptions(scalingMode:scalingMode))

data.views.append(view)

self.owner.remoteViews.append(view)

owner.remoteVideoStreamData[stream.id] = data

}

owner.remoteParticipant = participant

}

}

// Handle remote video streams when the call is connected

public func initialCallParticipant() {

for participant in owner.call!.remoteParticipants {

participant.delegate = owner.remoteParticipantObserver

for stream in participant.videoStreams {

renderRemoteStream(stream)

}

owner.remoteParticipant = participant

}

}

//create render for RemoteVideoStream and attach it to view

public func renderRemoteStream(_ stream: RemoteVideoStream!) {

if !owner.remoteVideoStreamData.isEmpty {

return

}

let data:RemoteVideoStreamData = RemoteVideoStreamData(view: owner, stream: stream)

let scalingMode = ScalingMode.fit

data.renderer = try! VideoStreamRenderer(remoteVideoStream: stream)

let view:RendererView = try! data.renderer!.createView(withOptions: CreateViewOptions(scalingMode:scalingMode))

self.owner.remoteViews.append(view)

owner.remoteVideoStreamData[stream.id] = data

}

}

Remote participant management

All remote participants are represented by the RemoteParticipant type and are available through the remoteParticipants collection on a call instance.

We can implement a RemoteParticipantObserver class to subscribe to updates on the remote video streams of remote participants.

public class RemoteParticipantObserver : NSObject, RemoteParticipantDelegate {
    private var owner:ContentView

    init(_ view:ContentView) {
        owner = view
    }

    public func renderRemoteStream(_ stream: RemoteVideoStream!) {
        let data:RemoteVideoStreamData = RemoteVideoStreamData(view: owner, stream: stream)
        let scalingMode = ScalingMode.fit
        data.renderer = try! VideoStreamRenderer(remoteVideoStream: stream)
        let view:RendererView = try! data.renderer!.createView(withOptions: CreateViewOptions(scalingMode:scalingMode))
        self.owner.remoteViews.append(view)
        owner.remoteVideoStreamData[stream.id] = data
    }

    // Render a RemoteVideoStream when the remote participant turns on video; dispose its renderer when the video is turned off
    public func remoteParticipant(_ remoteParticipant: RemoteParticipant, didUpdateVideoStreams args: RemoteVideoStreamsEventArgs) {
        for stream in args.addedRemoteVideoStreams {
            renderRemoteStream(stream)
        }
        for stream in args.removedRemoteVideoStreams {
            // Dispose only the renderer of the removed stream, and drop its entry
            if let data = owner.remoteVideoStreamData.removeValue(forKey: stream.id) {
                data.renderer?.dispose()
            }
            // In a 1:1 call there is at most one remote view, so clear them all
            owner.remoteViews.removeAll()
        }
    }
}
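The observer keeps one renderer per remote stream, keyed by stream ID, and releases renderers as streams go away. Here is a minimal JavaScript sketch of that keyed bookkeeping. It assumes update events carry `added` and `removed` arrays of objects with an `id`. To our understanding, this is the shape the Web SDK uses for its `videoStreamsUpdated` event. `createRenderer` is a hypothetical stand-in that returns any object with a `dispose()` method.

```javascript
// Sketch of per-stream bookkeeping for video stream updates.
// `createRenderer` is a hypothetical stand-in for VideoStreamRenderer; it must
// return an object with a dispose() method.
function onVideoStreamsUpdated(renderedStreams, { added, removed }, createRenderer) {
  for (const stream of added) {
    // Skip streams that already have a renderer
    if (!renderedStreams.has(stream.id)) {
      renderedStreams.set(stream.id, createRenderer(stream));
    }
  }
  for (const stream of removed) {
    const renderer = renderedStreams.get(stream.id);
    if (renderer) {
      // Release only the renderer that belongs to the stream that went away
      renderer.dispose();
      renderedStreams.delete(stream.id);
    }
  }
}

// Usage with a fake renderer that records which streams were disposed
const disposed = [];
const makeRenderer = (s) => ({ dispose: () => disposed.push(s.id) });
const streams = new Map();
onVideoStreamsUpdated(streams, { added: [{ id: 7 }, { id: 8 }], removed: [] }, makeRenderer);
onVideoStreamsUpdated(streams, { added: [], removed: [{ id: 7 }] }, makeRenderer);
console.log([...streams.keys()], disposed); // [ 8 ] [ 7 ]
```

Keying by stream ID means a removal disposes only the renderer of the stream that stopped, rather than every renderer the page holds.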

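Run the code

You can build and run the app on the iOS simulator by selecting Product > Run or by using the (⌘-R) keyboard shortcut.

In this quickstart, you learn how to start a 1:1 video call by using the Azure Communication Services Calling SDK for Windows.

UWP sample code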

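Prerequisites

To complete this tutorial, you need the following prerequisites:

An Azure account with an active subscription. Create an account for free.

Visual Studio 2022 with the Universal Windows Platform development workload installed.

A deployed Communication Services resource. Create a Communication Services resource. You need to record your connection string for this quickstart.

A user access token for your Azure Communication Services resource. You can also use the Azure CLI and run the following command with your connection string to create a user and an access token.

az communication identity token issue --scope voip --connection-string "yourConnectionString"

For more information, see Use Azure CLI to create and manage access tokens.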
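User access tokens expire (by default, 24 hours after they are issued), and the Windows code later in this quickstart declares a CallTokenRefreshOptions field for token refresh. If the token is a standard JWT, which is an assumption here, you can read its `exp` claim locally to decide when to request a new one. The JavaScript sketch below needs Node.js 16 or later for `base64url` support. It decodes the payload without verifying the signature, so use it only to schedule refreshes and never to make trust decisions. `tokenExpiresAt`, `isTokenExpired`, and the one-minute skew are illustrative choices, not SDK APIs.

```javascript
// Sketch: read the `exp` claim of a user access token, assuming it is a standard JWT
// (header.payload.signature, base64url-encoded JSON). The signature is NOT verified.
function tokenExpiresAt(token) {
  const parts = token.split(".");
  if (parts.length !== 3) {
    throw new Error("Not a JWT: expected three dot-separated parts");
  }
  const payload = JSON.parse(Buffer.from(parts[1], "base64url").toString("utf8"));
  if (typeof payload.exp !== "number") {
    throw new Error("Token has no numeric exp claim");
  }
  return new Date(payload.exp * 1000); // exp is in seconds since the Unix epoch
}

function isTokenExpired(token, now = new Date(), skewMs = 60_000) {
  // Treat a token that expires within `skewMs` as already expired
  return tokenExpiresAt(token).getTime() - skewMs <= now.getTime();
}

// Usage with a locally built, unsigned example token
const encode = (obj) => Buffer.from(JSON.stringify(obj)).toString("base64url");
const sample = `${encode({ alg: "none" })}.${encode({ exp: 1700000000 })}.`;
console.log(tokenExpiresAt(sample).toISOString()); // 2023-11-14T22:13:20.000Z
console.log(isTokenExpired(sample, new Date("2023-01-01T00:00:00Z"))); // false
```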

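Setup

Create the project

In Visual Studio, create a new project with the Blank App (Universal Windows) template to set up a single-page Universal Windows Platform (UWP) app.

![Screenshot that shows the New UWP Project window within Visual Studio.](media/windows/create-a-new-project.png)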

Install the package

Right-click your project and go to Manage NuGet Packages to install Azure.Communication.Calling.WindowsClient 1.2.0-beta.1 or later. Make sure Include prerelease is selected.
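The requirement "1.2.0-beta.1 or later" follows Semantic Versioning precedence. A prerelease ranks below the release with the same major.minor.patch, and prerelease identifiers are compared dot by dot, numerically when both parts are numbers. The JavaScript sketch below applies the SemVer 2.0.0 rules to show which versions satisfy that minimum. It is an illustration only. NuGet's own comparer may differ in edge cases such as letter case in prerelease labels.

```javascript
// Sketch of SemVer 2.0.0 precedence, enough to decide whether a version is
// "1.2.0-beta.1 or later". Build metadata (+...) is ignored, as SemVer requires.
function parseVersion(version) {
  const withoutBuild = version.split("+")[0];
  const dash = withoutBuild.indexOf("-");
  const core = dash === -1 ? withoutBuild : withoutBuild.slice(0, dash);
  const pre = dash === -1 ? [] : withoutBuild.slice(dash + 1).split(".");
  return { nums: core.split(".").map(Number), pre };
}

function compareIdentifiers(a, b) {
  const aNum = /^\d+$/.test(a);
  const bNum = /^\d+$/.test(b);
  if (aNum && bNum) return Math.sign(Number(a) - Number(b));
  if (aNum) return -1; // numeric identifiers rank below alphanumeric ones
  if (bNum) return 1;
  return a < b ? -1 : a > b ? 1 : 0; // ASCII order
}

// Returns -1, 0, or 1
function compareVersions(a, b) {
  const va = parseVersion(a);
  const vb = parseVersion(b);
  for (let i = 0; i < 3; i++) {
    const diff = (va.nums[i] ?? 0) - (vb.nums[i] ?? 0);
    if (diff !== 0) return Math.sign(diff);
  }
  // A release (no prerelease tag) ranks above any prerelease of the same version
  if (va.pre.length === 0 || vb.pre.length === 0) {
    return Math.sign(vb.pre.length - va.pre.length);
  }
  const n = Math.min(va.pre.length, vb.pre.length);
  for (let i = 0; i < n; i++) {
    const diff = compareIdentifiers(va.pre[i], vb.pre[i]);
    if (diff !== 0) return diff;
  }
  // With an equal prefix, the longer prerelease ranks higher
  return Math.sign(va.pre.length - vb.pre.length);
}

// Usage: which candidates satisfy the minimum?
const minimum = "1.2.0-beta.1";
for (const v of ["1.1.0", "1.2.0-alpha.3", "1.2.0-beta.1", "1.2.0-beta.2", "1.2.0"]) {
  console.log(v, compareVersions(v, minimum) >= 0);
}
// 1.1.0 false, 1.2.0-alpha.3 false, 1.2.0-beta.1 true, 1.2.0-beta.2 true, 1.2.0 true
```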

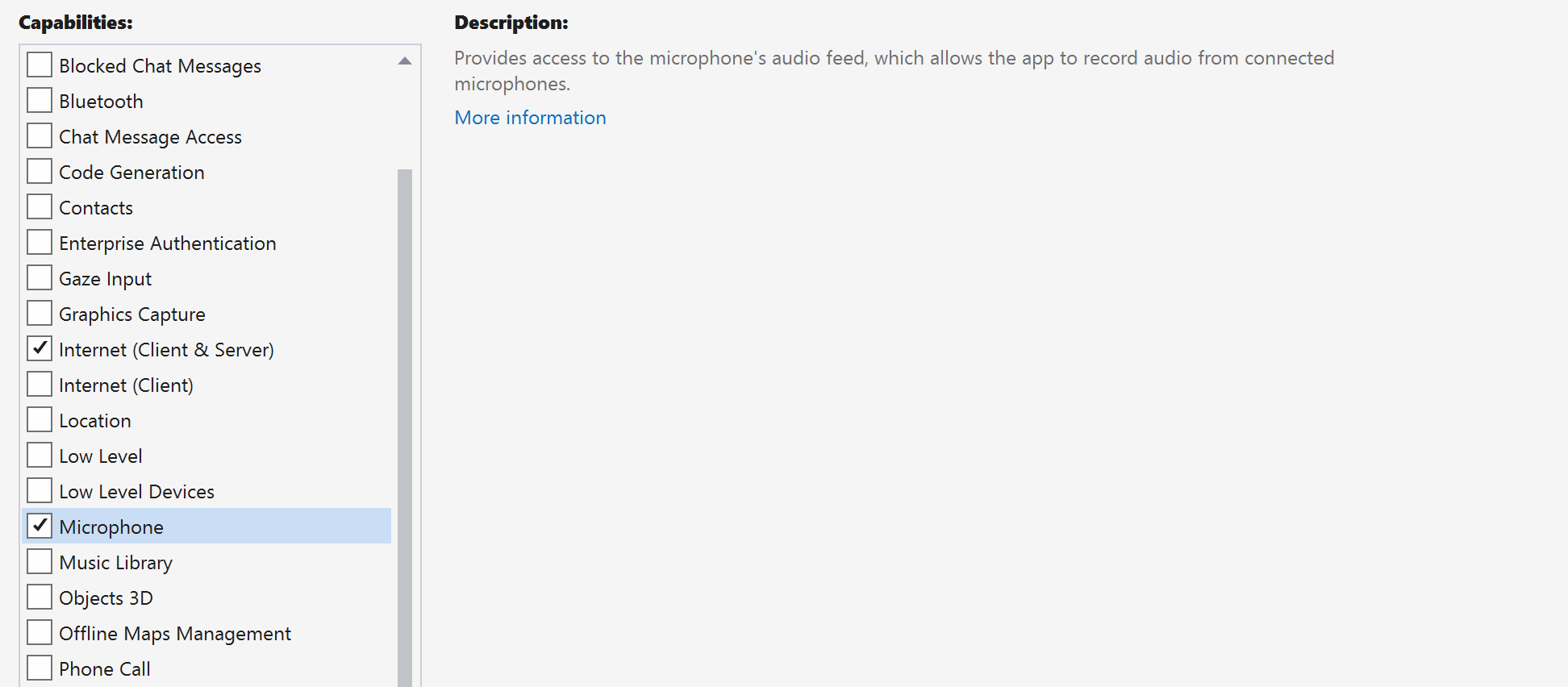

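Request access

Go to Package.appxmanifest and select Capabilities.

Check Internet (Client & Server) to gain inbound and outbound access to the internet.

Check Microphone to access the audio feed of the microphone.

Check WebCam to access the device's camera.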
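Behind the designer, those three check boxes are written to the manifest's Capabilities element. To our understanding, they appear as a `Capability` named `internetClientServer` and `DeviceCapability` entries named `microphone` and `webcam`. When you edit the manifest by hand, a quick check that these entries are still present can catch a missing permission before you run the app. The JavaScript sketch below performs that check with a regular expression. The name mapping and the `missingCapabilities` helper are assumptions for illustration, not part of the quickstart.

```javascript
// Capability entries that the three check boxes are assumed to produce in
// Package.appxmanifest (designer label shown in the trailing comment).
const REQUIRED_CAPABILITIES = [
  { element: "Capability", name: "internetClientServer" }, // Internet (Client & Server)
  { element: "DeviceCapability", name: "microphone" }, // Microphone
  { element: "DeviceCapability", name: "webcam" }, // WebCam
];

// Returns the names of required capabilities that the manifest text lacks.
// Regex-based sketch: it allows an optional namespace prefix (for example "uap:")
// but expects Name to be the first attribute and does not skip XML comments.
function missingCapabilities(manifestXml) {
  return REQUIRED_CAPABILITIES.filter(({ element, name }) => {
    const pattern = new RegExp(`<(?:\\w+:)?${element}\\s+Name="${name}"`);
    return !pattern.test(manifestXml);
  }).map(({ name }) => name);
}

// Usage: a manifest fragment where Microphone was left unchecked
const manifest = `
<Capabilities>
  <Capability Name="internetClientServer" />
  <DeviceCapability Name="webcam" />
</Capabilities>`;
console.log(missingCapabilities(manifest)); // [ 'microphone' ]
```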

Add the following code to your Package.appxmanifest by right-clicking the file and choosing View Code.

<Extensions>
    <Extension Category="windows.activatableClass.inProcessServer">
        <InProcessServer>
            <Path>RtmMvrUap.dll</Path>
            <ActivatableClass ActivatableClassId="VideoN.VideoSchemeHandler" ThreadingModel="both" />
        </InProcessServer>
    </Extension>
</Extensions>

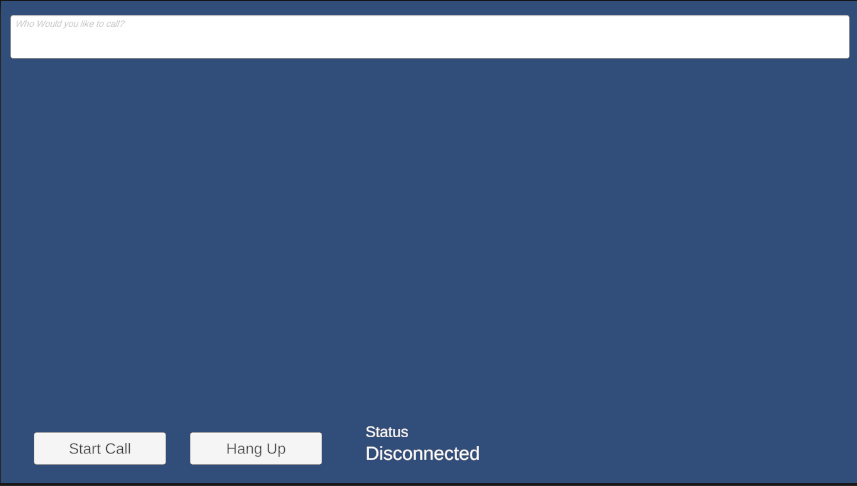

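Set up the app framework

We need to configure a basic layout to attach our logic. To place an outbound call, we need a TextBox to provide the callee's user ID. We also need a Start/Join call button and a Hang up button.

The layout also has to preview the local video and render the other participant's remote video, so it contains two elements for displaying the video streams: the LocalVideo and RemoteVideo MediaPlayerElement controls.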
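The callee TextBox accepts free text, so it can help to trim and sanity-check the input before starting a call. The minimal JavaScript sketch below assumes that Communication Services user IDs begin with `8:acs:`, following the `'8:acs:resourceId_userId'` format shown in the web section of this quickstart. `parseCalleeId` is a hypothetical helper. The C# app would apply the same check to `CalleeTextBox.Text`, and the sketch deliberately rejects other identifier kinds such as phone numbers.

```javascript
// Sketch: normalize and sanity-check the callee text before starting a call.
// Assumes Communication Services user IDs start with "8:acs:".
const ACS_USER_PREFIX = "8:acs:";

function parseCalleeId(rawInput) {
  const id = rawInput.trim();
  if (id.length === 0) {
    return { ok: false, error: "Enter the callee's user ID" };
  }
  // Require the prefix plus at least one character after it
  if (!id.startsWith(ACS_USER_PREFIX) || id.length === ACS_USER_PREFIX.length) {
    return { ok: false, error: "Expected an ID in the format 8:acs:resourceId_userId" };
  }
  return { ok: true, id };
}

console.log(parseCalleeId("  8:acs:abc_123  ")); // { ok: true, id: '8:acs:abc_123' }
console.log(parseCalleeId("alice").ok); // false
```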

Open your project's MainPage.xaml and replace its content with the following implementation.

<Page
    x:Class="CallingQuickstart.MainPage"
    xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
    xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
    xmlns:local="using:CallingQuickstart"
    xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
    xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
    mc:Ignorable="d"
    Background="{ThemeResource ApplicationPageBackgroundThemeBrush}">

    <Grid x:Name="MainGrid" HorizontalAlignment="Stretch">
        <Grid.RowDefinitions>
            <RowDefinition Height="*"/>
            <RowDefinition Height="Auto"/>
            <RowDefinition Height="200*"/>
            <RowDefinition Height="60*"/>
            <RowDefinition Height="Auto"/>
        </Grid.RowDefinitions>

        <Grid Grid.Row="0" x:Name="AppTitleBar" Background="LightSeaGreen">
            <!-- Width of the padding columns is set in LayoutMetricsChanged handler. -->
            <!-- Using padding columns instead of Margin ensures that the background paints the area under the caption control buttons (for transparent buttons). -->
            <TextBlock x:Name="QuickstartTitle" Text="Calling Quickstart sample title bar" Style="{StaticResource CaptionTextBlockStyle}" Padding="4,4,0,0"/>
        </Grid>

        <TextBox Grid.Row="1" x:Name="CalleeTextBox" PlaceholderText="Who would you like to call?" TextWrapping="Wrap" VerticalAlignment="Center" />

        <Grid Grid.Row="2" Background="LightGray">
            <Grid.RowDefinitions>
                <RowDefinition/>
            </Grid.RowDefinitions>
            <Grid.ColumnDefinitions>
                <ColumnDefinition Width="*"/>
                <ColumnDefinition Width="*"/>
            </Grid.ColumnDefinitions>
            <MediaPlayerElement x:Name="LocalVideo" HorizontalAlignment="Center" Stretch="UniformToFill" Grid.Column="0" VerticalAlignment="Center" AutoPlay="True" />
            <MediaPlayerElement x:Name="RemoteVideo" HorizontalAlignment="Center" Stretch="UniformToFill" Grid.Column="1" VerticalAlignment="Center" AutoPlay="True" />
        </Grid>

        <StackPanel Grid.Row="3" Orientation="Vertical" Grid.RowSpan="2">
            <StackPanel Orientation="Horizontal" Margin="10">
                <TextBlock VerticalAlignment="Center">Cameras:</TextBlock>
                <ComboBox x:Name="CameraList" HorizontalAlignment="Left" Grid.Column="0" DisplayMemberPath="Name" SelectionChanged="CameraList_SelectionChanged" Margin="10"/>
            </StackPanel>
            <StackPanel Orientation="Horizontal">
                <Button x:Name="CallButton" Content="Start/Join call" Click="CallButton_Click" VerticalAlignment="Center" Margin="10,0,0,0" Height="40" Width="123"/>
                <Button x:Name="HangupButton" Content="Hang up" Click="HangupButton_Click" VerticalAlignment="Center" Margin="10,0,0,0" Height="40" Width="123"/>
                <CheckBox x:Name="MuteLocal" Content="Mute" Margin="10,0,0,0" Click="MuteLocal_Click" Width="74"/>
                <CheckBox x:Name="BackgroundBlur" Content="Background blur" Width="142" Margin="10,0,0,0" Click="BackgroundBlur_Click"/>
            </StackPanel>
        </StackPanel>

        <TextBox Grid.Row="4" x:Name="Stats" Text="" TextWrapping="Wrap" VerticalAlignment="Center" Height="30" Margin="0,2,0,0" BorderThickness="2" IsReadOnly="True" Foreground="LightSlateGray" />
    </Grid>
</Page>

Open App.xaml.cs (right-click it and choose View Code) and add this line at the top:

using CallingQuickstart;

Open MainPage.xaml.cs (right-click it and choose View Code) and replace its content with the following implementation:

using Azure.Communication.Calling.WindowsClient;
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;
using Windows.ApplicationModel;
using Windows.ApplicationModel.Core;
using Windows.Media.Core;
using Windows.Networking.PushNotifications;
using Windows.UI;
using Windows.UI.ViewManagement;
using Windows.UI.Xaml;
using Windows.UI.Xaml.Controls;
using Windows.UI.Xaml.Media;
using Windows.UI.Xaml.Navigation;

namespace CallingQuickstart
{
    public sealed partial class MainPage : Page
    {
        private const string authToken = "<Azure Communication Services auth token>";

        private CallClient callClient;
        private CallTokenRefreshOptions callTokenRefreshOptions;
        private CallAgent callAgent;