Healthcare data foundations offers ready-to-run data pipelines designed to efficiently structure data for analytics and AI/machine learning modeling. Deploy the healthcare data foundations capability before you deploy any other healthcare data solutions capability.

Deploy healthcare data foundations

The healthcare data foundations capability automatically deploys via the Setup your solution wizard on the healthcare data solutions home page. Deploying this capability is a mandatory step after you deploy your healthcare data solutions environment. For the detailed steps, see Deploy healthcare data foundations.

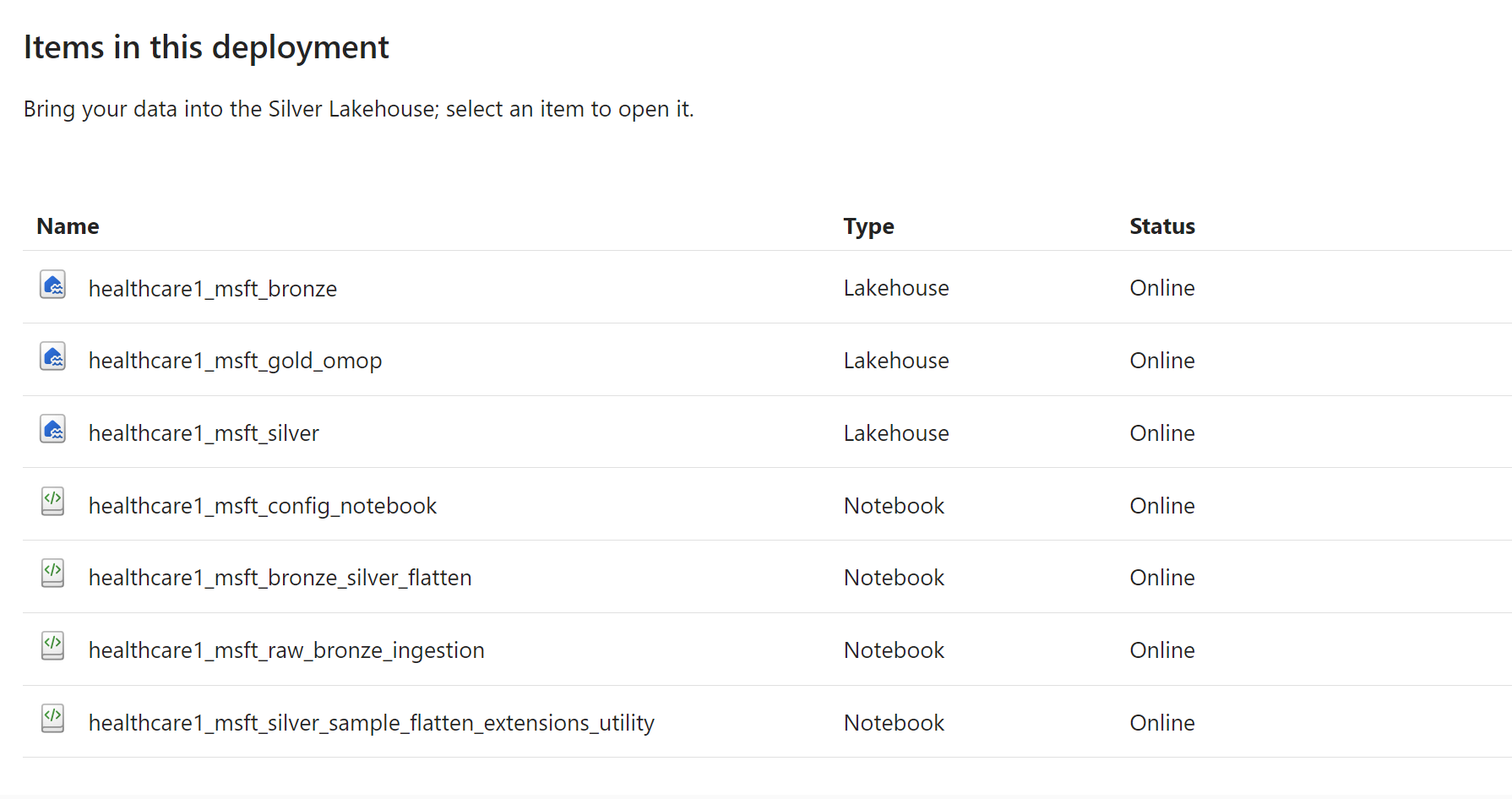

After deployment, you can find the capability listed on the healthcare data solutions home page under Data preparation. Select the capability to explore the deployed artifacts.

Artifacts

The following table lists the details for the Fabric environment, the data pipeline, and the notebooks deployed by the healthcare data foundations capability. To learn more about the deployed lakehouses, see Medallion lakehouse design.

Caution

The following artifacts deploy with preconfigured values required for healthcare data solutions in Microsoft Fabric. Make sure that you don't modify any of the configuration values for these artifacts.

| Artifact | Type | Description |
|---|---|---|
| healthcare#_environment | Fabric environment | Preconfigures the required Fabric runtime version (Runtime 1.2 (Apache Spark 3.4 and Delta Lake 2.4)) and provides the other public and custom libraries required by healthcare data solutions in Microsoft Fabric. The data pipelines use this environment instead of the workspace-level runtime settings. |
| healthcare#_msft_config_notebook | Notebook | Reads and populates the global configuration values from the admin lakehouse. This notebook's parameters are preconfigured during deployment. |
| healthcare#_msft_bronze_silver_flatten | Notebook | Flattens the clinical dataset from the ClinicalFhir table in the bronze lakehouse into the respective FHIR resource tables and other tables of the healthcare data model in the silver lakehouse. Only primary-level fields are flattened in the silver lakehouse; nested or deeper hierarchical structures retain their original structure. Note: Don't run more than one instance of this notebook at a time, because concurrent runs cause inconsistent results. |
| healthcare#_msft_fhir_flattening_sample | Notebook | FHIR extensions are child elements that represent additional information about an element in a FHIR resource. Currently, the healthcare data model in the silver lakehouse represents these extensions as strings. This notebook provides examples of how to access this extension data and use it within a DataFrame. |
| healthcare#_msft_fhir_ndjson_bronze_ingestion | Notebook | Ingests FHIR NDJSON data into Delta tables in the bronze lakehouse. Note: Don't run more than one instance of this notebook at a time, because concurrent runs cause inconsistent results. |
| healthcare#_msft_raw_process_movement | Notebook | Uses the healthcare data solutions library to extract ZIP files and organize files for multiple modalities, which contain various namespaces and file extensions. The notebook moves the files based on the fileOrchestrationConfig.json configuration, which contains mapping details such as modality, modality_format, and extension. It adds a timestamp prefix to the original file names to keep files unique across multiple source systems. |
| healthcare#_msft_clinical_data_foundation_ingestion | Data pipeline | Orchestrates the notebooks and activities required for end-to-end ingestion of clinical data from the unified folder structure in the bronze lakehouse to the healthcare data model in the silver lakehouse. |
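
The ingestion and flattening notebooks are preconfigured and shouldn't be modified, but the underlying idea can be illustrated outside Fabric. The following minimal Python sketch, which doesn't use Spark or the healthcare data solutions library, parses FHIR NDJSON (one JSON resource per line), groups the resources by `resourceType`, and builds one row per resource from its primary-level fields only, so nested objects and arrays keep their original structure. The helper names `parse_ndjson` and `to_table` are hypothetical and don't describe how the notebooks are actually implemented.

```python
import json
from collections import defaultdict
from typing import Any, Dict, Iterable, List, Tuple


def parse_ndjson(lines: Iterable[str]) -> Dict[str, List[dict]]:
    """Parse NDJSON lines and group the FHIR resources by resourceType (hypothetical helper)."""
    by_type: Dict[str, List[dict]] = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip empty lines defensively
        resource = json.loads(line)
        by_type[resource["resourceType"]].append(resource)
    return dict(by_type)


def to_table(resources: List[dict]) -> Tuple[List[str], List[List[Any]]]:
    """Build columns from the union of primary-level keys, one row per resource.

    Nested objects and arrays are stored unchanged in their column instead of
    being flattened further; fields a resource omits become None.
    """
    columns = sorted({key for resource in resources for key in resource})
    rows = [[resource.get(col) for col in columns] for resource in resources]
    return columns, rows


if __name__ == "__main__":
    sample = [
        '{"resourceType": "Patient", "id": "p1", "gender": "female", "name": [{"family": "Doe"}]}',
        '{"resourceType": "Observation", "id": "o1", "status": "final", "code": {"text": "Heart rate"}}',
        '{"resourceType": "Patient", "id": "p2", "birthDate": "1980-01-01"}',
    ]
    groups = parse_ndjson(sample)
    columns, rows = to_table(groups["Patient"])
    print(columns)  # ['birthDate', 'gender', 'id', 'name', 'resourceType']
    print(rows[0])  # [None, 'female', 'p1', [{'family': 'Doe'}], 'Patient']
```

Using the union of top-level keys as the column set means a resource that omits an optional field simply gets a null in that column, which is one straightforward way for a per-resource table to hold sparse FHIR data.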
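
Because the silver lakehouse represents FHIR extensions as strings, reading an extension value means parsing that string first. The following sketch assumes, for illustration only, that an extension column holds a JSON-serialized array of FHIR extension objects, each with a `url` and a single `value[x]` element such as `valueString`. The function `get_extension_value` is hypothetical and isn't taken from the healthcare#_msft_fhir_flattening_sample notebook; check that notebook for the supported approach.

```python
import json
from typing import Any, Optional


def get_extension_value(extension_json: Optional[str], url: str) -> Any:
    """Return the value[x] of the first extension with a matching url, else None.

    Assumes the column holds a JSON array of FHIR extension objects serialized
    as a string (an illustrative assumption, not a documented format).
    """
    if not extension_json:
        return None
    for extension in json.loads(extension_json):
        if extension.get("url") != url:
            continue
        # A simple FHIR extension carries its value in one value[x] element,
        # for example valueString or valueCodeableConcept.
        for key, value in extension.items():
            if key.startswith("value"):
                return value
    # Complex extensions that only nest other extensions have no value[x]
    # and fall through to None in this sketch.
    return None


if __name__ == "__main__":
    url = "http://hl7.org/fhir/StructureDefinition/patient-mothersMaidenName"
    column_value = json.dumps([{"url": url, "valueString": "Smith"}])
    print(get_extension_value(column_value, url))  # Smith
```

In a Spark notebook, you could wrap a function like this with `pyspark.sql.functions.udf`, or use Spark's built-in JSON functions such as `from_json`, to apply the same logic to a DataFrame column.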

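The timestamp-prefix idea behind healthcare#_msft_raw_process_movement can be sketched as follows. The mapping below only imitates the kind of information that fileOrchestrationConfig.json holds (modality, modality_format, and extension); its keys, values, and the resulting folder layout are hypothetical, as is the `destination_path` function.

```python
import os
from datetime import datetime, timezone
from typing import Dict, Optional

# Hypothetical extension-to-modality mapping, loosely modeled on the fields that
# fileOrchestrationConfig.json is described as containing. Not the real schema.
ORCHESTRATION_CONFIG: Dict[str, Dict[str, str]] = {
    ".ndjson": {"modality": "Clinical", "modality_format": "FHIR-NDJSON"},
    ".dcm": {"modality": "Imaging", "modality_format": "DICOM"},
}


def destination_path(file_name: str, root: str,
                     now: Optional[datetime] = None) -> Optional[str]:
    """Return a hypothetical target path for a raw file, or None if unmapped."""
    _, ext = os.path.splitext(file_name)
    mapping = ORCHESTRATION_CONFIG.get(ext.lower())
    if mapping is None:
        return None  # unknown extension: this sketch leaves the file alone
    if now is None:
        now = datetime.now(timezone.utc)
    # Microsecond-resolution UTC timestamp, prepended to the original name.
    stamp = now.strftime("%Y%m%dT%H%M%S%fZ")
    return "/".join(
        [root, mapping["modality"], mapping["modality_format"], f"{stamp}_{file_name}"]
    )


if __name__ == "__main__":
    fixed = datetime(2024, 1, 2, 3, 4, 5, tzinfo=timezone.utc)
    print(destination_path("patients.ndjson", "process", fixed))
    # process/Clinical/FHIR-NDJSON/20240102T030405000000Z_patients.ndjson
```

Prefixing rather than replacing the original file name keeps the source name readable while making collisions unlikely when two source systems deliver files with the same name.
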
References

- How to use Microsoft Fabric notebooks

- What is a lakehouse in Microsoft Fabric?

- Ingest data into your Warehouse using data pipelines

- Create, configure, and use an environment in Microsoft Fabric