Tutorial: Live Video with Computer Vision for Spatial Analysis (preview)


Alternatively, check out topics under Create video applications in the service.

Note

Azure Video Analyzer has been retired and is no longer available.

Azure Video Analyzer for Media is not affected by this retirement. It is now rebranded as Azure Video Indexer.

This tutorial shows you how to use Azure Video Analyzer together with the Computer Vision for spatial analysis AI service from Azure Cognitive Services to analyze a live video feed from a (simulated) IP camera. You'll see how this inference server enables you to analyze the streaming video to understand spatial relationships between people and their movement in physical space. A subset of the frames in the video feed is sent to this inference server, and the results are sent to IoT Edge Hub. When certain conditions are met, video clips are recorded and stored as videos in the Video Analyzer account.

In this tutorial you will:

- Set up resources

- Examine the code

- Run the sample code

- Monitor events

If you don't have an Azure subscription, create an Azure free account before you begin.

Suggested pre-reading

Read these articles before you begin:

- Video Analyzer overview

- Video Analyzer terminology

- Pipeline concepts

- Event-based video recording

- Azure Cognitive Services Computer Vision container for spatial analysis

Prerequisites

The following are prerequisites for connecting the spatial-analysis module to the Azure Video Analyzer module:

An Azure account that includes an active subscription. Create an account for free if you don't already have one.

Note

You will need an Azure subscription in which you have both the Contributor role and the User Access Administrator role. If you do not have the right permissions, reach out to your account administrator to grant you those permissions.

Visual Studio Code, with the following extensions:

Tip

When you're installing the Azure IoT Tools extension, you might be prompted to install Docker. Feel free to ignore the prompt.

Note

Make sure the network that your development machine is connected to permits Advanced Message Queueing Protocol over port 5671. This setup enables Azure IoT Tools to communicate with Azure IoT Hub.

Set up Azure resources

Choose a compute device

To run the Spatial Analysis container, you need a compute device with an NVIDIA Tesla T4 GPU. We recommend that you use Azure Stack Edge with GPU acceleration; however, the container also runs on any other desktop machine or Azure VM that has Ubuntu Desktop 18.04 LTS installed on the host computer.

Azure Stack Edge is a Hardware-as-a-Service solution and an AI-enabled edge computing device with network data transfer capabilities. For detailed preparation and setup instructions, see the Azure Stack Edge documentation.

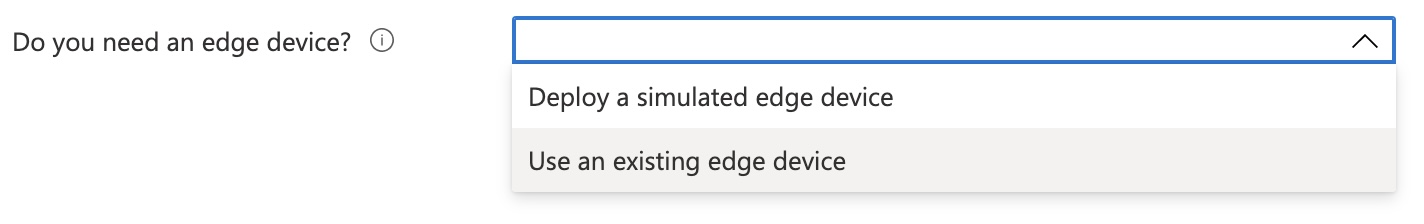

Set up the edge device

Next, deploy the other Azure resources.

Note

The deployment button for this step creates and uses a default virtual machine, which does NOT have an NVIDIA GPU. When the Azure Resource Manager (ARM) template asks, select the "Use existing edge device" option, and use the IoT Hub and the device information from the previous step.

The deployment process will take about 20 minutes. Upon completion, you will have certain Azure resources deployed in the Azure subscription, including:

- Video Analyzer account - This cloud service is used to register the Video Analyzer edge module, and for playing back recorded video and video analytics.

- Storage account - For storing recorded video and video analytics.

- Managed Identity - This is the user assigned managed identity used to manage access to the above storage account.

- Virtual machine - This is a virtual machine that will serve as your simulated edge device.

- IoT Hub - This acts as a central message hub for bi-directional communication between your IoT application, IoT Edge modules and the devices it manages.

In addition to the resources mentioned above, the following items are also created in the 'deployment-output' file share in your storage account, for use in quickstarts and tutorials:

- appsettings.json - This file contains the device connection string and other properties needed to run the sample application in Visual Studio Code.

- env.txt - This file contains the environment variables that you will need to generate deployment manifests using Visual Studio Code.

- deployment.json - This is the deployment manifest used by the template to deploy edge modules to the simulated edge device.

Tip

If you run into issues creating all of the required Azure resources, please use the manual steps in this quickstart.

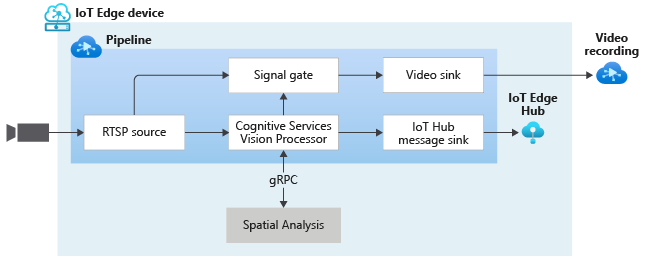

Overview

This diagram shows how the signals flow in this tutorial. An edge module simulates an IP camera hosting a Real-Time Streaming Protocol (RTSP) server. An RTSP source node pulls the video feed from this server and sends video frames to the CognitiveServicesVisionProcessor node.

The CognitiveServicesVisionProcessor node plays the role of a proxy. It converts the video frames to the specified image type. Then it relays the image over shared memory to another edge module that runs AI operations behind a gRPC endpoint. In this example, that edge module is the spatial-analysis module. The CognitiveServicesVisionProcessor node does two things:

- It gathers the results and publishes events to the IoT Hub sink node. The node then sends those events to IoT Edge Hub.

- It also captures a 30-second video clip from the RTSP source by using a signal gate processor, and records the clip through a video sink.
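The signal flow above can be pictured as a small directed graph. The following minimal Python sketch, which is only an illustration and not part of the sample, models the nodes described in this section and checks that both sinks are reachable from the RTSP source. The node names are hypothetical labels; the actual topology file may name its nodes differently. The signal gate is drawn with two inputs (the video from the RTSP source and the events from the CognitiveServicesVisionProcessor node), which is an interpretation of the description above.

```python
from collections import deque

# Hypothetical node names for the nodes described in the prose; the actual
# topology JSON may use different identifiers.
EDGES = {
    "rtspSource": ["cognitiveServicesVisionProcessor", "signalGateProcessor"],
    "cognitiveServicesVisionProcessor": ["iotHubSink", "signalGateProcessor"],
    "signalGateProcessor": ["videoSink"],
    "iotHubSink": [],
    "videoSink": [],
}


def reachable_from(source, edges):
    """Return every node reachable from `source` using breadth-first search."""
    seen = {source}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nxt in edges.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def sinks(edges):
    """Treat nodes with no outgoing edges as sinks."""
    return {name for name, targets in edges.items() if not targets}


if __name__ == "__main__":
    reached = reachable_from("rtspSource", EDGES)
    print(sorted(sinks(EDGES) & reached))  # ['iotHubSink', 'videoSink']
```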

Create the Computer Vision resource

Create an Azure resource of type Computer Vision in the Standard S1 tier, either in the Azure portal or by using the Azure CLI.

Set up your development environment

Get the sample code

Clone the AVA C# samples repository.

Start Visual Studio Code, and open the folder where the repo has been downloaded.

In Visual Studio Code, browse to the src/cloud-to-device-console-app folder and create a file named appsettings.json. This file contains the settings needed to run the program.

Browse to the file share in the storage account created in the setup step above, and locate the appsettings.json file under the "deployment-output" file share. Select the file, and then select the "Download" button. The contents should open in a new browser tab and should look like this:

{ "IoThubConnectionString" : "HostName=xxx.azure-devices.net;SharedAccessKeyName=iothubowner;SharedAccessKey=XXX", "deviceId" : "avasample-iot-edge-device", "moduleId" : "avaedge" }

The IoT Hub connection string lets you use Visual Studio Code to send commands to the edge modules via Azure IoT Hub. Copy the above JSON into the src/cloud-to-device-console-app/appsettings.json file.
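If you want to sanity-check the file before running the program, the following Python sketch parses appsettings.json and splits the connection string into its HostName, SharedAccessKeyName, and SharedAccessKey parts. It is a hypothetical helper that is not part of the AVA C# samples repository, and it checks only the three keys shown in the sample above.

```python
import json

# Keys that the sample appsettings.json above contains.
REQUIRED_KEYS = ("IoThubConnectionString", "deviceId", "moduleId")


def parse_connection_string(conn_str):
    """Split 'Key=Value;Key=Value' into a dict.

    Each segment is split on its first '=', so base64 keys that end in '='
    survive intact.
    """
    parts = {}
    for segment in conn_str.split(";"):
        if not segment:
            continue  # tolerate a trailing ';'
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {segment!r}")
        parts[key] = value
    return parts


def load_appsettings(text):
    """Parse appsettings.json text and check the fields this tutorial relies on."""
    settings = json.loads(text)
    missing = [key for key in REQUIRED_KEYS if not settings.get(key)]
    if missing:
        raise ValueError("appsettings.json is missing: " + ", ".join(missing))
    conn = parse_connection_string(settings["IoThubConnectionString"])
    for key in ("HostName", "SharedAccessKeyName", "SharedAccessKey"):
        if key not in conn:
            raise ValueError(f"connection string has no {key}")
    return settings, conn


if __name__ == "__main__":
    sample = (
        '{ "IoThubConnectionString" : "HostName=xxx.azure-devices.net;'
        'SharedAccessKeyName=iothubowner;SharedAccessKey=XXX", '
        '"deviceId" : "avasample-iot-edge-device", "moduleId" : "avaedge" }'
    )
    settings, conn = load_appsettings(sample)
    print(conn["HostName"])  # xxx.azure-devices.net
```

To check your real file, pass the text you read from src/cloud-to-device-console-app/appsettings.json to load_appsettings.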

Next, browse to the src/edge folder and create a file named .env. This file contains properties that Visual Studio Code uses to deploy modules to an edge device.

Browse to the file share in the storage account created in the setup step above, and locate the env.txt file under the "deployment-output" file share. Select the file, and then select the "Download" button. The contents should open in a new browser tab and should look like this:

SUBSCRIPTION_ID="<Subscription ID>"
RESOURCE_GROUP="<Resource Group>"
AVA_PROVISIONING_TOKEN="<Provisioning token>"
VIDEO_INPUT_FOLDER_ON_DEVICE="/home/localedgeuser/samples/input"
VIDEO_OUTPUT_FOLDER_ON_DEVICE="/var/media"
APPDATA_FOLDER_ON_DEVICE="/var/lib/videoanalyzer"
CONTAINER_REGISTRY_USERNAME_myacr="<your container registry username>"
CONTAINER_REGISTRY_PASSWORD_myacr="<your container registry password>"

Copy the contents of your env.txt file into the src/edge/.env file.
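Because env.txt holds one KEY="value" pair per line, a short Python sketch can flag any value that still looks like a <...> placeholder before you generate a deployment manifest. This is a hypothetical helper, not part of the samples repository, and it handles only simple KEY=value lines with optional quotes rather than every .env syntax variant.

```python
import re

# KEY=value, with optional whitespace around '=' and trailing whitespace trimmed.
LINE_RE = re.compile(r"^\s*([A-Za-z_][A-Za-z0-9_]*)\s*=\s*(.*?)\s*$")


def parse_env(text):
    """Parse simple KEY=value lines, skipping blanks and '#' comments."""
    values = {}
    for lineno, line in enumerate(text.splitlines(), start=1):
        if not line.strip() or line.lstrip().startswith("#"):
            continue
        match = LINE_RE.match(line)
        if not match:
            raise ValueError(f"line {lineno}: cannot parse {line!r}")
        key, raw = match.groups()
        # Strip one pair of matching surrounding quotes, if present.
        if len(raw) >= 2 and raw[0] == raw[-1] and raw[0] in "\"'":
            raw = raw[1:-1]
        values[key] = raw
    return values


def unfilled_placeholders(values):
    """Return the keys whose value still looks like a '<...>' placeholder."""
    return sorted(k for k, v in values.items() if v.startswith("<") and v.endswith(">"))


if __name__ == "__main__":
    # "my-resource-group" is an illustrative value, not a real resource.
    sample = (
        'SUBSCRIPTION_ID="<Subscription ID>"\n'
        'RESOURCE_GROUP="my-resource-group"\n'
        'VIDEO_OUTPUT_FOLDER_ON_DEVICE="/var/media"\n'
    )
    print(unfilled_placeholders(parse_env(sample)))  # ['SUBSCRIPTION_ID']
```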

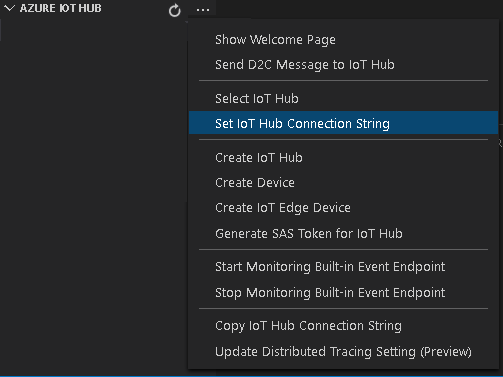

Connect to the IoT Hub

In Visual Studio Code, set the IoT Hub connection string by selecting the More actions icon next to the AZURE IOT HUB pane in the lower-left corner. Copy the string from the src/cloud-to-device-console-app/appsettings.json file.

Note

You might be asked to provide Built-in endpoint information for the IoT Hub. To get that information, in the Azure portal, navigate to your IoT Hub and look for the Built-in endpoints option in the left navigation pane. Select it and look for the Event Hub-compatible endpoint in the Event Hub compatible endpoint section. Copy and use the text in the box. The endpoint will look something like this:

Endpoint=sb://iothub-ns-xxx.servicebus.windows.net/;SharedAccessKeyName=iothubowner;SharedAccessKey=XXX;EntityPath=<IoT Hub name>

In about 30 seconds, refresh Azure IoT Hub in the lower-left section. You should see the edge device avasample-iot-edge-device, which should have the following modules deployed:

- Edge Hub (module name edgeHub)

- Edge Agent (module name edgeAgent)

- Video Analyzer (module name avaedge)

- RTSP simulator (module name rtspsim)

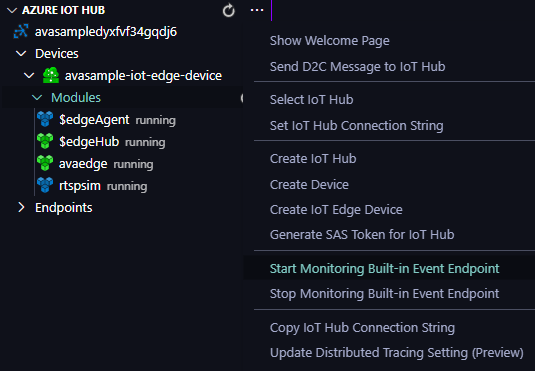

Prepare to monitor the modules

When you run this tutorial, events are sent to the IoT Hub. To see these events, follow these steps:

Open the Explorer pane in Visual Studio Code, and look for Azure IoT Hub in the lower-left corner.

Expand the Devices node.

Right-click avasample-iot-edge-device, and select Start Monitoring Built-in Event Endpoint.

Note

You might be asked to provide Built-in endpoint information for the IoT Hub. To get that information, in the Azure portal, navigate to your IoT Hub and look for the Built-in endpoints option in the left navigation pane. Select it and look for the Event Hub-compatible endpoint in the Event Hub compatible endpoint section. Copy and use the text in the box. The endpoint will look something like this:

Endpoint=sb://iothub-ns-xxx.servicebus.windows.net/;SharedAccessKeyName=iothubowner;SharedAccessKey=XXX;EntityPath=<IoT Hub name>
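The Event Hub-compatible endpoint is a semicolon-separated list of Key=Value pairs. As a hypothetical helper (not part of any Azure tool), the following Python sketch checks that a copied endpoint has an sb:// namespace address and an EntityPath before you paste it into Visual Studio Code.

```python
from urllib.parse import urlparse


def parse_event_hub_endpoint(endpoint):
    """Split an Event Hub-compatible endpoint string and validate its key parts."""
    fields = {}
    for segment in endpoint.split(";"):
        if segment:
            # Split on the first '=' only, so base64 keys keep their padding.
            key, _, value = segment.partition("=")
            fields[key] = value
    url = urlparse(fields.get("Endpoint", ""))
    if url.scheme != "sb" or not url.hostname:
        raise ValueError("Endpoint must look like sb://<namespace>.servicebus.windows.net/")
    if not fields.get("EntityPath"):
        raise ValueError("EntityPath is missing")
    return {
        "namespace_host": url.hostname,
        "entity_path": fields["EntityPath"],
        "key_name": fields.get("SharedAccessKeyName"),
    }


if __name__ == "__main__":
    # "my-iot-hub" is an illustrative hub name.
    sample = ("Endpoint=sb://iothub-ns-xxx.servicebus.windows.net/;"
              "SharedAccessKeyName=iothubowner;SharedAccessKey=XXX;EntityPath=my-iot-hub")
    print(parse_event_hub_endpoint(sample)["entity_path"])  # my-iot-hub
```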

Configure deployment template

The deployment template is located at /src/edge/deployment.spatialAnalysis.ase.template.json. It contains the avaedge module, the rtspsim module, and the spatialanalysis module.

In your deployment template file:

- The deployment manifest uses port 50051 to communicate between the avaedge and spatialanalysis modules. If the port is being used by any other application, set the port binding in the spatialanalysis module to an open port:

"PortBindings": { "50051/tcp": [ { "HostPort": "50051" } ] }

- IpcMode in the avaedge and spatialanalysis module createOptions should be the same and set to host.

- For the RTSP simulator to work, ensure that you have set up the volume mounts when using an Azure Stack Edge device.

Connect to the SMB share and copy the sample retail shop video file to the Local share.

Verify that the rtspsim module has the following configuration:

"createOptions": { "HostConfig": { "Mounts": [ { "Target": "/live/mediaServer/media", "Source": "<your Local Docker Volume Mount name>", "Type": "volume" } ], "PortBindings": { "554/tcp": [ { "HostPort": "554" } ] } } }

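To double-check the settings called out above before generating the manifest, the following Python sketch reads the modules from a deployment template and reports problems. It assumes the standard IoT Edge manifest layout (modulesContent, $edgeAgent, properties.desired, modules) and that IpcMode sits under HostConfig in createOptions, as in Docker's container create options; the helper names are hypothetical. It does not check the 50051 binding, because you may deliberately change that port.

```python
import json


def create_options(manifest, module):
    """Return a module's createOptions as a dict.

    Templates often hold createOptions as an object, while generated manifests
    hold it as a JSON string, so both forms are accepted.
    """
    modules = manifest["modulesContent"]["$edgeAgent"]["properties.desired"]["modules"]
    options = modules[module]["settings"].get("createOptions", {})
    return json.loads(options) if isinstance(options, str) else options


def check_template(manifest):
    """Return a list of problems with the settings this tutorial calls out."""
    problems = []
    host_configs = {
        name: create_options(manifest, name).get("HostConfig", {})
        for name in ("avaedge", "spatialanalysis", "rtspsim")
    }
    # IpcMode must be 'host' in both modules so they can share memory.
    for name in ("avaedge", "spatialanalysis"):
        if host_configs[name].get("IpcMode") != "host":
            problems.append(f"{name}: HostConfig.IpcMode should be 'host'")
    # rtspsim must mount the video volume and expose RTSP on 554.
    mounts = host_configs["rtspsim"].get("Mounts", [])
    if not any(m.get("Target") == "/live/mediaServer/media" for m in mounts):
        problems.append("rtspsim: no mount targets /live/mediaServer/media")
    if "554/tcp" not in host_configs["rtspsim"].get("PortBindings", {}):
        problems.append("rtspsim: port 554/tcp is not bound")
    return problems
```

For example, load the template with json.load and pass the resulting dictionary to check_template; an empty list means none of these checks found a problem.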
The following table lists the environment variables used by the spatialanalysis IoT Edge module. You can set them in the deployment manifest mentioned above, by using the env attribute of the spatialanalysis module:

| Setting Name | Value | Description |

|---|---|---|

| DISPLAY | :1 | This value needs to be same as the output of echo $DISPLAY on the host computer. Azure Stack Edge devices do not have a display. This setting is not applicable |

| ARCHON_SHARED_BUFFER_LIMIT | 377487360 | Do not modify |

| ARCHON_LOG_LEVEL | Info; Verbose | Logging level, select one of the two values |

| QT_X11_NO_MITSHM | 1 | Do not modify |

| OMP_WAIT_POLICY | PASSIVE | Do not modify |

| EULA | accept | This value needs to be set to accept for the container to run |

| ARCHON_TELEMETRY_IOTHUB | true | Set this value to true to send the telemetry events to IoT Hub |

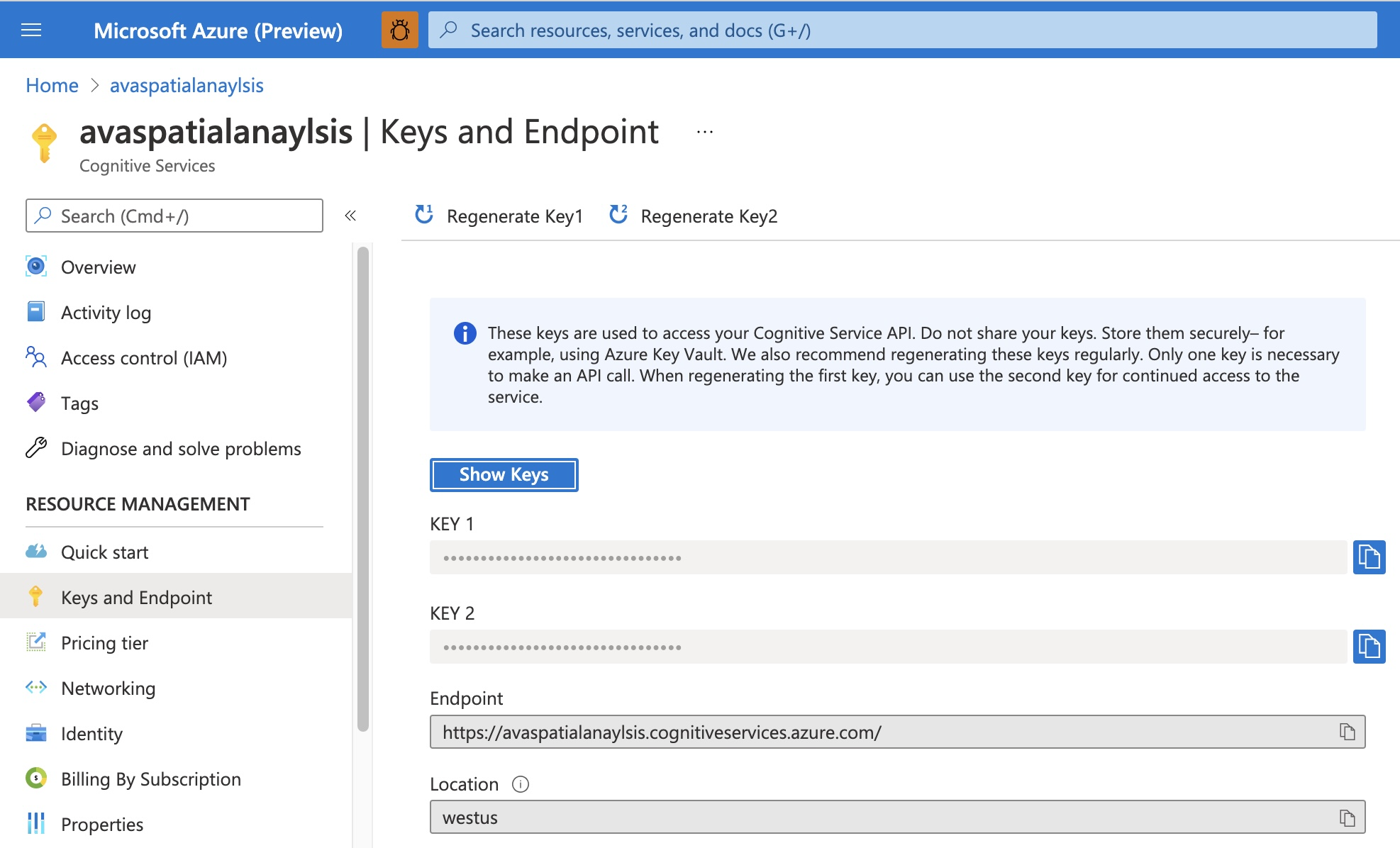

| BILLING | your Endpoint URI | Collect this value from Azure portal from your Computer Vision resource. You can find it in the Keys and Endpoint blade for your resource. |

| APIKEY | your API Key | Collect this value from Azure portal from your Computer Vision resource. You can find it in the Keys and Endpoint blade for your resource. |

| LAUNCHER_TYPE | avaBackend | Do not modify |

| ARCHON_GRAPH_READY_TIMEOUT | 600 | Add this environment variable if your GPU is not T4 or NVIDIA 2080 Ti |

Important

The Eula, Billing, and ApiKey options must be specified to run the container; otherwise, the container won't start.
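Module environment variables in an IoT Edge deployment manifest are written as "NAME": { "value": "..." } entries under the module's env attribute. The following Python sketch, with hypothetical helper names, builds that structure from a flat dictionary and flags the cases described in the Important note above: a missing EULA, BILLING, or APIKEY value, or an EULA value other than accept.

```python
# Settings the container needs in order to start, per the note above.
REQUIRED = ("EULA", "BILLING", "APIKEY")


def build_env(settings):
    """Convert {"NAME": value} into IoT Edge's env format {"NAME": {"value": "..."}}."""
    return {name: {"value": str(value)} for name, value in settings.items()}


def validate_env(env):
    """Return a list of problems with the required spatialanalysis settings."""
    problems = [f"{name} is not set" for name in REQUIRED
                if not env.get(name, {}).get("value")]
    eula = env.get("EULA", {}).get("value")
    if eula and eula != "accept":
        problems.append("EULA must be 'accept'")
    return problems


if __name__ == "__main__":
    print(validate_env(build_env({"EULA": "accept"})))
    # ['BILLING is not set', 'APIKEY is not set']
```

In a real manifest, BILLING and APIKEY come from the Keys and Endpoint page described below; avoid committing the API key to source control.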

Gathering Keys and Endpoint URI

An API key is used to start the spatial-analysis container. It is available on the Keys and Endpoint page of your Computer Vision resource in the Azure portal. Navigate to that page, and find the key and the endpoint URI that the spatialanalysis container needs.

Generate and deploy the deployment manifest

The deployment manifest defines what modules are deployed to an edge device. It also defines configuration settings for those modules.

Follow these steps to generate the manifest from the template file and then deploy it to the edge device.

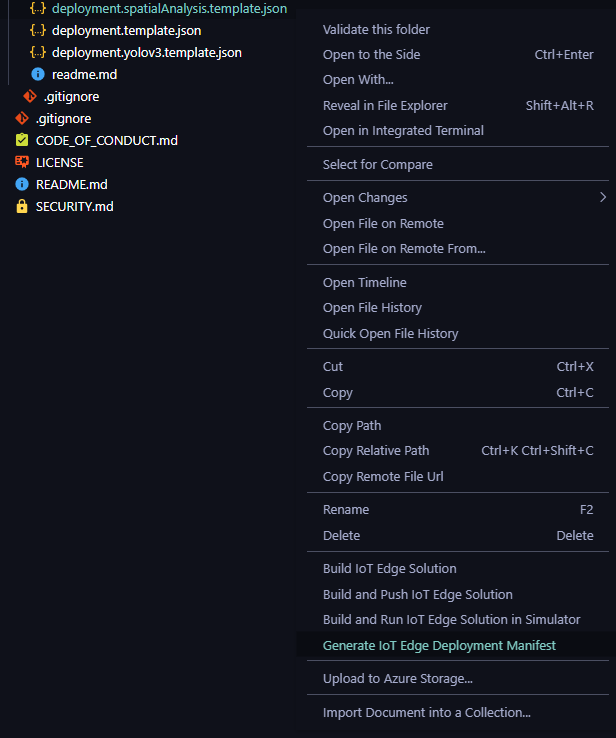

Open Visual Studio Code.

Next to the AZURE IOT HUB pane, select the More actions icon to set the IoT Hub connection string. You can copy the string from the src/cloud-to-device-console-app/appsettings.json file.

In your Folder explorer, right-click your deployment template file and select Generate IoT Edge Deployment Manifest.

This action should create a manifest file in the src/edge/config folder.

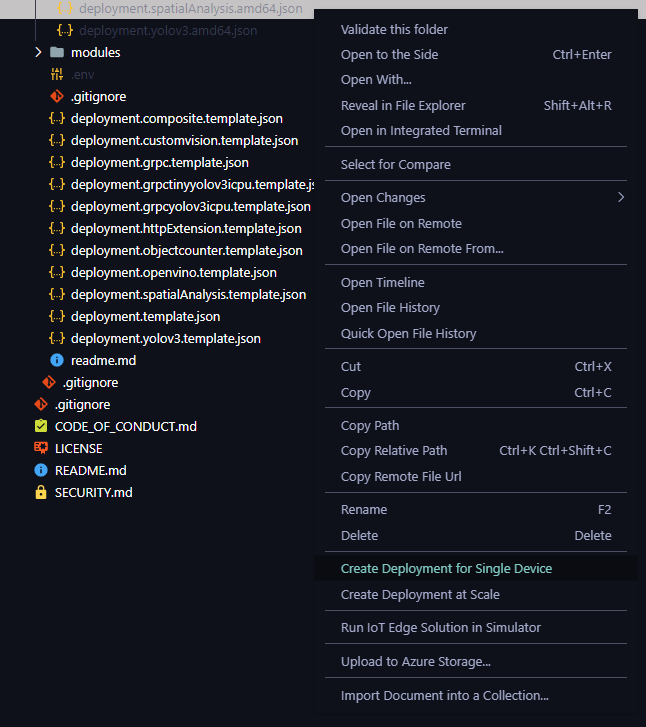

Right-click the generated manifest file and select Create Deployment for Single Device. When you're prompted at the top of the page to select an IoT Hub device, choose your edge device name from the drop-down menu.

After about 30 seconds, refresh the AZURE IOT HUB pane in the lower-left corner of the window. The edge device now shows the following deployed modules:

- Azure Video Analyzer (module name avaedge).

- Real-Time Streaming Protocol (RTSP) simulator (module name rtspsim).

- Spatial Analysis (module name spatialanalysis).

Upon successful deployment, a message like this appears in the OUTPUT window:

[Edge] Start deployment to device [<edge device name>]

[Edge] Deployment succeeded.

Then you can find avaedge, spatialanalysis and rtspsim modules under Devices/Modules, and their status should be "running".

Prepare to monitor events

To see the events that the modules send to IoT Hub, follow these steps:

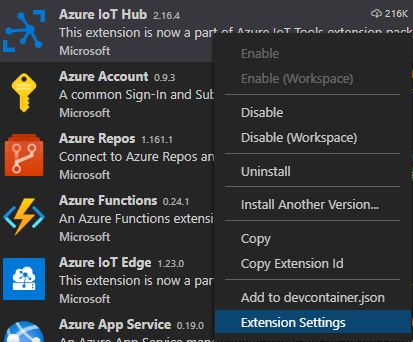

In Visual Studio Code, open the Extensions tab (or press Ctrl+Shift+X) and search for Azure IoT Hub.

Right-click and select Extension Settings.

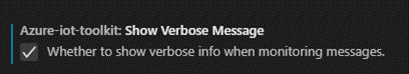

Search and enable “Show Verbose Message”.

Open the Explorer pane and look for AZURE IOT HUB in the lower-left corner, right-click, and select Start Monitoring Built-in Event Endpoint.

Run the program

The program.cs file invokes the direct methods in src/cloud-to-device-console-app/operations.json. You will need to edit the operations.json file and update the pipeline topology URL, the name of the topology, and the RTSP URL.

In operations.json:

Set the pipeline topology like this:

{ "opName": "pipelineTopologySet", "opParams": { "pipelineTopologyUrl": "https://raw.githubusercontent.com/Azure/video-analyzer/main/pipelines/live/topologies/spatial-analysis/person-zone-crossing-operation-topology.json" } },

- Under livePipelineSet, edit the name of the topology to match the value in the preceding link: "topologyName" : "PersonZoneCrossingTopology"

- Under pipelineTopologyDelete, edit the name: "name" : "PersonZoneCrossingTopology"

Important

The topology used above has a hard-coded name for the VideoSink resource videoSink. If you decide to choose a different video source, remember to change this value.

Create a live pipeline like the following, setting the pipeline topology parameters here:

{ "opName": "livePipelineSet", "opParams": { "name": "Sample-Pipeline-1", "properties": { "topologyName": "PersonZoneCrossingTopology", "description": "Sample pipeline description", "parameters": [ { "name": "rtspUrl", "value": "rtsp://rtspsim:554/media/retailshop-15fps.mkv" }, { "name": "rtspUserName", "value": "testuser" }, { "name": "rtspPassword", "value": "testpassword" } ] } } },
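If you prefer to script the edits to operations.json, the following Python sketch applies the changes described in this step to a list of operation objects shaped like the snippets above. It assumes you have already extracted that list from the file (the file's top-level layout is not assumed here), and update_operations and set_pipeline_parameter are hypothetical helpers, not part of the sample.

```python
TOPOLOGY_URL = (
    "https://raw.githubusercontent.com/Azure/video-analyzer/main/pipelines/live/"
    "topologies/spatial-analysis/person-zone-crossing-operation-topology.json"
)
TOPOLOGY_NAME = "PersonZoneCrossingTopology"


def set_pipeline_parameter(properties, name, value):
    """Set (or add) a {"name": ..., "value": ...} entry in a live pipeline's parameters."""
    params = properties.setdefault("parameters", [])
    for entry in params:
        if entry.get("name") == name:
            entry["value"] = value
            return
    params.append({"name": name, "value": value})


def update_operations(operations, rtsp_url):
    """Edit a list of operation dicts in place, as this step describes."""
    for op in operations:
        params = op.setdefault("opParams", {})
        name = op.get("opName")
        if name == "pipelineTopologySet":
            params["pipelineTopologyUrl"] = TOPOLOGY_URL
        elif name == "livePipelineSet":
            properties = params.setdefault("properties", {})
            properties["topologyName"] = TOPOLOGY_NAME
            set_pipeline_parameter(properties, "rtspUrl", rtsp_url)
        elif name == "pipelineTopologyDelete":
            params["name"] = TOPOLOGY_NAME
    return operations
```

For example, after loading the file with json.load, pass its list of operations together with rtsp://rtspsim:554/media/retailshop-15fps.mkv, and then write the result back with json.dump.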

Start a debug session by pressing F5, and follow the instructions in the TERMINAL window. The program sets the pipeline topology, sets the live pipeline, activates the live pipeline, and finally deletes the resources.

Note

The program pauses at the activate live pipeline step. Open the Terminal tab and press Enter to continue to the steps that deactivate and delete the resources.

Interpret results

The spatialanalysis module runs a large container, and its startup can take up to 30 seconds. Once the spatialanalysis container is up and running, it starts to send inference events. You will see events such as:

[IoTHubMonitor] [3:37:28 PM] Message received from [ase03-edge/avaedge]:

{

"sdp": "SDP:\nv=0\r\no=- 1620671848135494 1 IN IP4 172.27.86.122\r\ns=Matroska video+audio+(optional)subtitles, streamed by the LIVE555 Media Server\r\ni=media/cafeteria.mkv\r\nt=0 0\r\na=tool:LIVE555 Streaming Media v2020.08.19\r\na=type:broadcast\r\na=control:*\r\na=range:npt=0-300.066\r\na=x-qt-text-nam:Matroska video+audio+(optional)subtitles, streamed by the LIVE555 Media Server\r\na=x-qt-text-inf:media/retailshop-15fps.mkv\r\nm=video 0 RTP/AVP 96\r\nc=IN IP4 0.0.0.0\r\nb=AS:500\r\na=rtpmap:96 H264/90000\r\na=fmtp:96 packetization-mode=1;profile-level-id=640028;sprop-parameter-sets=Z2QAKKzZQHgCHoQAAAMABAAAAwDwPGDGWA==,aOvssiw=\r\na=control:track1\r\n"

}

[IoTHubMonitor] [3:37:30 PM] Message received from [ase03-edge/avaedge]:

{

"type": "video",

"location": "/videos/<your video name>",

"startTime": "2021-05-10T18:37:27.931Z"

}

[IoTHubMonitor] [3:37:40 PM] Message received from [ase03-edge/avaedge]:

{

"state": "initializing"

}

[IoTHubMonitor] [3:37:50 PM] Message received from [ase03-edge/avaedge]:

{

"state": "initializing"

}

[IoTHubMonitor] [3:38:18 PM] Message received from [ase03-edge/avaedge]:

{

"type": "video",

"location": "/videos/<your video name>",

"startTime": "2021-05-10T18:37:27.931Z"

}

Note

You will see the "initializing" messages. These messages show up while the spatialAnalysis module is starting up, and the module can take up to 60 seconds to reach a running state. Be patient; you should then see the inference events flow through.

When a pipeline topology is instantiated, you should see a "MediaSessionEstablished" event. The first message in the sample output above, which carries the session's SDP, is an example of this event.

The spatialanalysis module also sends AI Insight events to Azure Video Analyzer, which forwards them to IoT Hub; these events also appear in the OUTPUT window. The AI insights are recorded along with the video via the video sink node. You can use Video Analyzer to view them, as discussed below.
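When you scan the OUTPUT window, it can help to sort messages by what they carry. The following Python sketch is a rough heuristic, not an official event schema: it labels a message body by the keys seen in the sample output above (sdp, type and location, or state) and counts the labels. The labels themselves are hypothetical.

```python
def classify(body):
    """Label an event body by the keys it carries (heuristic only)."""
    if "sdp" in body:
        return "media-session"
    if body.get("type") == "video" and "location" in body:
        return "video-recording"
    if "state" in body:
        return f"module-state:{body['state']}"
    return "other"


def summarize(bodies):
    """Count event bodies per label, in first-seen order."""
    counts = {}
    for body in bodies:
        label = classify(body)
        counts[label] = counts.get(label, 0) + 1
    return counts


if __name__ == "__main__":
    # Bodies shaped like the sample output above (values shortened).
    sample = [
        {"sdp": "SDP:..."},
        {"type": "video", "location": "/videos/<your video name>", "startTime": "2021-05-10T18:37:27.931Z"},
        {"state": "initializing"},
        {"state": "initializing"},
        {"type": "video", "location": "/videos/<your video name>", "startTime": "2021-05-10T18:37:27.931Z"},
    ]
    print(summarize(sample))
    # {'media-session': 1, 'video-recording': 2, 'module-state:initializing': 2}
```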

Supported Spatial Analysis Operations

Here are the operations that the spatialAnalysis module offers and that Azure Video Analyzer supports:

- personZoneCrossing

- personCrossingLine

- personDistance

- personCount

- customOperation

Read our supported Spatial Analysis operations reference document to learn more about the different operations and the properties supported in them.

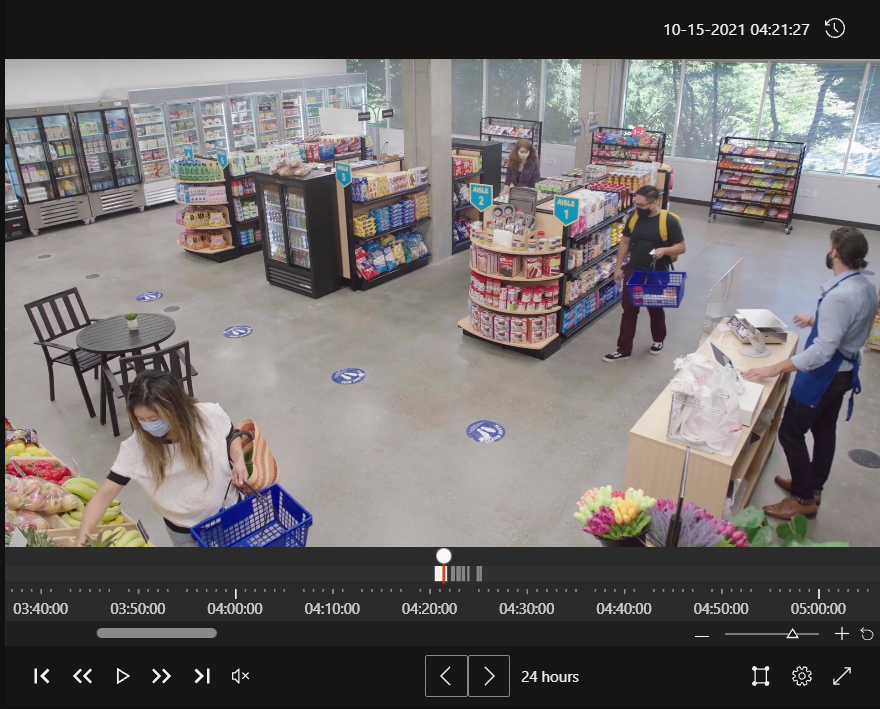

Playing back the recording

You can examine the Video Analyzer video resource that was created by the live pipeline by logging in to the Azure portal and viewing the video.

Open your web browser, and go to the Azure portal. Enter your credentials to sign in to the portal. The default view is your service dashboard.

Locate your Video Analyzers account among the resources you have in your subscription, and open the account pane.

Select Videos in the Video Analyzers list.

You'll find a video listed with the name personzonecrossing. This is the name chosen in your pipeline topology file. Select the video.

On the video details page, select the Play icon.

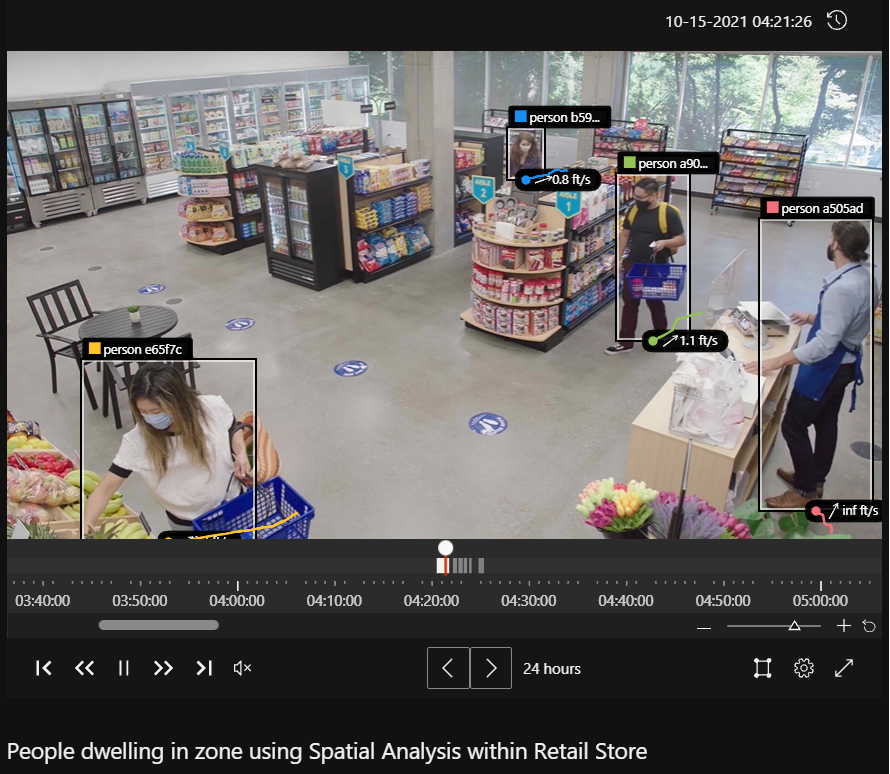

To view the inference metadata on the video, select the Metadata rendering icon.

You will find three options to view as an overlay on the video:

- Bounding boxes - Display a bounding box around each person, with a unique ID

- Attributes - Display person attributes such as speed (in ft/s) and orientation (using an arrow), when available

- Object path - Display a short trail for each person's movement, when available

Note

Because the source of the video was a container simulating a camera feed, the time stamps in the video are related to when you activated the live pipeline and when you deactivated it.

Next steps

To try the different operations that the spatialAnalysis module offers, refer to the following pipeline topologies: