This article answers some of the most common questions about the Fabric lifecycle management tools.

General questions

What is lifecycle management in Microsoft Fabric?

Lifecycle management has two parts: integration and deployment. To understand what integration is in Fabric, refer to the Git integration overview. To understand what deployment pipelines are in Fabric, refer to the deployment pipelines overview.

What is Git integration?

For a short explanation of Git integration, see the Git integration overview.

What are deployment pipelines?

For a short explanation of deployment pipelines, see the deployment pipelines overview.

Licensing questions

What licenses are needed to work with lifecycle management?

For information on licenses, see Fabric licenses.

What type of capacity do I need?

All workspaces must be assigned to a Fabric license. However, you can use different capacity types for different workspaces.

For information on capacity types, see Capacity and SKUs.

Notes

- PPU, EM, and A SKUs work only with Power BI items. If you add other Fabric items to the workspace, you need a trial, P, or F SKU.

- When you create a workspace with a PPU, only other PPU users can access the workspace and consume its content.
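
The SKU note above can be expressed as a small check. This is a minimal sketch, assuming SKU labels are written as a family prefix followed by a size (for example F64 or EM3); the function names and the label parsing are hypothetical and are not part of any Fabric API.

```python
# SKU families as described in the notes above. The set names and the
# parsing of labels such as "F64" are illustrative assumptions.
POWER_BI_ONLY_FAMILIES = {"PPU", "EM", "A"}
FULL_FABRIC_FAMILIES = {"TRIAL", "P", "F"}


def sku_family(sku: str) -> str:
    """Reduce a SKU label such as 'F64' or 'EM3' to its family ('F', 'EM')."""
    label = sku.strip().upper()
    if label in ("PPU", "TRIAL"):
        return label
    # Drop the trailing size digits, keeping only the family prefix.
    return label.rstrip("0123456789")


def supports_item(sku: str, is_power_bi_item: bool) -> bool:
    """Return True if a workspace on this SKU can hold the given kind of item."""
    family = sku_family(sku)
    if family in FULL_FABRIC_FAMILIES:
        return True
    if family in POWER_BI_ONLY_FAMILIES:
        return is_power_bi_item
    raise ValueError(f"Unknown SKU family: {sku!r}")
```

For instance, `supports_item("EM3", is_power_bi_item=False)` reflects the case where a non-Power BI item is added to a workspace on an EM SKU.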

Permissions

What is the deployment pipelines permissions model?

The deployment pipelines permissions model is described in the Permissions section.

What permissions do I need to configure deployment rules?

To configure deployment rules in deployment pipelines, you must be the owner of the semantic model.

Git integration questions

Can I connect to a repository that is in a different region than my workspace?

If the workspace capacity is in one geographic location while the Azure DevOps repository is in another, the Fabric admin can decide whether to enable cross-geo exports. For more information, see Users can export items to Git repositories in other geographical locations.

How do I get started with Git integration?

Get started with Git integration by following the get started instructions.

Why was my item deleted from the workspace?

There can be several reasons why an item was deleted from the workspace.

- If the item wasn't committed and you selected it in an undo action, the item is deleted from the workspace.

- If the item was committed, it can be deleted if you switch branches and the item doesn't exist in the new branch.

Deployment pipeline questions

What are some general deployment limitations to keep in mind?

The following considerations are important to keep in mind:

How can I assign workspaces to all the stages in a pipeline?

You can either assign one workspace to your pipeline and deploy it across the whole pipeline, or assign a different workspace to each pipeline stage. For more information, see Assign a workspace to a deployment pipeline.

What can I do if I have a dataset with DirectQuery or Composite connectivity mode that uses variation or auto date/time tables?

Datasets that use DirectQuery or Composite connectivity mode and have variation or auto date/time tables aren't supported in deployment pipelines. If your deployment fails and you think it's because you have a dataset with a variation table, look for the variations property in your table's columns. You can use one of the following methods to edit your semantic model so that it works in deployment pipelines.

- In your dataset, use import mode instead of DirectQuery or Composite mode.

- Remove the auto date/time tables from your semantic model. If necessary, delete any remaining variations from all the columns in your tables. Deleting a variation can invalidate user-authored measures, calculated columns, and calculated tables. Use this method only if you understand how your semantic model works, because it can corrupt the data in your visuals.
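
Looking for the variations property can be scripted if you have the model definition as JSON. The following is a minimal sketch, assuming a model.bim-style layout in which tables sit under `model.tables` and each column can carry a `variations` array; that layout, the function name, and the sample data are assumptions for illustration only.

```python
import json


def columns_with_variations(model_definition: dict) -> list[tuple[str, str]]:
    """List (table name, column name) pairs whose column has a non-empty
    'variations' property in the assumed JSON layout."""
    found = []
    tables = model_definition.get("model", {}).get("tables", [])
    for table in tables:
        for column in table.get("columns", []):
            if column.get("variations"):
                found.append((table.get("name", ""), column.get("name", "")))
    return found


# Hypothetical sample definition, used only to show the shape of the input.
sample = json.loads("""
{
  "model": {
    "tables": [
      {
        "name": "Sales",
        "columns": [
          {"name": "OrderDate", "variations": [{"name": "Variation"}]},
          {"name": "Amount"}
        ]
      },
      {"name": "Products", "columns": [{"name": "ProductName"}]}
    ]
  }
}
""")

flagged = columns_with_variations(sample)
```

Any pair returned by the function points at a column worth reviewing before you retry the deployment.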

Why do some tiles not display information after deployment?

When you pin a tile to a dashboard, if the tile relies on an unsupported item (any item that isn't on this list isn't supported) or on an item that you don't have permissions to deploy, the tile doesn't render after the dashboard is deployed. For example, if you create a tile from a report that relies on a semantic model you're not an admin of, you get an error warning when you deploy the report. However, when you deploy the dashboard with the tile, you don't get an error message and the deployment succeeds, but the tile doesn't display any information.

Paginated reports

Who is the owner of a deployed paginated report?

The owner of a deployed paginated report is the user who deployed the report. When you deploy a paginated report for the first time, you become the owner of the report.

If you deploy a paginated report to a stage that already contains a copy of that paginated report, you overwrite the previous report and become its owner in place of the previous owner. In such cases, you need credentials for the underlying data source so that the data can be used in the paginated report.

Where are my paginated report subreports?

Paginated report subreports are kept in the same folder that holds your paginated report. To avoid rendering problems, when you use selective copy to copy a paginated report with subreports, select both the parent report and the subreports.

How do I create a deployment rule for a paginated report with a Fabric semantic model?

You can create paginated report rules if you want to point the paginated report to the semantic model in the same stage. When you create a deployment rule for a paginated report, you need to select a database and a server.

If you're setting a deployment rule for a paginated report that doesn't have a Fabric semantic model, the target data source is external, so you need to specify both the server and the database.

However, paginated reports that use a Fabric semantic model use an internal semantic model. In such cases, you can't rely on the data source name to identify the Fabric semantic model you're connecting to. The data source name doesn't change when you update it in the target stage, whether by creating a data source rule or by calling the update data source API. When you set a deployment rule, keep the database format and replace the semantic model object ID in the database field. Because the semantic model is internal, the server stays the same.

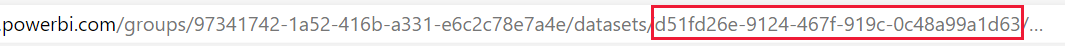

Database: The database format for a paginated report with a Fabric semantic model is sobe_wowvirtualserver-<dataset ID>. For example: sobe_wowvirtualserver-d51fd26e-9124-467f-919c-0c48a99a1d63. Replace <dataset ID> with the ID of your dataset. You can get the dataset ID from the URL by selecting the GUID that comes after datasets/ and before the next forward slash.

Server: The server that hosts your database. Keep the existing server as is.
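
The database value described above can be built from a dataset URL with a short script. This is a minimal sketch: the function names and the sample URL are hypothetical, and the pattern also accepts a GUID at the very end of the URL, which goes slightly beyond the "before the next forward slash" rule stated above.

```python
import re

# Standard 8-4-4-4-12 hexadecimal GUID pattern.
_GUID = r"[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}"


def dataset_id_from_url(url: str) -> str:
    """Return the GUID that follows 'datasets/' and precedes the next slash
    (or the end of the URL)."""
    match = re.search(rf"datasets/({_GUID})(?=/|$)", url)
    if match is None:
        raise ValueError("No dataset ID found after 'datasets/' in the URL")
    return match.group(1)


def paginated_report_database(dataset_id: str) -> str:
    """Build the database value in the sobe_wowvirtualserver-<dataset ID> format."""
    return f"sobe_wowvirtualserver-{dataset_id}"


# Hypothetical URL; only the 'datasets/<GUID>/' segment matters here.
example_url = (
    "https://example.invalid/groups/my-workspace/"
    "datasets/d51fd26e-9124-467f-919c-0c48a99a1d63/details"
)
database = paginated_report_database(dataset_id_from_url(example_url))
```

The resulting value goes in the database field of the deployment rule, while the server field keeps its existing value.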

After deployment, can I download the RDL file of the paginated report?

After a deployment, if you download the RDL of the paginated report, it might not be updated with the latest version that you can see in the Power BI service.

Dataflows

What happens to the incremental refresh configuration after deploying dataflows?

When you have a dataflow that contains semantic models configured with incremental refresh, the refresh policy isn't copied or overwritten during deployment. After you deploy a dataflow that includes a semantic model with incremental refresh to a stage that doesn't include this dataflow, if you have a refresh policy, you need to reconfigure it in the target stage. If you deploy a dataflow with incremental refresh to a stage where it already resides, the incremental refresh policy isn't copied. In such cases, if you want to update the refresh policy in the target stage, you need to do it manually.

Datamarts

Where is my datamart's dataset?

Deployment pipelines don't display datasets that belong to datamarts in the pipeline stages. When you deploy a datamart, its dataset is also deployed. You can view your datamart's dataset in the workspace of the stage it's in.