Send data to Microsoft Fabric from a data processor pipeline

Important

Azure IoT Operations Preview – enabled by Azure Arc is currently in PREVIEW. You shouldn't use this preview software in production environments.

You'll need to deploy a new Azure IoT Operations installation when a generally available release becomes available. You won't be able to upgrade a preview installation.

See the Supplemental Terms of Use for Microsoft Azure Previews for legal terms that apply to Azure features that are in beta, preview, or otherwise not yet released into general availability.

Use the Fabric Lakehouse destination to write data to a lakehouse in Microsoft Fabric from a data processor pipeline. The destination stage writes parquet files to a lakehouse that lets you view the data in delta tables. The destination stage batches messages before it sends them to Microsoft Fabric.

Prerequisites

To configure and use a Microsoft Fabric destination pipeline stage, you need:

- A deployed instance of the data processor.

- A Microsoft Fabric subscription. Or, sign up for a free Microsoft Fabric trial capacity.

- A lakehouse in Microsoft Fabric.

Set up Microsoft Fabric

Before you can write to Microsoft Fabric from a data pipeline, you need to grant access to the lakehouse from the pipeline. You can use either a service principal or a managed identity to authenticate the pipeline. The advantage of using a managed identity is that you don't need to manage the lifecycle of the service principal. The managed identity is automatically managed by Azure and is tied to the lifecycle of the resource it's assigned to.

Before you configure either service principal or managed identity access to a lakehouse, enable service principal authentication.

To create a service principal with a client secret:

Use the following Azure CLI command to create a service principal.

az ad sp create-for-rbac --name <YOUR_SP_NAME>

The output of this command includes an appId, displayName, password, and tenant. Make a note of these values to use when you configure access to your cloud resource such as Microsoft Fabric, create a secret, and configure a pipeline destination:

{
  "appId": "<app-id>",
  "displayName": "<name>",
  "password": "<client-secret>",
  "tenant": "<tenant-id>"
}

To add the service principal to your Microsoft Fabric workspace:

Make a note of your workspace ID and lakehouse ID. You can find these values in the URL that you use to access your lakehouse:

https://msit.powerbi.com/groups/<your workspace ID>/lakehouses/<your lakehouse ID>?experience=data-engineering

In your workspace, select Manage access:

Select Add people or groups:

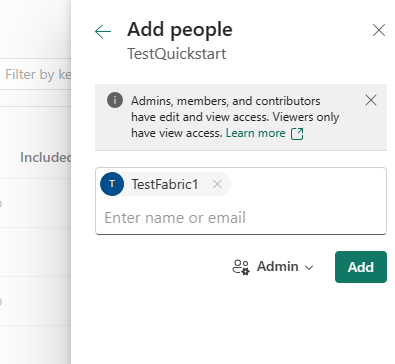

Search for your service principal by name. Start typing to see a list of matching service principals. Select the service principal you created earlier:

Grant your service principal admin access to the workspace.
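The workspace ID and lakehouse ID can also be read from the lakehouse URL programmatically. The following Python sketch assumes the URL has the path shape shown earlier (groups/<workspace ID>/lakehouses/<lakehouse ID>). The function name `lakehouse_ids` and the GUIDs in the usage lines are hypothetical placeholders, not values from a real workspace.

```python
from urllib.parse import urlparse


def lakehouse_ids(url):
    """Return (workspace_id, lakehouse_id) parsed from a lakehouse URL.

    Assumes the path looks like /groups/<workspace ID>/lakehouses/<lakehouse ID>.
    """
    parts = [segment for segment in urlparse(url).path.split("/") if segment]
    if len(parts) < 4 or parts[0] != "groups" or parts[2] != "lakehouses":
        raise ValueError(f"Unexpected lakehouse URL path: {url!r}")
    return parts[1], parts[3]


# Usage with placeholder GUIDs (hypothetical values).
example_url = (
    "https://msit.powerbi.com/groups/11111111-1111-1111-1111-111111111111"
    "/lakehouses/22222222-2222-2222-2222-222222222222?experience=data-engineering"
)
workspace_id, lakehouse_id = lakehouse_ids(example_url)
```

The query string (`?experience=data-engineering`) is ignored because only the URL path is inspected.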

Configure your secret

For the destination stage to connect to Microsoft Fabric, it needs access to a secret that contains the authentication details. To create a secret:

Use the following command to add a secret to your Azure Key Vault that contains the client secret you made a note of when you created the service principal:

az keyvault secret set --vault-name <your-key-vault-name> --name AccessFabricSecret --value <client-secret>

Add the secret reference to your Kubernetes cluster by following the steps in Manage secrets for your Azure IoT Operations Preview deployment.

Configure the destination stage

The Fabric Lakehouse destination stage JSON configuration defines the details of the stage. To author the stage, you can either interact with the form-based UI, or provide the JSON configuration on the Advanced tab:

| Field | Type | Description | Required | Default | Example |
|---|---|---|---|---|---|
| Display name | String | A name to show in the data processor UI. | Yes | - | MQTT broker output |
| Description | String | A user-friendly description of what the stage does. | No | | Write to topic default/topic1 |
| WorkspaceId | String | The lakehouse workspace ID. | Yes | - | |
| LakehouseId | String | The lakehouse ID. | Yes | - | |
| Table | String | The name of the table to write to. | Yes | - | |
| File path¹ | Template | The file path to write the Parquet file to. | No | {{{instanceId}}}/{{{pipelineId}}}/{{{partitionId}}}/{{{YYYY}}}/{{{MM}}}/{{{DD}}}/{{{HH}}}/{{{mm}}}/{{{fileNumber}}} | |
| Batch² | Batch | How to batch data. | No | 60s | 10s |
| Authentication⁴ | String | The authentication details to connect to Microsoft Fabric: Service principal or Managed identity. | Yes | - | Service principal |
| Retry | Retry | The retry policy to use. | No | default | fixed |
| Columns > Name | string | The name of the column. | Yes | | temperature |
| Columns > Type³ | string enum | The type of data held in the column, using one of the Delta primitive types. | Yes | | integer |
| Columns > Path | Path | The location within each record from which to read the value of the column. | No | .{{name}} | .temperature |

¹File path: To write files to Microsoft Fabric, you need a file path. You can use templates to configure file paths. File paths must contain the following components in any order:

- instanceId
- pipelineId
- partitionId
- YYYY
- MM
- DD
- HH
- mm
- fileNumber

The file names are incremental integer values as indicated by fileNumber. Be sure to include a file extension if you want your system to recognize the file type.
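To make the template rule concrete, here is a minimal Python sketch that checks a file path template for the required components and fills in the placeholders. It is an illustration only, not the data processor's implementation: the function names, the zero-padding of the date parts, and the sample IDs are all assumptions.

```python
import re
from datetime import datetime

# Components that the file path must contain, in any order.
REQUIRED = ("instanceId", "pipelineId", "partitionId",
            "YYYY", "MM", "DD", "HH", "mm", "fileNumber")
PLACEHOLDER = re.compile(r"\{\{\{(\w+)\}\}\}")

DEFAULT_TEMPLATE = (
    "{{{instanceId}}}/{{{pipelineId}}}/{{{partitionId}}}/"
    "{{{YYYY}}}/{{{MM}}}/{{{DD}}}/{{{HH}}}/{{{mm}}}/{{{fileNumber}}}"
)


def missing_components(template):
    """List the required components that the template does not mention."""
    present = set(PLACEHOLDER.findall(template))
    return [name for name in REQUIRED if name not in present]


def render_path(template, instance_id, pipeline_id, partition_id, when, file_number):
    """Fill in a template; zero-padded date parts are an assumption of this sketch."""
    missing = missing_components(template)
    if missing:
        raise ValueError(f"Template is missing components: {missing}")
    values = {
        "instanceId": str(instance_id),
        "pipelineId": str(pipeline_id),
        "partitionId": str(partition_id),
        "YYYY": f"{when.year:04d}",
        "MM": f"{when.month:02d}",
        "DD": f"{when.day:02d}",
        "HH": f"{when.hour:02d}",
        "mm": f"{when.minute:02d}",
        "fileNumber": str(file_number),
    }
    # Unknown placeholders are left untouched rather than guessed at.
    return PLACEHOLDER.sub(lambda m: values.get(m.group(1), m.group(0)), template)


# Usage: append a file extension so downstream tools recognize the file type.
example_path = render_path(DEFAULT_TEMPLATE + ".parquet", "proc1", "pipe1", 0,
                           datetime(2023, 8, 10, 0, 54), 7)
```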

²Batching: Batching is mandatory when you write data to Microsoft Fabric. The destination stage batches messages over a configurable time interval.

If you don't configure a batching interval, the stage uses 60 seconds as the default.
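As a rough mental model of time-based batching, the following Python sketch groups messages into fixed time windows and falls back to 60 seconds when no interval is given. It is a simplified illustration under stated assumptions, not how the stage is implemented: the function name and the (arrival time, message) input shape are hypothetical.

```python
DEFAULT_INTERVAL_S = 60.0  # documented default when no interval is configured


def batch_by_interval(events, interval_s=DEFAULT_INTERVAL_S):
    """Group (arrival_seconds, message) pairs into batches per time window.

    A window opens when its first message arrives and closes interval_s later.
    Events are assumed to be ordered by arrival time.
    """
    batch = []
    window_end = None
    for arrival, message in events:
        if window_end is None:
            window_end = arrival + interval_s
        elif arrival >= window_end:
            # The window has elapsed: emit the batch and open a new window.
            yield batch
            batch = []
            window_end = arrival + interval_s
        batch.append(message)
    if batch:
        yield batch


# Usage: a 5-second interval, as in a "time": "5s" batch setting.
events = [(0, "a"), (3, "b"), (5, "c"), (9, "d"), (10, "e")]
batches = list(batch_by_interval(events, interval_s=5))
```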

³Type: The data processor writes to Microsoft Fabric by using the delta format. The data processor supports all delta primitive data types except for decimal and timestamp without time zone.

To ensure all dates and times are represented correctly in Microsoft Fabric, make sure the value of the property is a valid RFC 3339 string and that the data type is either date or timestamp.
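If you want to check timestamp values before they reach the destination stage, a small validator can help. The Python sketch below checks the RFC 3339 date-time form only (not the date-only form) and is an assumption-laden illustration: the function name is hypothetical, UTC offsets are checked for shape but not range, and leap seconds (a seconds value of 60) are rejected.

```python
import re
from datetime import datetime

# RFC 3339 date-time: date, "T", time, optional fraction, then "Z" or a UTC offset.
_RFC3339_DATE_TIME = re.compile(
    r"(\d{4})-(\d{2})-(\d{2})[Tt](\d{2}):(\d{2}):(\d{2})(\.\d+)?([Zz]|[+-]\d{2}:\d{2})"
)


def is_rfc3339_date_time(value):
    """Return True if value is a string shaped like an RFC 3339 date-time."""
    if not isinstance(value, str):
        return False
    match = _RFC3339_DATE_TIME.fullmatch(value)
    if match is None:
        return False
    year, month, day, hour, minute, second = (int(g) for g in match.groups()[:6])
    try:
        # Let datetime reject impossible values such as month 13 or day 31 in June.
        datetime(year, month, day, hour, minute, second)
    except ValueError:
        return False
    return True
```

For example, `is_rfc3339_date_time("2023-08-10T00:54:58.6572007Z")` returns `True`, while a value with a space instead of `T` and no time zone, such as `"2023-08-10 00:54:58"`, returns `False`.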

⁴Authentication: Currently, the destination stage supports service principal based authentication or managed identity when it connects to Microsoft Fabric.

Service principal based authentication

To configure service principal based authentication, provide the following values. You made a note of these values when you created the service principal and added the secret reference to your cluster.

| Field | Description | Required |
|---|---|---|
| TenantId | The tenant ID. | Yes |
| ClientId | The app ID you made a note of when you created the service principal that has access to the lakehouse. | Yes |
| Secret | The secret reference you created in your cluster. | Yes |
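To show how these values relate to the output of az ad sp create-for-rbac, here is a minimal Python sketch that builds an authentication block. The key names (type, tenantId, clientId, clientSecret) follow the sample configuration in this article. The function name and the secret reference value are hypothetical, and the sketch deliberately uses the secret reference rather than the client secret itself.

```python
import json


def service_principal_auth(sp_output, secret_ref):
    """Map `az ad sp create-for-rbac` output to the stage's authentication block.

    secret_ref is the name of the secret reference in the cluster, never the
    raw client secret (the "password" field is intentionally not copied).
    """
    return {
        "type": "servicePrincipal",
        "tenantId": sp_output["tenant"],
        "clientId": sp_output["appId"],
        "clientSecret": secret_ref,
    }


# Usage with placeholder values in the shape of the CLI output.
sp_output = json.loads(
    '{"appId": "<app-id>", "displayName": "<name>", '
    '"password": "<client-secret>", "tenant": "<tenant-id>"}'
)
auth_block = service_principal_auth(sp_output, "<secret-reference>")
```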

Sample configuration

The following JSON example shows a complete Microsoft Fabric lakehouse destination stage configuration that writes the entire message to the quickstart table in the lakehouse:

{
  "displayName": "Fabric Lakehouse - 520f54",
  "type": "output/fabric@v1",
  "viewOptions": {
    "position": {
      "x": 0,
      "y": 784
    }
  },
  "workspace": "workspaceId",
  "lakehouse": "lakehouseId",
  "table": "quickstart",
  "columns": [
    {
      "name": "Timestamp",
      "type": "timestamp",
      "path": ".Timestamp"
    },
    {
      "name": "AssetName",
      "type": "string",
      "path": ".assetName"
    },
    {
      "name": "Customer",
      "type": "string",
      "path": ".Customer"
    },
    {
      "name": "Batch",
      "type": "integer",
      "path": ".Batch"
    },
    {
      "name": "CurrentTemperature",
      "type": "float",
      "path": ".CurrentTemperature"
    },
    {
      "name": "LastKnownTemperature",
      "type": "float",
      "path": ".LastKnownTemperature"
    },
    {
      "name": "Pressure",
      "type": "float",
      "path": ".Pressure"
    },
    {
      "name": "IsSpare",
      "type": "boolean",
      "path": ".IsSpare"
    }
  ],
  "authentication": {
    "type": "servicePrincipal",
    "tenantId": "tenantId",
    "clientId": "clientId",
    "clientSecret": "secretReference"
  },
  "batch": {
    "time": "5s",
    "path": ".payload"
  },
  "retry": {
    "type": "fixed",
    "interval": "20s",
    "maxRetries": 4
  }
}

The configuration defines that:

- Messages are batched for 5 seconds.

- The batch path .payload is used to locate the data for the columns.

Example

The following example shows a sample input message to the Microsoft Fabric lakehouse destination stage:

{
  "payload": {
    "Batch": 102,
    "CurrentTemperature": 7109,
    "Customer": "Contoso",
    "Equipment": "Boiler",
    "IsSpare": true,
    "LastKnownTemperature": 7109,
    "Location": "Seattle",
    "Pressure": 7109,
    "Timestamp": "2023-08-10T00:54:58.6572007Z",
    "assetName": "oven"
  },
  "qos": 0,
  "systemProperties": {
    "partitionId": 0,
    "partitionKey": "quickstart",
    "timestamp": "2023-11-06T23:42:51.004Z"
  },
  "topic": "quickstart"
}
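To illustrate how a batch path and column paths pick values out of a message like this one, here is a minimal Python sketch. It is not the data processor's implementation: it handles only simple dotted paths such as .payload or .Batch, returns None for a missing key (an assumption of this sketch), and uses hypothetical function names and a trimmed copy of the message.

```python
def resolve(path, record):
    """Follow a simple dotted path (for example ".payload") through nested dicts."""
    current = record
    for key in filter(None, path.split(".")):
        if not isinstance(current, dict) or key not in current:
            return None  # Missing keys yield None in this sketch.
        current = current[key]
    return current


def build_row(message, batch_path, columns):
    """Locate the record with the batch path, then read each column's path from it."""
    record = resolve(batch_path, message)
    return {column["name"]: resolve(column["path"], record) for column in columns}


# Trimmed versions of the input message and column definitions.
message = {
    "payload": {
        "Batch": 102,
        "IsSpare": True,
        "Timestamp": "2023-08-10T00:54:58.6572007Z",
        "assetName": "oven",
    },
    "topic": "quickstart",
}
columns = [
    {"name": "Timestamp", "type": "timestamp", "path": ".Timestamp"},
    {"name": "AssetName", "type": "string", "path": ".assetName"},
    {"name": "Batch", "type": "integer", "path": ".Batch"},
    {"name": "IsSpare", "type": "boolean", "path": ".IsSpare"},
]
row = build_row(message, ".payload", columns)
```

Key lookup in this sketch is case-sensitive, so a column path has to match the casing of the key in the message: `.assetName` finds the value `oven`, while `.assetname` does not.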