Dataflows

Summary

| Item | Description |
|---|---|
| Release State | General Availability |
| Products | Excel, Power BI (Semantic models), Power BI (Dataflows), Fabric (Dataflow Gen2), Power Apps (Dataflows), Dynamics 365 Customer Insights (Dataflows) |
| Authentication types | Organizational account |

Note

Some capabilities may be present in one product but not others due to deployment schedules and host-specific capabilities.

Prerequisites

You must have an existing Dataflow with maker permissions to access the portal, and read permissions to access data from the dataflow.

Capabilities supported

- Import

- DirectQuery (Power BI semantic models)

Note

DirectQuery requires Power BI Premium. More information: Premium features of dataflows

Get data from Dataflows in Power Query Desktop

To get data from Dataflows in Power Query Desktop:

Select Dataflows in the get data experience. The get data experience in Power Query Desktop varies between apps. For more information about the Power Query Desktop get data experience for your app, go to Where to get data.

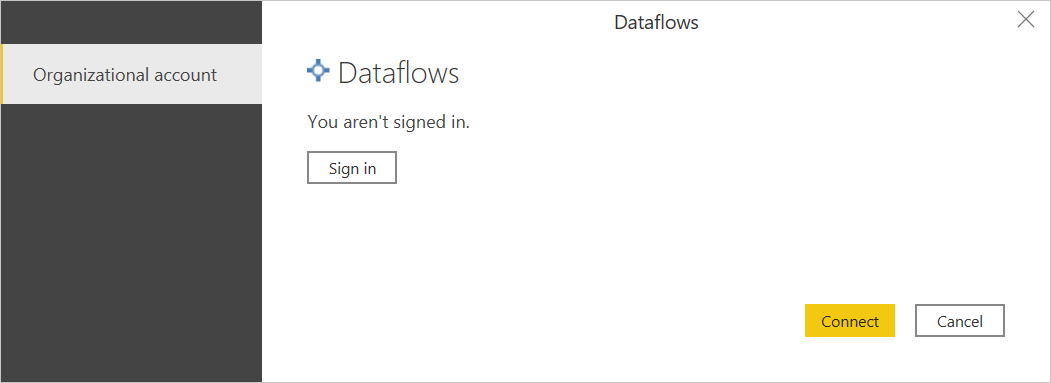

If you're connecting to this site for the first time, select Sign in and input your credentials. Then select Connect.

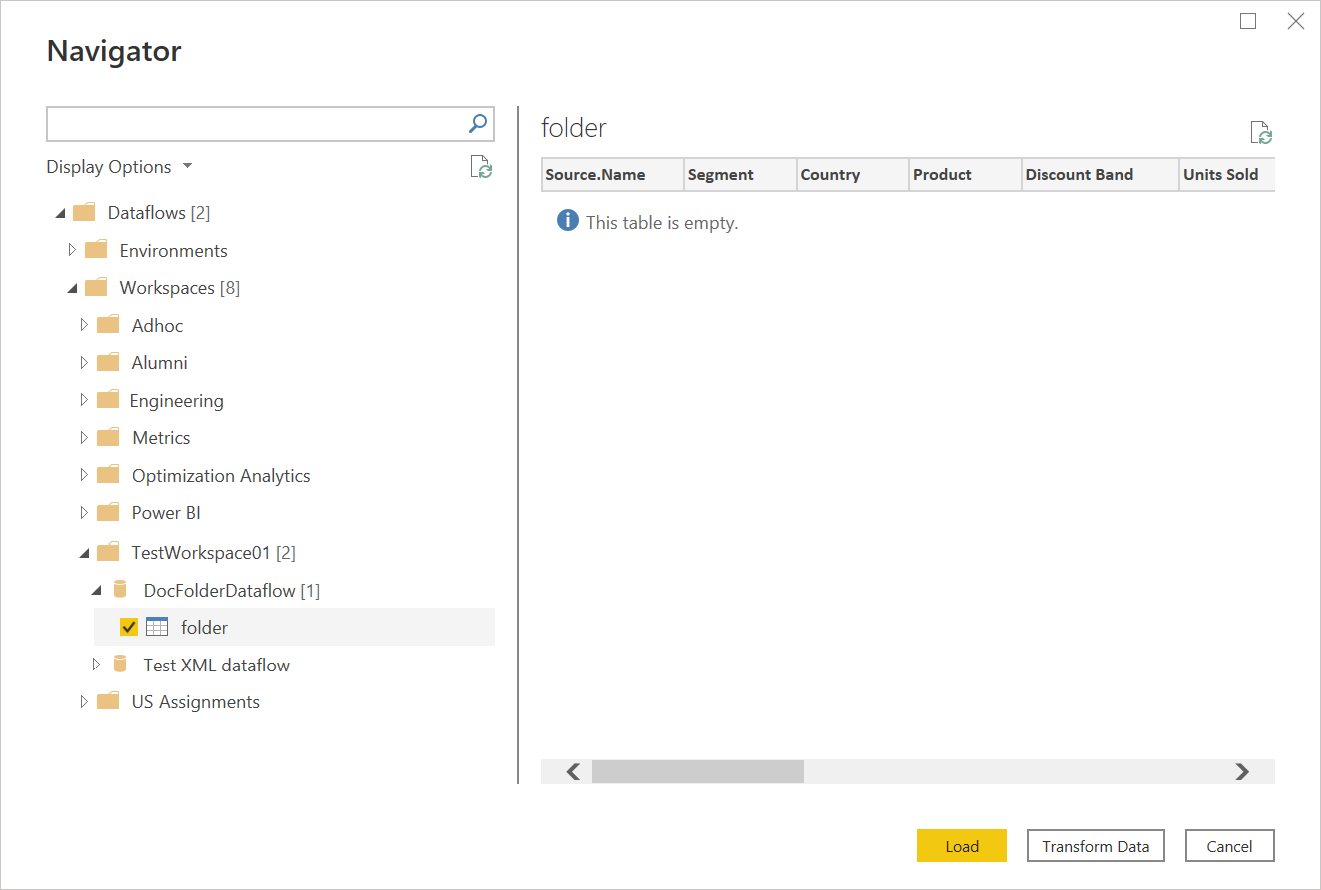

In Navigator, select the Dataflow you require, then either load or transform the data.

Get data from Dataflows in Power Query Online

To get data from Dataflows in Power Query Online:

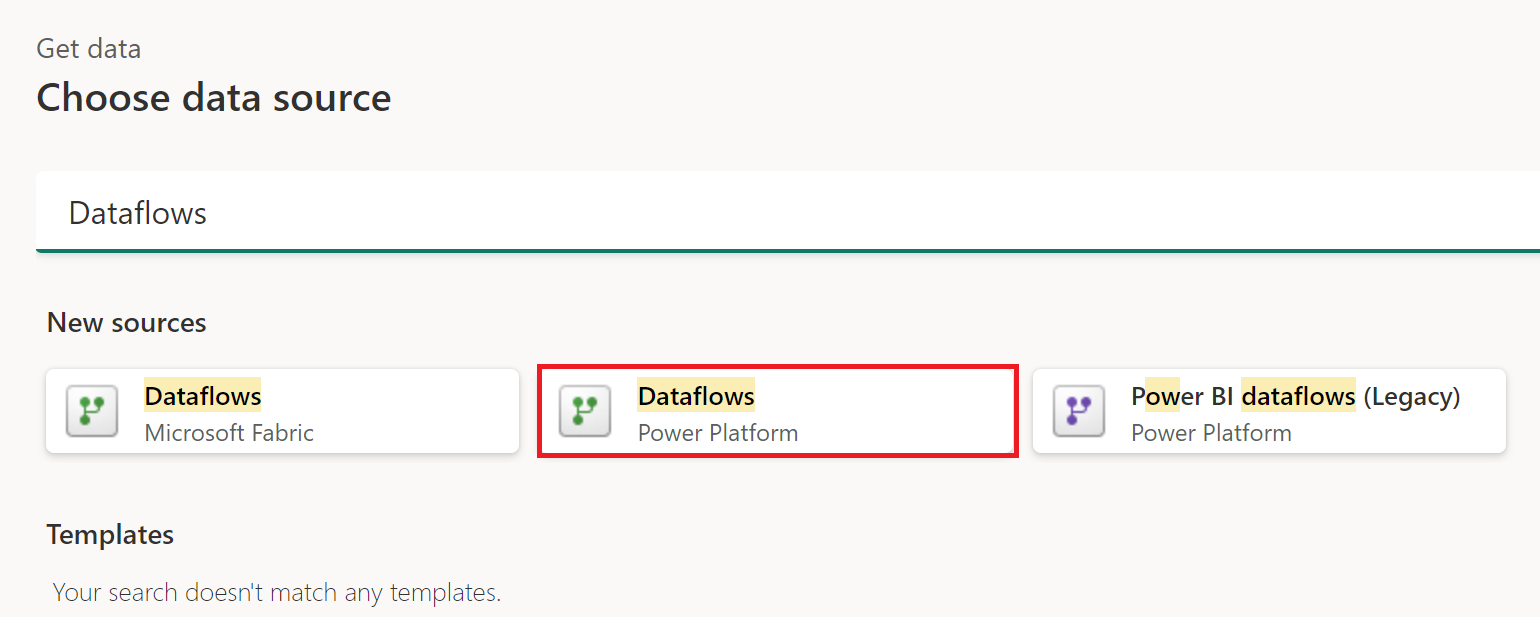

Select the Dataflows option in the get data experience. Different apps have different ways of getting to the Power Query Online get data experience. For more information about how to get to the Power Query Online get data experience from your app, go to Where to get data.

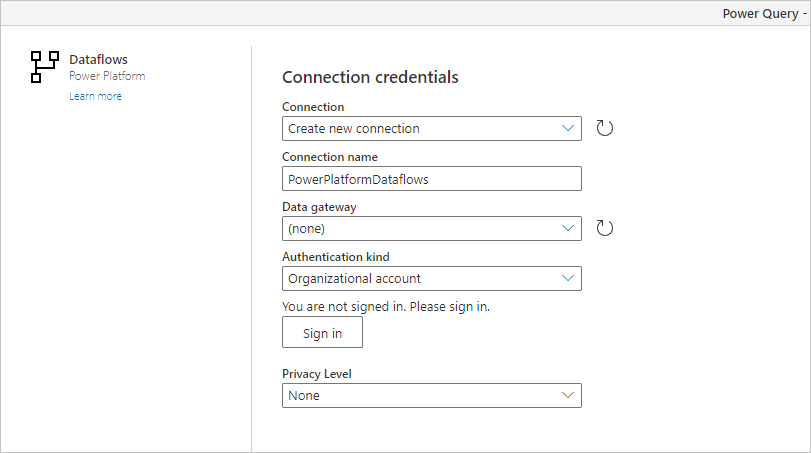

Adjust the connection name.

If necessary, enter an on-premises data gateway if you're going to be using on-premises data. For example, if you're going to combine data from Dataflows and an on-premises SQL Server database.

Sign in to your organizational account.

When you've successfully signed in, select Next.

In the navigation page, select the data you require, and then select Transform Data.

Known issues and limitations

- The Power Query Dataflows connector inside Excel doesn't currently support sovereign cloud clusters (for example, China, Germany, US).

- Consuming data from a Dataflow Gen2 with the dataflow connector requires Admin, Member, or Contributor permissions. Viewer permissions aren't sufficient and aren't supported for consuming data from the dataflow.

Frequently asked questions

DirectQuery is not working for me in Power BI—what should I do?

To get DirectQuery to run, you need to have Power BI Premium and adjust a few configuration items in your Power BI workspace. These actions are explained in the dataflows premium features article.

My dataflow table doesn't show up in the dataflow connector in Power BI

You're probably using a Dataverse table as the destination for your standard dataflow. Use the Dataverse/CDS connector instead or consider switching to an analytical dataflow.

There's a difference in the data when I remove duplicates in dataflows—how can I resolve this?

The data you see at design time can differ from the data produced at refresh time. When duplicates are removed, we don't guarantee which duplicate instance is kept during refresh. For information on how to avoid inconsistencies in your data, go to Working with duplicates.
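The effect can be illustrated outside Power Query. The following is a minimal Python sketch, not Power Query code: the rows, the `updated` column, and the helper names are hypothetical. It shows that a "keep the first row per key" rule returns different survivors when the same rows arrive in a different order, and that sorting on a tiebreaker column before deduplicating is one way to make the result independent of arrival order.

```python
def remove_duplicates(rows, key):
    """Keep the first row seen for each distinct value of `key`."""
    seen = set()
    kept = []
    for row in rows:
        value = row[key]
        if value not in seen:
            seen.add(value)
            kept.append(row)
    return kept


# Hypothetical sample data: two rows share id 1.
rows = [
    {"id": 1, "updated": "2024-01-05", "city": "Oslo"},
    {"id": 1, "updated": "2024-03-01", "city": "Bergen"},
    {"id": 2, "updated": "2024-02-10", "city": "Lima"},
]

# Same rows, different arrival order: a different duplicate survives.
as_loaded = remove_duplicates(rows, "id")
reordered = remove_duplicates(list(reversed(rows)), "id")


def newest_first(data):
    """Sort on a tiebreaker so the newest row per key always comes first."""
    return sorted(data, key=lambda r: r["updated"], reverse=True)


# After an explicit sort, the kept rows no longer depend on arrival order.
stable_a = remove_duplicates(newest_first(rows), "id")
stable_b = remove_duplicates(newest_first(list(reversed(rows))), "id")
```

Here `as_loaded` keeps the Oslo row for id 1 while `reordered` keeps the Bergen row, whereas `stable_a` and `stable_b` are identical. The Working with duplicates article remains the reference for how to achieve this in Power Query itself.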

I'm using the Dataflow connector in DirectQuery mode—is case insensitive search supported?

No, case-insensitive search on columns isn't supported in DirectQuery mode. If you need case-insensitive search, use import mode instead. For more information, go to DirectQuery in Power BI.

I'm getting data via the dataflow connector, but I'm receiving a 429 error code—how can I resolve this?

If you receive error code 429, you've likely exceeded the limit of 1000 requests per minute. The error typically resolves by itself if you wait a minute or two after the cooldown period ends. This limit is in place to prevent degraded performance for dataflows and other Power BI functionality.

Continued high load on the service might degrade performance further, so reduce the number of requests to fewer than 1000 per minute, or adjust your script or model to stay within this limit. Also avoid nested joins that re-request dataflow data. Instead, stage the data and perform merges within your dataflow rather than in your semantic model.
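If a script of your own sends the requests, one way to stay under the limit is to throttle on the client side and back off when a 429 is returned. The following Python sketch is an illustration under stated assumptions, not part of the connector: `SlidingWindowLimiter`, `call_with_retry`, and the `send` callable are hypothetical names, and the 60-second base delay is an assumed reading of "wait a minute or two". Only the figure of 1000 requests per minute comes from this article.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` calls in any `window_seconds` span."""

    def __init__(self, max_requests=1000, window_seconds=60.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._clock = clock
        self._sleep = sleep
        self._sent = deque()  # timestamps of requests still inside the window

    def acquire(self):
        """Block until one more request fits in the window, then record it."""
        while True:
            now = self._clock()
            # Drop timestamps that have aged out of the window.
            while self._sent and now - self._sent[0] >= self.window_seconds:
                self._sent.popleft()
            if len(self._sent) < self.max_requests:
                self._sent.append(now)
                return
            # Wait until the oldest recorded request leaves the window.
            self._sleep(self.window_seconds - (now - self._sent[0]))


def call_with_retry(send, limiter, max_attempts=4, base_delay=60.0,
                    sleep=time.sleep):
    """Call `send()` (assumed to return an HTTP status code); retry on 429."""
    status = None
    for attempt in range(max_attempts):
        limiter.acquire()
        status = send()
        if status != 429:
            return status
        if attempt < max_attempts - 1:
            # Assumed policy: wait one minute, doubling after each further 429.
            sleep(base_delay * (2 ** attempt))
    return status
```

In practice you would likely set `max_requests` well below 1000 to leave headroom for other workloads that use the same service, and pass a `send` function that wraps whatever client issues the request.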