Applies to:

- Microsoft Cloud for Sustainability

- Microsoft Cloud for Financial Services

- Microsoft Cloud for Healthcare

- Microsoft Cloud for Retail

Industry Data Models serve as the foundation for their respective Microsoft Industry Clouds. Depending on the data estate maturity level, integrating the solutions with other systems may be necessary.

Selecting the appropriate integration pattern is crucial to a successful implementation between Microsoft Industry Clouds solutions and external systems. This article presents the integration patterns, the tools and technologies relevant to integration, and the factors to consider when making decisions.

The need for integration

Third-party systems may have separate processes and even different business logic. If the third-party system uses the same underlying Industry Cloud common data model (CDM), the need for data transfer, synchronization, and programming to transform the data is eliminated.

We use data integration patterns in the following scenarios:

- Primary or transactional data that isn't the central part of a single, continuous management process. Data is synchronized between a process in one system and the Microsoft industry cloud.

- Data is shared or exchanged between systems when needed for calculations.

- Data is shared or exchanged between systems, so actions in one system are reflected in the other.

- Aggregate data from a system with a detailed level of data is exchanged to a system with a higher-level representation of data.

How to select the right integration pattern

There are many technical options for integration development, and each has its own pros and cons. To identify the right pattern for an integration extension, consider the following factors and weigh each of them across the options:

| Decision factor | Description |

|---|---|

| Data types and formats | What type and format of data is being integrated? |

| Data volatility | From low volatility/slowly changing to high volatility/rapidly changing. |

| Data volume | From low volume data to high volume. |

| Data availability | When do you want the data to be ready, from source to target? Do you need it in real-time, or do you just need to collect all the data at the end of the day and send it in a scheduled batch to its target? |

| Service protection and throttling | Ensuring consistent availability and performance for everyone by applying limits. These limits shouldn't affect normal users, only clients that perform extraordinary requests. A common pattern for online services should be used to provide error codes when making too many requests. |

| Level of data transformation required | Requirement to convert or aggregate the source data to target. |

| Triggers and trigger actions | What action triggers sending data from the source to the target? What specific actions need to be automated after the data arrives at the target? |

| Error handling | Monitoring that is put in place to detect any issues with the interfaces. |

| Scalability | Handle the expected transaction volumes in the present, short-term, and long-term. |

| System of record | Considering which system is the system of record, or owner, of the information. |

| Data flow direction | Does the target system need to pull it or does the source system need to push it? |

Based on these factors, you can identify the integration pattern and choose the right tool or technology for implementation.
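The "Service protection and throttling" factor usually means the calling client needs retry logic. The following is a minimal sketch, assuming the service signals throttling with HTTP status 429 and an optional `Retry-After` header expressed in seconds. The function name `call_with_retry`, the `(status, headers, body)` response tuple, and the default delays are illustrative assumptions, not part of any Microsoft SDK.

```python
import time
from typing import Callable, Mapping, Tuple

# Hypothetical response shape used only in this sketch: (status, headers, body).
Response = Tuple[int, Mapping[str, str], str]


def call_with_retry(
    send: Callable[[], Response],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Response:
    """Call send() and retry while the service answers HTTP 429 (throttled)."""
    for attempt in range(1, max_attempts + 1):
        status, headers, body = send()
        # Return on any non-throttled answer, or when attempts are exhausted.
        if status != 429 or attempt == max_attempts:
            return status, headers, body
        # Prefer the server's Retry-After hint (assumed to be in seconds);
        # otherwise back off exponentially: base, 2*base, 4*base, ...
        retry_after = headers.get("Retry-After")
        delay = float(retry_after) if retry_after else base_delay * 2 ** (attempt - 1)
        sleep(delay)
    raise ValueError("max_attempts must be at least 1")
```

Passing `sleep` as a parameter keeps the helper easy to test without real waiting. In production you would typically also cap the total delay and add jitter.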

Integration patterns

In this section, we explore the following integration patterns that can be used while integrating with Dataverse.

- Real-time/synchronous integration

- Near real-time/asynchronous integration

- Batch integration

- Presentation layer integration

Each pattern has a unique structure and can be implemented by using one or more technologies. The following sections describe how these patterns are implemented with specific technologies, along with considerations and the scenarios in which to apply them.

Real-time/synchronous integration

Real-time integration is indispensable in scenarios where the source system requires instant or minimal latency responses to the data it sends. This requirement becomes crucial when the business use case mandates both the source and destination systems to consistently remain synchronized, ensuring uninterrupted data coherence between the two entities. Synchronous integration becomes essential when the destination system necessitates an immediate response to seamlessly proceed with an ongoing process, enabling timely execution of subsequent actions.

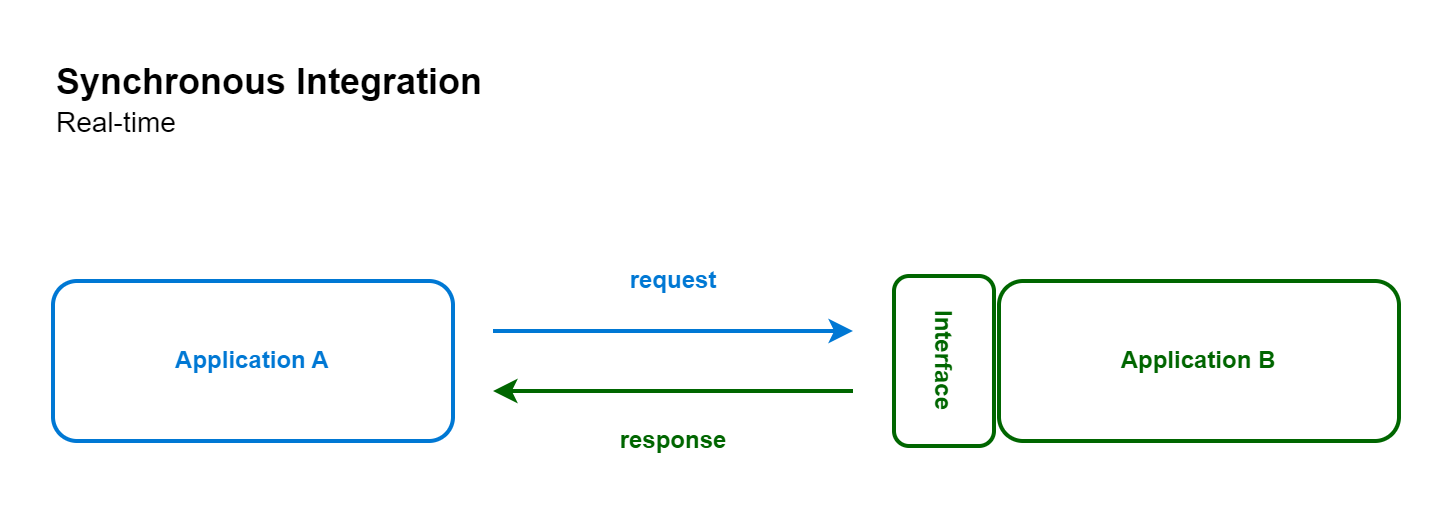

This form of integration is often synonymous with synchronous integration. The following diagram illustrates the prevalent pattern of synchronous integration, where Application A initiates a request to an Application B, and promptly receives a response, ensuring timely and responsive data exchange.

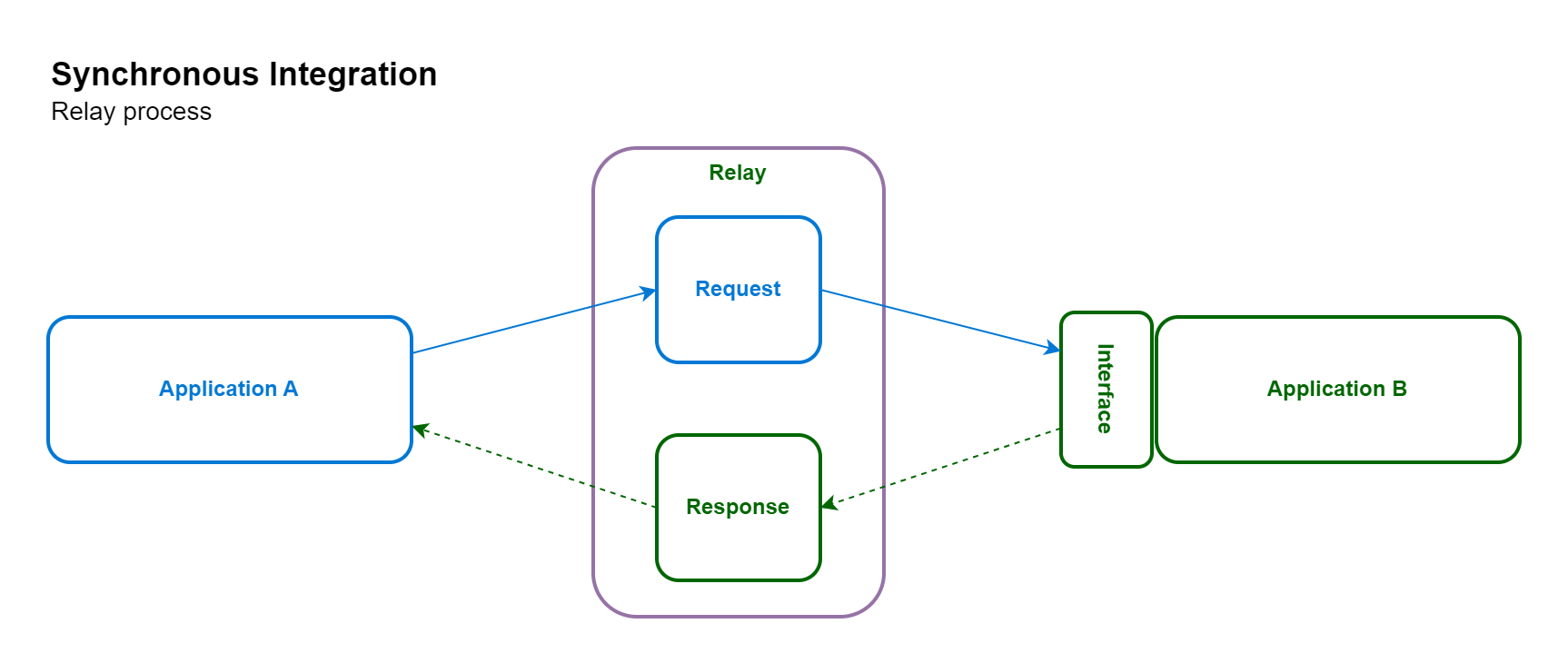

Some of the mentioned technology choices can be expanded to incorporate an intermediary system, serving as a relay to facilitate the transaction process. This relay option effectively separates the source and the target applications by managing the communication of requests and responses on their behalf.

You can implement these synchronous data integration patterns with different technologies that are available in our industry cloud solutions. The following table provides best practice patterns on when to use them.

| Technology Option | Data Direction | Purpose | Use When |

|---|---|---|---|

| Dataverse Web API | Pull / push data from External to Dataverse | OData v4 implementation to provide CRUD operations using a standard set of interfaces, providing an interface that is open to a wide technological audience. | Mostly for transactional app integration when discrete CRUD operations are required. It can also be used for any custom integration, but it comes with complexities related to throttling, parallelism and retry logic, particularly on high data volumes. |

| APIs published by Microsoft Industry Clouds | Pull / push data from External to Dataverse | Custom APIs created by Microsoft Industry Clouds to support special operations like accessing emissions data related to your Azure usage. | Specific operations published by Microsoft Industry Clouds. Prioritize the use of these custom APIs before creating your own custom APIs. |

| Custom Dataverse API | Pull / push data from External to Dataverse | Creating your own API in Dataverse. | When one or more operations need to be consolidated into a single operation, or need to expose a new type of trigger event. |

| Virtual tables | Pull / push data from Dataverse to External | Connect to external data sources and treat them as native Dataverse entities. | Pulling reference data and low volume CRUD scenarios. |

| Connectors | Bi-directional | Enable seamless data exchange between Microsoft Services and external systems, applications, and data sources. | Microsoft published connectors are for commonly used integrations like connecting Microsoft Services with each other and first-party applications. Verified published connectors are employed for specialized integrations with third-party applications, ensuring compatibility and reliability. Custom connectors can be used when Microsoft or partner connectors don't solve the customer’s business needs. |
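As an illustration of the Dataverse Web API row, the following sketch builds (but does not send) a synchronous OData v4 request that creates one row. The organization URL, the `accounts` entity set, the API version `v9.2`, and the helper name `build_create_request` are assumptions for this example; acquiring the bearer token is out of scope here.

```python
import json
import urllib.request


def build_create_request(
    org_url: str, entity_set: str, record: dict, token: str
) -> urllib.request.Request:
    """Build a POST request that would create one row in a Dataverse table."""
    # Assumed API version for this sketch; check which version your environment uses.
    url = f"{org_url.rstrip('/')}/api/data/v9.2/{entity_set}"
    headers = {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
        "Accept": "application/json",
        "OData-MaxVersion": "4.0",
        "OData-Version": "4.0",
    }
    body = json.dumps(record).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Placeholder values; nothing is sent over the network here.
request = build_create_request(
    "https://contoso.crm.dynamics.com", "accounts", {"name": "Contoso"}, "<access-token>"
)
```

Sending the request with `urllib.request.urlopen(request)` blocks until the response arrives, which is the synchronous behavior described in this section. For high data volumes, the throttling and retry considerations in the table still apply.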

Near real-time/asynchronous integration

Asynchronous integration is recommended in scenarios where there's no immediate requirement for real-time responses in a business process or action. Typically, it's employed when there's a substantial volume of message communication between applications and systems. Asynchronous integration patterns ensure that communication between systems doesn't block or slow down processes, allowing each system to work independently and asynchronously. Some of the most common ways to implement asynchronous integrations are with message queues, publish-subscribe, and batch integrations. You can use these integrations separately or combined, depending on the requirements. They're often referred to collectively as event-driven architecture (EDA).

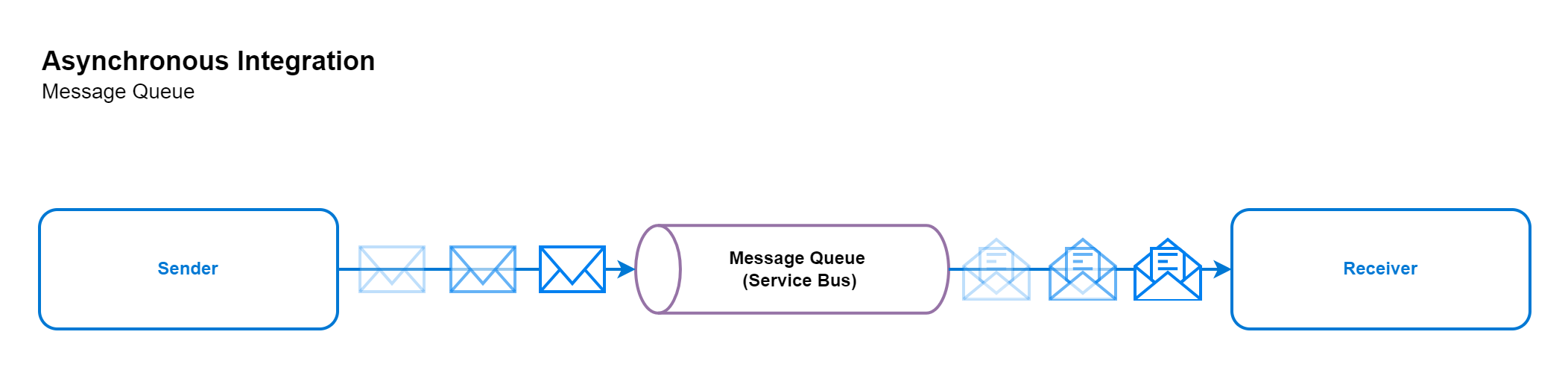

In the following message queue pattern, the sender adopts an event-driven framework, and the consumer creates a binding directly to an event. When the message is sent, the receiver is notified directly and receives the data contained in the event message.

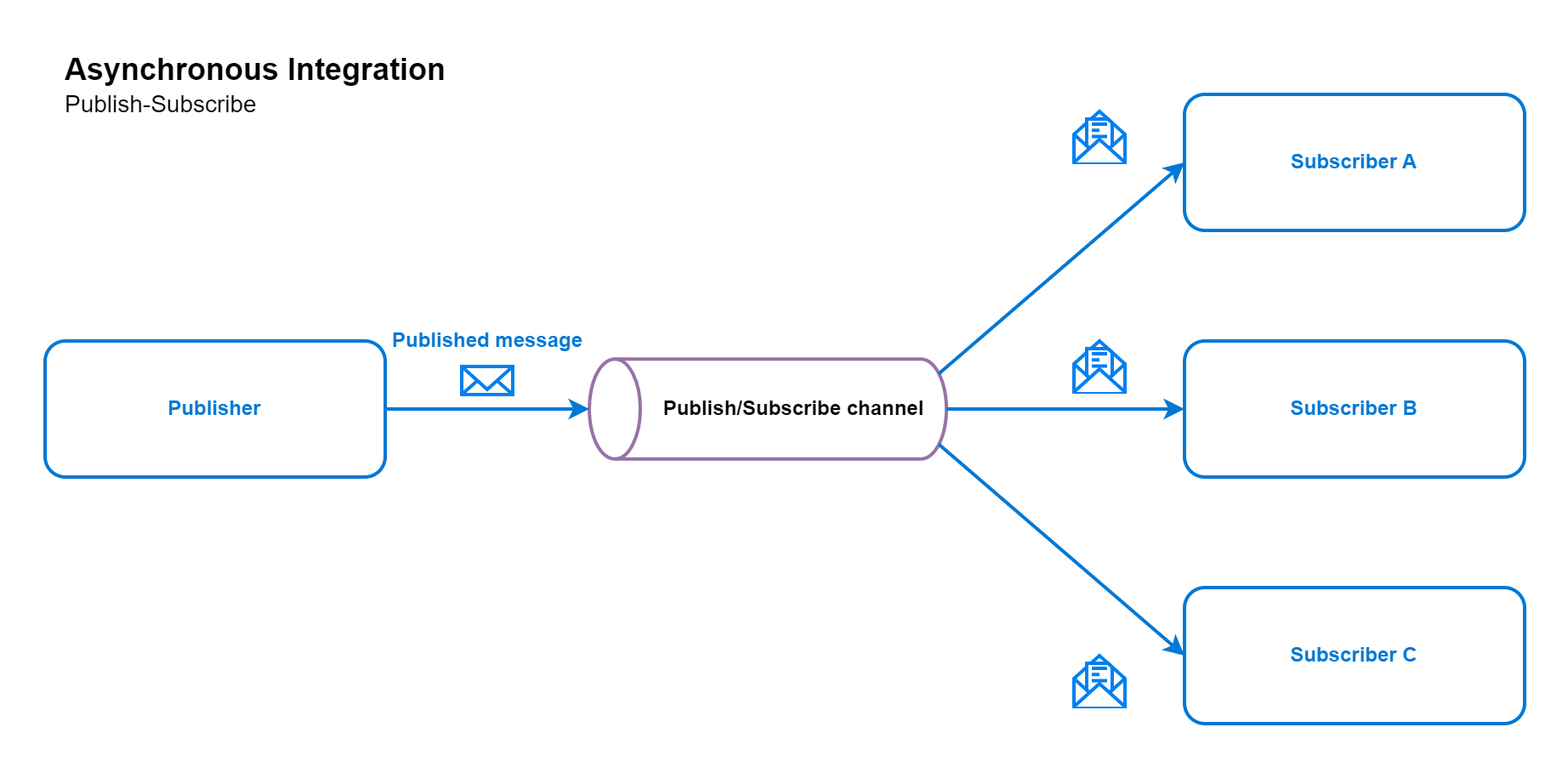

In the following publish-subscribe pattern, the publisher generates a message in a standardized published format and transmits it to a dedicated publish/subscribe channel, which can have one or more subscribers. Each subscriber is subscribed to a specific channel or topic, allowing it to receive and process the published message (event) as required. The publish-subscribe pattern is chosen for one-to-many communication scenarios, because multiple subscribers can independently receive and process the messages (events).
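The one-to-many behavior of publish-subscribe can be sketched with a toy in-memory broker. This is only an illustration of the pattern: the `Broker` class and the topic name are hypothetical, and delivery here is a direct function call, whereas a real messaging service such as Azure Service Bus stores messages and delivers them asynchronously.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class Broker:
    """Toy in-memory broker: each topic maps to a list of subscriber callbacks."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a handler that receives every message published to topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Any) -> int:
        """Deliver message to every subscriber of topic; return the delivery count."""
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(message)
        return len(handlers)


# Two independent subscribers receive the same published event.
broker = Broker()
analytics_inbox: List[Any] = []
audit_inbox: List[Any] = []
broker.subscribe("emissions-updated", analytics_inbox.append)
broker.subscribe("emissions-updated", audit_inbox.append)
broker.publish("emissions-updated", {"facility": "A", "co2e_tonnes": 12.5})
```

The publisher knows only the topic name, not the subscribers, which is what keeps the systems loosely coupled.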

These asynchronous data integration patterns can be implemented with different options. The following table lists the available options and best practices on when to use them.

| Technology option | Event-driven or publish-subscribe | Purpose | Considerations | Use when |

|---|---|---|---|---|

| Power Automate | Both | Low-code automation needs. | Follow the limitations of Power Automate and of each connector, such as throttling. | Use for Dataverse trigger flows or when you want to run Power Automate flows on a schedule. |

| Custom connectors based on Logic Apps | Event-driven | Building data connectors for the solution to get data from ISV solutions. | Must go through privacy, security, and compliance reviews before they're moved into production. | Use for ISV integration scenarios where no native connectors exist. |

| Logic Apps and Azure Service Bus | Publish-subscribe | The publisher sends messages to a service bus, and Logic Apps consumes each message and sends it to subscriber applications. | Mind the Logic Apps configuration and execution limits. | Use for native triggers in Logic Apps connectors and custom integration with multiple subscriber scenarios. |

| Azure Functions, Web Apps feature of Azure App Service, and Azure Service Bus | Publish-subscribe | Use a message queue to implement the communication channel between the application and the instances of the consumer service. | Consider the message ordering and other design considerations. | High-volume and volatility scenarios, where integration can't be developed with low-code options (Power Automate or Logic Apps). |

| Service endpoint | Both | Sending the context information to a queue, topic, webhook, or event hub. | Not suitable for long-running transactions. | When the integration requirement is mostly met with sending the Dataverse context to target directly and ordering of messages isn't critical. |

Batch integration

Batching is the practice of gathering and transporting a set of messages or records in a batch to limit chatter and overhead. Batch processing collects data over a period of time and then processes it in batches. This approach is useful when dealing with large volumes of data or when the processing requires significant resources. This pattern also covers replicating the master data to replica storage for analytics purposes.
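The core of batching, grouping individual records into fixed-size sets before sending them, can be sketched as follows. The helper name `batched` and the batch size are illustrative assumptions; a real integration would hand each batch to a bulk ingestion tool or API rather than print it.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(records: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield successive lists of up to batch_size records from any iterable."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(records)
    while True:
        # Pull at most batch_size items; an empty list means the source is exhausted.
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Seven records sent as three transfers instead of seven.
for batch in batched(range(7), batch_size=3):
    print(f"sending {len(batch)} records: {batch}")
```

Because the helper consumes the source lazily, it also works for record streams that are too large to hold in memory at once.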

| Technology option | Data direction | Purpose | Considerations | Use when |

|---|---|---|---|---|

| Azure Data Factory | Both directions | Creating dataflows to transform the data received from Dataverse or prior to ingest into Dataverse | Data Factory service limits | Mass ingestion or data export scenario with complex, multi-stage transformation. |

| Power Automate | N/A | Automate workflows and tasks for Microsoft | Limited scalability and long processing | Use Power Automate when you need to automate repetitive tasks, trigger actions based on events, and integrate applications without heavy code development. |

| Power Query Dataflow | From external systems to Dataverse | Data preparation tool that allows you to ingest, transform and load data into Dataverse environments. | Limitations | Basic scenarios where target is Dataverse and existing connectors aren't a fit and other given scenarios for Power BI. |

| Azure Synapse Pipelines | Both directions | Creating pipelines to transform the data received from Dataverse or prior to ingest into Dataverse | N/A | Analytics and data warehousing scenarios. |

| Azure Synapse Link for Dataverse | From Dataverse to Azure Synapse Analytics or Azure Data Lake Storage v2 (ADLS) | Replicates Dataverse data to Azure Synapse Analytics or ADLS v2 so that you can run analytics, business intelligence, machine learning, and custom reporting scenarios on your data. | Tables that aren't supported. | Data analytics and custom reporting. Also, as an intermediate stage of data export. |

| Azure Logic Apps | N/A | Create workflows with powerful integration capabilities. | Complex batch operations might require significant configuration and orchestration. Not optimized for specialized batch processing scenarios. | Azure Logic Apps are suitable for orchestrating business processes and integrating services. |

| SQL Server Integration Services | Both directions | Using a third-party connector to pull and push data from/to Dataverse. | Because it isn't a PaaS solution, scaling, memory usage, performance, and cost should be evaluated. | Scenarios where limitations mean that cloud extract, transform, and load (ETL) tools aren't an option. |

Presentation layer integration

Presentation, or user interface, integration happens at the top level of the system: the layer that the user sees and interacts with. In certain use cases, integration needs to happen at this level by combining information from different systems or data sources and showing it in a single user interface. Model-driven applications are a component of this layer; they contribute to the comprehensive user experience by enabling data-driven interactions and facilitating seamless navigation within the integrated environment. Presentation integration is needed when you want to maintain the existing business logic or application structure while gaining the following benefits:

- Enabling data aggregation

- User interface customization

- Enhanced user experience

Conversely, it has inherent constraints, including:

- Complexities in integration and maintenance

- Significant interdependence among integrated systems

- Potential performance implications

- Considerations regarding data consistency

| Technology option | Purpose | Considerations | Use when |

|---|---|---|---|

| First-party native UI integrations | Use of Microsoft Bing Maps, Microsoft Teams, and other first-party native UI integrations. | Not customizable in most cases. | Specific scenarios supported in native UI integration. |

| Custom pages | Embedding a canvas app into model-driven app. | Known limitations | Preferred to take a low-code integration approach and when a canvas app is suitable for usability. |

| Power Apps component framework (PCF) | A custom reusable control to display information to or interact with the end user while keeping the responsive design. | Power Apps component framework limitations. | Preferred method when a custom UI needs to be developed within a model-driven app in the absence of a canvas app. |

| Power BI tiles | Showing the Power BI tile in a model-driven app form. | Power BI licensing, authorization of Power BI data. | Displaying a Power BI tile within a model-driven app. |

| Power BI embedded dashboard | Displaying Power BI embedded dashboard in the model-driven app. | Power BI Licensing, authorization of Power BI data. | Displaying the analytics hosted in Power BI. |

| Embedding as HTML iFrame | Embedding the other system UI into a model-driven app. | Single sign-on (SSO), Cross-Origin Resource Sharing (CORS) configuration and responsive design. | Complex UI scenarios when there's no service available. |

| Custom web resource | Creating a custom UI layout within model-driven app. | Assess the accessibility and responsive design of the custom UI. | Scenarios where other UI integrations aren't an option. |

Summary of integration patterns

In the world of software integration, there are various patterns and mechanisms available to exchange data between different systems. Each pattern has its own advantages and disadvantages, and choosing the right one can greatly affect the performance and efficiency of the integrated systems.

The following table summarizes these integration patterns: real-time or synchronous integration, asynchronous integration, batch integration, and presentation layer integration. You can explore the mechanisms, triggers, pros, cons, and use cases for each pattern to help you make an informed decision when selecting an integration approach for your system.

| Integration pattern | Mechanism | Trigger | Pros | Cons | Use when |

|---|---|---|---|---|---|

| Real-time or synchronous | Data is exchanged synchronously, invoking actions via point-to-point integration or using a relay. | User action or system event. | Fast request and response round trip. Real-time values and information. | Generally not a best practice because of the risk of processes getting stuck and of creating tightly coupled integrations. Risk of ripple effect from transient errors. Sensitive to latency. | Use when real-time information is critical. |

| Asynchronous | Data is exchanged or ingested unattended on a periodic schedule or as a trickle feed using messaging patterns. | Scheduled for a period or triggered by a new message published by the source system. | Loose coupling of systems makes the solution robust. Load balancing over time and resources. It can be very close to real time. Timely error handling. | Delay in response and visibility to changes across systems. | Almost real-time data synchronization needs for low or medium data volumes. |

| Batching | Batching is the practice of gathering and transporting a set of messages or records in a batch to limit chatter and overhead. | Scheduled or manual trigger. | Great for use with messaging services and other asynchronous integration patterns. Fewer individual packages and less message traffic. | Data freshness is lower. Load in the receiving system can be affected if business logic is executed on message arrival. | High volume or volatility scenarios where gathering and transporting a set of messages or records in a batch fashion is feasible, data replication scenarios. |

| Presentation layer | Information from one system is seamlessly integrated into the UI of another system. | N/A | Eliminates the complexity of data synchronization, because data remains in the originating system. In certain industries, it eliminates blockers related to data residency due to regulatory requirements. | Difficult to use the data for calculations or processing. Added complexity to satisfy single sign-on, cross-origin resource sharing, and authorization alignment. | When the requirement is satisfied by displaying the source system or UI directly without the need to synchronize data between source and target system. |