Copy data to Azure Data Box Heavy

Important

Azure Data Box now supports access tier assignment at the blob level. The steps in this tutorial reflect the updated data copy process and are specific to block blobs.

The information in this section applies to orders placed after April 1, 2024.

This tutorial describes how to connect to and copy data from your host computer by using the local web UI.

In this tutorial, you learn how to:

- Connect to Data Box Heavy

- Copy data to Data Box Heavy

You can copy data from your source server to your Data Box via SMB, NFS, REST, or the data copy service, or copy it to managed disks.

In each case, make sure that the share names, folder names, and data size follow the guidelines described in the Azure Storage and Data Box Heavy service limits.

Prerequisites

Before you begin, make sure that:

- You've completed the Tutorial: Set up Azure Data Box Heavy.

- You've received your Data Box Heavy and the order status in the portal is Delivered.

- You have a host computer that has the data that you want to copy to Data Box Heavy. Your host computer must:

  - Run a supported operating system.

  - Be connected to a high-speed network. For the fastest copy speeds, two 40-GbE connections (one per node) can be used in parallel. If you don't have a 40-GbE connection available, we recommend that you have at least two 10-GbE connections (one per node).

Connect to Data Box Heavy shares

Based on the storage account selected, Data Box Heavy creates up to:

- Three shares for each associated storage account for GPv1 and GPv2.

- One share for premium storage.

- One share for a blob storage account, containing a folder for each of the four access tiers.
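Share names follow the pattern `<storageAccountName>_<ShareType>`, as the tables below show. The following Python sketch lists the share names you could expect for one storage account. The `SHARE_SUFFIXES` mapping is an assumption derived from the list above (in particular, that the single premium storage share is a page blob share); it is an illustration, not a Data Box API.

```python
# Assumed mapping from storage account kind to the share suffixes that
# Data Box Heavy creates for that account (derived from the list above).
SHARE_SUFFIXES = {
    "GPv1": ("BlockBlob", "PageBlob", "AzFile"),
    "GPv2": ("BlockBlob", "PageBlob", "AzFile"),
    "Premium": ("PageBlob",),       # assumption: premium storage -> page blobs
    "BlobStorage": ("BlockBlob",),  # one share with one folder per access tier
}


def share_names(account_name: str, account_kind: str) -> list[str]:
    """Return the expected share names for one storage account."""
    return [f"{account_name}_{suffix}" for suffix in SHARE_SUFFIXES[account_kind]]


print(share_names("databoxe2etest", "GPv2"))
# ['databoxe2etest_BlockBlob', 'databoxe2etest_PageBlob', 'databoxe2etest_AzFile']
```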

The following table identifies the names of the Data Box shares to which you can connect and the type of data uploaded to your target storage account. It also identifies the hierarchy of shares and directories into which you copy your source data.

| Storage type | Share name | First-level entity | Second-level entity | Third-level entity |
|---|---|---|---|---|
| Block blob | `<storageAccountName>_BlockBlob` | `<accessTier>` | `<containerName>` | `<blockBlob>` |
| Page blob | `<storageAccountName>_PageBlob` | `<containerName>` | `<pageBlob>` | |
| File storage | `<storageAccountName>_AzFile` | `<fileShareName>` | `<file>` | |

You can't copy files directly to the root folder of any Data Box share. Instead, create folders within the Data Box share depending on your use case.

Block blobs support assigning access tiers at the file level. When copying files to the block blob share, the recommended best practice is to add new subfolders within the appropriate access tier folder. After creating new subfolders, continue adding files to each subfolder as appropriate.

A new container is created for any folder residing at the root of the block blob share. Any file within that folder is copied to the storage account's default access tier as a block blob.
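The following Python sketch illustrates how a file's position in the block blob share determines its container and access tier. It assumes the four access tier folders are named Hot, Cool, Cold, and Archive (check the folder names on your device), and `resolve_block_blob` is a hypothetical helper, not part of any Data Box tooling.

```python
# Assumed names of the four access tier folders in the BlockBlob share.
ACCESS_TIER_FOLDERS = {"Hot", "Cool", "Cold", "Archive"}


def resolve_block_blob(relative_path: str) -> tuple[str, str, str]:
    """Return (access tier, container, blob name) for a file in the BlockBlob share.

    relative_path is relative to the share root and may use / or backslashes.
    """
    parts = [p for p in relative_path.replace("\\", "/").split("/") if p]
    if len(parts) < 2:
        raise ValueError("files can't be copied to the root of the share")
    if parts[0] in ACCESS_TIER_FOLDERS:
        if len(parts) < 3:
            raise ValueError("create a container subfolder inside the access tier folder")
        # Tier folder -> container subfolder -> blob (may include virtual directories).
        return parts[0], parts[1], "/".join(parts[2:])
    # A folder at the share root becomes a container that uses the account's default tier.
    return "account default", parts[0], "/".join(parts[1:])


print(resolve_block_blob(r"Cool\projects\2024\report.pdf"))
# ('Cool', 'projects', '2024/report.pdf')
```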

For more information about blob access tiers, see Access tiers for blob data. For more detailed information about access tier best practices, see Best practices for using blob access tiers.

The following table shows the UNC path to the shares on your Data Box and the corresponding Azure Storage path URL to which the data is uploaded. The final Azure Storage path URL can be derived from the UNC share path.

| Azure Storage type | UNC path to the Data Box share | Azure Storage URL |
|---|---|---|
| Azure Block blobs | `\\<DeviceIPAddress>\<storageaccountname_BlockBlob>\<accessTier>\<ContainerName>\myBlob.txt` | `https://<storageaccountname>.blob.core.windows.net/<ContainerName>/myBlob.txt` |
| Azure Page blobs | `\\<DeviceIPAddress>\<storageaccountname_PageBlob>\<ContainerName>\myBlob.vhd` | `https://<storageaccountname>.blob.core.windows.net/<ContainerName>/myBlob.vhd` |
| Azure Files | `\\<DeviceIPAddress>\<storageaccountname_AzFile>\<ShareName>\myFile.txt` | `https://<storageaccountname>.file.core.windows.net/<ShareName>/myFile.txt` |
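Because the Azure Storage URL is derived from the UNC path, the mapping in the table can be sketched in Python. `unc_to_azure_url` is a hypothetical helper that only reproduces the patterns shown above; it assumes the tier folder names Hot, Cool, Cold, and Archive, and it doesn't contact the device or Azure.

```python
ACCESS_TIERS = {"Hot", "Cool", "Cold", "Archive"}  # assumed tier folder names
SERVICE_BY_SHARE_TYPE = {"BlockBlob": "blob", "PageBlob": "blob", "AzFile": "file"}


def unc_to_azure_url(unc_path: str) -> str:
    """Map a Data Box UNC path to the Azure Storage URL it is uploaded to."""
    # Example split: ["10.100.10.100", "acct_BlockBlob", "Hot", "container", "myBlob.txt"]
    parts = [p for p in unc_path.split("\\") if p]
    if len(parts) < 4:
        raise ValueError("path must name a device, a share, a folder, and a file")
    # Storage account names can't contain "_", so the last "_" separates the share type.
    account, _, share_type = parts[1].rpartition("_")
    service = SERVICE_BY_SHARE_TYPE.get(share_type)
    if service is None:
        raise ValueError(f"unrecognized share type: {share_type!r}")
    rest = parts[2:]
    if share_type == "BlockBlob" and rest[0] in ACCESS_TIERS:
        # The access tier folder selects the tier; it isn't part of the URL.
        rest = rest[1:]
    if len(rest) < 2:
        raise ValueError("files can't be placed directly under the share or tier folder")
    return f"https://{account}.{service}.core.windows.net/" + "/".join(rest)


print(unc_to_azure_url(r"\\10.100.10.100\databoxe2etest_BlockBlob\Hot\mycontainer\myBlob.txt"))
# https://databoxe2etest.blob.core.windows.net/mycontainer/myBlob.txt
```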

The steps to connect using a Windows or a Linux client are different.

Note

Follow the same steps to connect to both nodes of the device in parallel.

Connect on a Windows system

If you're using a Windows Server host computer, follow these steps to connect to the Data Box Heavy.

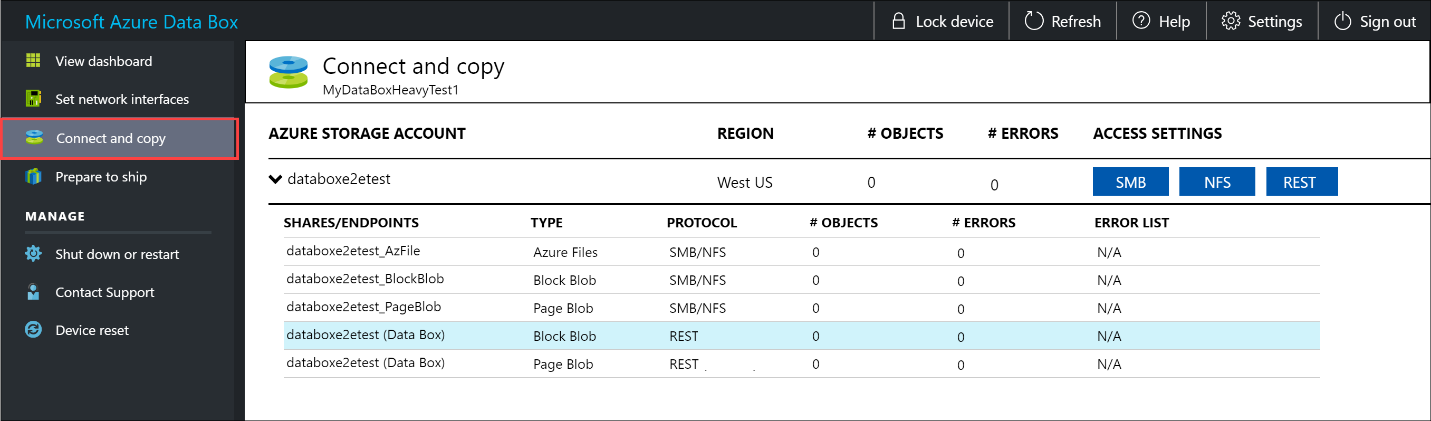

The first step is to authenticate and start a session. Go to Connect and copy. Select Get credentials to get the access credentials for the shares associated with your storage account.

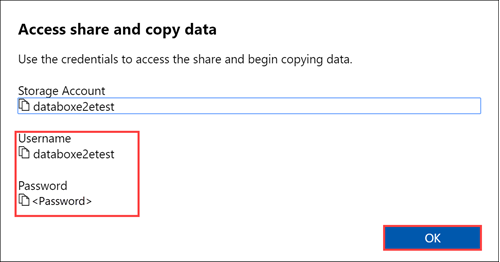

In the Access share and copy data dialog box, copy the Username and the Password corresponding to the share. Select OK.

To access the shares associated with your storage account (databoxe2etest in the following example) from your host computer, open a command window. At the command prompt, type:

net use \\<IP address of the device>\<share name> /u:<user name for the share>

Depending on your data format, the share paths are as follows:

- Azure Block blob - \\10.100.10.100\databoxe2etest_BlockBlob

- Azure Page blob - \\10.100.10.100\databoxe2etest_PageBlob

- Azure Files - \\10.100.10.100\databoxe2etest_AzFile

Enter the password for the share when prompted. The following sample can be used to connect to the BlockBlob share on a Data Box that has the IP address 10.100.10.100.

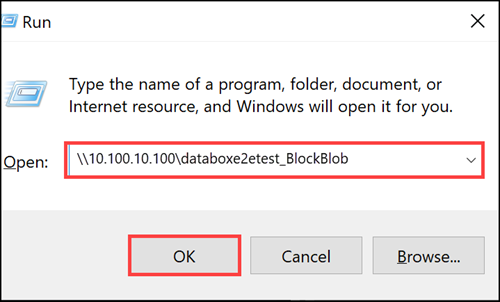

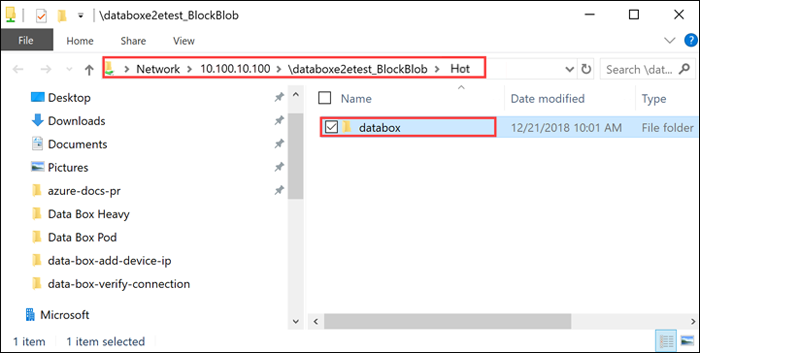

net use \\10.100.10.100\databoxe2etest_BlockBlob /u:databoxe2etest
Enter the password for 'databoxe2etest' to connect to '10.100.10.100':
The command completed successfully.

Press Windows + R. In the Run window, specify \\<device IP address>. Select OK to open File Explorer.

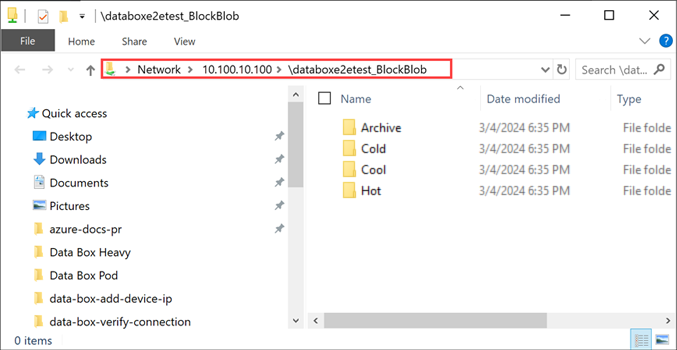

You should now see the shares as folders. In this example, the BlockBlob share is used, so the four folders representing the four available access tiers are present. These folders aren't available in other shares.

Always create a folder under the share for the files that you intend to copy, and then copy the files to that folder. You can't copy files directly to the root folder in the storage account. Any folders created under the PageBlob share represent containers into which data is uploaded as blobs. Similarly, any subfolders created within the access tier folders in the BlockBlob share also represent blob storage containers. Folders created within the AzFile share represent file shares.

Folders created at the root of the BlockBlob share are created as blob containers. The access tier of these containers is inherited from the storage account.

Connect on a Linux system

If you're using a Linux client, use the following command to mount the SMB share.

sudo mount -t cifs -o vers=2.1,username=<user name for the share> //10.126.76.172/databoxe2etest_BlockBlob /home/databoxubuntuhost/databox

The vers parameter is the version of SMB that your Linux host supports. Substitute the appropriate version in the above command.

For versions of SMB that the Data Box Heavy supports, see Supported file systems for Linux clients.

Copy data to Data Box Heavy

Once you're connected to the Data Box Heavy shares, the next step is to copy data.

Copy considerations

Before you begin the data copy, review the following considerations:

Make sure that you copy the data to shares that correspond to the appropriate data format. For instance, copy block blob data to the share for block blobs, and copy VHDs to the page blob share.

If the data format doesn't match the appropriate share type, then at a later step, the data upload to Azure will fail.

While copying data, make sure that the data size conforms to the size limits described in the Azure storage and Data Box Heavy limits.

If data that Data Box Heavy is uploading is concurrently uploaded by other applications outside of Data Box Heavy, the result could be upload job failures and data corruption.

We recommend that:

- You don't use both SMB and NFS at the same time.

- You don't copy the same data to the same end destination on Azure.

In these cases, the final outcome can't be determined.

Always create a folder under the share for the files that you intend to copy, and then copy the files to that folder. The folder created under the block blob and page blob shares represents a container to which the data is uploaded as blobs. You can't copy files directly to the root folder in the storage account.

After you've connected to the SMB share, begin data copy.

You can use any SMB-compatible file copy tool, such as Robocopy, to copy your data. Multiple copy jobs can be initiated using Robocopy. Use the following command:

robocopy <Source> <Target> * /e /r:3 /w:60 /is /nfl /ndl /np /MT:32 /fft /Log+:<LogFile>

Set /MT to 32 or 64 threads. The attributes are described in the following table.

| Attribute | Description |
|---|---|
| `/e` | Copies subdirectories, including empty directories. |
| `/r:` | Specifies the number of retries on failed copies. |
| `/w:` | Specifies the wait time between retries, in seconds. |
| `/is` | Includes the same files. |
| `/nfl` | Specifies that file names aren't to be logged. |
| `/ndl` | Specifies that directory names aren't to be logged. |
| `/np` | Specifies that the progress of the copying operation (the number of files or directories copied so far) isn't displayed. Displaying the progress significantly lowers performance. |
| `/MT` | Uses multithreading; 32 or 64 threads are recommended. This option isn't used with encrypted files, so you may need to separate encrypted and unencrypted files. However, single-threaded copy significantly lowers performance. |
| `/fft` | Use to reduce the time stamp granularity for any file system. |
| `/b` | Copies files in Backup mode. |
| `/z` | Copies files in Restart mode; use this if the environment is unstable. This option reduces throughput due to additional logging. |
| `/zb` | Uses Restart mode. If access is denied, this option uses Backup mode. This option reduces throughput due to checkpointing. |
| `/efsraw` | Copies all encrypted files in EFS raw mode. Use only with encrypted files. |
| `/log+:<LogFile>` | Appends the output to the existing log file. |

The following sample shows the output of the robocopy command to copy files to the Data Box Heavy.

C:\Users>Robocopy C:\Git\azure-docs-pr\contributor-guide \\10.100.10.100\devicemanagertest1_AzFile\templates /MT:24
-------------------------------------------------------------------------------
ROBOCOPY :: Robust File Copy for Windows
-------------------------------------------------------------------------------

Started : Thursday, April 4, 2019 2:34:58 PM
Source : C:\Git\azure-docs-pr\contributor-guide\
Dest : \\10.100.10.100\devicemanagertest1_AzFile\templates\

Files : *.*

Options : *.* /DCOPY:DA /COPY:DAT /MT:24 /R:5 /W:60

------------------------------------------------------------------------------

100% New File 206 C:\Git\azure-docs-pr\contributor-guide\article-metadata.md
100% New File 209 C:\Git\azure-docs-pr\contributor-guide\content-channel-guidance.md
100% New File 732 C:\Git\azure-docs-pr\contributor-guide\contributor-guide-index.md
100% New File 199 C:\Git\azure-docs-pr\contributor-guide\contributor-guide-pr-criteria.md
New File 178 C:\Git\azure-docs-pr\contributor-guide\contributor-guide-pull-request-co100% .md
New File 250 C:\Git\azure-docs-pr\contributor-guide\contributor-guide-pull-request-et100% e.md
100% New File 174 C:\Git\azure-docs-pr\contributor-guide\create-images-markdown.md
100% New File 197 C:\Git\azure-docs-pr\contributor-guide\create-links-markdown.md
100% New File 184 C:\Git\azure-docs-pr\contributor-guide\create-tables-markdown.md
100% New File 208 C:\Git\azure-docs-pr\contributor-guide\custom-markdown-extensions.md
100% New File 210 C:\Git\azure-docs-pr\contributor-guide\file-names-and-locations.md
100% New File 234 C:\Git\azure-docs-pr\contributor-guide\git-commands-for-master.md
100% New File 186 C:\Git\azure-docs-pr\contributor-guide\release-branches.md
100% New File 240 C:\Git\azure-docs-pr\contributor-guide\retire-or-rename-an-article.md
100% New File 215 C:\Git\azure-docs-pr\contributor-guide\style-and-voice.md
100% New File 212 C:\Git\azure-docs-pr\contributor-guide\syntax-highlighting-markdown.md
100% New File 207 C:\Git\azure-docs-pr\contributor-guide\tools-and-setup.md
------------------------------------------------------------------------------

Total Copied Skipped Mismatch FAILED Extras
Dirs : 1 1 1 0 0 0
Files : 17 17 0 0 0 0
Bytes : 3.9 k 3.9 k 0 0 0 0
C:\Users>

To optimize performance, use the following robocopy parameters when copying data. (The numbers below represent the best-case scenarios.)

| Platform | Mostly small files < 512 KB | Mostly medium files 512 KB-1 MB | Mostly large files > 1 MB |
|---|---|---|---|
| Data Box Heavy | 6 Robocopy sessions, 24 threads per session | 6 Robocopy sessions, 16 threads per session | 6 Robocopy sessions, 16 threads per session |

For more information on the Robocopy command, go to Robocopy and a few examples.
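The following Python sketch shows one way to apply the table: it picks 24 threads per session when most files are smaller than 512 KB (16 otherwise) and runs at most 6 robocopy sessions concurrently, one per source folder. Treating "mostly" as "more than half", the helper names, and the source, share, and log paths are all hypothetical; adjust them to your environment.

```python
import os
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor

SMALL_FILE_LIMIT = 512 * 1024  # "mostly small files" threshold from the table
SESSIONS = 6                   # concurrent robocopy sessions from the table


def threads_per_session(file_sizes: list[int]) -> int:
    """Return 24 threads for mostly small files, otherwise 16."""
    small = sum(1 for size in file_sizes if size < SMALL_FILE_LIMIT)
    # Assumption: "mostly" means more than half of the files.
    return 24 if small * 2 > len(file_sizes) else 16


def robocopy_args(source: str, target: str, log_file: str, threads: int) -> list[str]:
    """Build one robocopy command line with the recommended parameters."""
    if not 1 <= threads <= 128:
        raise ValueError("robocopy /MT accepts 1 to 128 threads")
    return [
        "robocopy", source, target, "*",
        "/e", "/r:3", "/w:60", "/is",  # subfolders, 3 retries, 60 s wait, same files
        "/nfl", "/ndl", "/np",         # don't log file or directory names, no progress
        f"/MT:{threads}", "/fft",      # multithreaded copy, coarse time stamps
        f"/log+:{log_file}",           # append output to the log file
    ]


def run_sessions(jobs: list[tuple[str, str, str]], threads: int) -> list[int]:
    """Run one robocopy per (source, target, log) job, at most SESSIONS at a time."""
    def run_one(job: tuple[str, str, str]) -> int:
        return subprocess.run(robocopy_args(*job, threads)).returncode

    with ThreadPoolExecutor(max_workers=SESSIONS) as pool:
        return list(pool.map(run_one, jobs))


if __name__ == "__main__" and sys.platform == "win32":
    # Hypothetical local folders, share path, and log folder; replace with your own.
    sources = [r"D:\data\projects", r"D:\data\archive"]
    share = r"\\10.100.10.100\databoxe2etest_BlockBlob\Hot"
    sizes = [os.path.getsize(os.path.join(folder, name))
             for src in sources
             for folder, _dirs, names in os.walk(src)
             for name in names]
    threads = threads_per_session(sizes)
    jobs = [(src, share + "\\" + os.path.basename(src),
             "C:\\logs\\" + os.path.basename(src) + ".log") for src in sources]
    for job, code in zip(jobs, run_sessions(jobs, threads)):
        # robocopy exit codes of 8 or higher mean that at least one copy failed.
        print(job[0], "failed" if code >= 8 else "ok", code)
```

Each source folder becomes a container folder under the chosen access tier folder, which matches the rule that files can't be copied to the root of a share.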

Open the target folder to view and verify the copied files.

As the data is copied:

- The file names, sizes, and format are validated to ensure those meet the Azure object and storage limits as well as Azure file and container naming conventions.

- To ensure data integrity, a checksum is also computed inline.
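To catch naming problems before the device reports them, you could pre-check the folder names that become blob containers. The following Python sketch tests names against the Azure Blob Storage container naming rules (3 to 63 characters; lowercase letters, digits, and hyphens; must start with a letter or digit; no consecutive or trailing hyphens). The helper names are hypothetical, and the sketch covers only container names, not the size or format checks the device also performs.

```python
import re

# Container names: 3-63 characters, lowercase letters, digits, and hyphens;
# first character is a letter or digit; every hyphen must be followed by a
# letter or digit, which rules out "--" and a trailing "-".
_CONTAINER_NAME = re.compile(r"[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9])){2,62}")


def is_valid_container_name(name: str) -> bool:
    return _CONTAINER_NAME.fullmatch(name) is not None


def invalid_container_folders(folder_names: list[str]) -> list[str]:
    """Return the folder names that wouldn't be accepted as container names."""
    return [name for name in folder_names if not is_valid_container_name(name)]


print(invalid_container_folders(["projects", "My_Photos", "a", "logs--2024"]))
# ['My_Photos', 'a', 'logs--2024']
```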

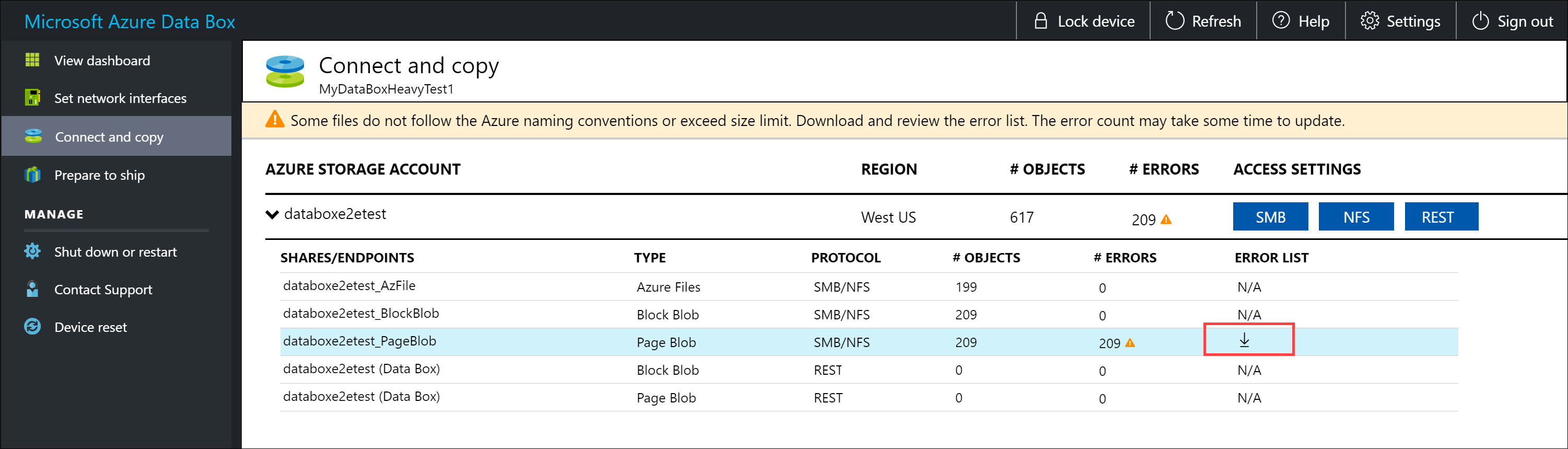

If you have any errors during the copy process, download the error files for troubleshooting. Select the arrow icon to download the error files.

For more information, see View error logs during data copy to Data Box Heavy. For a detailed list of errors during data copy, see Troubleshoot Data Box Heavy issues.

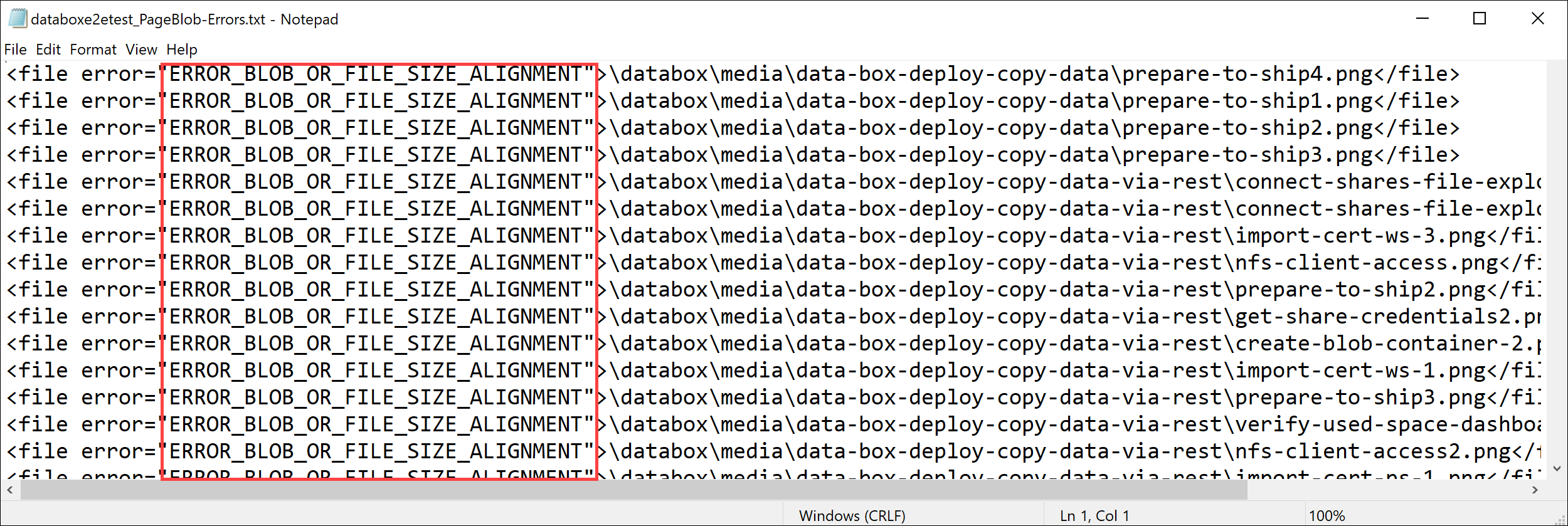

Open the error file in Notepad. The following error file indicates that the data is not aligned correctly.

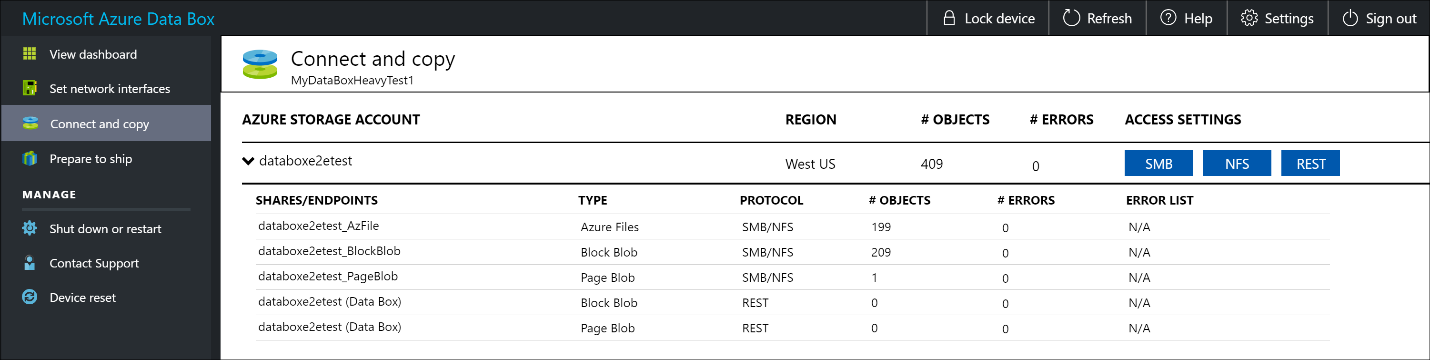

For a page blob, the data needs to be 512-byte aligned. After this data is removed, the error is resolved, as shown in the following screenshot.

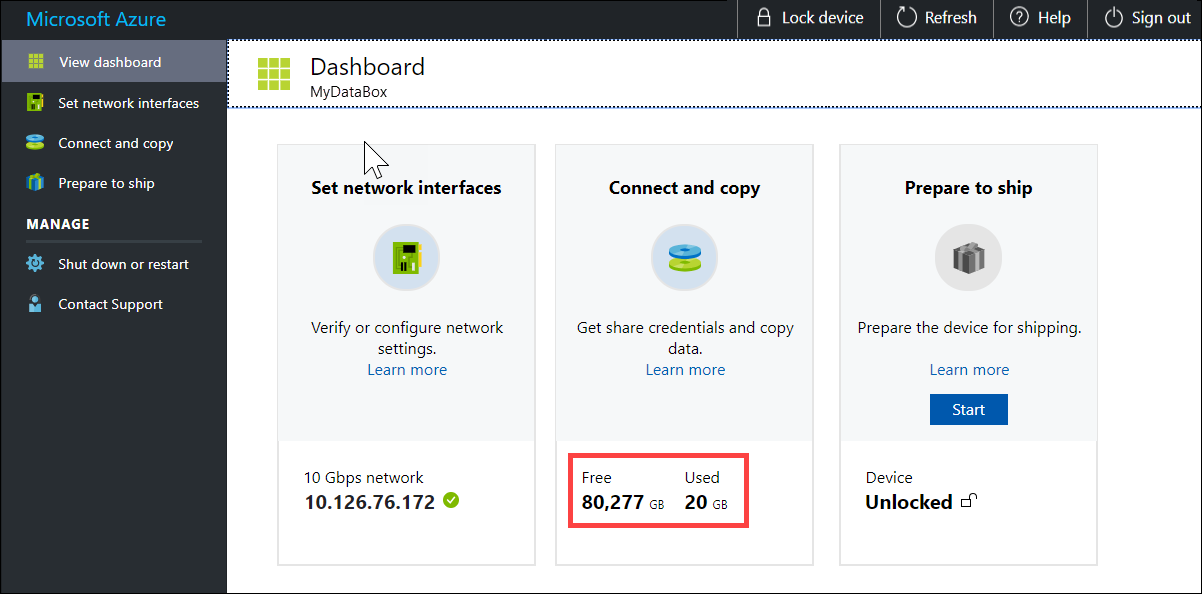
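Before copying VHDs to the page blob share, you could check alignment locally. The following Python sketch walks a local folder and flags files whose size isn't a multiple of 512 bytes. The function names are hypothetical, and a size check is only one way to detect data that isn't 512-byte aligned.

```python
import os

PAGE_SIZE = 512  # page blob data must be 512-byte aligned


def is_page_aligned(size_in_bytes: int) -> bool:
    return size_in_bytes % PAGE_SIZE == 0


def find_misaligned(root: str) -> list[str]:
    """List files under root whose size isn't a multiple of 512 bytes."""
    misaligned = []
    for folder, _dirs, names in os.walk(root):
        for name in names:
            path = os.path.join(folder, name)
            if not is_page_aligned(os.path.getsize(path)):
                misaligned.append(path)
    return misaligned
```

For example, calling find_misaligned on the local folder that holds your VHDs returns the files to fix or exclude before you copy them.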

After the copy is complete, go to the View Dashboard page. Verify the used space and the free space on your device.

Repeat the above steps to copy data to the second node of the device.

Next steps

In this Azure Data Box Heavy tutorial, you learned how to:

- Connect to Data Box Heavy

- Copy data to Data Box Heavy

Advance to the next tutorial to learn how to ship your Data Box Heavy back to Microsoft.

Copy data via SMB

If you're using a Windows host, use the following command to connect to the SMB shares:

\\<IP address of your device>\ShareName

To get the share access credentials, go to the Connect & copy page in the local web UI of the Data Box.

Use an SMB compatible file copy tool such as Robocopy to copy data to shares.

For step-by-step instructions, go to Tutorial: Copy data to Azure Data Box via SMB.

Copy data via NFS

If you're using an NFS host, use the following command to mount the NFS shares:

sudo mount <Data Box device IP>:/<NFS share on Data Box device> <Path to the folder on local Linux computer>

To get the share access credentials, go to the Connect & copy page in the local web UI of the Data Box Heavy.

Use the cp or rsync command to copy your data.

Repeat these steps to connect and copy data to the second node of your Data Box Heavy.

For step-by-step instructions, go to Tutorial: Copy data to Azure Data Box via NFS.

Copy data via REST

- To copy data using Data Box Blob storage via REST APIs, you can connect over HTTP or HTTPS.

- To copy data to Data Box Blob storage, you can use AzCopy.

- Repeat these steps to connect and copy data to the second node of your Data Box Heavy.

For step-by-step instructions, go to Tutorial: Copy data to Azure Data Box Blob storage via REST APIs.

Copy data via data copy service

- To copy data by using the data copy service, you need to create a job. In the local web UI of your Data Box Heavy, go to Manage > Copy data > Create.

- Fill out the parameters and create a job.

- Repeat these steps to connect and copy data to the second node of your Data Box Heavy.

For step-by-step instructions, go to Tutorial: Use the data copy service to copy data into Azure Data Box Heavy.

Copy data to managed disks

- When ordering the Data Box Heavy device, you should have selected managed disks as your storage destination.

- You can connect to Data Box Heavy via SMB or NFS shares.

- You can then copy data via SMB or NFS tools.

- Repeat these steps to connect and copy data to the second node of your Data Box Heavy.

For step-by-step instructions, go to Tutorial: Use Data Box Heavy to import data as managed disks in Azure.