This article describes how to deliver a mission-critical advanced analytical solution with Azure Data Factory. This architecture is an extension of the baseline architecture and the enterprise hardened architecture. This article provides specific guidance about the recommended changes needed to manage a workload as a mission-critical operation.

This architecture aligns with the Cloud Adoption Framework for Azure best practices and guidance and the recommendations for mission-critical workloads.

Create a mission-critical architecture

In the baseline architecture, Contoso operates a medallion lakehouse that supports their first data workloads for the financial department. Contoso hardens and extends this system to support the analytical data needs of the enterprise. This strategy provides data science capabilities and self-service functionality.

In the enterprise hardened architecture, Contoso has implemented a medallion lakehouse architecture that supports their enterprise analytical data needs and enables business users by using a domain model. As Contoso continues its global expansion, the finance department has used Azure Machine Learning to create a deal fraud model. This model now needs further refinement to function as a mission-critical, operational service.

Key requirements

There are several key requirements to deliver a mission-critical advanced analytical solution by using Data Factory:

The machine learning model must be designed as a mission-critical, operational service that is globally available to the various deal-operational systems.

The machine learning model outcomes and performance metrics must be available for retraining and auditing.

The machine learning model auditing trails must be retained for 10 years.

The machine learning model currently targets the US, Europe, and South America, with plans to expand into Asia in the future. The solution must adhere to data compliance requirements, like the General Data Protection Regulation for European countries or regions.

The machine learning model is expected to support up to 1,000 concurrent users in any given region during peak business hours. To minimize costs, the machine learning processing must scale back when not in use.
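
As a rough illustration of the scaling requirement, the following Python sketch sizes one regional deployment for a given number of concurrent users and scales it back to a floor when idle. The per-instance capacity of 50 users and the instance floor and ceiling are assumptions chosen for illustration, not values from this architecture. Determine real values through load testing.

```python
import math


def instances_needed(concurrent_users: int, users_per_instance: int,
                     min_instances: int = 1, max_instances: int = 30) -> int:
    """Return the instance count for the current load, clamped to a floor and a ceiling."""
    if users_per_instance <= 0:
        raise ValueError("users_per_instance must be positive")
    required = math.ceil(concurrent_users / users_per_instance)
    return max(min_instances, min(required, max_instances))


# Peak load: 1,000 concurrent users in one region (from the requirements above).
# users_per_instance=50 is a hypothetical capacity figure.
peak_instances = instances_needed(1000, users_per_instance=50)

# Off-peak: no active users, so the deployment scales back to its floor.
idle_instances = instances_needed(0, users_per_instance=50)
```

The ceiling matters as much as the floor: a load that exceeds the ceiling signals that another scale unit is needed rather than a larger one.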

Key design decisions

A single requirement, such as one mission-critical model, doesn't justify the cost and complexity of redesigning the entire platform to meet mission-critical specifications. The machine learning model should instead be containerized and then deployed to a mission-critical solution. This approach minimizes cost and complexity by isolating the model service and adhering to mission-critical guidance. This design requires the model to be developed on the platform and then containerized for deployment.

After the model is containerized, it can be served out through an API by using a scale-unit architecture in US, European, and South American Azure regions. Only paired regions that have availability zones are in scope, which supports redundancy requirements.
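
The region-selection rule described above can be sketched as a simple filter. This is a minimal Python sketch: the `Region` type, the region names, and the pairing and availability-zone flags are all hypothetical placeholders. Check the current Azure documentation for the real attributes of each region.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set


@dataclass(frozen=True)
class Region:
    name: str
    geography: str
    paired_with: Optional[str]      # None when the region has no paired region
    has_availability_zones: bool


def eligible_regions(candidates: Iterable[Region],
                     target_geographies: Set[str]) -> List[Region]:
    """Keep regions in a target geography that are paired and have availability zones."""
    return [
        region for region in candidates
        if region.geography in target_geographies
        and region.paired_with is not None
        and region.has_availability_zones
    ]


# Hypothetical candidate data for illustration only.
candidates = [
    Region("region-us-1", "US", "region-us-2", True),
    Region("region-eu-1", "Europe", "region-eu-2", True),
    Region("region-eu-3", "Europe", None, True),          # not paired
    Region("region-sa-1", "South America", "region-us-3", False),  # no zones
    Region("region-asia-1", "Asia", "region-asia-2", True),        # out of scope today
]

in_scope = eligible_regions(candidates, {"US", "Europe", "South America"})
```

Adding Asia later would only require extending the set of target geographies, which is the main benefit of treating region selection as data.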

Because the solution exposes a single API service, we recommend that you use the web app for containers feature to host the app. This feature keeps hosting and operations simple. You can also use Azure Kubernetes Service (AKS), which provides more control but increases complexity.

The model is deployed through an MLOps framework. Data Factory is used to move data in and out of the mission-critical implementation.

To do containerization, you need:

An API front end to serve the model results.

A mechanism to offload audit and performance metrics to a storage account. A scheduled Data Factory job can then transfer this data back to the main platform.

Deployment and rollback deployment pipelines, which enable each regional deployment to synchronize with the correct current version of the model.

Service health modeling to measure and manage the overall health of a workload.
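
To make the health modeling item more concrete, the following Python sketch scores individual metrics against thresholds and rolls the results up to a single state, where the worst component state wins. The metric names and threshold values are assumptions for illustration. A real health model derives them from the workload's own requirements.

```python
from enum import IntEnum
from typing import Iterable


class Health(IntEnum):
    HEALTHY = 0
    DEGRADED = 1
    UNHEALTHY = 2


def score_metric(value: float, degraded_at: float, unhealthy_at: float) -> Health:
    """Map a metric where higher is worse (latency, error rate) to a health state."""
    if value >= unhealthy_at:
        return Health.UNHEALTHY
    if value >= degraded_at:
        return Health.DEGRADED
    return Health.HEALTHY


def roll_up(states: Iterable[Health]) -> Health:
    """The overall state is the worst state of any component."""
    return max(states, default=Health.HEALTHY)


# Hypothetical readings for one regional deployment.
api_latency = score_metric(420.0, degraded_at=300.0, unhealthy_at=1000.0)  # p95, in ms
api_errors = score_metric(0.2, degraded_at=1.0, unhealthy_at=5.0)          # percent
stamp_health = roll_up([api_latency, api_errors])
```

The same `roll_up` function can be applied again across regional deployments to produce a global state.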

Audit trails can be initially stored within a Log Analytics workspace for real-time analysis and operational support. After 30 days, or 90 days if using Microsoft Sentinel, audit trails can be automatically transferred to Azure Data Explorer for long-term retention. This approach allows for interactive queries of up to two years within the Log Analytics workspace and the ability to keep older, infrequently used data at a reduced cost for up to 12 years. Use Azure Data Explorer for data storage to enable running cross-platform queries and visualize data across both Azure Data Explorer and Microsoft Sentinel. This approach provides a cost-effective solution for meeting long-term storage requirements while keeping the data available to support teams. If there's no requirement to retain data beyond the required period, consider deleting it.
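
The retention flow can be sketched as a function that decides where an audit record belongs based on its age. This is a minimal Python sketch under stated assumptions: the store labels are hypothetical names, the 10-year period comes from the key requirements, and a year is approximated as 365 days.

```python
from datetime import date


def audit_store_for(record_date: date, today: date,
                    uses_sentinel: bool = False,
                    retention_years: int = 10) -> str:
    """Return the store that should hold an audit record of the given age."""
    age_days = (today - record_date).days
    if age_days < 0:
        raise ValueError("record_date is in the future")

    # Records stay in Log Analytics for 30 days, or 90 days with Microsoft Sentinel.
    hot_days = 90 if uses_sentinel else 30
    if age_days <= hot_days:
        return "log-analytics"

    # Approximation: 365 days per year, which ignores leap days.
    if age_days <= retention_years * 365:
        return "azure-data-explorer"

    return "eligible-for-deletion"


today = date(2025, 6, 1)
recent = audit_store_for(date(2025, 5, 20), today)
sixty_days_old = audit_store_for(date(2025, 4, 2), today)
sixty_days_old_sentinel = audit_store_for(date(2025, 4, 2), today, uses_sentinel=True)
```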

Architecture

Workflow

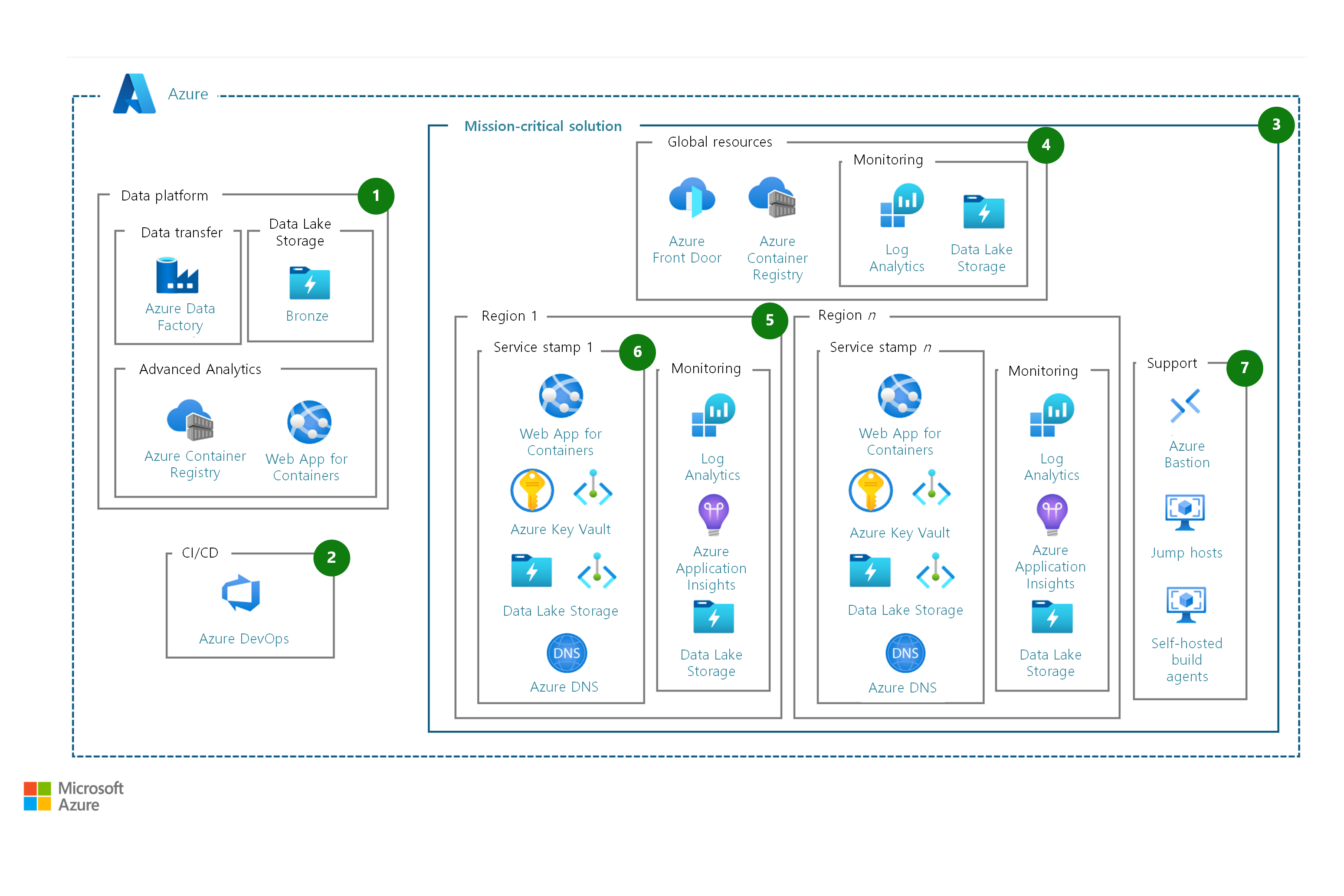

The following workflow corresponds to the preceding diagram:

The machine learning model is developed on the data platform. This design change requires the following updates to the architecture:

Azure Container Registry enables the build, storage, and management of Docker container images and artifacts in a private registry that supports the machine learning model deployment.

The web app for containers feature enables the continuous integration and continuous deployment activities that are required to deliver the machine learning model outputs as an API service.

Data Factory enables the migration of any data required by the model to run and enables the ingestion of model output and performance metrics from the mission-critical implementation.

The data lake bronze layer (or the raw layer) directory structure stores the model output and performance metrics by using the archive tier to meet the data retention requirement.

Azure DevOps orchestrates the deployment of the model codebase and the creation and retirement of regional deployments for all supporting services.
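
The deployment and rollback behavior that the pipelines provide can be modeled in a few lines. The following Python sketch is illustrative only: `ModelRelease`, `out_of_sync`, the version strings, and the region keys are hypothetical and aren't part of Azure DevOps or Container Registry.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ModelRelease:
    """Tracks the model version that every regional deployment should run."""
    current: str
    history: List[str] = field(default_factory=list)

    def deploy(self, version: str) -> None:
        """Promote a new version and remember the previous one for rollback."""
        self.history.append(self.current)
        self.current = version

    def rollback(self) -> None:
        """Return to the most recently replaced version."""
        if not self.history:
            raise RuntimeError("no earlier version to roll back to")
        self.current = self.history.pop()


def out_of_sync(release: ModelRelease, regional_versions: Dict[str, str]) -> List[str]:
    """List the regions whose deployed version differs from the current release."""
    return sorted(region for region, version in regional_versions.items()
                  if version != release.current)


release = ModelRelease(current="1.0.0")
release.deploy("1.1.0")
stale_regions = out_of_sync(release, {"eu": "1.1.0", "us": "1.0.0", "sa": "1.1.0"})
```

A pipeline can run a check like `out_of_sync` after each rollout and redeploy any region that it reports.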

The machine learning model is deployed as a dedicated mission-critical workload within its own defined subscription. This approach ensures that the model avoids any component limits or service limits that the platform might impose.

A set of shared resources span the entire solution and are therefore defined as global:

Container Registry enables the distribution of the current machine learning model version across the regional deployments.

Azure Front Door provides load-balancing services to distribute traffic across regional deployments.

A monitoring capability uses Log Analytics and Azure Data Lake Storage.
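
Conceptually, the global load balancer sends each request to a healthy regional deployment and fails over when a region becomes unhealthy. The following Python sketch illustrates that idea by choosing the healthy origin with the lowest latency. It's a simplified model, not the actual Azure Front Door routing algorithm, and the `Origin` type and latency figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Origin:
    name: str
    healthy: bool
    latency_ms: float


def pick_origin(origins: Iterable[Origin]) -> Optional[str]:
    """Return the lowest-latency healthy origin, or None if every origin is down."""
    healthy = [origin for origin in origins if origin.healthy]
    if not healthy:
        return None
    return min(healthy, key=lambda origin: origin.latency_ms).name


origins = [
    Origin("stamp-eu", healthy=True, latency_ms=35.0),
    Origin("stamp-us", healthy=True, latency_ms=110.0),
    Origin("stamp-sa", healthy=True, latency_ms=190.0),
]
normal_choice = pick_origin(origins)

# Simulate a regional outage: traffic fails over to the next-best region.
origins_after_outage = [Origin("stamp-eu", False, 35.0)] + origins[1:]
failover_choice = pick_origin(origins_after_outage)
```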

The regional deployment stamp is a set of solution components that you can deploy into any target region. This approach provides scale, service resiliency, and regional-specific service.

Depending on the nature of the machine learning model, there might be regional data compliance requirements that require the machine learning model to adhere to sovereignty regulations. This design supports these requirements.

Each regional deployment comes with its own monitoring and storage stack, which provides isolation from the rest of the solution.

The scale unit of the solution has the following components:

The web app for containers feature hosts the machine learning model and serves its outputs. Because it's the core service component in this solution, treat the scale limits for web app for containers as the key constraints. If these limits don't support the solution's requirements, consider using AKS instead.

Azure Key Vault enforces appropriate controls over secrets, certificates, and keys at the regional scope, secured through Azure Private Link.

Data Lake Storage provides data storage, which is secured through Private Link.

Azure DNS provides name resolution that enables service resiliency and simplifies load balancing across the solution.
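
Within a scale unit, the containerized service receives a scoring request, calls the model, and records an audit entry that can later be offloaded to storage. The following Python sketch shows that request path as a plain function so that it stays independent of any web framework. The `predict` stub, the `MODEL_VERSION` value, the payload shape, and the audit fields are all assumptions for illustration.

```python
import json
from datetime import datetime, timezone
from typing import List, Tuple

MODEL_VERSION = "1.0.0"  # hypothetical version label


def predict(features: dict) -> float:
    """Stand-in for the containerized model. Returns a fixed score."""
    return 0.5


def handle_score(body: bytes, region: str, audit_log: List[dict]) -> Tuple[int, bytes]:
    """Score one request and append an audit record. Returns (HTTP status, response body)."""
    try:
        features = json.loads(body)
        if not isinstance(features, dict):
            raise ValueError("payload must be a JSON object")
    except ValueError:
        # json.JSONDecodeError and UnicodeDecodeError are both ValueError subclasses.
        return 400, json.dumps({"error": "invalid request payload"}).encode()

    score = predict(features)
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "region": region,
        "model_version": MODEL_VERSION,
        "score": score,
    })
    return 200, json.dumps({"score": score, "model_version": MODEL_VERSION}).encode()


audit_log: List[dict] = []
status, payload = handle_score(b'{"deal_amount": 1200}', "region-eu", audit_log)
```

Keeping the audit record free of the raw input features is a deliberate choice in this sketch. Whether inputs must be retained depends on the auditing and data compliance requirements.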

To facilitate support and troubleshooting of the solution, the following components are also included:

Azure Bastion provides a secure connection to jump hosts without requiring a public IP address.

Azure Virtual Machines acts as a jump host for the solution, which enables a better security posture.

Self-hosted build agents provide scale and performance to support solution deployments.

Network design


You should use a next-generation firewall like Azure Firewall to secure network connectivity between your on-premises infrastructure and your Azure virtual network.

You can deploy a self-hosted integration runtime (SHIR) on a virtual machine (VM) in your on-premises environment or in Azure. Consider deploying the VM in Azure as part of the shared support resource landing zone to simplify governance and security. You can use the SHIR to securely connect to on-premises data sources and perform data integration tasks in Data Factory.

Machine learning-assisted data labeling doesn't support default storage accounts because they're secured behind a virtual network. First create a storage account for machine learning-assisted data labeling. Then apply the labeling and secure it behind the virtual network.

Private endpoints provide a private IP address from your virtual network to an Azure service. This process effectively brings the service into your virtual network. This functionality makes the service accessible only from your virtual network or connected networks, which ensures a more secure and private connection. Private endpoints use Private Link, which secures the connection to the platform as a service (PaaS) solution. If your workload uses any resources that don't support private endpoints, you might be able to use service endpoints. We recommend that you use private endpoints for mission-critical workloads whenever possible.
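
One practical way to confirm that a private endpoint is in effect is to check that the service's hostname resolves to an address inside your virtual network's address space. The following Python sketch performs that check on an address that's already resolved. The CIDR ranges and IP addresses are hypothetical examples.

```python
import ipaddress
from typing import Iterable


def resolves_inside_vnet(resolved_ip: str, vnet_cidrs: Iterable[str]) -> bool:
    """Return True if the resolved address falls within any virtual network range."""
    address = ipaddress.ip_address(resolved_ip)
    return any(address in ipaddress.ip_network(cidr) for cidr in vnet_cidrs)


# Hypothetical address space for one regional deployment.
vnet_ranges = ["10.1.0.0/16"]

via_private_endpoint = resolves_inside_vnet("10.1.2.4", vnet_ranges)
via_public_endpoint = resolves_inside_vnet("52.1.2.3", vnet_ranges)
```

A check like this can run from the jump host or a build agent as part of post-deployment validation.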

For more information, see Networking and connectivity.

Important

Determine whether your use case is operational, like this scenario, or if it's related to the data platform. If your use case includes the data platform, such as data science or analytics, it might not qualify as mission-critical. Mission-critical workloads require substantial resources and should only be defined as such if they justify the resource investment.

Alternatives

You can use AKS to host the containers. For this use case, the management burden required for AKS makes it a less ideal option.

You can use Azure Container Apps instead of the web app for containers feature. Private endpoints aren't currently supported for Container Apps, but the service can be integrated into an existing or new virtual network.

You can use Azure Traffic Manager as a load-balancing alternative. Azure Front Door is preferred for this scenario because it provides extra functionality and quicker failover.

If the model requires read and write capabilities as part of its data processing, consider using Azure Cosmos DB.

Considerations

These considerations implement the pillars of the Azure Well-Architected Framework, which is a set of guiding tenets that can be used to improve the quality of a workload. For more information, see Well-Architected Framework.

Reliability

Reliability ensures your application can meet the commitments you make to your customers. For more information, see Design review checklist for Reliability.

Compared to the baseline architecture, this architecture:

Aligns with the mission-critical baseline architecture.

Follows the guidance from the mission-critical reliability design considerations.

Deploys an initial health model for the solution to maximize reliability.

Security

Security provides assurances against deliberate attacks and the abuse of your valuable data and systems. For more information, see Design review checklist for Security.

Compared to the baseline architecture, this architecture:

Follows the guidance from the mission-critical security design considerations.

Implements the security guidance from the mission-critical reference architecture.

Cost optimization

Cost optimization is about looking at ways to reduce unnecessary expenses and improve operational efficiencies. For more information, see Design review checklist for Cost Optimization.

Mission-critical designs are expensive, which makes implementing controls important. Some controls include:

Aligning the component SKU selection to the solution scale-unit boundaries to prevent overprovisioning.

Available and practical operating expense-saving benefits, such as Azure reservations for stable workloads, savings plans for dynamic workloads, and Log Analytics commitment tiers.

Cost and budget alerting through Microsoft Cost Management.

Operational excellence

Operational excellence covers the operations processes that deploy an application and keep it running in production. For more information, see Design review checklist for Operational Excellence.

Compared to the baseline architecture, this architecture:

Follows the guidance from the mission-critical operational excellence design considerations.

Separates global and regional monitoring resources to prevent a single point of failure in observability.

Implements the deployment and testing guidance and operational procedures from the mission-critical reference architecture.

Aligns the solution with Azure engineering roadmaps and regional rollouts to account for constantly evolving services in Azure.

Performance efficiency

Performance efficiency is the ability of your workload to scale to meet the demands placed on it by users in an efficient manner. For more information, see Design review checklist for Performance Efficiency.

Compared to the baseline architecture, this architecture:

Follows the guidance from the mission-critical performance efficiency design considerations.

Completes a Well-Architected assessment for mission-critical workloads to provide a baseline of readiness for the solution. Regularly revisit this assessment as part of a proactive cycle of measurement and management.

Antipatterns

The shopping list approach: Business stakeholders are often presented with a shopping list of features and service levels, without the context of cost or complexity. It's important to ensure that any solution is based upon validated requirements and the solution design is supported by financial modeling with options. This approach allows stakeholders to make informed decisions and pivot if necessary.

Not challenging the requirements: Mission-critical designs can be expensive and complex to implement and maintain. Business stakeholders should be questioned about their requirements to ensure that "mission-critical" is truly necessary.

Deploy and forget: The model is deployed without continuous monitoring, updates, or support mechanisms in place. After deployment, the model receives little to no ongoing maintenance and is left to operate in isolation. This neglect can lead to performance degradation, drift in model accuracy, and vulnerability to emerging data patterns. Ultimately, neglect undermines the reliability and effectiveness of the model in serving its intended purpose.