Events

Apr 29, 2 PM - Apr 30, 7 PM

Join the ultimate Windows Server virtual event April 29-30 for deep-dive technical sessions and live Q&A with Microsoft engineers.


This article provides information about how to set up an Azure Local instance in System Center Virtual Machine Manager (VMM). You can deploy an Azure Local instance by provisioning from bare-metal servers or by adding existing hosts. Learn more about the new Azure Local.

VMM 2022 supports Azure Local, version 22H2 (supported from VMM 2022 UR1).

VMM 2019 Update Rollup 3 (UR3) supports Azure Stack HCI, version 20H2. The current product is Azure Local, version 23H2.

Important

Azure Local instances that are managed by Virtual Machine Manager must not join the preview channel yet. System Center (including Virtual Machine Manager, Operations Manager, and other components) does not currently support Azure Local preview versions. For the latest updates, see the System Center blog.

For VMM 2019, ensure that you're running VMM 2019 UR3 or later.

What’s supported?

Addition, creation, and management of Azure Local instances. See detailed steps to create and manage Azure Local instances.

Ability to provision and deploy VMs on the Azure Local instances and perform VM life cycle operations. VMs can be provisioned using VHD(x) files, templates, or from an existing VM. Learn more.

Deployment and management of SDN network controller on Azure Local instances.

Management of storage pool settings, creation of virtual disks, creation of cluster shared volumes (CSVs), and application of QoS settings.

You can move VMs between Windows Server and Azure Local instances by using network migration of an offline (shut down) VM. In this scenario, VMM performs an export and import under the hood, even though it appears as a single operation.

The PowerShell cmdlets used to manage Windows Server clusters can be used to manage Azure Local instances as well.

For VMM 2022, ensure that you're running VMM 2022 UR1 or later.

What’s supported?

All the capabilities listed above for VMM 2019 UR3 are also supported in VMM 2022 UR1 and later. In addition, VMM 2022 provides PowerShell cmdlets to register and unregister Azure Local instances.

Register and unregister Azure Local instances

With VMM 2022, we're introducing VMM PowerShell cmdlets to register and unregister Azure Local instances.

Use the following cmdlet to register an Azure Local instance:

Register-SCAzStackHCI -VMHostCluster <HostCluster> -SubscriptionID <string>

Use the following command to unregister a cluster:

Unregister-SCAzStackHCI -VMHostCluster <HostCluster> -SubscriptionID <string>

For detailed information on the supported parameters, see Register-SCAzStackHCI and Unregister-SCAzStackHCI.
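Register-SCAzStackHCI takes a cluster object and a subscription ID. A thin wrapper that checks the ID format before it calls VMM can catch typos early. The sketch below is illustrative and makes several assumptions:

- The VMM 2022 PowerShell module is loaded.
- `Test-AzSubscriptionIdFormat` and `Register-AzureLocalCluster` are hypothetical helper names.
- The only VMM cmdlets it calls are `Get-SCVMHostCluster` and the `Register-SCAzStackHCI` cmdlet shown above.

```powershell
function Test-AzSubscriptionIdFormat {
    # Hypothetical helper: returns $true only for the canonical 36-character GUID
    # form (xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx) used for Azure subscription IDs.
    param([Parameter(Mandatory)][string]$SubscriptionId)

    $parsed = [guid]::Empty
    return ($SubscriptionId.Length -eq 36) -and [guid]::TryParse($SubscriptionId, [ref]$parsed)
}

function Register-AzureLocalCluster {
    # Hypothetical wrapper: validates the ID first, then calls the VMM 2022 cmdlets.
    # Needs the VMM PowerShell module and a VMM server connection when invoked.
    param(
        [Parameter(Mandatory)][string]$ClusterName,
        [Parameter(Mandatory)][string]$SubscriptionId
    )

    if (-not (Test-AzSubscriptionIdFormat -SubscriptionId $SubscriptionId)) {
        throw "'$SubscriptionId' is not a valid subscription ID (expected a GUID)."
    }
    $cluster = Get-SCVMHostCluster -Name $ClusterName
    if (-not $cluster) {
        throw "Cluster '$ClusterName' was not found in the VMM fabric."
    }
    Register-SCAzStackHCI -VMHostCluster $cluster -SubscriptionID $SubscriptionId
}
```

For example, `Register-AzureLocalCluster -ClusterName 'HCI-Cluster01' -SubscriptionId '<subscription ID>'` registers a cluster. The cluster name here is a placeholder. The same pattern applies to Unregister-SCAzStackHCI.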

What’s not supported?

Management of Azure Local stretched clusters is currently not supported in VMM.

Azure Local machines are intended as virtualization hosts where you run all your workloads in virtual machines. The Azure Local terms allow you to run only what's necessary for hosting virtual machines. Azure Local instances shouldn't be used for other purposes like WSUS servers, WDS servers, or library servers. Refer to Use cases for Azure Local, When to use Azure Local, and Roles you can run without virtualizing.

Live migration between any version of Windows Server and Azure Local instances isn't supported.

Note

Live migration between Azure Local instances works, as well as between Windows Server clusters.
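The migration rules in this article are spread across several notes. The hypothetical `Test-VmmMigrationPath` function below is an illustration only and doesn't query VMM. It encodes just those statements:

- Live migration works only within the same platform.
- Network migration of a shut-down VM works from Windows Server (2019 and later) to Azure Local, but not the reverse.
- Any combination the article doesn't address returns `'NotCovered'`.

```powershell
function Test-VmmMigrationPath {
    # Hypothetical helper: returns 'Supported', 'NotSupported', or 'NotCovered'
    # based only on the rules stated in this article.
    param(
        [Parameter(Mandatory)][ValidateSet('WindowsServer', 'AzureLocal')][string]$Source,
        [Parameter(Mandatory)][ValidateSet('WindowsServer', 'AzureLocal')][string]$Target,
        [Parameter(Mandatory)][ValidateSet('Live', 'Network')][string]$Method
    )

    if ($Method -eq 'Live') {
        # Live migration: Azure Local <-> Azure Local and Windows Server <-> Windows Server only.
        if ($Source -eq $Target) { return 'Supported' }
        return 'NotSupported'
    }

    # Network migration (VM shut down; VMM exports and imports under the hood).
    if ($Source -eq 'WindowsServer' -and $Target -eq 'AzureLocal') { return 'Supported' }
    if ($Source -eq 'AzureLocal' -and $Target -eq 'WindowsServer') { return 'NotSupported' }
    return 'NotCovered'
}
```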

Note

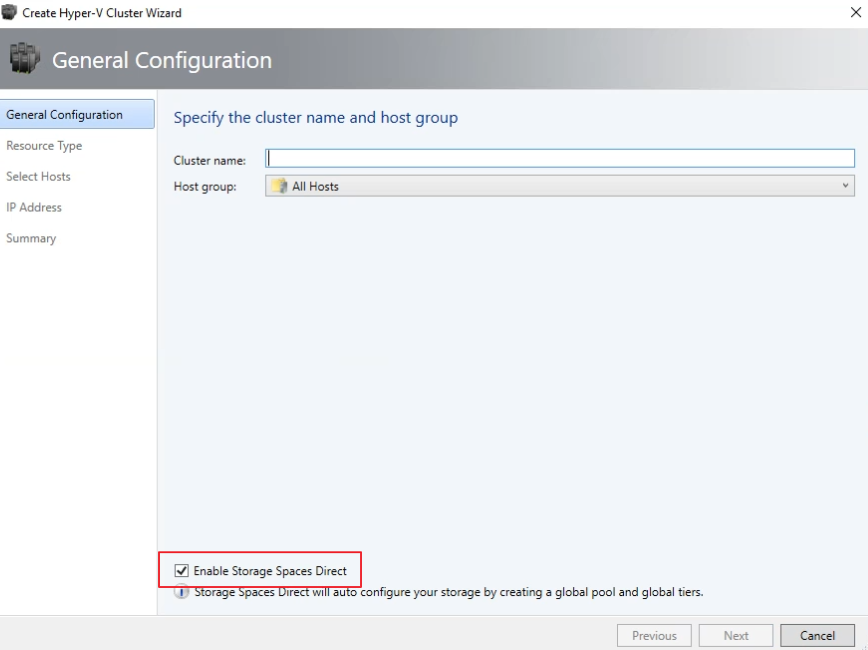

You must enable S2D when creating an Azure Local instance. To enable S2D, in the cluster creation wizard, go to General Configuration. Under Specify the cluster name and host group, select Enable Storage Spaces Direct as shown below:

After you enable a cluster with S2D, VMM does the following:

When you use VMM to create a hyper-converged cluster, the pool and the storage tiers are automatically created by running Enable-ClusterStorageSpacesDirect -Autoconfig $True.

After these prerequisites are in place, you provision a cluster and set up storage resources on it. You can then deploy VMs on the cluster.

Follow these steps:

You can provision a cluster from Hyper-V hosts or from bare-metal machines:

If you need to add the Azure Local machines to the VMM fabric, follow these steps. If they’re already in the VMM fabric, skip to the next step.

Note

Typically, an S2D node requires RDMA, QoS, and Switch Embedded Teaming (SET) settings. To configure these settings for a node provisioned from bare-metal computers, you can use the post-deployment script capability in the physical computer profile (PCP). Here's the sample PCP post-deployment script. You can also use this script to configure RDMA, QoS, and SET while adding a new node from bare-metal computers to an existing S2D deployment.

Note

After the cluster is provisioned and managed in the VMM fabric, you need to set up networking for cluster nodes.

Note

Configuring Data Center Bridging (DCB) settings is an optional step in the S2D cluster creation workflow that helps achieve high performance. Skip to step 4 if you don't want to configure DCB settings.

If you have vNICs deployed, we recommend that you map each vNIC to a corresponding pNIC for optimal performance. The operating system sets vNIC-to-pNIC affinity randomly, so multiple vNICs can end up mapped to the same pNIC. To avoid this, we recommend that you set the affinity between vNICs and pNICs manually by following the steps listed here.
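One simple way to keep several vNICs off the same pNIC is to assign them round-robin. The hypothetical `New-VNicAffinityPlan` function below only builds the mapping. The commented lines show how the plan could be applied with the Hyper-V `Set-VMNetworkAdapterTeamMapping` cmdlet. That step assumes host (management OS) vNICs on a SET switch, and the adapter names are placeholders.

```powershell
function New-VNicAffinityPlan {
    # Hypothetical helper: pairs host vNICs with pNICs round-robin, so no pNIC
    # receives a second vNIC until every pNIC has one. It only returns the plan.
    param(
        [Parameter(Mandatory)][string[]]$VNicNames,
        [Parameter(Mandatory)][string[]]$PNicNames
    )

    for ($i = 0; $i -lt $VNicNames.Count; $i++) {
        [pscustomobject]@{
            VNic = $VNicNames[$i]
            PNic = $PNicNames[$i % $PNicNames.Count]
        }
    }
}

# Applying the plan on a host (Hyper-V module; names are placeholders):
# New-VNicAffinityPlan -VNicNames 'SMB1', 'SMB2' -PNicNames 'NIC1', 'NIC2' | ForEach-Object {
#     Set-VMNetworkAdapterTeamMapping -ManagementOS -VMNetworkAdapterName $_.VNic -PhysicalNetAdapterName $_.PNic
# }
```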

When you create a network adapter port profile, we recommend that you allow IEEE priority. Learn more.

You can also set the IEEE priority by using the following PowerShell commands:

Set-VMNetworkAdapterVlan -VMNetworkAdapterName 'SMB2' -VlanId '101' -Access -ManagementOS

Set-VMNetworkAdapter -ManagementOS -Name 'SMB2' -IeeePriorityTag on

Use the following steps to configure DCB settings:

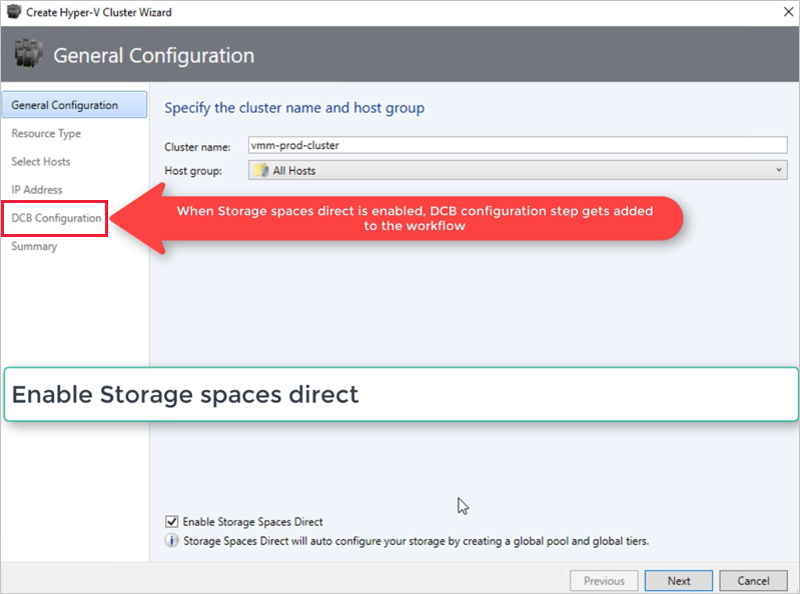

Create a new Hyper-V cluster and select Enable Storage Spaces Direct. The DCB Configuration option is added to the Hyper-V cluster creation workflow.

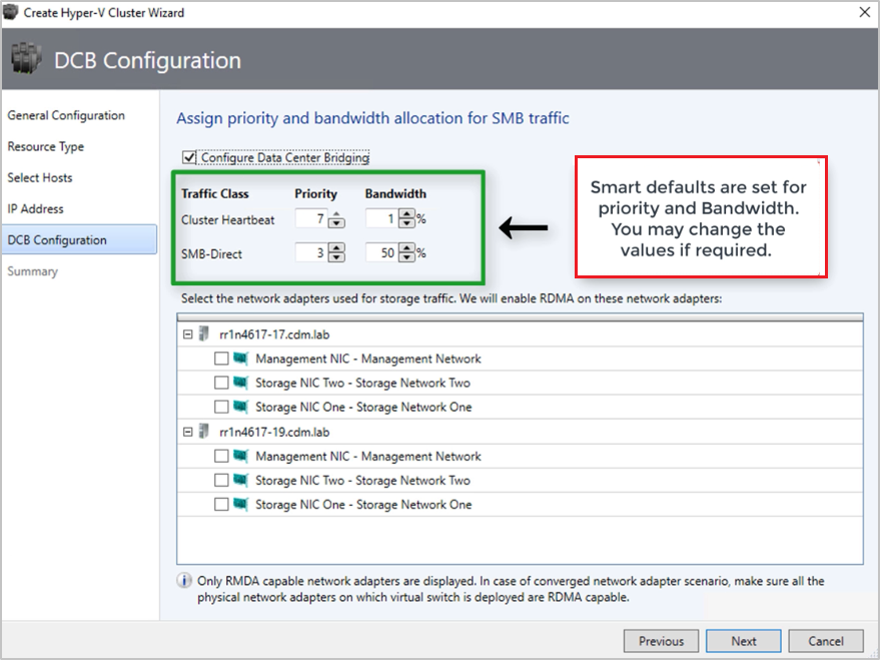

In DCB configuration, select Configure Data Center Bridging.

Provide Priority and Bandwidth values for SMB-Direct and Cluster Heartbeat traffic.

Note

Default values are assigned to Priority and Bandwidth. Customize these values based on your organization's environment needs.

Default values:

| Traffic Class | Priority | Bandwidth (%) |
|---|---|---|
| Cluster Heartbeat | 7 | 1 |
| SMB-Direct | 3 | 50 |
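Before you change the defaults, it can help to sanity-check your values. The sketch below assumes three constraints. They are our own reading, not VMM's documented validation:

- An IEEE 802.1p priority is 0 through 7.
- Each traffic class uses a distinct priority.
- Reserved bandwidth totals no more than 100%.

`Test-DcbTrafficClasses` is a hypothetical helper that returns a list of problems, and it returns nothing when the values pass.

```powershell
function Test-DcbTrafficClasses {
    # Hypothetical helper: each input object has Name, Priority, and Bandwidth (percent).
    param([Parameter(Mandatory)][object[]]$TrafficClasses)

    $problems = @()
    foreach ($tc in $TrafficClasses) {
        # IEEE 802.1p priority is a 3-bit field, so valid values are 0 through 7.
        if ($tc.Priority -lt 0 -or $tc.Priority -gt 7) {
            $problems += "$($tc.Name): priority $($tc.Priority) is outside 0-7"
        }
        if ($tc.Bandwidth -le 0 -or $tc.Bandwidth -gt 100) {
            $problems += "$($tc.Name): bandwidth $($tc.Bandwidth)% is outside 1-100"
        }
    }

    # Assumption: each traffic class should map to its own priority.
    $duplicates = $TrafficClasses | Group-Object -Property Priority | Where-Object { $_.Count -gt 1 }
    foreach ($group in $duplicates) {
        $problems += "priority $($group.Name) is used by more than one traffic class"
    }

    $total = ($TrafficClasses | Measure-Object -Property Bandwidth -Sum).Sum
    if ($total -gt 100) {
        $problems += "total reserved bandwidth $total% exceeds 100%"
    }
    $problems
}

# The default values from the table above.
$defaults = @(
    [pscustomobject]@{ Name = 'Cluster Heartbeat'; Priority = 7; Bandwidth = 1 },
    [pscustomobject]@{ Name = 'SMB-Direct'; Priority = 3; Bandwidth = 50 }
)
```

On a Windows host, the same priority and bandwidth values map to parameters of the NetQos cmdlets. For example, `New-NetQosTrafficClass` takes `-Priority`, `-BandwidthPercentage`, and `-Algorithm ETS`. This sketch doesn't call them.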

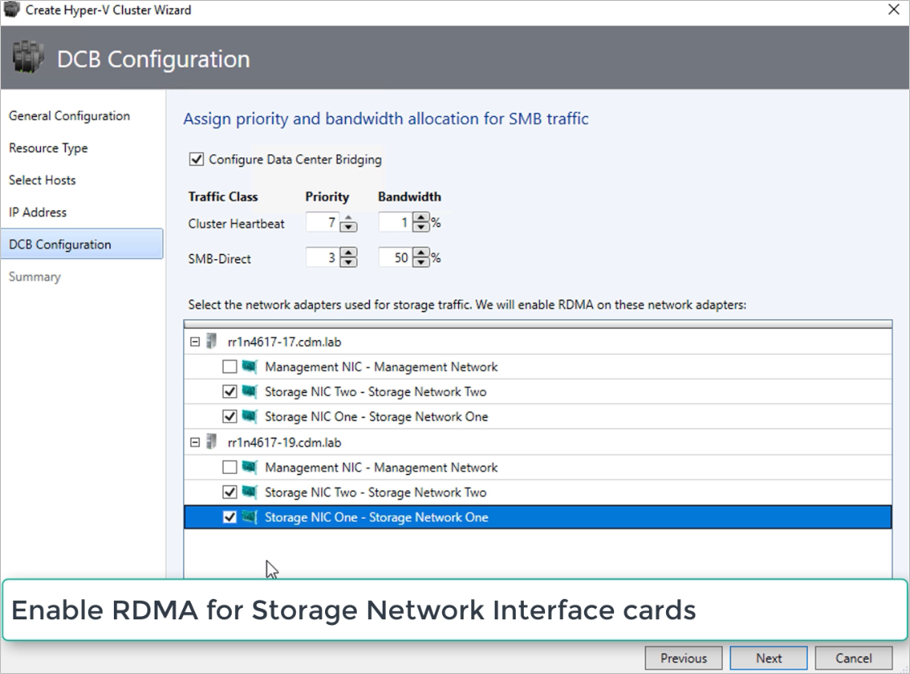

Select the network adapters used for storage traffic. RDMA is enabled on these network adapters.

Note

In a converged NIC scenario, select the storage vNICs. The underlying pNICs must be RDMA capable for vNICs to be displayed and available for selection.

Review the summary and select Finish.

VMM creates the Azure Local instance and configures the DCB parameters on all the S2D nodes.

Note

After you create an Azure Local instance, you must register it with Azure within 30 days of installation, per the Azure Online Service terms. If you're using System Center 2022, use the Register-SCAzStackHCI cmdlet in VMM to register the Azure Local instance with Azure. Alternatively, follow these steps to register the Azure Local instance with Azure.

The registration status is reflected in VMM after a successful cluster refresh.
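The 30-day registration window is plain date arithmetic. As a sketch, the hypothetical `Get-AzureLocalRegistrationDeadline` function computes the deadline from an installation date that you supply. It doesn't read the installation date from the cluster.

```powershell
function Get-AzureLocalRegistrationDeadline {
    # Hypothetical helper: registration is due 30 days after installation.
    param(
        [Parameter(Mandatory)][datetime]$InstallDate,
        [datetime]$Now = (Get-Date)
    )

    $deadline = $InstallDate.AddDays(30)
    [pscustomobject]@{
        Deadline      = $deadline
        # Whole days left; negative once the deadline has passed.
        DaysRemaining = [math]::Floor(($deadline - $Now).TotalDays)
        Overdue       = $Now -gt $deadline
    }
}
```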

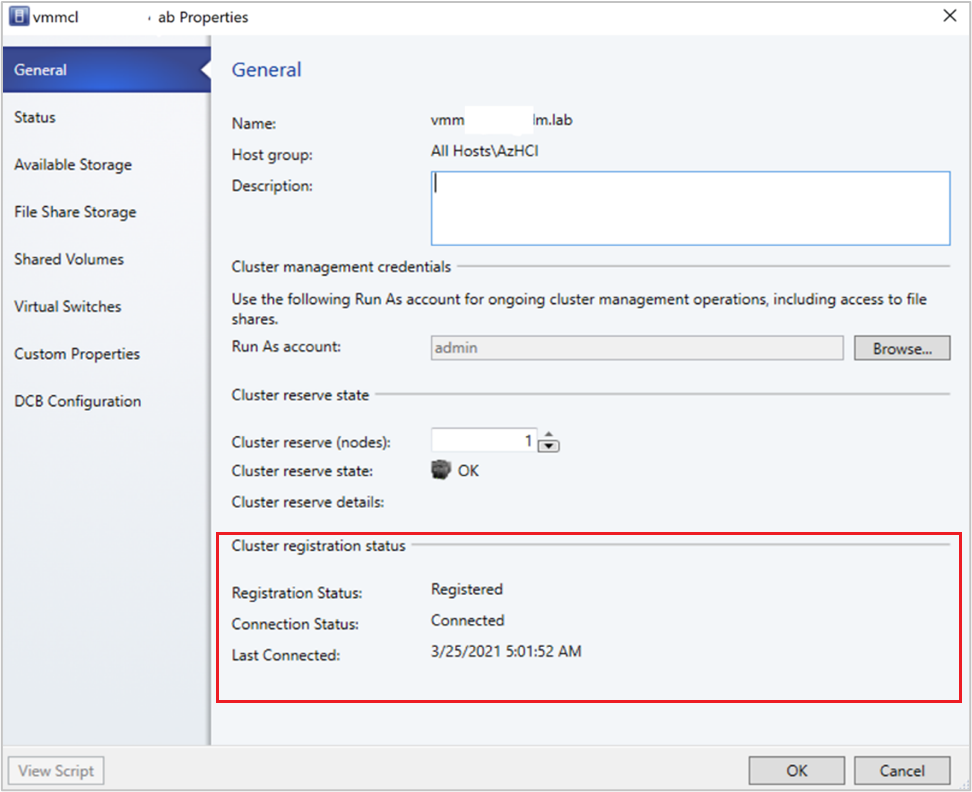

In the VMM console, you can view the registration status and last connected date of Azure Stack HCI clusters.

Select Fabric, right-click the Azure Stack HCI cluster, and select Properties.

Alternatively, run Get-SCVMHost and check the properties of the returned object to see the registration status.

You can now modify the storage pool settings and create virtual disks and CSVs.

Select Fabric > Storage > Arrays.

Right-click the cluster > Manage Pool, and select the storage pool that was created by default. You can change the default name and add a classification.

To create a CSV, right-click the cluster > Properties > Shared Volumes.

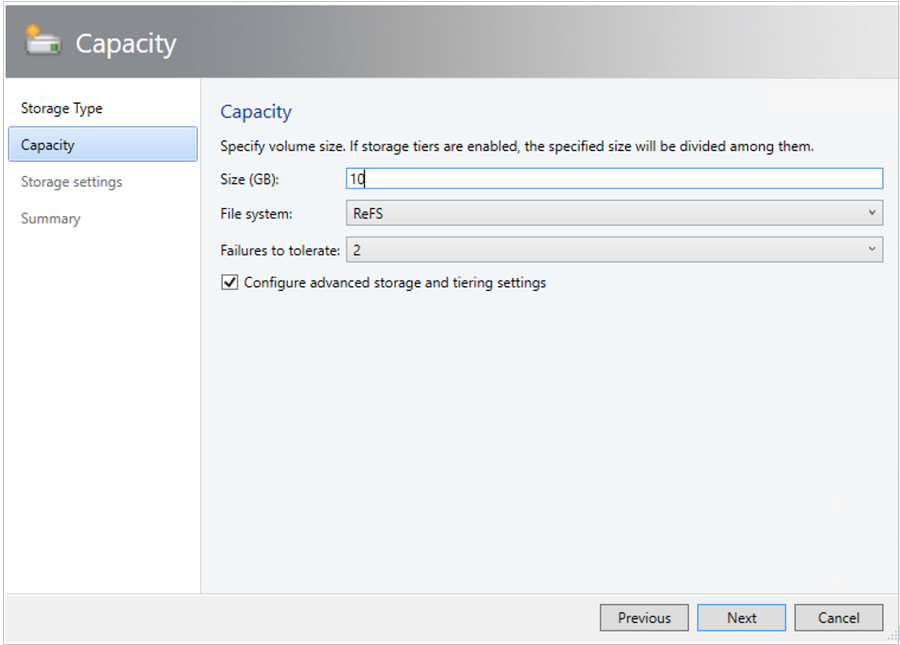

In the Create Volume Wizard > Storage Type, specify the volume name and select the storage pool.

In Capacity, you can specify the volume size, file system, and resiliency (Failures to tolerate) settings. Select Configure advanced storage and tiering settings to set up these options.

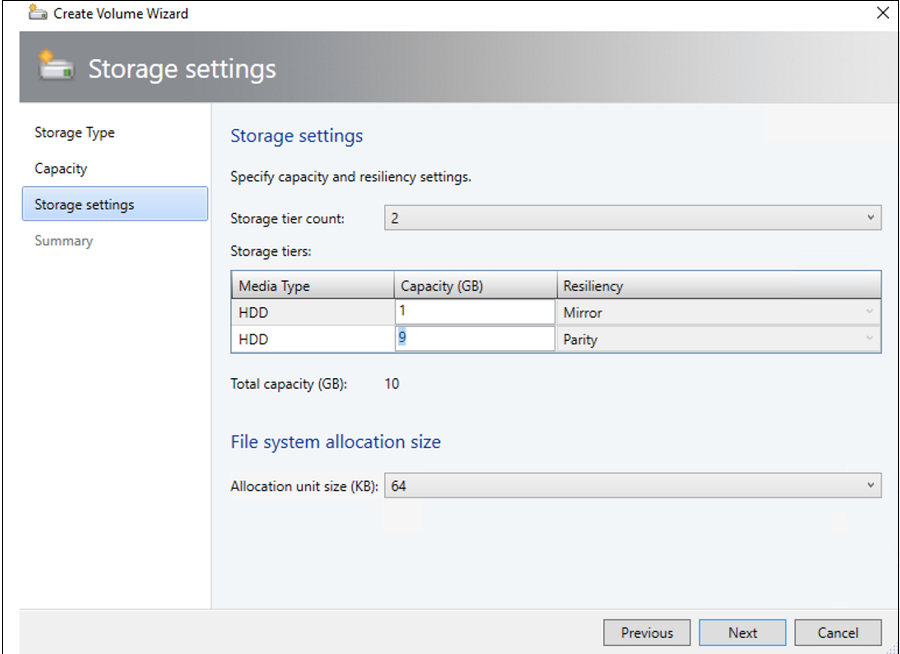

In Storage settings, you can specify the storage tier split, capacity, and resiliency.

In Summary, verify settings and finish the wizard. A virtual disk will be created automatically when you create the volume.
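The resiliency (Failures to tolerate) setting in Capacity determines how much pool space a volume consumes. For mirror resiliency, tolerating N failures means keeping N + 1 copies of the data: a two-way mirror for one failure and a three-way mirror for two. The hypothetical `Get-S2DMirrorFootprint` function below does that arithmetic. It doesn't model parity, mirror-accelerated parity, tiering splits, or reserve capacity.

```powershell
function Get-S2DMirrorFootprint {
    # Hypothetical helper: pool space consumed by a mirrored S2D volume.
    param(
        [Parameter(Mandatory)][double]$VolumeSizeGB,
        [Parameter(Mandatory)][ValidateRange(1, 2)][int]$FailuresToTolerate
    )

    # A mirror that tolerates N failures keeps N + 1 copies of the data.
    $copies = $FailuresToTolerate + 1
    [pscustomobject]@{
        Copies          = $copies
        PoolFootprintGB = $VolumeSizeGB * $copies
        EfficiencyPct   = [math]::Round(100.0 / $copies, 1)
    }
}
```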

In a hyper-converged topology, VMs can be directly deployed on the cluster. Their virtual hard disks are placed on the volumes you created using S2D. You create and deploy these VMs just as you would create any other VM.

Important

If the Azure Local instance isn't registered with Azure, or hasn't connected to Azure for more than 30 days after registration, high availability virtual machine (HAVM) creation is blocked on the cluster. Refer to steps 4 and 5 for cluster registration.
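The blocking rule above can be expressed as a small predicate. This sketch assumes that you already know whether the cluster is registered and when it last connected, for example from the cluster properties in the VMM console. `Test-HavmCreationAllowed` is a hypothetical name. It treats exactly 30 days since the last connection as still allowed, which is our reading of "more than 30 days".

```powershell
function Test-HavmCreationAllowed {
    # Hypothetical helper: $false when the cluster is unregistered or hasn't
    # connected to Azure for more than 30 days.
    param(
        [Parameter(Mandatory)][bool]$IsRegistered,
        [Nullable[datetime]]$LastConnected,
        [datetime]$Now = (Get-Date)
    )

    if (-not $IsRegistered) { return $false }
    if ($null -eq $LastConnected) { return $false }
    return ($Now - $LastConnected).TotalDays -le 30
}
```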

Use the network migration functionality in VMM to migrate workloads from Hyper-V (Windows Server 2019 and later) to Azure Local.

Note

Live migration between Windows Server and Azure Local isn’t supported. Network migration from Azure Local to Windows Server isn’t supported.

VMM offers a simple wizard-based experience for V2V (Virtual to Virtual) conversion. You can use the conversion tool to migrate workloads at scale from VMware infrastructure to Hyper-V infrastructure. For the list of supported VMware servers, see System requirements.

For prerequisites and limitations for the conversion, see Convert a VMware VM to Hyper-V in the VMM fabric.

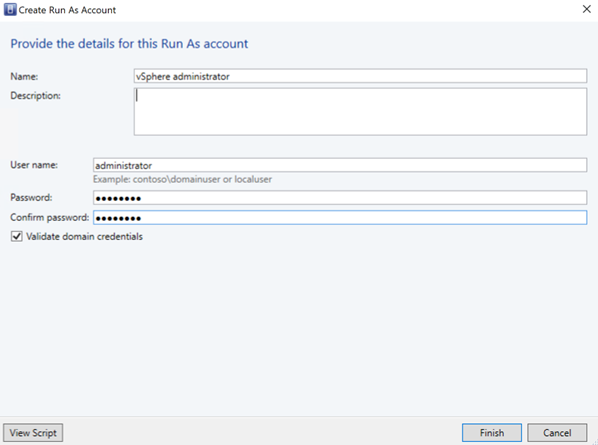

Create a Run As account for the vCenter Server Administrator role in VMM. These administrator credentials are used to manage the vCenter server and ESXi hosts.

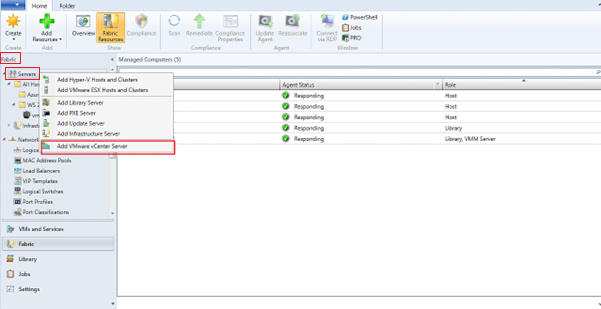

In the VMM console, under Fabric, select Servers > Add VMware vCenter Server.

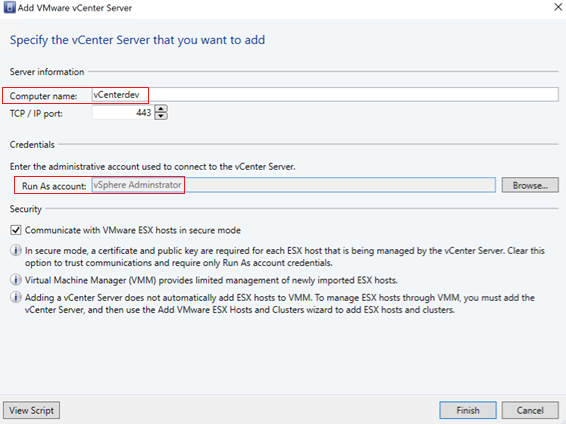

In the Add VMware vCenter Server page, do the following:

Computer name: Specify the vCenter server name.

Run As account: Select the Run As account created for vSphere administrator.

Select Finish.

In the Import Certificate page, select Import.

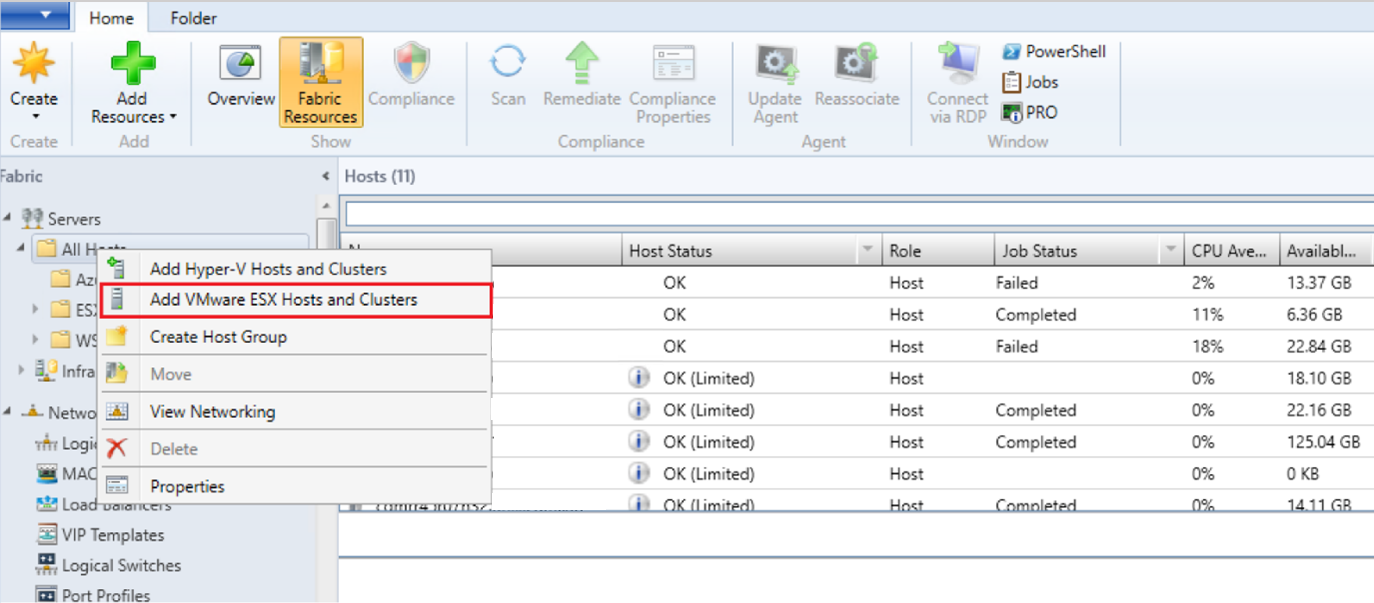

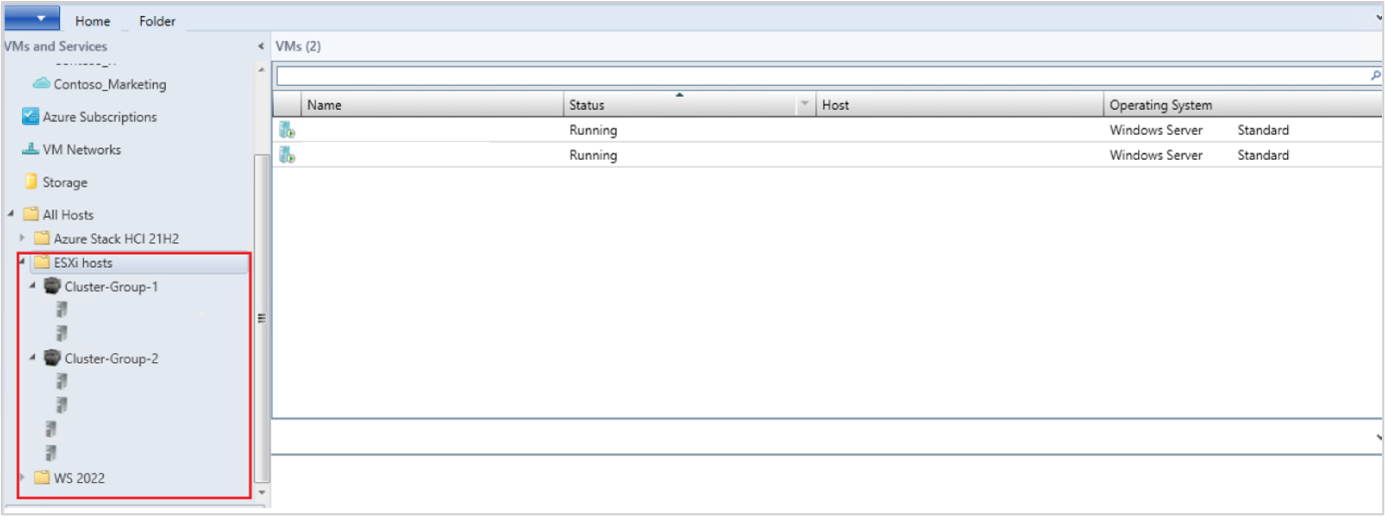

After the successful addition of the vCenter server, all the ESXi hosts under the vCenter are migrated to VMM.

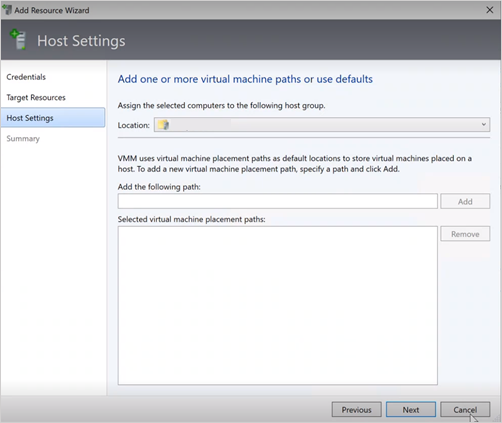

Under Credentials, select the Run As account that is used for the port and select Next.

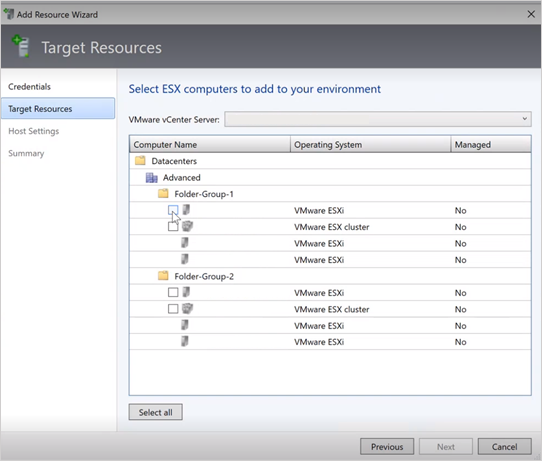

Under Target Resources, select all the ESX clusters that need to be added to VMM and select Next.

Under Host Settings, select the location where you want to add the VMs and select Next.

Under Summary, review the settings and select Finish. The VMs associated with the hosts are added as well.

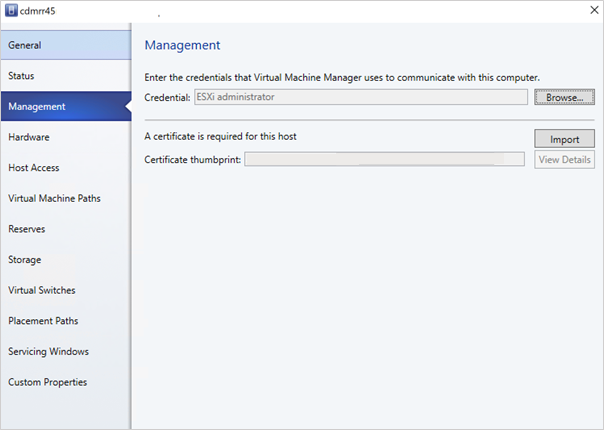

If the ESXi host status shows OK (Limited), right-click the host, select Properties > Management, select the Run As account that is used for the port, and import the certificates for the host.

Repeat the same process for all the ESXi hosts.

After you add the ESXi clusters, all the virtual machines running on the ESXi clusters are automatically discovered in VMM.

Go to VMs and Services to view the virtual machines.

You can also manage the primary lifecycle operations of these virtual machines from VMM.

Right-click each VM that needs to be migrated and select Power Off (online migrations aren't supported). Then uninstall VMware Tools from the guest operating system.

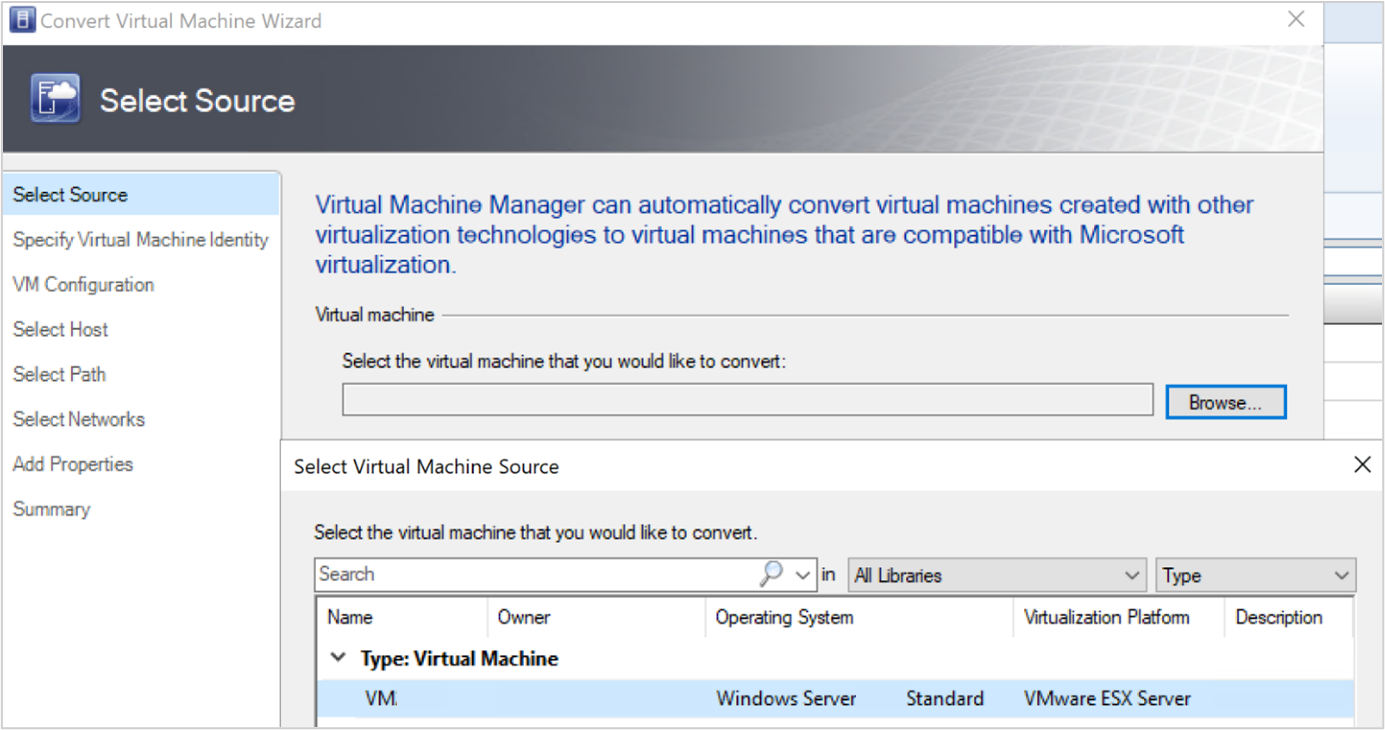

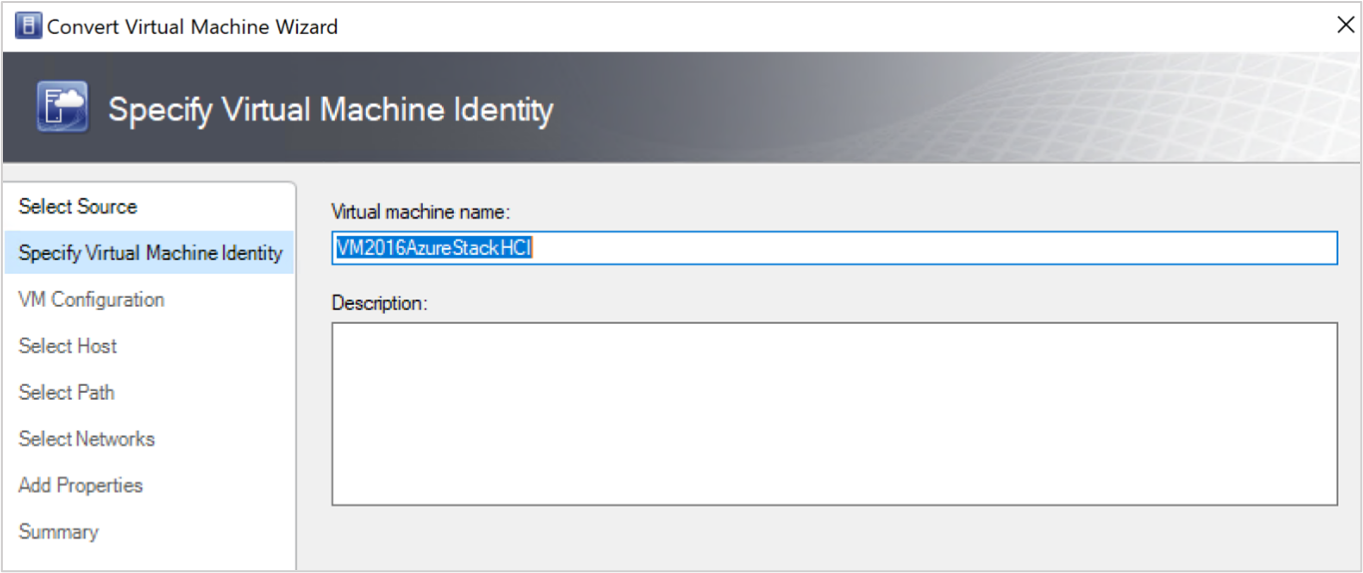

Select Home > Create Virtual Machines > Convert Virtual Machine.

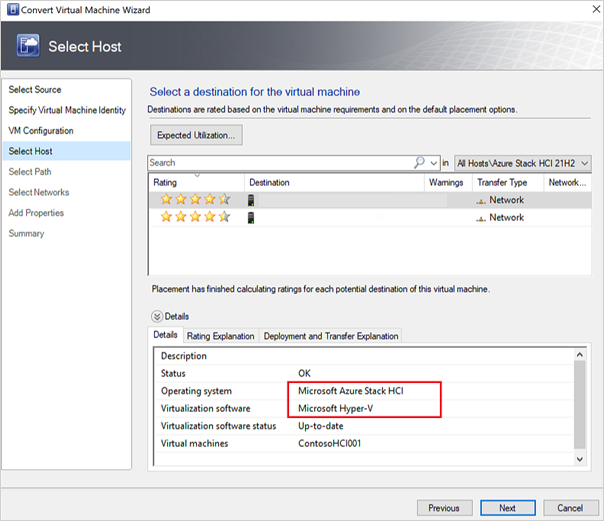

In the Convert Virtual Machine Wizard,

Under Select Host, select the target Azure Local machine, specify the location on the host for the VM storage files, and select Next.

Select a virtual network for the virtual machine and select Create to complete the migration.

The virtual machine running on the ESXi cluster is migrated to the Azure Local instance. To automate the conversion, use PowerShell commands.
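Before you run the conversion, a quick pre-check over your VM inventory can flag VMs that aren't ready to convert. Online migration isn't supported, and VMware Tools must be removed from the guest first. In this sketch, the input objects and their `PowerState` and `VMwareToolsInstalled` properties are hypothetical; you might build them from your own inventory export. The `Test-V2VReadiness` name is hypothetical too. The sketch doesn't call VMM or vCenter.

```powershell
function Test-V2VReadiness {
    # Hypothetical helper: each input object has Name, PowerState ('PoweredOff'
    # when shut down), and VMwareToolsInstalled ($true/$false).
    param([Parameter(Mandatory)][object[]]$VM)

    foreach ($v in $VM) {
        $reasons = @()
        if ($v.PowerState -ne 'PoweredOff') {
            $reasons += 'power off the VM first (online migration is not supported)'
        }
        # A missing property is treated as "not installed".
        if ($v.VMwareToolsInstalled) {
            $reasons += 'uninstall VMware Tools from the guest operating system first'
        }
        [pscustomobject]@{
            Name    = $v.Name
            Ready   = ($reasons.Count -eq 0)
            Reasons = $reasons
        }
    }
}
```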
