Parallel processing with PowerShell

By Richard Siddaway, the latest in his PowerShell series

The traditional approach to administering servers is to log on to the server – usually via RDP these days, as your data centre may be in a different building and often a different city. From there you can perform your administrative tasks, log out – you’re not one of those people who don’t log out of administrator RDP sessions, are you? – and proceed to the next task on the next server.

If you have a handful of servers, this approach is sustainable. Once you get into even the low tens of servers, it just doesn’t work if you have to touch each server. Let me give you an example: a few years ago I heard a colleague grumbling because they had to go through the event logs on 30 servers to check which ones were showing a particular event. That’s not a quick process!

Before they’d finished the first server, I’d written a quick and dirty PowerShell script that checked the event log on each server and counted the number of events of interest that were present. In most cases it was zero – which was good – so the support team could concentrate on the servers that actually had the problem. Those few minutes of scripting saved the support team hours of work and enabled the problem to be resolved much more quickly. It also had the bonus of introducing the support team to PowerShell and getting them interested in solving other problems using an automation approach.

Automating administrative tasks is a great time saver, as well as bringing other benefits such as repeatability and consistency to your environment. However, beyond learning how to automate tasks, there are some issues you need to be aware of. One of those issues is the number of servers you need to deal with.

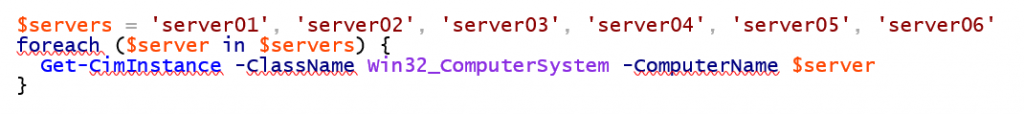

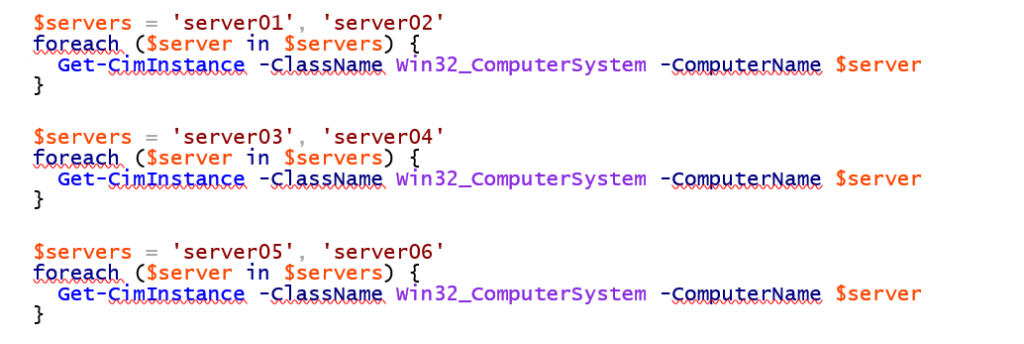

When you’re working with a relatively low number of servers you can afford to work sequentially – your script accesses the first server, and then the second, and then the third and so on. A simple example could be:

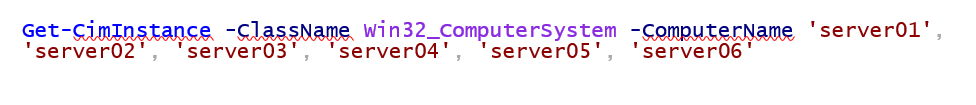

As an aside, you could write the previous example as a single line of code, but I’ve deliberately not used that approach as it won’t scale.

Back to our example - a list of servers is generated and for each server in that list a call is made using Get-CimInstance to retrieve the computer system information. The server list could be extracted from Active Directory or maintained in a file that you read into the script.
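A minimal sketch of the sequential approach follows. It assumes the computer system information comes from the Win32_ComputerSystem CIM class, and the function name Get-ComputerSystemSequential is made up for this illustration. Wrapping the loop in a function means nothing is contacted until you call it:

```powershell
# Sketch only: Get-ComputerSystemSequential is a hypothetical name and
# Win32_ComputerSystem is assumed to be the class being queried.
function Get-ComputerSystemSequential {
    param(
        [string[]]$ComputerName
    )

    # Each server is contacted in turn; a call doesn't start until the previous one finishes
    foreach ($computer in $ComputerName) {
        Get-CimInstance -ClassName Win32_ComputerSystem -ComputerName $computer
    }
}
```

You would call it with something like `Get-ComputerSystemSequential -ComputerName server01, server02, server03`, or with a list read from a file, such as `Get-ComputerSystemSequential -ComputerName (Get-Content -Path .\servers.txt)`, where the server names and servers.txt are placeholders.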

At some point this approach will break down. You’ll have too many servers to process in too short a timeframe. You need to start thinking about performing tasks in parallel. You have a number of options available ranging from the simple, but hard work for you, to the more complicated approaches that involve less work for you. No prizes for guessing which I prefer.

Whichever approach you end up taking you will be getting PowerShell to run tasks in parallel. That will often require you to have additional instances of PowerShell running. The resources on your admin machine – CPU, memory and network bandwidth – are finite. Keep those in mind so you don’t overload the machine and end up getting nothing back.

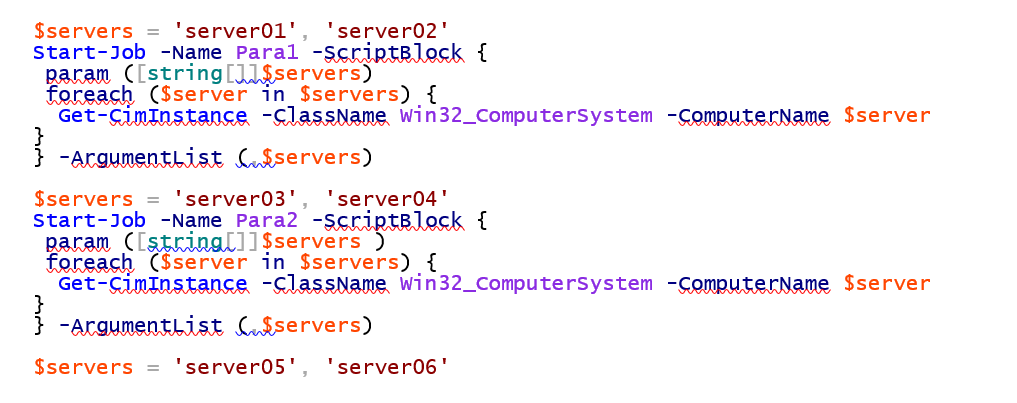

Your first option is to manually split the list of servers you need to access:

Conceptually, the three scripts above show you how to do this: split the server list into a number of chunks and run each chunk in a separate PowerShell session. As an aside, you’ll use slightly fewer resources if you run the scripts in the PowerShell console rather than in PowerShell ISE. Open both and use Get-Process to see the difference in requirements – especially memory.
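One way to make the console versus ISE comparison is a single Get-Process call. This sketch assumes Windows PowerShell, where the process names are powershell and powershell_ise; -ErrorAction SilentlyContinue simply skips whichever one isn't running, and the WorkingSetMB property name is my own choice:

```powershell
# Calculated property: working set converted from bytes to megabytes
$wsMB = @{ Name = 'WorkingSetMB'; Expression = { [math]::Round($_.WorkingSet64 / 1MB, 1) } }

# Memory footprint of the console and ISE hosts (Windows PowerShell process names)
$usage = Get-Process -Name powershell, powershell_ise -ErrorAction SilentlyContinue |
    Select-Object -Property Name, Id, $wsMB

$usage | Sort-Object -Property WorkingSetMB -Descending | Format-Table -AutoSize
```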

Ideally, you’d get the system to run the scripts in separate PowerShell instances for you. There is a mechanism to do this – it’s called PowerShell jobs. When you run a script as a job, it runs in a separate, background instance of PowerShell and you can carry on working.

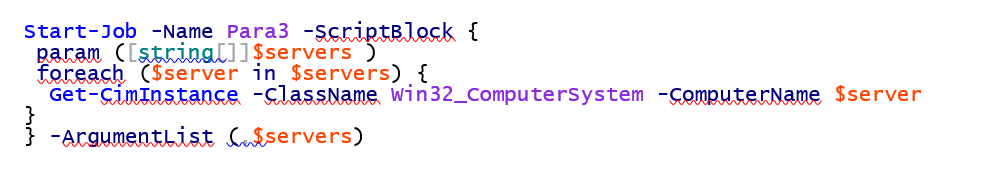

Converting our individual scripts, we’d end up with something like this:

We’re creating three jobs, each of which runs against two of the remote machines. In a production environment you’d have many more machines accessed by each job.
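A sketch of what those three jobs could look like. It assumes six placeholder servers (server01 to server06), job names CSjob1 to CSjob3 that I've made up, and the same Win32_ComputerSystem query as before. The scriptblock is stored once and reused, and the leading comma in -ArgumentList is explained a little further on:

```powershell
# The work each job performs: query each server it is given, one after another
$scriptBlock = {
    param([string[]]$Servers)
    foreach ($server in $Servers) {
        Get-CimInstance -ClassName Win32_ComputerSystem -ComputerName $server
    }
}

# Three jobs with two (placeholder) servers each; each job runs in its own background process
Start-Job -Name CSjob1 -ScriptBlock $scriptBlock -ArgumentList (, @('server01', 'server02'))
Start-Job -Name CSjob2 -ScriptBlock $scriptBlock -ArgumentList (, @('server03', 'server04'))
Start-Job -Name CSjob3 -ScriptBlock $scriptBlock -ArgumentList (, @('server05', 'server06'))
```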

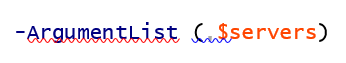

One thing that catches many people is the way you pass arguments into the scriptblock. The -ArgumentList parameter takes an array of arguments. If you just pass the array containing the list of servers:

Then the array will be unravelled and each element will be treated as an individual argument. What this means in practice is that only the first server in the list will be processed. You need to force the argument to be treated as an array by prefixing the variable with a comma:
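The difference is easy to see with a job that just counts what it receives. In this sketch the server names are placeholders that are never contacted. Wrapping the comma form in parentheses, as in (, $servers), is a safe way to write it:

```powershell
$servers = 'server01', 'server02', 'server03'

# The scriptblock reports how many server names it actually received
$countServers = {
    param($Computers)
    @($Computers).Count
}

# Without the comma the array is unrolled into three separate arguments,
# so $Computers is bound to 'server01' only
$unrolled = Start-Job -ScriptBlock $countServers -ArgumentList $servers

# The unary comma wraps $servers in a one-element array, so the whole
# array is bound to $Computers
$wrapped = Start-Job -ScriptBlock $countServers -ArgumentList (, $servers)

$unrolledCount = $unrolled | Wait-Job | Receive-Job
$wrappedCount  = $wrapped  | Wait-Job | Receive-Job
"Without comma: $unrolledCount server(s); with comma: $wrappedCount server(s)"

# Tidy up the demonstration jobs
$unrolled, $wrapped | Remove-Job
```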

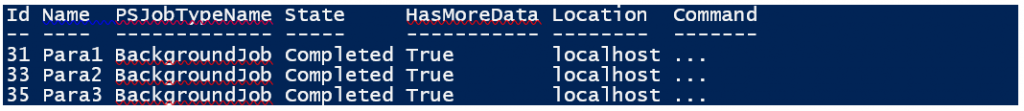

You don’t have to supply a name for the job, but it does help keep track of what you are doing. When the jobs are started you’ll see something like this (except the State will be Running):

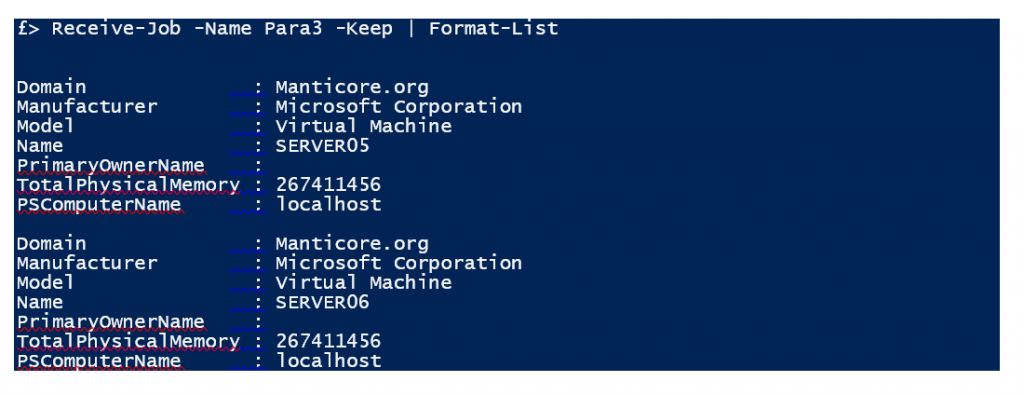

The Id number will, almost certainly, be different on your machine. When a job shows a State of Completed you can retrieve the data using Receive-Job:

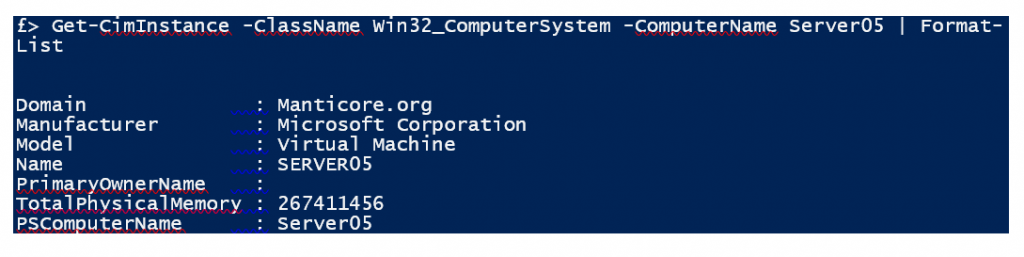

One disadvantage of using PowerShell jobs like this is that the PSComputerName property isn’t correctly populated. In the example above the Name of the machine is SERVER05 but the PSComputerName is set to localhost. Compare this to running directly:

Both the Name and PSComputerName properties are set to Server05.

The -Keep parameter ensures that the data is still available in the job if you need to read it again. If you don’t use -Keep, the job’s data is deleted when you use Receive-Job. In either case the job itself is not deleted – that has to be done manually:
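To see the -Keep behaviour in isolation, a trivial local job can stand in for the CIM jobs. KeepDemo is a made-up job name; with the real jobs you'd use something like `Get-Job -Name CSjob* | Receive-Job -Keep` followed by `Get-Job -Name CSjob* | Remove-Job`:

```powershell
# A trivial local job stands in for the CIM jobs; KeepDemo is a made-up name
$job = Start-Job -Name KeepDemo -ScriptBlock { 'some data' }
$null = Wait-Job -Job $job

$first  = Receive-Job -Job $job -Keep   # data returned and kept in the job
$second = Receive-Job -Job $job         # data returned, then deleted from the job
$third  = Receive-Job -Job $job         # nothing left to return

# The job object itself survives until you remove it
Get-Job -Name KeepDemo
Remove-Job -Job $job
```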

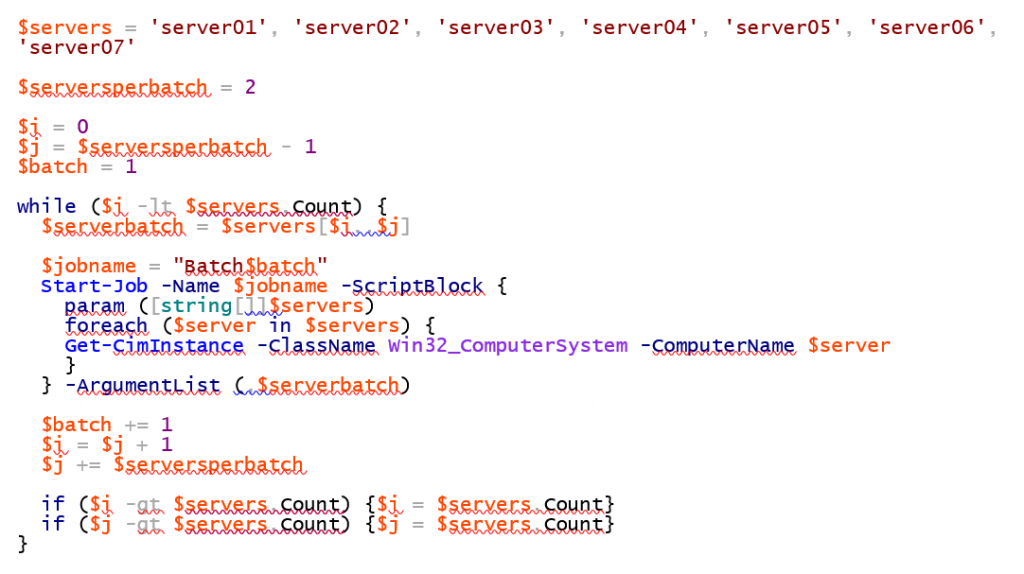

This takes us a step further but we’re still having to create the sets of servers manually. The simplest way to deal with that is to decide how many servers you want to process in a batch and split the list accordingly:

I’ve added a seventh server so the numbers don’t come out even. Set the number of servers per batch:

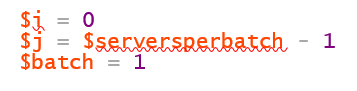

And some counters:

$i and $j are the indices into the array of server names. $batch is the batch counter.

Create a loop that will run while $i is less than the number of servers in the array. Create an array holding a subset of the servers and a name for the job:

Create the PowerShell job using Start-Job as done previously.

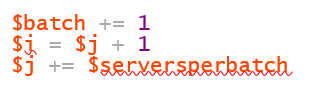

Increment the counters:

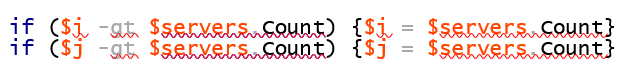

$batch increases by 1. $i is set to one more than the last index used ($j), and $j is increased by the number of servers per batch. A check is needed to make sure that neither $i nor $j runs past the end of the server list:

NOTE: This is a very simple case, so check that the logic doesn’t miss any servers if you try this approach.
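Putting the batching steps together gives something like the following sketch. The seven server names, the batch size of 2 and the CSbatch job names are assumptions for illustration. $i, $j and $batch play the roles described above, and the $batches list is an extra I've added so you can check which servers ended up in which job (and that none were missed):

```powershell
# Seven placeholder servers, so the batches don't come out even
$servers = 'server01', 'server02', 'server03', 'server04',
           'server05', 'server06', 'server07'
$batchSize = 2   # servers per batch (assumed value)

# Work done by each job: query each server in its batch in turn
$scriptBlock = {
    param([string[]]$Servers)
    foreach ($server in $Servers) {
        Get-CimInstance -ClassName Win32_ComputerSystem -ComputerName $server
    }
}

# $i and $j index the first and last server of the current batch
$i = 0
$j = [math]::Min($batchSize, $servers.Count) - 1
$batch = 1
$batches = New-Object -TypeName 'System.Collections.Generic.List[object]'

while ($i -lt $servers.Count) {
    # @() keeps a one-server batch as an array
    $subset  = @($servers[$i..$j])
    $jobName = "CSbatch$batch"
    $batches.Add($subset)

    Start-Job -Name $jobName -ScriptBlock $scriptBlock -ArgumentList (, $subset)

    # Move on: the next batch starts just after the last index used
    $batch++
    $i = $j + 1
    $j += $batchSize
    # Don't let $j run past the last index in the list
    if ($j -ge $servers.Count) { $j = $servers.Count - 1 }
}
```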

The number of jobs you can have running at any one time depends on the resources available on your system. Many PowerShell cmdlets, such as Invoke-Command, have an -AsJob parameter so the work is processed as a job. Those cmdlets tend to also have a -ThrottleLimit parameter that limits how many operations can run simultaneously. This defaults to 32, which seems to be a reasonable starting point. If you want to run more than this simultaneously, you need to test to determine what your system can handle.
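Invoke-Command is one cmdlet that has both -AsJob and -ThrottleLimit. The sketch below assumes PowerShell remoting is enabled on the target servers. Start-CimQueryJob is a hypothetical wrapper name, and the server names in the usage line are placeholders:

```powershell
# Hypothetical wrapper: run the CIM query on each server via remoting, as a job
function Start-CimQueryJob {
    param(
        [string[]]$ComputerName,
        # Maximum number of concurrent connections; 32 matches the cmdlet's default
        [int]$ThrottleLimit = 32
    )

    # -AsJob returns straight away with a parent job that has a child job per server
    Invoke-Command -ComputerName $ComputerName -AsJob -ThrottleLimit $ThrottleLimit -ScriptBlock {
        Get-CimInstance -ClassName Win32_ComputerSystem
    }
}
```

Usage would be along the lines of `$job = Start-CimQueryJob -ComputerName server01, server02, server03 -ThrottleLimit 10` and then `$job | Wait-Job | Receive-Job`.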

PowerShell jobs are a very powerful but underused aspect of PowerShell. The next alternative, PowerShell workflows, is also powerful and underused.

PowerShell workflows were one of the headline items in PowerShell 3.0. Workflows are a really strong option if you need parallel processing and/or you need your code to manage and survive a reboot of the remote machine. After an initial flurry the use of workflows seems to have diminished. In the right place they perform admirably.

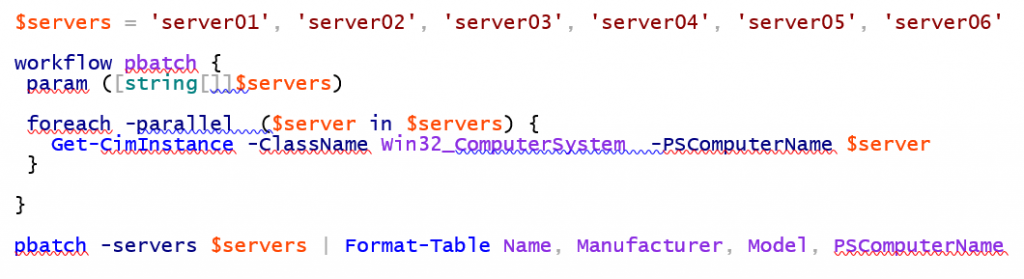

A PowerShell workflow to perform our task against a number of remote machines would look like this:

The keyword workflow is used to define the workflow. Notice the -parallel parameter on the foreach statement. This instructs the workflow to perform the actions in parallel rather than sequentially.

Also notice that the -ComputerName parameter on Get-CimInstance has changed to -PSComputerName. This is because Get-CimInstance is actually a PowerShell workflow activity rather than a cmdlet. This is one of the confusing oddities of PowerShell workflows that cause problems for people new to PowerShell and workflows.

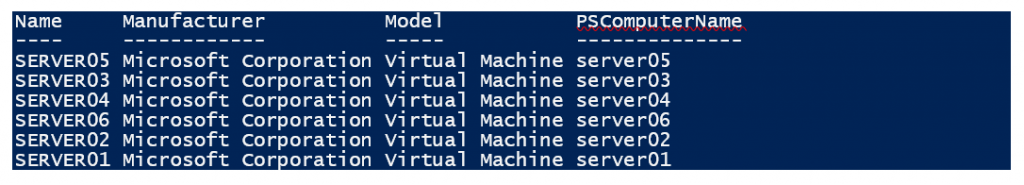

Running the workflow produces results like this:

Notice that the order in which the results are returned is random(ish). This is an artefact of the way workflows process the commands. If you need the data sorted into a particular order, you’ll need to do that yourself.
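A sketch of such a workflow follows, assuming the same Win32_ComputerSystem query. Get-ComputerSystemWF and the ServerList parameter are names I've chosen for illustration. Workflows need Windows PowerShell 3.0 to 5.1, because the workflow keyword isn't available in PowerShell 6 and later:

```powershell
# Requires Windows PowerShell 3.0-5.1: the workflow keyword doesn't exist in PowerShell 6+
workflow Get-ComputerSystemWF {
    param(
        [string[]]$ServerList
    )

    # -parallel processes the servers concurrently rather than one after another
    foreach -parallel ($server in $ServerList) {
        # Inside a workflow Get-CimInstance is an activity, so the target
        # machine is given with -PSComputerName rather than -ComputerName
        Get-CimInstance -ClassName Win32_ComputerSystem -PSComputerName $server
    }
}
```

Because results come back in no guaranteed order, sort them yourself if the order matters, for example `Get-ComputerSystemWF -ServerList server01, server02, server03 | Sort-Object -Property Name` (placeholder server names).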

Your last option is to use PowerShell runspaces to run your tasks. This is a much more programmatic approach that involves running PowerShell instances – much as you did with PowerShell jobs but without some of the overheads inherent in the PowerShell job system.

This approach involves diving into .NET to a degree so if you’re not happy with that you may want to stick with the earlier options.

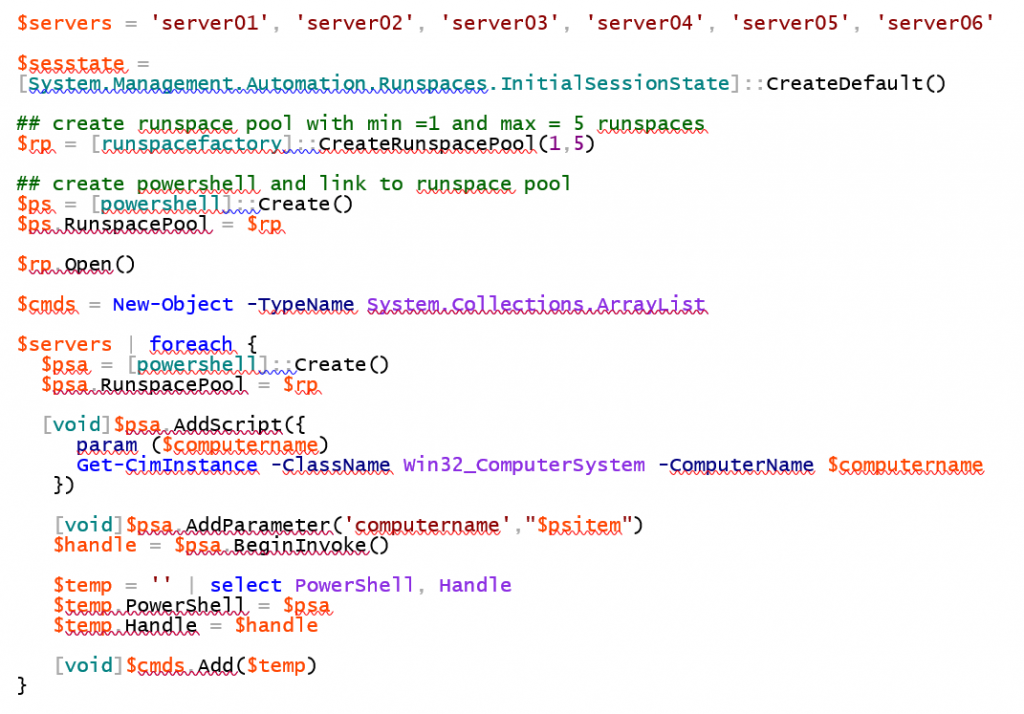

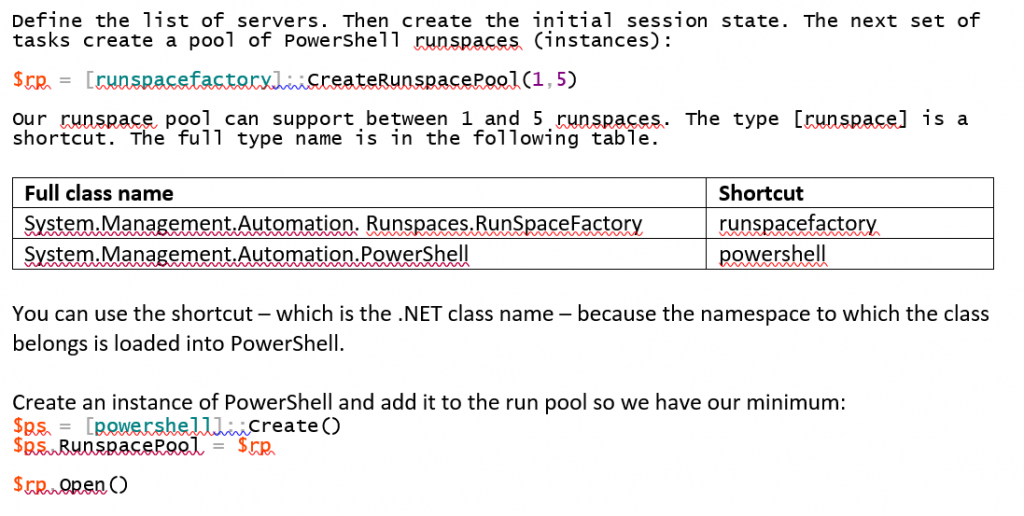

Our example looks like this:

It’s important to open the runspace pool otherwise it can’t be used.

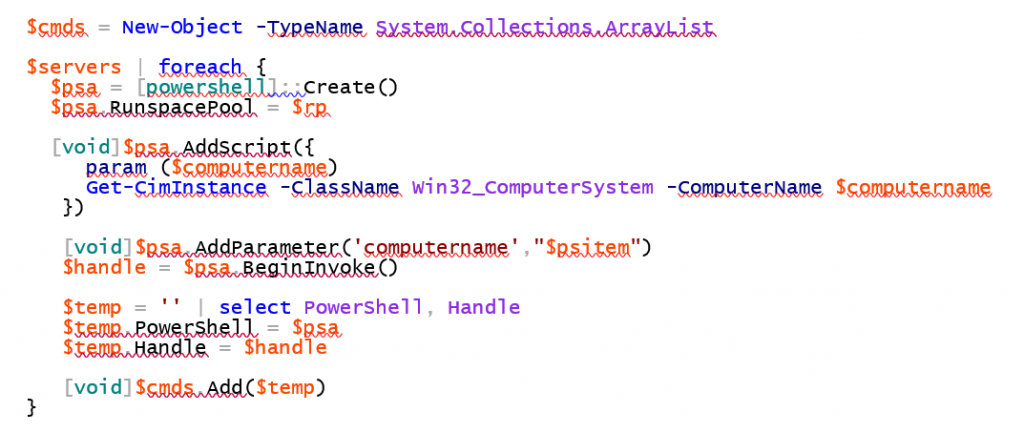

The actual work is performed by this code:

Create an ArrayList – unlike a standard array, it can have members added. Then, for each server, create an instance of PowerShell and tell it which runspace pool to use. Add a script to the PowerShell instance that calls Get-CimInstance, and add the appropriate server name as a parameter.

Start the script – BeginInvoke runs the script asynchronously. Add the instance of PowerShell to the list we created earlier.

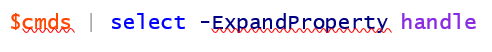

The execution progress can be viewed:

The IsCompleted property indicates whether each script has finished running.

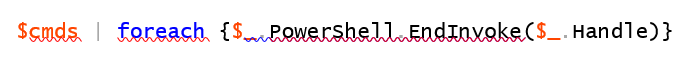

Once the scripts have finished you can retrieve the data:

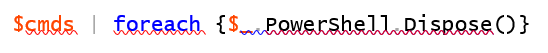

You should clean up the instances:

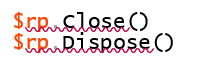

And finally clean up the runspace pool:
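Pulling the runspace steps together, here is a minimal sketch packaged as a function so it can be reused. [runspacefactory]::CreateRunspacePool and [powershell]::Create() are the .NET entry points for this. The function name Invoke-RunspaceQuery, its parameters and the pool size of 5 are my own assumptions, and the default script assumes the same Win32_ComputerSystem query:

```powershell
# Hypothetical wrapper around the runspace steps described above
function Invoke-RunspaceQuery {
    param(
        [string[]]$ServerList,
        # Script run once per server; it must declare a ComputerName parameter
        [string]$Script = 'param($ComputerName) Get-CimInstance -ClassName Win32_ComputerSystem -ComputerName $ComputerName',
        # Upper limit on runspaces (and therefore scripts) running at once
        [int]$MaxRunspaces = 5
    )

    # Create the runspace pool and open it; it can't be used until it's open
    $pool = [runspacefactory]::CreateRunspacePool(1, $MaxRunspaces)
    $pool.Open()

    # An ArrayList can have members added, unlike a standard array
    $instances = New-Object -TypeName System.Collections.ArrayList

    foreach ($server in $ServerList) {
        # One PowerShell instance per server, all sharing the pool
        $ps = [powershell]::Create()
        $ps.RunspacePool = $pool
        [void]$ps.AddScript($Script).AddParameter('ComputerName', $server)

        # BeginInvoke starts the script asynchronously and returns a handle
        [void]$instances.Add([pscustomobject]@{
            Server   = $server
            Instance = $ps
            Handle   = $ps.BeginInvoke()
        })
    }

    # IsCompleted is $true once a script has finished; wait for all of them
    while ($instances.Handle.IsCompleted -contains $false) {
        Start-Sleep -Milliseconds 200
    }

    foreach ($item in $instances) {
        # EndInvoke returns the script's output
        $item.Instance.EndInvoke($item.Handle)
        # Clean up the PowerShell instance
        $item.Instance.Dispose()
    }

    # Finally clean up the runspace pool
    $pool.Close()
    $pool.Dispose()
}
```

Usage would be `Invoke-RunspaceQuery -ServerList server01, server02, server03` (placeholder names). Results are returned in the order the servers were listed, because EndInvoke is called on each instance in turn.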

PowerShell is all about providing options in the way you perform your automation tasks. This article has shown a number of ways that you can perform tasks in parallel to speed up your work and ensure it completes in an appropriate time scale.

The options range from the relatively simple manual approach through PowerShell jobs and workflows and finish with a look at programming PowerShell runspaces through PowerShell. Which approach you take is up to you, but however you approach things always remember that if you need to access many remote machines you can do so in parallel.

This article has introduced a number of PowerShell topics – PowerShell jobs, PowerShell workflows and .NET programming with PowerShell. If there is a particular area you’d like covered in more detail, leave a comment and I’ll see what can be arranged.

Beginning use of PowerShell Runspaces - Hey Scripting Guy!

By Richard Siddaway, PowerShell MVP and author of a number of books on PowerShell, Active Directory and Windows Administration. He is a regular speaker at the PowerShell Summit and user groups, and blogs regularly on his PowerShell blog.

Anonymous, June 20, 2016: Great stuff. Thanks

Anonymous, June 21, 2016: Hi! Nice write-up! A quick note on runspaces: Re-inventing the runspace-wheel definitely looks complicated and developer-y, but it's a bit less developer-y in spirit. Abstraction and using existing libraries like PoshRsJob is a great way to simplify using runspaces to the point where they effectively behave like an improved 'job', and happens to be a more dev-oriented approach - use the best library out there and contribute to it if warranted, rather than re-invent the wheel : ) It's linked in the resource you referenced, but for convenience, here's the GitHub repo: https://github.com/proxb/PoshRSJob Also, perhaps more controversially, we usually steer folks away from workflows for parallelization. They're a bit less flexible with regards to parallelization than tools like PoshRsJob, and they introduce a trove of gotchas given the differences between what you can do in PowerShell, and what you can do in workflows. Cheers!

Anonymous, June 22, 2016: Good work - thanks.

Anonymous, June 23, 2016: Thank you for the examples, but the main reason someone would want to do parallel processing is to increase performance, and your article doesn't talk about the relative performance of each of the methods. A nice follow-up article might have a table that compares each method to indicate relative speed, CPU, memory, code complexity, minimum PowerShell version locally and remotely, reboot durability, etc. For example, the reason workflows are not used much is because of the complex workflow rules for arguments, returns, variable scopes, command limitations within a flow, etc. This is what could be discussed along with the comparison table above. Thank you!

Anonymous, June 28, 2017: @Doodius Parallelus you are 100% right, workflows can be a pain in the neck - don't let the simple syntax fool you! For example, let's see you get it running with a degree of parallelism of more than 5 ...

Anonymous, June 24, 2016: Very much interesting contents.

Anonymous, September 10, 2016: Why oh why don't you mention the use of PS Remoting? It's a great way to do parallel processing of remote machines.

Anonymous, February 10, 2017: Greetings - Great article. Can you also show how to copy multiple large files in a folder in parallel?

Anonymous, August 22, 2017: Is there a reason that you copy the text from the ISE, paste it into Word, then take a screenshot of it? It would be much nicer to have this in a format that could be run on my own PC.

Anonymous, September 07, 2017: Where can I buy your books?