Hi @Ryan Abbey,

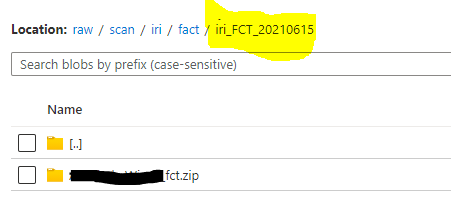

Please check the detailed example below, which copies the file to a folder whose name is created dynamically, as you requested above (iri_FCT_yyyyMMdd).

Step 1: Create a variable in your pipeline to hold the current date. Use a Set Variable activity to set its value.

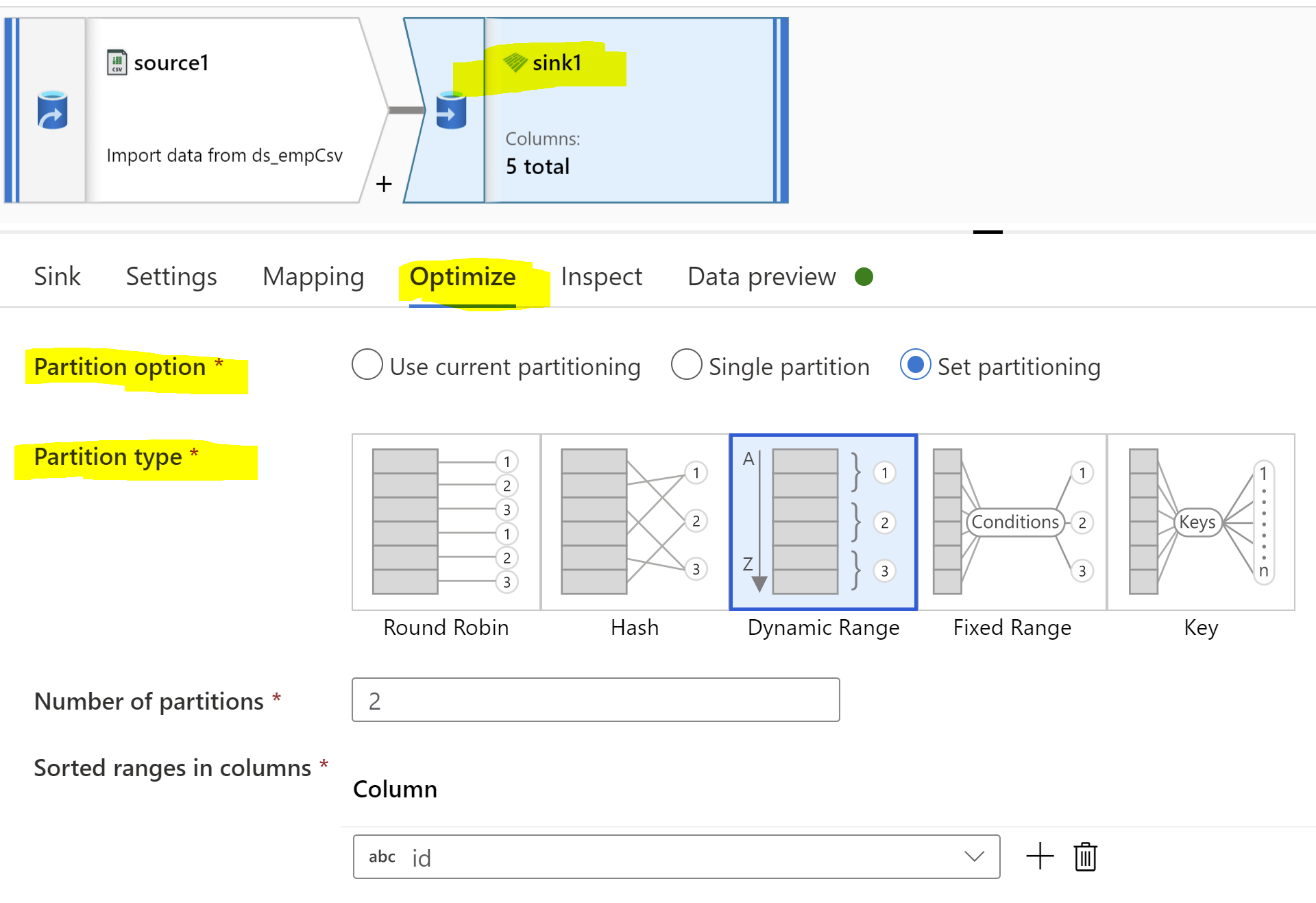
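For reference, the Set Variable activity from Step 1 might look roughly like this in the pipeline JSON. This is only a sketch: the activity name and the variable name `currentDate` are my assumptions (use whatever you named yours), and it assumes a String variable called `currentDate` is already declared on the pipeline.

```json
{
    "name": "Set current date",
    "description": "Illustrative sketch; the activity and variable names are assumptions",
    "type": "SetVariable",
    "typeProperties": {
        "variableName": "currentDate",
        "value": {
            "value": "@formatDateTime(utcnow(), 'yyyyMMdd')",
            "type": "Expression"
        }
    }
}
```

Note that `utcnow()` returns UTC time, so around midnight the date may differ from your local date.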

Step 2: Use a Copy activity to copy the zip file. The source and sink dataset types should both be Binary. In the sink dataset, create a parameter that dynamically supplies the target folder name as "iri_FCT_yyyyMMdd".
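As a rough sketch of the sink side, a parameterized Binary dataset could look like the JSON below. I am assuming Azure Blob Storage here; the dataset name, linked service name, container, and the parameter name `folderName` are all placeholders, so adjust them to your environment.

```json
{
    "name": "SinkBinaryDataset",
    "properties": {
        "description": "Illustrative sketch; names, container and storage type are assumptions",
        "type": "Binary",
        "linkedServiceName": {
            "referenceName": "MyBlobStorageLinkedService",
            "type": "LinkedServiceReference"
        },
        "parameters": {
            "folderName": { "type": "String" }
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "target-container",
                "folderPath": {
                    "value": "@dataset().folderName",
                    "type": "Expression"
                }
            }
        }
    }
}
```

In the Copy activity, the sink dataset reference would then pass the folder name built from the date variable (assumed here to be named `currentDate`). Only the relevant part of the activity is shown; the source, sink, and input settings are omitted.

```json
{
    "name": "Copy zip file",
    "description": "Partial sketch; only the sink dataset reference is shown",
    "type": "Copy",
    "outputs": [
        {
            "referenceName": "SinkBinaryDataset",
            "type": "DatasetReference",
            "parameters": {
                "folderName": {
                    "value": "@concat('iri_FCT_', variables('currentDate'))",
                    "type": "Expression"
                }
            }
        }
    ]
}
```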

Hope this helps.

----------------------------------

- Please accept an answer if correct. Original posters help the community find answers faster by identifying the correct answer. Here is how.
- Want a reminder to come back and check responses? Here is how to subscribe to a notification.