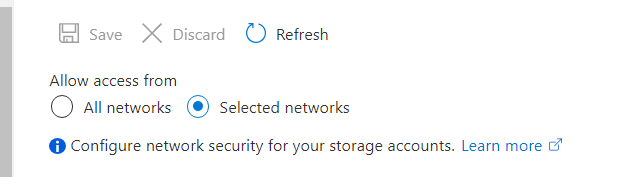

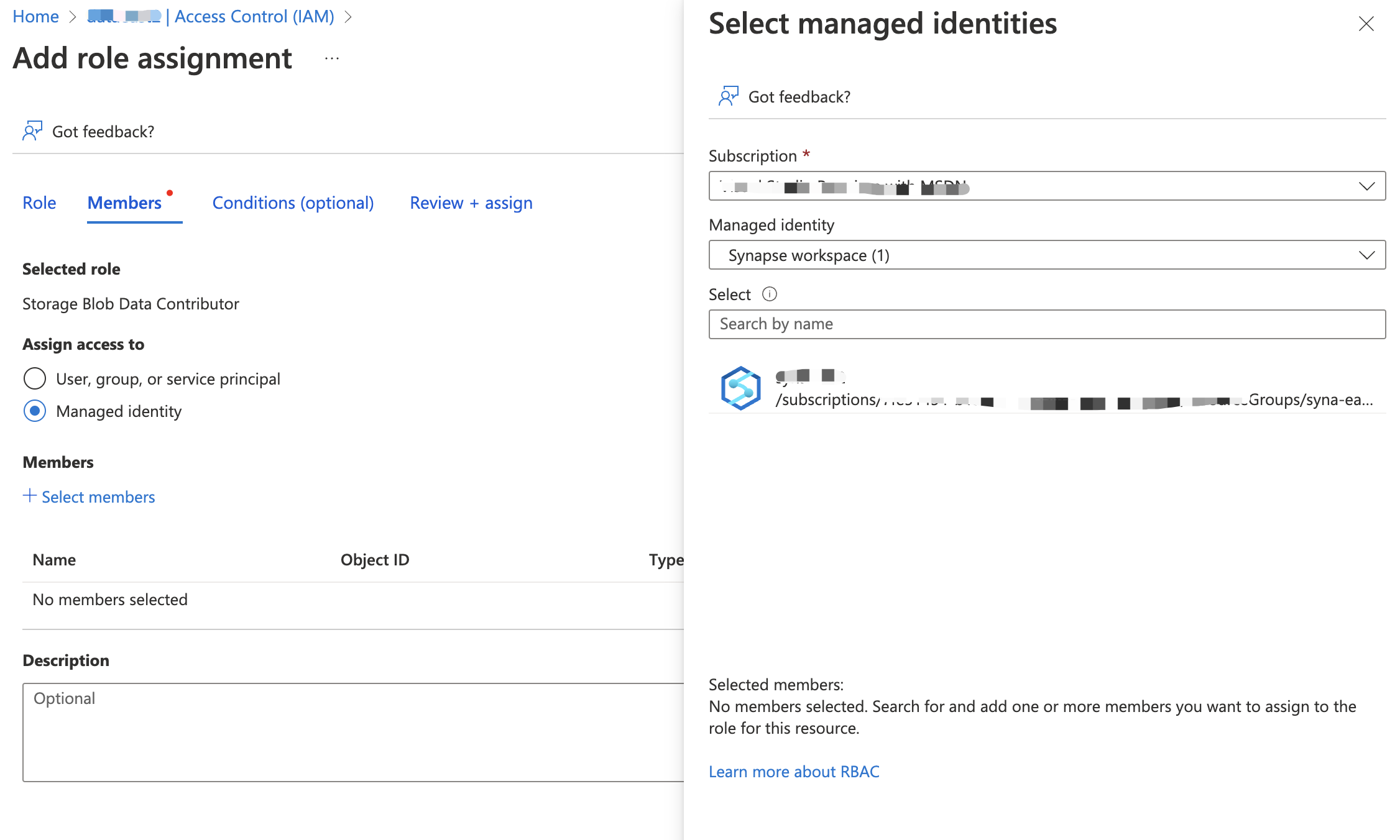

I built a pipeline with Data Factory that reads data from a MongoDB and stores it in an Azure Data Lake Storage Gen2 account. When I tested the connections to the source and the target, both worked without any problems. But when I check the "File Path" connection for the sink, I receive an authorization error. The connection is set up with a Managed Identity. I also added the Data Factory resource to the storage account.

When I execute the pipeline, the run fails with the following error:

Operation on target Copy_36v failed: Failure happened on 'Sink' side. ErrorCode=AdlsGen2OperationFailed,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=ADLS Gen2 operation failed for: Operation returned an invalid status code 'Forbidden'. Account: 'xxxxx'. FileSystem: 'mongodbleads'. Path: 'output/data_62........txt'. ErrorCode: 'AuthorizationPermissionMismatch'. Message: 'This request is not authorized to perform this operation using this permission.'.

...

'Forbidden',Source=,''Type=Microsoft.Azure.Storage.Data.Models.ErrorSchemaException,Message=Operation returned an invalid status code 'Forbidden',Source=Microsoft.DataTransfer.ClientLibrary,'

Any help would be appreciated. It seems that the Data Factory still doesn't have full access to the storage account.

Do I have to manually add a blob container to my target Data Lake Gen2 account?

Thanks!