Data science is a multidisciplinary field that uses scientific methods, processes, algorithms, and tools to extract knowledge and insights from structured and unstructured data.

A typical data science project starts with exploratory data analysis (EDA), which involves understanding patterns, spotting anomalies, testing hypotheses, and checking assumptions about the underlying data.

The insights gained from EDA can guide data scientists in choosing the appropriate statistical or machine learning models that best fit the data.

Microsoft Fabric notebooks allow you to integrate your exploration results into a data science workflow. The output of that workflow can then feed a downstream reporting solution, such as a Power BI report.

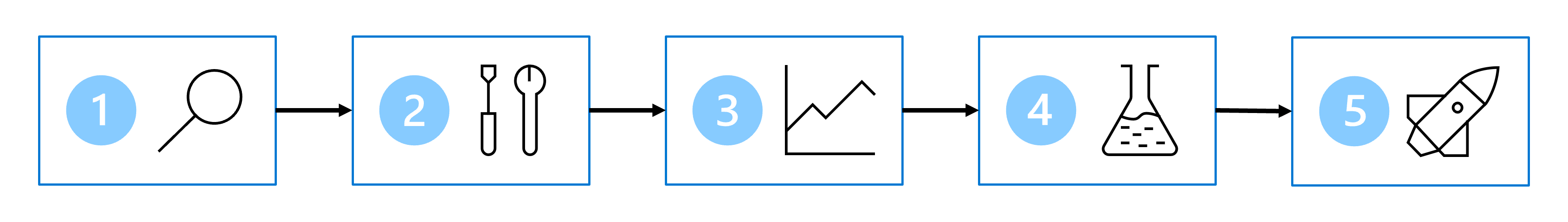

Data exploration is a preliminary investigation of the data that sets the stage for all subsequent steps in the data science process.

In this module, you focus on how to load data and perform data exploration. Working in Python in a Microsoft Fabric notebook, you examine different types of data distribution. You learn what missing data is and which strategies handle it effectively. Finally, you visualize data using various data visualization techniques and libraries.
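As a rough preview of the kind of exploration covered here, the following minimal sketch summarizes a small sample, counts its missing values, and fills them with the median. It uses only the Python standard library. The `readings` data and the `summarize` and `impute_median` helpers are hypothetical names invented for illustration and are not part of the module or of Microsoft Fabric.

```python
import statistics

# Hypothetical sample: measurements with gaps recorded as None.
readings = [12.0, 15.5, None, 14.0, 13.5, None, 48.0, 14.5]


def summarize(values):
    """Return basic descriptive statistics, ignoring missing (None) entries."""
    present = [v for v in values if v is not None]
    return {
        "count": len(present),
        "missing": len(values) - len(present),
        "mean": statistics.mean(present),
        "median": statistics.median(present),
        "stdev": statistics.stdev(present),
    }


def impute_median(values):
    """Replace missing entries with the median of the observed values."""
    median = statistics.median(v for v in values if v is not None)
    return [median if v is None else v for v in values]


summary = summarize(readings)
filled = impute_median(readings)

print(summary)
print(filled)
```

In this sample the mean sits well above the median because of the single large value (48.0), which hints at a right-skewed distribution. That is one reason the sketch imputes with the median rather than the mean. Median imputation is only one of several possible strategies for handling missing data.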
