Important

The Mixed Reality Academy tutorials were designed with HoloLens (1st gen), Unity 2017, and Mixed Reality Immersive Headsets in mind. As such, we feel it is important to leave these tutorials in place for developers who are still looking for guidance in developing for those devices. These tutorials will not be updated with the latest toolsets or interactions being used for HoloLens 2 and may not be compatible with newer versions of Unity. They will be maintained to continue working on the supported devices. A new series of tutorials has been posted for HoloLens 2.

Spatial sound breathes life into holograms and gives them presence in our world. Holograms are composed of both light and sound, and if you happen to lose sight of your holograms, spatial sound can help you find them. Spatial sound is not like the typical sound that you would hear on the radio; it is sound that is positioned in 3D space. With spatial sound, you can make holograms sound like they're behind you, next to you, or even on your head! In this course, you will:

| Course | HoloLens | Immersive headsets |
|---|---|---|
| MR Spatial 220: Spatial sound | ✔️ | ✔️ |

Note

If you want to look through the source code before downloading, it's available on GitHub.

By default, Unity does not load a spatializer plugin. The following steps will enable Spatial Sound in the project.

We will now build the project in Unity and configure the solution in Visual Studio.

When Unity is done, a File Explorer window will appear.

If deploying to HoloLens:

If deploying to an immersive headset:

The appropriate location for the sound is going to depend on the hologram. For example, if the hologram is of a human, the sound source should be located near the mouth and not the feet.

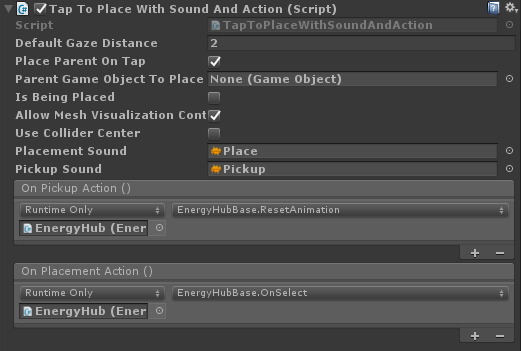

The following instructions will attach a spatialized sound to a hologram.

Project Decibel uses a Unity AudioMixer component to enable adjusting sound levels for groups of sounds. By grouping sounds this way, the overall volume can be adjusted while maintaining the relative volume of each sound.

Setting Doppler level to zero disables changes in pitch caused by motion (either of the hologram or the user). A classic example of Doppler is a fast-moving car. As the car approaches a stationary listener, the pitch of the engine rises. When it passes the listener, the pitch lowers with distance.
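The pitch change in the car example can be estimated with the standard Doppler formula for a moving source and a stationary listener. The following is a minimal Python sketch of that arithmetic only; it does not model how Unity applies its Doppler level setting, and the function name, engine frequency, and car speed are illustrative assumptions.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at 20 degrees C


def observed_frequency(source_hz: float, source_speed_m_s: float) -> float:
    """Frequency heard by a stationary listener.

    A positive speed means the source is approaching the listener,
    a negative speed means it is moving away.
    """
    if abs(source_speed_m_s) >= SPEED_OF_SOUND_M_S:
        raise ValueError("source speed must be below the speed of sound")
    return source_hz * SPEED_OF_SOUND_M_S / (SPEED_OF_SOUND_M_S - source_speed_m_s)


engine_hz = 100.0   # hypothetical engine tone
car_speed = 30.0    # hypothetical speed in m/s (about 108 km/h)

approaching = observed_frequency(engine_hz, car_speed)
receding = observed_frequency(engine_hz, -car_speed)
print(f"approaching: {approaching:.1f} Hz, receding: {receding:.1f} Hz")
```

The approaching value is higher than the engine tone and the receding value is lower, which matches the rise and fall in pitch described above.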

One example of learned expectations is that birds are generally above the heads of humans. If a user hears a bird sound, their initial reaction is to look up. Placing a bird below the user can lead them to face the correct direction of the sound but fail to find the hologram, because they expect to need to look up.

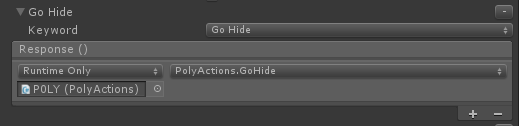

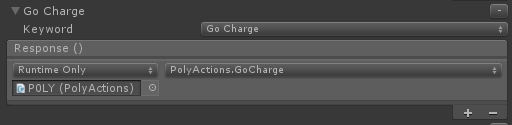

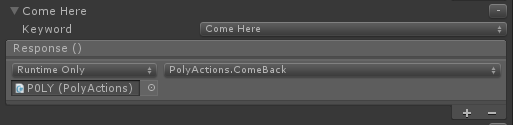

The following instructions enable P0LY to hide behind you, so that you can use sound to locate the hologram.

Gesture Sound Handler performs the following tasks:

Check that the Toolbar says "Release", "x86" or "x64", and "Remote Device". If not, this is the coding instance of Visual Studio. You may need to re-open the solution from the App folder.

After the application is deployed:

Note: There is a text panel that will tag along with you. It contains the voice commands that you can use throughout this course.

For example, setting a cup on a table should make a quieter sound than dropping a boulder on a piece of metal.

A classic example is a concert hall. When a listener is standing outside of the hall and the door is closed, the music sounds muffled. There is also typically a reduction in volume. When the door is opened, the full spectrum of the sound is heard at the actual volume. High frequency sounds are generally absorbed more than low frequencies.

The Audio Emitter class provides the following features:

The RaycastNonAlloc method is used as a performance optimization to limit allocations as well as the number of results returned.

Note that AudioEmitter updates on human time scales, as opposed to on a per frame basis. This is done because humans generally do not move fast enough for the effect to need to be updated more frequently than every quarter or half of a second. Holograms that teleport rapidly from one location to another can break the illusion.
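The idea of updating on a fixed interval rather than every frame can be sketched independently of Unity. The Python example below is an illustration under assumptions: `ThrottledUpdater`, its `tick` method, and the 0.25 second interval are hypothetical names and values, not the AudioEmitter implementation.

```python
class ThrottledUpdater:
    """Runs an expensive check at a fixed interval instead of every frame."""

    def __init__(self, interval_s: float, action):
        self.interval_s = interval_s
        self.action = action
        self._elapsed_s = 0.0
        self.run_count = 0

    def tick(self, delta_time_s: float) -> bool:
        """Call once per frame; returns True when the action actually ran."""
        self._elapsed_s += delta_time_s
        if self._elapsed_s < self.interval_s:
            return False
        self._elapsed_s = 0.0
        self.action()
        self.run_count += 1
        return True


checks = []
updater = ThrottledUpdater(interval_s=0.25,
                           action=lambda: checks.append("occlusion check"))

# Simulate one second of frames at 60 frames per second.
for _ in range(60):
    updater.tick(1.0 / 60.0)

print(f"frames: 60, checks performed: {updater.run_count}")
```

Over 60 simulated frames, the check runs only a handful of times, which is the saving the paragraph above describes.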

This setting limits the AudioSource frequencies to 1500 Hz and below.

This setting reduces the volume of the AudioSource to 90% of its current level.

Audio Occluder implements IAudioInfluencer to:

The frequency used as neutral is 22 kHz (22000 Hz). This frequency was chosen because it is above the nominal maximum frequency that can be heard by the human ear, and therefore has no discernible impact on the sound.

When multiple occluders are in the path between the user and the AudioEmitter, the lowest frequency is applied to the filter.
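The "lowest frequency wins" rule can be sketched as a small function. This is a Python illustration of the rule as stated, not the Audio Occluder code; the function name is hypothetical, the 22000 Hz neutral value and the 1500 Hz cutoff come from the text, and the 3000 Hz value is made up for the example.

```python
NEUTRAL_CUTOFF_HZ = 22000.0  # neutral value stated in the text


def effective_cutoff_hz(occluder_cutoffs_hz):
    """Low-pass cutoff to apply when zero or more occluders block the path."""
    cutoff = NEUTRAL_CUTOFF_HZ
    for occluder_cutoff in occluder_cutoffs_hz:
        # Each occluder can only lower the cutoff, never raise it.
        cutoff = min(cutoff, occluder_cutoff)
    return cutoff


print(effective_cutoff_hz([]))                # no occluders: neutral cutoff
print(effective_cutoff_hz([3000.0, 1500.0]))  # two occluders: lowest cutoff
```

Starting from the neutral value means an unoccluded emitter passes through the same code path without an audible change.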

When multiple occluders are in the path between the user and the AudioEmitter, the volume pass through is applied additively.

After the application is deployed:

Note the change in the sound. It should sound muffled and a little quieter. If you are able to position yourself with a wall or other object between you and the Energy Hub, you should notice a further muffling of the sound due to the occlusion by the real world.

Note that the sound occlusion is removed once P0LY exits the Energy Hub. If you are still hearing occlusion, P0LY may be occluded by the real world. Try moving to ensure you have a clear line of sight to P0LY.

If you are creating a Virtual Reality scenario, select the room model that best fits the virtual environment.

This section discusses key sound and experience design considerations and guidelines.

This avoids the need for special-case code to adjust volume levels per sound, which can be time-consuming and limits the ability to easily update sound files.

HoloLens is a fully contained, untethered holographic computer. Your users can and will use your experiences while moving. Be sure to test your audio mix by walking around.

In the real world, a dog does not bark from its tail and a human's voice does not come from their feet. Avoid having your sounds emit from unexpected portions of your holograms.

For small holograms, it is reasonable to have sound emit from the center of the geometry.

The human voice and music are very easy to localize. If someone calls your name, you are able to very accurately determine from what direction the voice came and from how far away. Short, unfamiliar sounds are harder to localize.

Life experience plays a part in our ability to identify the location of a sound. This is one reason why the human voice is particularly easy to localize. It is important to be aware of your user's learned expectations when placing your sounds.

For example, when someone hears a bird song they generally look up, as birds tend to be above the line of sight (flying or in a tree). It is not uncommon for a user to turn in the correct direction of a sound, but look in the wrong vertical direction and become confused or frustrated when they are unable to find the hologram.

In the real world, if we hear a sound, we can generally identify the object that is emitting the sound. This should also hold true in your experiences. It can be very disconcerting for users to hear a sound, know where it originates, and yet be unable to see an object there.

There are some exceptions to this guideline. For example, ambient sounds such as crickets in a field need not be visible. Life experience gives us familiarity with the source of these sounds without the need to see it.

Mixed Reality experiences allow holograms to be seen in the real world. They should also allow real world sounds to be heard. A 70% volume target enables the user to hear the world around them along with the sound of your experience.

A volume level of 100% is akin to a Virtual Reality experience. Visually, the user is transported to a different world. The same should hold true audibly.

When designing your mix, it is often helpful to create sound categories and have the ability to increase or decrease their volume as a unit. This retains the relative levels of each sound while enabling quick and easy changes to the overall mix. Common categories include sound effects, ambience, voice overs, and background music.
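Grouped volume control can be pictured as multiplying each sound's own level by a group level and a master level. The Python sketch below illustrates that idea only; `MixGroup`, the sound names, and the individual levels are hypothetical, and it is not the Unity AudioMixer API. The 0.7 master value mirrors the 70% volume target mentioned earlier.

```python
from dataclasses import dataclass, field


@dataclass
class MixGroup:
    """A category of sounds whose volume can be adjusted as a unit."""
    name: str
    volume: float = 1.0                         # group-level gain, 0.0 to 1.0
    sounds: dict = field(default_factory=dict)  # sound name -> individual gain

    def output_levels(self, master_volume: float = 1.0) -> dict:
        """Final gain per sound after applying group and master volume."""
        return {
            sound: master_volume * self.volume * level
            for sound, level in self.sounds.items()
        }


effects = MixGroup("sound effects", sounds={"click": 0.8, "whoosh": 0.4})

# Halve the whole category without touching the individual sounds.
effects.volume = 0.5
levels = effects.output_levels(master_volume=0.7)
print(levels)
```

Because every sound in the group is scaled by the same factors, "click" remains twice as loud as "whoosh" at any group or master setting.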

It can often be useful to change the sound mix in your experience based on where a user is (or is not) looking. One common use for this technique is to reduce the volume level for holograms that are outside of the Holographic Frame to make it easier for the user to focus on the information in front of them. Another use is to increase the volume of a sound to draw the user's attention to an important event.
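One way to approximate "outside of the view" is to compare the angle between the user's gaze direction and the direction to the hologram against a threshold. The Python sketch below shows that geometry under stated assumptions: the function names, the 15 degree half-angle, and the 0.5 reduction factor are hypothetical and are not measurements of the Holographic Frame.

```python
import math


def angle_from_gaze_deg(head_pos, gaze_dir, hologram_pos) -> float:
    """Angle in degrees between the gaze direction and the hologram."""
    to_hologram = [h - p for h, p in zip(hologram_pos, head_pos)]
    distance = math.sqrt(sum(c * c for c in to_hologram))
    gaze_len = math.sqrt(sum(c * c for c in gaze_dir))
    if distance == 0.0 or gaze_len == 0.0:
        return 0.0
    cos_angle = sum(a * b for a, b in zip(to_hologram, gaze_dir)) / (distance * gaze_len)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding error
    return math.degrees(math.acos(cos_angle))


def gaze_adjusted_volume(base_volume, angle_deg,
                         view_half_angle_deg=15.0,  # hypothetical threshold
                         off_gaze_gain=0.5):        # hypothetical reduction
    """Keep full volume inside the view cone, reduce it outside."""
    if angle_deg <= view_half_angle_deg:
        return base_volume
    return base_volume * off_gaze_gain


head = (0.0, 0.0, 0.0)
gaze = (0.0, 0.0, 1.0)  # looking along +Z
in_front = angle_from_gaze_deg(head, gaze, (0.0, 0.0, 2.0))
to_side = angle_from_gaze_deg(head, gaze, (2.0, 0.0, 0.0))
print(gaze_adjusted_volume(0.8, in_front), gaze_adjusted_volume(0.8, to_side))
```

A hard threshold like this produces an abrupt change at the edge of the cone; a real mix would likely fade between the two levels over time.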

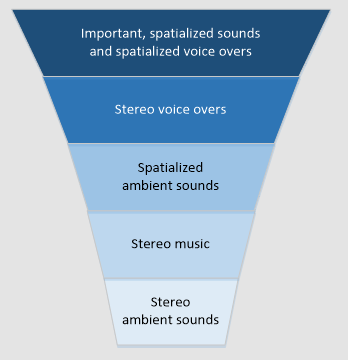

When building your mix, it is recommended to start with your experience's background audio and add layers based on importance. Often, this results in each layer being louder than the previous.

If you imagine your mix as an inverted funnel, with the least important (and generally quietest) sounds at the bottom, it is recommended to structure your mix similar to the following diagram.

Voice overs are an interesting scenario. Depending on the experience you are creating, you may wish to use a stereo (not localized) sound or to spatialize your voice overs. Two experiences published by Microsoft are excellent examples of each scenario.

HoloTour uses a stereo voice over. When the narrator is describing the location being viewed, the sound is consistent and does not vary based on the user's position. This enables the narrator to describe the scene without taking away from the spatialized sounds of the environment.

Fragments uses a spatialized voice over in the form of a detective. The detective's voice is used to help bring the user's attention to an important clue, as if an actual human were in the room. This enables an even greater sense of immersion in the experience of solving the mystery.

When using Spatial Sound, 10 - 12 emitters will consume approximately 12% of the CPU.

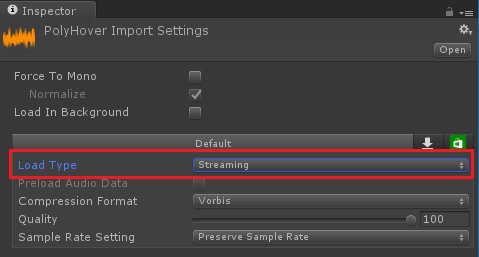

Audio data can be large, especially at common sample rates (44.1 and 48 kHz). A general rule is that audio files longer than 5 - 10 seconds should be streamed to reduce application memory usage.

In Unity, you can mark an audio file for streaming in the file's import settings.
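To see why longer files are worth streaming, it helps to estimate the size of fully decoded audio. The Python sketch below computes uncompressed PCM size and applies the duration rule from the text; the function names, the 16-bit stereo defaults, and the choice of 5 seconds (the lower end of the stated range) as the threshold are assumptions for illustration.

```python
def pcm_size_bytes(duration_s: float, sample_rate_hz: int = 48000,
                   channels: int = 2, bytes_per_sample: int = 2) -> int:
    """Approximate size of uncompressed PCM audio held fully in memory."""
    return int(duration_s * sample_rate_hz * channels * bytes_per_sample)


def should_stream(duration_s: float, threshold_s: float = 5.0) -> bool:
    """Rule of thumb: stream clips longer than the threshold."""
    return duration_s > threshold_s


for name, seconds in [("button click", 0.5), ("narration", 10.0), ("music", 180.0)]:
    size_mb = pcm_size_bytes(seconds) / 1_000_000
    print(f"{name}: {size_mb:.2f} MB decoded, stream={should_stream(seconds)}")
```

At 48 kHz, 16-bit stereo, each second of decoded audio takes 192,000 bytes, so a three-minute music track is tens of megabytes while a short effect is well under one.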

An AudioSource component will be added to VoiceSource.

Setting Max Distance tells User Voice Effect how close the user must be to the parent object before the effect is enabled.

The previous settings configure the parameters of the Unity AudioChorusFilter used to add richness to the user's voice.

The previous settings configure the parameters of the Unity AudioEchoFilter used to cause the user's voice to echo.

The User Voice Effect script is responsible for:

The user must be facing the GameObject, regardless of distance, for the effect to be enabled.

User Voice Effect uses the Mic Stream Selector component, from the MixedRealityToolkit for Unity, to select the high quality voice stream and route it into Unity's audio system.

After the application is deployed:

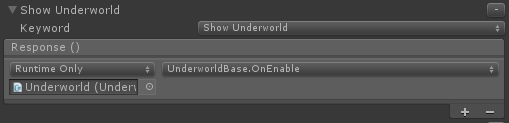

The underworld will be shown and all other holograms will be hidden. If you do not see the underworld, ensure that you are facing a real-world surface.

There are now audio effects applied to your voice!

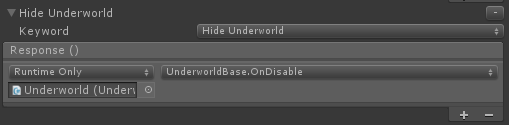

The underworld will be hidden and the previously hidden holograms will reappear.

Congratulations! You have now completed MR Spatial 220: Spatial sound.

Listen to the world and bring your experiences to life with sound!
