Events

Take the Microsoft Learn Challenge

Nov 19, 11 PM - Jan 10, 11 PM

Ignite Edition - Build skills in Microsoft Azure and earn a digital badge by January 10!


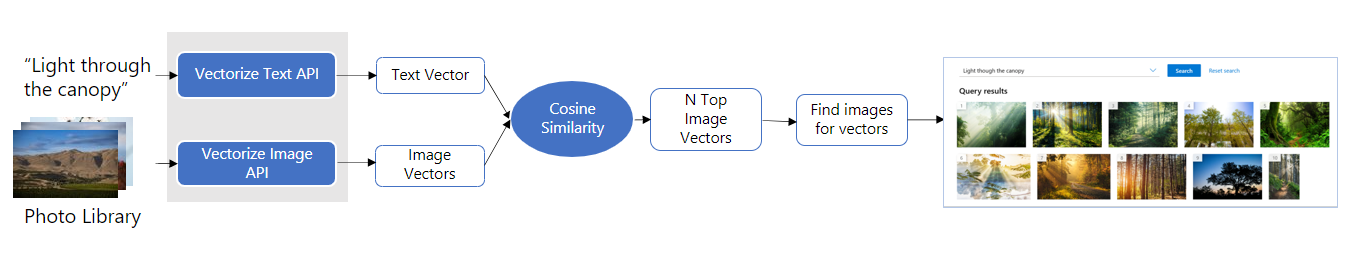

Multimodal embedding is the process of generating a vector representation of an image that captures its features and characteristics. These vectors encode the content and context of an image in a way that is compatible with text search over the same vector space.

Image retrieval systems have traditionally used features extracted from the images, such as content labels, tags, and image descriptors, to compare images and rank them by similarity. However, vector similarity search offers a number of benefits over traditional keyword-based search and is becoming a vital component in popular content search services.

Keyword search is the most basic and traditional method of information retrieval. In that approach, the search engine looks for the exact match of the keywords or phrases entered by the user in the search query and compares it with the labels and tags provided for the images. The search engine then returns images that contain those exact keywords as content tags and image labels. Keyword search relies heavily on the user's ability to use relevant and specific search terms.
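As a minimal sketch of the exact-match behavior described above, the following compares query keywords against image tags. The catalog, file names, and the `keyword_search` helper are hypothetical and exist only for illustration; they are not part of any Azure API.

```python
def keyword_search(query, catalog):
    """Return image names whose tags contain every query keyword exactly."""
    keywords = {word.lower() for word in query.split()}
    return [
        name
        for name, tags in catalog.items()
        # Set inclusion: every keyword must appear verbatim among the tags.
        if keywords <= {tag.lower() for tag in tags}
    ]


# Hypothetical catalog: image name -> content tags
catalog = {
    "beach.jpg": ["beach", "sunset", "ocean"],
    "city.jpg": ["skyline", "night", "buildings"],
    "shore.jpg": ["coast", "dusk", "sea"],
}

print(keyword_search("sunset beach", catalog))     # matches on exact tags
print(keyword_search("seaside evening", catalog))  # no exact tag match
```

The second query describes much the same scene as `shore.jpg`, but it returns nothing because none of its words appear as tags. This is the dependence on specific search terms that the paragraph above points out.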

Vector search scans large collections of vectors in a high-dimensional space to find the ones most similar to a given query vector. Because it captures the context and meaning of the search query, vector search matches on semantic similarity rather than on exact terms. This approach is often more efficient than traditional image retrieval techniques, as it can reduce the search space and improve the accuracy of the results.
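The idea can be sketched with cosine similarity, a common measure for comparing embedding vectors. This is an illustrative brute-force example and not necessarily how the service computes similarity; the toy 3-dimensional vectors, file names, and helper functions are all assumptions made for readability.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vector, index, k=2):
    """Score every stored vector against the query and keep the k best."""
    scored = [
        (name, cosine_similarity(query_vector, vector))
        for name, vector in index.items()
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy vectors standing in for real image embeddings (hypothetical values)
index = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "shore.jpg": [0.8, 0.2, 0.1],
    "city.jpg": [0.0, 0.1, 0.9],
}
query_vector = [1.0, 0.0, 0.0]  # stands in for an embedded text query

for name, score in top_k(query_vector, index):
    print(name, round(score, 3))
```

Both coastal images rank ahead of the city image even though no tags or keywords are involved. A linear scan like this is only practical for small collections; larger indexes typically rely on approximate nearest-neighbor structures.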

Multimodal embedding has a variety of applications in different fields, including:

Caution

Multimodal embedding is not designed to analyze medical images for diagnostic features or disease patterns. Please do not use Multimodal embedding for medical purposes.

Vector embeddings are a way of representing content—text or images—as vectors of real numbers in a high-dimensional space. Vector embeddings are often learned from large amounts of textual and visual data using machine learning algorithms, such as neural networks.

Each dimension of the vector corresponds to a different feature or attribute of the content, such as its semantic meaning, syntactic role, or context in which it commonly appears. In Azure AI Vision, image and text vector embeddings have 1024 dimensions.

Important

Vector embeddings can only be compared and matched if they're from the same model type. Images vectorized by one model won't be searchable through a different model. The latest Image Analysis API offers two models: version 2023-04-15, which supports text search in many languages, and the legacy 2022-04-11 model, which supports only English.
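One way to avoid mixing models by accident is to store the model version next to each vector and check it before comparing. The sketch below assumes a hypothetical `Embedding` record and `check_comparable` helper; neither is part of the Azure SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Embedding:
    """A vector plus the model version that produced it (hypothetical record)."""
    model_version: str
    vector: tuple


def check_comparable(query, stored):
    """Raise ValueError unless both embeddings come from the same model."""
    if query.model_version != stored.model_version:
        raise ValueError(
            f"Cannot compare embeddings from model {query.model_version!r} "
            f"with embeddings from model {stored.model_version!r}"
        )
    if len(query.vector) != len(stored.vector):
        raise ValueError("Embeddings have different dimensions")


# Short placeholder vectors; real embeddings are much longer.
image = Embedding("2023-04-15", (0.12, 0.40, 0.33))
text_same_model = Embedding("2023-04-15", (0.10, 0.38, 0.35))
text_legacy_model = Embedding("2022-04-11", (0.10, 0.38, 0.35))

check_comparable(text_same_model, image)  # passes silently

try:
    check_comparable(text_legacy_model, image)
except ValueError as error:
    print(error)
```

If you switch models, a guard like this makes the mismatch fail loudly, which signals that the stored images need to be vectorized again with the new model.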

The following are the main steps of the image retrieval process using Multimodal embeddings.

Note

Multimodal embedding does not do any biometric processing of human faces. For face detection and identification, see the Azure AI Face service.

The image and video retrieval services return a field called "relevance." The term "relevance" denotes a measure of similarity between a query and image or video frame embeddings. The relevance score is composed of two parts:

Important

The relevance score is a good measure to rank results such as images or video frames with respect to a single query. However, the relevance score cannot be accurately compared across queries. Therefore, it's not possible to easily map the relevance score to a confidence level. It's also not possible to trivially create a threshold algorithm to eliminate irrelevant results based solely on the relevance score.
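In practice, this suggests ranking the results of each query and keeping the top few, rather than filtering on a fixed cutoff. The sketch below assumes each result is a dictionary with a `relevance` field; the `id` values and the scores are made up for illustration.

```python
def rank_by_relevance(results, top_n=3):
    """Order one query's results by descending relevance and keep the first top_n."""
    ordered = sorted(results, key=lambda item: item["relevance"], reverse=True)
    return ordered[:top_n]


# Made-up results for a single query
results = [
    {"id": "frame-12", "relevance": 0.31},
    {"id": "frame-07", "relevance": 0.44},
    {"id": "frame-33", "relevance": 0.27},
    {"id": "frame-02", "relevance": 0.39},
]

for item in rank_by_relevance(results, top_n=2):
    print(item["id"], item["relevance"])
```

The ordering is meaningful only within this one result set. Because scores are not comparable across queries, a cutoff tuned on one query may discard good matches or admit poor ones on another.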

Image input

Text input

Enable Multimodal embeddings for your search service and follow the steps to generate vector embeddings for text and images.
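As a rough sketch of what generating embeddings can look like over REST, the following builds (but does not send) requests for image and text vectorization. The URL path, the `api-version` value, the `Ocp-Apim-Subscription-Key` header, and the request body shapes are assumptions based on the public Image Analysis REST conventions; verify them against the current API reference before use. The endpoint, key, and image URL are placeholders.

```python
import json
import urllib.request

API_VERSION = "2024-02-01"    # assumption: check the current REST reference
MODEL_VERSION = "2023-04-15"  # the multilingual model mentioned above


def build_vectorize_request(endpoint, key, operation, payload):
    """Build a POST request for an assumed retrieval:<operation> route."""
    url = (
        f"{endpoint.rstrip('/')}/computervision/retrieval:{operation}"
        f"?api-version={API_VERSION}&model-version={MODEL_VERSION}"
    )
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Ocp-Apim-Subscription-Key": key,
        },
        method="POST",
    )


# Placeholder resource details
endpoint = "https://<your-resource>.cognitiveservices.azure.com"
key = "<your-key>"

image_request = build_vectorize_request(
    endpoint, key, "vectorizeImage", {"url": "https://example.com/photo.jpg"}
)
text_request = build_vectorize_request(
    endpoint, key, "vectorizeText", {"text": "a sunset over the ocean"}
)

print(image_request.full_url)
print(text_request.full_url)
```

Using the same `model-version` for both calls keeps the image and text vectors in the same vector space. Sending a request with `urllib.request.urlopen` should return a JSON body that contains the embedding, though the exact response fields are not covered in this article.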
