Train your custom Text Analytics for health model

Training is the process where the model learns from your labeled data. After training is completed, you'll be able to view the model's performance to determine if you need to improve your model.

To train a model, you start a training job. Only successfully completed jobs create a model. Training jobs expire after seven days, after which you can no longer retrieve the job details. If your training job completed successfully and a model was created, the model isn't affected when the job expires. You can have only one training job running at a time, and you can't start other jobs in the same project until it finishes.

Training can take anywhere from a few minutes for a small number of documents up to several hours, depending on the dataset size and the complexity of your schema.

Before you train a model, you need:

- A successfully created project with a configured Azure Blob Storage account.

- Text data that has been uploaded to your storage account.

- Labeled data.

See the project development lifecycle for more information.

Before you start the training process, labeled documents in your project are divided into a training set and a testing set, each serving a different function. The training set is used to train the model: it's the set from which the model learns the labeled entities and which spans of text to extract as entities. The testing set is a blind set that isn't introduced to the model during training, only during evaluation. After training completes successfully, the model makes predictions on the documents in the testing set, and evaluation metrics are calculated from those predictions.

Model training and evaluation apply only to newly defined entities with learned components. Text Analytics for health entities have prebuilt components, so they're excluded from model training and evaluation. Make sure that all your labeled entities are adequately represented in both the training and testing sets.
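One way to check that each newly defined entity is represented in both sets is to count its labeled spans per set. The following is a minimal sketch, not part of the service: the document structure (`entities`, `category`), the function names, and the thresholds are hypothetical and chosen only for illustration. Include only your newly defined entities, because prebuilt Text Analytics for health entities are excluded from training and evaluation.

```python
from collections import Counter


def entity_counts(documents):
    """Count labeled spans per entity across a list of documents.

    Each document is assumed (hypothetically) to look like:
    {"entities": [{"category": "Facility"}, ...]}
    """
    counts = Counter()
    for doc in documents:
        for span in doc["entities"]:
            counts[span["category"]] += 1
    return counts


def underrepresented(train_docs, test_docs, min_train=10, min_test=2):
    """Return entities that fall below illustrative thresholds in either set.

    The result maps entity name -> (training count, testing count).
    The default thresholds are arbitrary examples, not service limits.
    """
    train = entity_counts(train_docs)
    test = entity_counts(test_docs)
    report = {}
    for entity in sorted(set(train) | set(test)):
        # Counter returns 0 for entities that are missing from a set.
        if train[entity] < min_train or test[entity] < min_test:
            report[entity] = (train[entity], test[entity])
    return report
```

An entity that appears in the report with a testing count of 0 would have no examples to evaluate against, which is a sign that you should label more documents or adjust the split.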

Custom Text Analytics for health supports two methods for data splitting:

- Automatically splitting the testing set from training data: The system splits your labeled data between the training and testing sets, according to the percentages you choose. The recommended percentage split is 80% for training and 20% for testing.

Note

If you choose the Automatically splitting the testing set from training data option, only the data assigned to the training set is split according to the percentages provided.

- Use a manual split of training and testing data: This method lets you define which labeled documents belong to which set. This option is only enabled if you added documents to your testing set during data labeling.
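To make the percentage split concrete, the following sketch divides a list of document names 80/20. It illustrates the idea only and isn't the algorithm the service uses. The function name, the shuffling, and the `seed` parameter are assumptions introduced for this example.

```python
import random


def split_documents(doc_names, training_pct=80, seed=0):
    """Split document names into (training, testing) lists by percentage.

    Illustrative only: the service's actual splitting logic isn't documented here.
    """
    if not 0 < training_pct < 100:
        raise ValueError("training_pct must be between 0 and 100 (exclusive)")
    shuffled = list(doc_names)
    # A seeded generator keeps the example reproducible.
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * training_pct / 100)
    return shuffled[:cut], shuffled[cut:]


train, test = split_documents([f"doc{i}.txt" for i in range(10)])
print(len(train), len(test))  # 8 2
```

With very small datasets, a 20% testing share may contain only a few documents, so some entities may not appear in the testing set at all.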

To start training your model from within Language Studio:

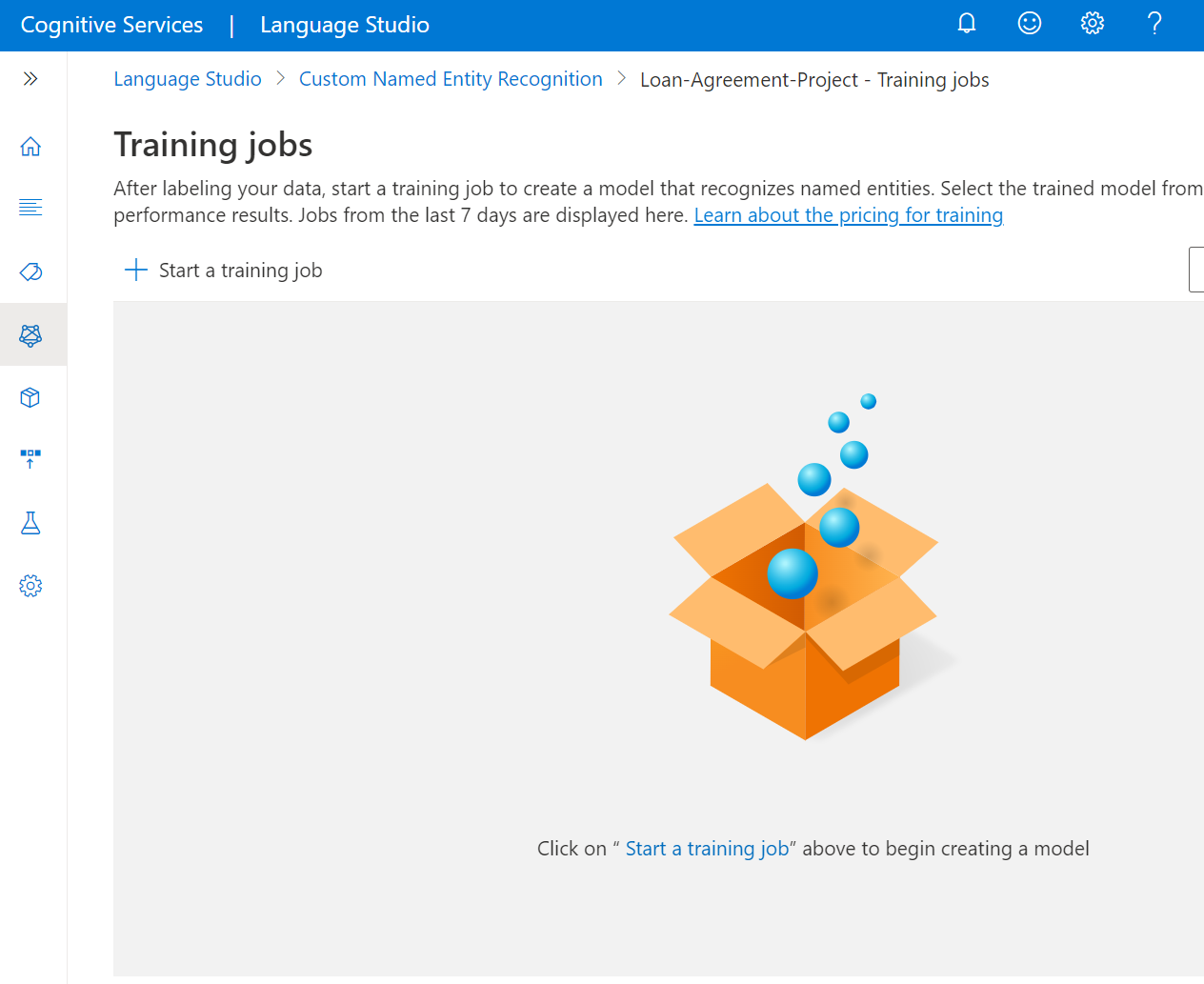

1. Select Training jobs from the left side menu.

2. Select Start a training job from the top menu.

3. Select Train a new model and type the model name in the text box. You can also overwrite an existing model by selecting the corresponding option and choosing the model you want to overwrite from the dropdown menu. Overwriting a trained model is irreversible, but it won't affect your deployed models until you deploy the new model.

4. Select a data splitting method. You can choose Automatically splitting the testing set from training data, where the system splits your labeled data between the training and testing sets according to the specified percentages. Or you can choose Use a manual split of training and testing data; this option is only enabled if you have added documents to your testing set. See data labeling and how to train a model for information about data splitting.

5. Select the Train button.

If you select the Training Job ID from the list, a side pane will appear where you can check the Training progress, Job status, and other details for this job.
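The choices made in Language Studio (a model name and a data splitting method) can also be written down as a training request body. The sketch below only builds and prints such a body; it doesn't call any service. The field names (`modelLabel`, `trainingConfigVersion`, `evaluationOptions`, `kind`, `trainingSplitPercentage`, `testingSplitPercentage`) are assumptions based on the Language authoring REST API and should be verified against the current API reference. The function name and parameters are hypothetical.

```python
import json


def build_training_request(model_name, training_pct=None, config_version=None):
    """Build a training request body as a plain dictionary.

    training_pct=None selects a manual split; a number selects a percentage split.
    All field names are assumptions; check them against the REST API reference.
    """
    if not model_name:
        raise ValueError("model_name must not be empty")
    if training_pct is None:
        # Manual split: relies on documents already assigned to the testing set.
        evaluation = {"kind": "manual"}
    else:
        if not 0 < training_pct < 100:
            raise ValueError("training_pct must be between 0 and 100 (exclusive)")
        evaluation = {
            "kind": "percentage",
            "trainingSplitPercentage": training_pct,
            "testingSplitPercentage": 100 - training_pct,
        }
    body = {"modelLabel": model_name, "evaluationOptions": evaluation}
    if config_version is not None:
        body["trainingConfigVersion"] = config_version
    return body


print(json.dumps(build_training_request("my-model", training_pct=80), indent=2))
```

Keeping the two percentages derived from a single value avoids submitting a split that doesn't add up to 100.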

Note

- Only successfully completed training jobs will generate models.

- Training can take anywhere from a couple of minutes to several hours, depending on the size of your labeled data.

- You can only have one training job running at a time. You can't start another training job within the same project until the running job is completed.

To cancel a training job from within Language Studio, go to the Training jobs page. Select the training job you want to cancel and select Cancel from the top menu.

After training is completed, you can view the model's performance and improve the model if needed. Once you're satisfied with your model, you can deploy it, making it available to use for extracting entities from text.