APPLIES TO:

Azure CLI ml extension v2 (current)

Python SDK azure-ai-ml v2 (current)

This article describes how to troubleshoot and resolve common Azure Machine Learning online endpoint deployment and scoring issues.

The document structure reflects the way you should approach troubleshooting:

- Use local deployment to test and debug your models locally before deploying in the cloud.

- Use container logs to help debug issues.

- Understand common deployment errors that might arise and how to fix them.

The HTTP status codes section explains how invocation and prediction errors map to HTTP status codes when you score endpoints with REST requests.

Prerequisites

- An active Azure subscription with the free or paid version of Azure Machine Learning. Get a free trial Azure subscription.

- An Azure Machine Learning workspace.

- The Azure CLI and Azure Machine Learning CLI v2. Install, set up, and use the CLI (v2).

Request tracing

There are two supported tracing headers:

- x-request-id is reserved for server tracing. Azure Machine Learning overrides this header to ensure it's a valid GUID. When you create a support ticket for a failed request, attach the failed request ID to expedite the investigation. Alternatively, provide the name of the region and the endpoint name.

- x-ms-client-request-id is available for client tracing scenarios. This header accepts only alphanumeric characters, hyphens, and underscores, and is truncated to a maximum of 40 characters.
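As a rough illustration of the client header constraints above, the following Python sketch builds a value for x-ms-client-request-id on the client side. The helper names make_client_request_id and tracing_headers and the prefix parameter are hypothetical, and the sketch assumes that "alphanumeric" means ASCII letters and digits.

```python
import re
import uuid

# Limit and allowed characters as described for x-ms-client-request-id.
MAX_CLIENT_REQUEST_ID_LENGTH = 40
# Assumption: "alphanumeric" means ASCII letters and digits only.
_DISALLOWED = re.compile(r"[^A-Za-z0-9_-]")


def make_client_request_id(prefix: str = "") -> str:
    """Build a client request ID (hypothetical helper, not an Azure API)."""
    unique_part = uuid.uuid4().hex  # 32 hexadecimal characters
    raw = f"{prefix}-{unique_part}" if prefix else unique_part
    cleaned = _DISALLOWED.sub("", raw)  # drop characters the header rejects
    return cleaned[:MAX_CLIENT_REQUEST_ID_LENGTH]  # truncate locally to 40


def tracing_headers(prefix: str = "") -> dict:
    """Return a headers dictionary that carries the client tracing ID."""
    return {"x-ms-client-request-id": make_client_request_id(prefix)}
```

Sanitizing and truncating before you send the request means the value in your own logs should match the value the service records, which can make correlation easier.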

Deploy locally

Local deployment means deploying a model to a local Docker environment. Local deployment supports creation, update, and deletion of a local endpoint, and allows you to invoke and get logs from the endpoint. Local deployment is useful for testing and debugging before deployment to the cloud.

Tip

You can also use the Azure Machine Learning inference HTTP server Python package to debug your scoring script locally. The inference server lets you debug the scoring script before you deploy to local endpoints, so the deployment container configuration doesn't affect your debugging.

You can deploy locally with Azure CLI or Python SDK. Azure Machine Learning studio doesn't support local deployment or local endpoints.

To use local deployment, add --local to the appropriate command.

az ml online-deployment create --endpoint-name <endpoint-name> -n <deployment-name> -f <spec_file.yaml> --local

The following steps occur during local deployment:

- Docker either builds a new container image or pulls an existing image from the local Docker cache. Docker uses an existing image if one matches the environment part of the specification file.

- Docker starts the new container with mounted local artifacts such as model and code files.

For more information, see Deploy and debug locally by using a local endpoint.

Tip

You can use Visual Studio Code to test and debug your endpoints locally. For more information, see Debug online endpoints locally in Visual Studio Code.

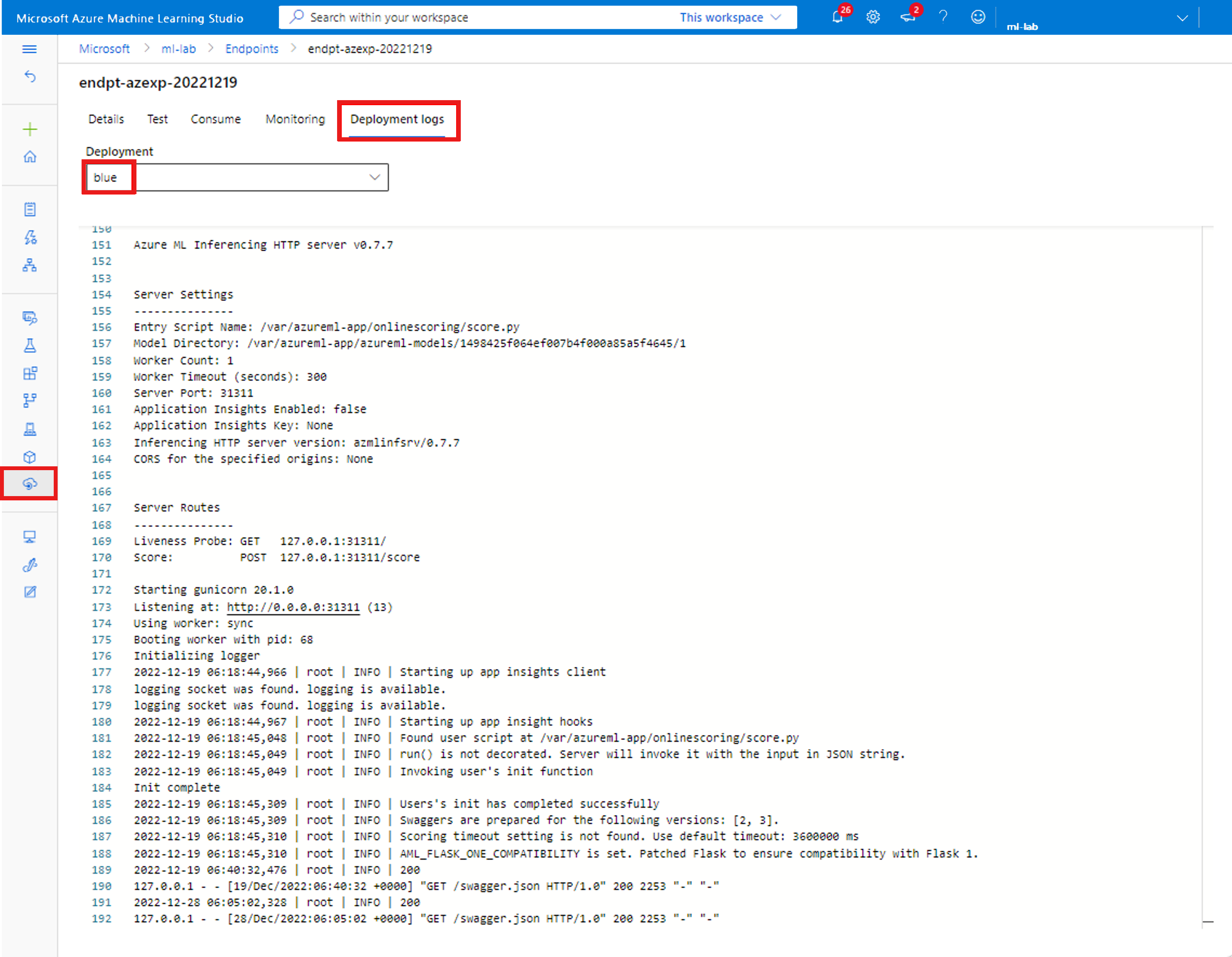

Get container logs

You can't get direct access to a virtual machine (VM) where a model deploys, but you can get logs from some of the containers that are running on the VM. The amount of information you get depends on the provisioning status of the deployment. If the specified container is up and running, you see its console output. Otherwise, you get a message to try again later.

You can get logs from the following types of containers:

- The inference server console log contains the output of print and logging functions from your scoring script score.py code.

- Storage initializer logs contain information on whether code and model data successfully downloaded to the container. The container runs before the inference server container starts to run.

For Kubernetes online endpoints, administrators can directly access the cluster where you deploy the model and check the logs in Kubernetes. For example:

kubectl -n <compute-namespace> logs <container-name>

Note

If you use Python logging, make sure to use the correct logging level, such as INFO, for the messages to be published to logs.
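The following is a minimal sketch of a scoring script that logs at the INFO level, so that its messages can show up in the inference server console log. The placeholder model, the logger setup, and the "data" key in the request payload are assumptions for illustration only; your own score.py loads a real model and defines its own payload shape.

```python
import json
import logging

# basicConfig adds a console handler only if the root logger has none yet.
logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)  # make sure info() messages aren't filtered out

model = None


def _placeholder_model(rows):
    """Stand-in for a real model: returns the sum of each input row."""
    return [sum(row) for row in rows]


def init():
    """Called once when the container starts; load the model here."""
    global model
    model = _placeholder_model
    logger.info("init() finished, model loaded")


def run(raw_data):
    """Called for each scoring request with the request body as a string."""
    rows = json.loads(raw_data)["data"]  # assumed payload shape
    logger.info("run() received %d rows", len(rows))
    return model(rows)
```

Messages logged below the configured level, such as logger.debug() calls in this sketch, are filtered out and don't reach the logs.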

See log output from containers

To see log output from a container, use the following command:

az ml online-deployment get-logs -g <resource-group> -w <workspace-name> -e <endpoint-name> -n <deployment-name> -l 100

Or

az ml online-deployment get-logs --resource-group <resource-group> --workspace-name <workspace-name> --endpoint-name <endpoint-name> --name <deployment-name> --lines 100

By default, logs are pulled from the inference server. You can get logs from the storage initializer container by passing --container storage-initializer.

The commands above include --resource-group and --workspace-name. You can also set these parameters globally via az configure to avoid repeating them in each command. For example:

az configure --defaults group=<resource-group> workspace=<workspace-name>

To check your current configuration settings, run:

az configure --list-defaults

To see more information, add --help or --debug to commands.

Common deployment errors

Deployment operation status can report the following common deployment errors:

- ImageBuildFailure

- OutOfQuota

- BadArgument

  Common to both managed online endpoint and Kubernetes online endpoint:

  - Subscription doesn't exist

  - Startup task failed due to authorization error

  - Startup task failed due to incorrect role assignments on resource

  - Startup task failed due to incorrect role assignments on storage account when MDC is enabled

  - Invalid template function specification

  - Unable to download user container image

  - Unable to download user model

  Limited to Kubernetes online endpoint:

  - Resource requests greater than limits

  - Azureml-fe not ready

- ResourceNotReady

- ResourceNotFound

- WorkspaceManagedNetworkNotReady

- OperationCanceled

- SecretsInjectionError

- InternalServerError

If you're creating or updating a Kubernetes online deployment, also see Common errors specific to Kubernetes deployments.

ERROR: ImageBuildFailure

This error is returned when the Docker image environment is being built. You can check the build log for more information on the failure. The build log is located in the default storage for your Azure Machine Learning workspace.

The exact location might be returned as part of the error, for example "the build log under the storage account '[storage-account-name]' in the container '[container-name]' at the path '[path-to-the-log]'".

The following sections describe common image build failure scenarios:

- Azure Container Registry authorization failure

- Image build compute not set in a private workspace with virtual network

- Image build timing out

- Generic or unknown failure

Azure Container Registry authorization failure

An error message mentions "container registry authorization failure" when you can't access the container registry with the current credentials. The desynchronization of workspace resource keys can cause this error, and it takes some time to automatically synchronize. However, you can manually call for key synchronization with az ml workspace sync-keys, which might resolve the authorization failure.

Container registries that are behind a virtual network might also encounter this error if they're set up incorrectly. Verify that the virtual network is set up properly.

Image build compute not set in a private workspace with virtual network

If the error message mentions "failed to communicate with the workspace's container registry", and you're using a virtual network, and the workspace's container registry is private and configured with a private endpoint, you need to allow Container Registry to build images in the virtual network.

Image build timing out

Image build timeouts are often due to an image being too large to be able to complete building within the deployment creation timeframe. Check your image build logs at the location that the error specifies. The logs are cut off at the point that the image build timed out.

To resolve this issue, build your image separately so the image only needs to be pulled during deployment creation. Also review the default probe settings if you have ImageBuild timeouts.

Generic image build failure

Check the build log for more information on the failure. If no obvious error is found in the build log and the last line is Installing pip dependencies: ...working..., a dependency might be causing the error. Pinning version dependencies in your conda file can fix this problem.

Try deploying locally to test and debug your models before deploying to the cloud.

ERROR: OutOfQuota

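The following resources might run out of quota when using Azure services:

- CPU quota

- Cluster quota

- Disk quota

- Memory quota

- Role assignment quota

- Endpoint quota

- Region-wide VM capacity

- Other quota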

For Kubernetes online endpoints only, the Kubernetes resource might also run out of quota.

CPU quota

You need to have enough compute quota to deploy a model. The CPU quota defines how many virtual cores are available per subscription, per workspace, per SKU, and per region. Each deployment subtracts from available quota and adds it back after deletion, based on type of the SKU.

You can check if there are unused deployments you can delete, or you can submit a request for a quota increase.

Cluster quota

The OutOfQuota error occurs when you don't have enough Azure Machine Learning compute cluster quota. The quota defines the total number of clusters per subscription that you can use at the same time to deploy CPU or GPU nodes in the Azure cloud.

Disk quota

The OutOfQuota error occurs when the size of the model is larger than the available disk space and the model can't be downloaded. Try using a SKU with more disk space or reducing the image and model size.

Memory quota

The OutOfQuota error occurs when the memory footprint of the model is larger than the available memory. Try a SKU with more memory.

Role assignment quota

When you create a managed online endpoint, role assignment is required for the managed identity to access workspace resources. If you hit the role assignment limit, try to delete some unused role assignments in this subscription. You can check all role assignments by selecting Access control for your Azure subscription in the Azure portal.

Endpoint quota

Try to delete some unused endpoints in this subscription. If all your endpoints are actively in use, try requesting an endpoint limit increase. To learn more about the endpoint limit, see Endpoint quota with Azure Machine Learning online endpoints and batch endpoints.

Kubernetes quota

The OutOfQuota error occurs when the requested CPU or memory can't be provided due to nodes being unschedulable for this deployment. For example, nodes might be cordoned or otherwise unavailable.

The error message typically indicates the resource insufficiency in the cluster, for example OutOfQuota: Kubernetes unschedulable. Details:0/1 nodes are available: 1 Too many pods.... This message means that there are too many pods in the cluster and not enough resources to deploy the new model based on your request.

Try the following mitigations to address this issue:

- IT operators who maintain the Kubernetes cluster can try to add more nodes or clear some unused pods in the cluster to release some resources.

- Machine learning engineers who deploy models can try to reduce the resource request of the deployment.

  - If you directly define the resource request in the deployment configuration via the resource section, try to reduce the resource request.

  - If you use instance_type to define resources for model deployment, contact the IT operator to adjust the instance type resource configuration. For more information, see Create and manage instance types.

Region-wide VM capacity

Due to a lack of Azure Machine Learning capacity in the region, the service failed to provision the specified VM size. Retry later or try deploying to a different region.

Other quota

To run the score.py file you provide as part of the deployment, Azure creates a container that includes all the resources that the score.py needs. Azure Machine Learning then runs the scoring script on that container. If your container can't start, scoring can't happen. The container might be requesting more resources than the instance_type can support. Consider updating the instance_type of the online deployment.

To get the exact reason for the error, run the following command:

az ml online-deployment get-logs -e <endpoint-name> -n <deployment-name> -l 100

ERROR: BadArgument

You might get this error when you use either managed online endpoints or Kubernetes online endpoints, for the following reasons:

- Subscription doesn't exist

- Startup task failed due to authorization error

- Startup task failed due to incorrect role assignments on resource

- Invalid template function specification

- Unable to download user container image

- Unable to download user model

- MLflow model format with private network is unsupported

You might also get this error when using Kubernetes online endpoints only, for the following reasons:

- Resource requests greater than limits

- Azureml-fe not ready

Subscription doesn't exist

The referenced Azure subscription must exist and be active. This error occurs when Azure can't find the subscription ID you entered. The error might be due to a typo in the subscription ID. Double-check that the subscription ID was correctly entered and is currently active.

Authorization error

After you provision the compute resource when you create a deployment, Azure pulls the user container image from the workspace container registry and mounts the user model and code artifacts into the user container from the workspace storage account. Azure uses managed identities to access the storage account and the container registry.

If you create the associated endpoint with user-assigned identity, the user's managed identity must have Storage Blob Data Reader permission on the workspace storage account and AcrPull permission on the workspace container registry. Make sure your user-assigned identity has the right permissions.

When MDC is enabled, the user's managed identity must have Storage Blob Data Contributor permission on the workspace storage account. For more information, see Storage Blob Authorization Error when MDC is enabled.

If you create the associated endpoint with system-assigned identity, Azure role-based access control (RBAC) permission is automatically granted and no further permissions are needed. For more information, see Container registry authorization error.

Invalid template function specification

This error occurs when a template function was specified incorrectly. Either fix the policy or remove the policy assignment to unblock. The error message might include the policy assignment name and the policy definition to help you debug this error. See Azure policy definition structure for tips to avoid template failures.

Unable to download user container image

The user container might not be found. Check the container logs to get more details.

Make sure the container image is available in the workspace container registry. For example, if the image is testacr.azurecr.io/azureml/azureml_92a029f831ce58d2ed011c3c42d35acb:latest, you can use the following command to check the repository:

az acr repository show-tags -n testacr --repository azureml/azureml_92a029f831ce58d2ed011c3c42d35acb --orderby time_desc --output table
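If you only have the full image reference, the registry name and repository for the command above can be derived from it. The following Python sketch shows one way to do that, assuming the reference has the form registry-host/repository:tag. The helper names are hypothetical, and references pinned by digest (with @sha256:) aren't handled.

```python
def split_acr_image(image: str):
    """Split 'host/repository:tag' into (registry name, repository, tag)."""
    registry_host, _, remainder = image.partition("/")
    repository, _, tag = remainder.rpartition(":")
    if not repository:  # the reference had no ':tag' suffix
        repository, tag = remainder, ""
    registry_name = registry_host.split(".")[0]  # 'testacr' from 'testacr.azurecr.io'
    return registry_name, repository, tag


def show_tags_command(image: str):
    """Build the argument list for the 'az acr repository show-tags' check."""
    registry_name, repository, _ = split_acr_image(image)
    return [
        "az", "acr", "repository", "show-tags",
        "-n", registry_name,
        "--repository", repository,
        "--orderby", "time_desc",
        "--output", "table",
    ]
```

You can pass the returned list to subprocess.run if you want to run the check from a script.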

Unable to download user model

The user model might not be found. Check the container logs to get more details. Make sure you registered the model to the same workspace as the deployment.

To show details for a model in a workspace, run the following command. You must specify either version or label to get the model information.

az ml model show --name <model-name> --version <version>

Also check if the blobs are present in the workspace storage account. For example, if the blob is https://foobar.blob.core.windows.net/210212154504-1517266419/WebUpload/210212154504-1517266419/GaussianNB.pkl, you can use the following command to check if the blob exists:

az storage blob exists --account-name <storage-account-name> --container-name <container-name> --name WebUpload/210212154504-1517266419/GaussianNB.pkl --subscription <sub-name>
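The account name, container name, and blob name in that command all come from the blob URL. A small Python sketch of the split, assuming the URL follows the https://account.blob.core.windows.net/container/blob-path pattern shown in the example (split_blob_url is a hypothetical helper):

```python
from urllib.parse import urlparse


def split_blob_url(url: str):
    """Split a blob URL into (storage account, container, blob name)."""
    parsed = urlparse(url)
    account = parsed.netloc.split(".")[0]  # 'foobar' from 'foobar.blob.core.windows.net'
    # The first path segment is the container; the rest is the blob name.
    container, _, blob_name = parsed.path.lstrip("/").partition("/")
    return account, container, blob_name


example_url = (
    "https://foobar.blob.core.windows.net/210212154504-1517266419/"
    "WebUpload/210212154504-1517266419/GaussianNB.pkl"
)
account, container, blob_name = split_blob_url(example_url)
```

For the example URL, the blob name is WebUpload/210212154504-1517266419/GaussianNB.pkl, which is the value passed to --name in the command above.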

If the blob is present, you can use the following command to get the logs from the storage initializer:

az ml online-deployment get-logs --endpoint-name <endpoint-name> --name <deployment-name> --container storage-initializer

MLflow model format with private network is unsupported

You can't use the private network feature with an MLflow model format if you're using the legacy network isolation method for managed online endpoints. If you need to deploy an MLflow model with the no-code deployment approach, try using a workspace managed virtual network.

Resource requests greater than limits

Requests for resources must be less than or equal to limits. If you don't set limits, Azure Machine Learning sets default values when you attach your compute to a workspace. You can check the limits in the Azure portal or by using the az ml compute show command.
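You can check this rule before you submit a deployment. The following is a minimal Python sketch, assuming the requests and limits are written as Kubernetes quantity strings such as 500m or 2Gi and passed in as plain dictionaries. The helpers parse_quantity and find_request_violations are hypothetical, aren't part of the Azure CLI or SDK, and handle only the most common suffixes.

```python
from decimal import Decimal

# Common Kubernetes quantity suffixes (binary, decimal, and milli).
_SUFFIXES = {
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20,
    "Gi": Decimal(2) ** 30, "Ti": Decimal(2) ** 40,
    "m": Decimal("0.001"), "k": Decimal(10) ** 3,
    "M": Decimal(10) ** 6, "G": Decimal(10) ** 9, "T": Decimal(10) ** 12,
}


def parse_quantity(text: str) -> Decimal:
    """Convert a quantity string such as '500m' or '2Gi' to a plain number."""
    text = text.strip()
    # Try two-character suffixes first so that 'Mi' isn't mistaken for 'i'-less forms.
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if text.endswith(suffix):
            return Decimal(text[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(text)


def find_request_violations(requests: dict, limits: dict) -> list:
    """Return the resource names whose request is greater than the limit."""
    violations = []
    for name, requested in requests.items():
        if name in limits and parse_quantity(requested) > parse_quantity(limits[name]):
            violations.append(name)
    return violations
```

For example, find_request_violations({"cpu": "2", "memory": "4Gi"}, {"cpu": "1500m", "memory": "4Gi"}) reports only cpu, because 2 cores exceeds 1.5 cores while the memory request equals its limit.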

Azureml-fe not ready

The front-end azureml-fe component that routes incoming inference requests to deployed services installs during k8s-extension installation and automatically scales as needed. This component should have at least one healthy replica on the cluster.

You get this error if the component isn't available when you trigger a Kubernetes online endpoint or deployment creation or update request. Check the pod status and logs to fix this issue. You can also try to update the k8s-extension installed on the cluster.

ERROR: ResourceNotReady

To run the score.py file you provide as part of the deployment, Azure creates a container that includes all the resources that the score.py needs, and runs the scoring script on that container. The error in this scenario is that this container crashes when running, so scoring can't happen. This error can occur under one of the following conditions:

- There's an error in score.py. Use get-logs to diagnose common problems, such as:

  - A package that score.py tries to import that isn't included in the conda environment

  - A syntax error

  - A failure in the init() method

  If get-logs doesn't produce any logs, it usually means that the container failed to start. To debug this issue, try deploying locally.

- Readiness or liveness probes aren't set up correctly.

- Container initialization takes too long, so the readiness or liveness probe fails beyond the failure threshold. In this case, adjust probe settings to allow a longer time to initialize the container. Or try a bigger supported VM SKU, which accelerates the initialization.

- There's an error in the container environment setup, such as a missing dependency.

- If you get the TypeError: register() takes 3 positional arguments but 4 were given error, check the dependency between flask v2 and azureml-inference-server-http. For more information, see Troubleshoot HTTP server issues.

ERROR: ResourceNotFound

You might get this error when using either managed online endpoint or Kubernetes online endpoint, for the following reasons:

- Azure Resource Manager can't find a required resource

- Container registry is private or otherwise inaccessible

Resource Manager can't find a resource

This error occurs when Azure Resource Manager can't find a required resource. For example, you can receive this error if a storage account can't be found at the path specified. Double check path or name specifications for accuracy and spelling. For more information, see Resolve errors for Resource Not Found.

Container registry authorization error

This error occurs when an image belonging to a private or otherwise inaccessible container registry is supplied for deployment. Azure Machine Learning APIs can't accept private registry credentials.

To mitigate this error, either ensure that the container registry isn't private, or take the following steps:

- Grant your private registry's acrPull role to the system identity of your online endpoint.

- In your environment definition, specify the address of your private image and give the instruction to not modify or build the image.

If this mitigation succeeds, the image doesn't require building, and the final image address is the given image address. At deployment time, your online endpoint's system identity pulls the image from the private registry.

For more diagnostic information, see How to use workspace diagnostics.

ERROR: WorkspaceManagedNetworkNotReady

This error occurs if you try to create an online deployment that enables a workspace managed virtual network, but the managed virtual network isn't provisioned yet. Provision the workspace managed virtual network before you create an online deployment.

To manually provision the workspace managed virtual network, follow the instructions at Manually provision a managed VNet. You may then start creating online deployments. For more information, see Network isolation with managed online endpoint and Secure your managed online endpoints with network isolation.

ERROR: OperationCanceled

You might get this error when using either managed online endpoint or Kubernetes online endpoint, for the following reasons:

- Operation was canceled by another operation that has a higher priority

- Operation was canceled due to a previous operation waiting for lock confirmation

Operation canceled by another higher priority operation

Azure operations have a certain priority level and execute from highest to lowest. This error happens when another operation that has a higher priority overrides your operation. Retrying the operation might allow it to perform without cancellation.

Operation canceled waiting for lock confirmation

Azure operations have a brief waiting period after being submitted, during which they retrieve a lock to ensure that they don't encounter race conditions. This error happens when the operation you submitted is the same as another operation. The other operation is currently waiting for confirmation that it received the lock before it proceeds.

You might have submitted a similar request too soon after the initial request. Retrying the operation after waiting up to a minute might allow it to perform without cancellation.
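The wait-and-retry advice can be scripted. The following Python sketch is one possible shape, under the assumption that your code raises an exception when an operation is canceled. The retry_if_canceled helper, the was_canceled callback, and the default of three attempts are hypothetical choices, not Azure SDK behavior.

```python
import time


def retry_if_canceled(operation, was_canceled, attempts=3, wait_seconds=60.0, sleep=time.sleep):
    """Run operation(); if it fails with a cancellation, wait and try again.

    operation: callable with no arguments that submits the request.
    was_canceled: callable that takes the exception and returns True
        when the failure is an OperationCanceled-style error.
    """
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except Exception as error:
            # Re-raise errors that aren't cancellations, and the final failure.
            if not was_canceled(error) or attempt == attempts:
                raise
            sleep(wait_seconds)  # give the earlier operation time to finish
```

The sleep parameter is injectable so that you can test the retry logic without actually waiting.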

ERROR: SecretsInjectionError

Secret retrieval and injection during online deployment creation uses the identity associated with the online endpoint to retrieve secrets from the workspace connections or key vaults. This error happens for one of the following reasons:

- The endpoint identity doesn't have Azure RBAC permission to read the secrets from the workspace connections or key vaults, even though the deployment definition specified the secrets as references mapped to environment variables. Role assignment might take time for changes to take effect.

- The format of the secret references is invalid, or the specified secrets don't exist in the workspace connections or key vaults.

For more information, see Secret injection in online endpoints (preview) and Access secrets from online deployment using secret injection (preview).

ERROR: InternalServerError

This error means there's something wrong with the Azure Machine Learning service that needs to be fixed. Submit a customer support ticket with all information needed to address the issue.

Common errors specific to Kubernetes deployments

Identity and authentication errors:

- ACRSecretError

- TokenRefreshFailed

- GetAADTokenFailed

- ACRAuthenticationChallengeFailed

- ACRTokenExchangeFailed

- KubernetesUnaccessible

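Crashloopbackoff errors:

- ImagePullLoopBackOff

- DeploymentCrashLoopBackOff

- KubernetesCrashLoopBackOff

Scoring script errors:

- UserScriptInitFailed

- UserScriptImportError

- UserScriptFunctionNotFound

Other errors:

- NamespaceNotFound

- EndpointNotFound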

- EndpointAlreadyExists

- ScoringFeUnhealthy

- ValidateScoringFailed

- InvalidDeploymentSpec

- PodUnschedulable

- PodOutOfMemory

- InferencingClientCallFailed

ERROR: ACRSecretError

When you create or update Kubernetes online deployments, you might get this error for one of the following reasons:

- Role assignment isn't completed. Wait for a few seconds and try again.

- The Azure Arc-enabled Kubernetes cluster or AKS Azure Machine Learning extension isn't properly installed or configured. Check the Azure Arc-enabled Kubernetes or Azure Machine Learning extension configuration and status.

- The Kubernetes cluster has improper network configuration. Check the proxy, network policy, or certificate.

- Your private AKS cluster doesn't have the proper endpoints. Make sure to set up private endpoints for Container Registry, the storage account, and the workspace in the AKS virtual network.

- Your Azure Machine Learning extension version is v1.1.25 or lower. Make sure your extension version is greater than v1.1.25.
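When you compare extension versions, compare them numerically rather than as strings, because "1.1.9" sorts after "1.1.25" as text. A minimal Python sketch, assuming the version is a dotted numeric string with an optional leading v (both helper names are hypothetical):

```python
def parse_version(text: str) -> tuple:
    """Turn 'v1.1.25' or '1.1.25' into a tuple of integers, (1, 1, 25)."""
    return tuple(int(part) for part in text.strip().lstrip("vV").split("."))


def extension_needs_upgrade(installed: str, threshold: str = "1.1.25") -> bool:
    """Return True when the installed version is the threshold or lower."""
    return parse_version(installed) <= parse_version(threshold)
```

With this check, extension_needs_upgrade("v1.1.9") is True and extension_needs_upgrade("1.1.26") is False.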

ERROR: TokenRefreshFailed

This error occurs because the Kubernetes cluster identity isn't set properly, so the extension can't get a principal credential from Azure. Reinstall the Azure Machine Learning extension and try again.

ERROR: GetAADTokenFailed

This error occurs because the Kubernetes cluster's request for a Microsoft Entra ID token failed or timed out. Check your network access and then try again.

- Follow instructions at Use Kubernetes compute to check the outbound proxy and make sure the cluster can connect to the workspace. You can find the workspace endpoint URL in the online endpoint Custom Resource Definition (CRD) in the cluster.

- Check whether the workspace allows public access. Regardless of whether the AKS cluster itself is public or private, if a private workspace disables public network access, the Kubernetes cluster can communicate with that workspace only through a private link. For more information, see What is a secure AKS inferencing environment.

ERROR: ACRAuthenticationChallengeFailed

This error occurs because the Kubernetes cluster can't reach the workspace Container Registry service to do an authentication challenge. Check your network, especially Container Registry public network access, then try again. You can follow the troubleshooting steps in GetAADTokenFailed to check the network.

ERROR: ACRTokenExchangeFailed

This error occurs because the Microsoft Entra ID token isn't yet authorized, so the Kubernetes cluster's attempt to exchange it for a Container Registry token fails. The role assignment takes some time, so wait a minute and then try again.

This failure might also be due to too many concurrent requests to the Container Registry service. This error should be transient, and you can try again later.

ERROR: KubernetesUnaccessible

You might get the following error during Kubernetes model deployments:

{"code":"BadRequest","statusCode":400,"message":"The request is invalid.","details":[{"code":"KubernetesUnaccessible","message":"Kubernetes error: AuthenticationException. Reason: InvalidCertificate"}],...}

To mitigate this error, you can rotate the AKS certificate for the cluster. The new certificate should be updated after 5 hours, so wait 5 hours and then redeploy the model. For more information, see Certificate rotation in Azure Kubernetes Service (AKS).

ERROR: ImagePullLoopBackOff

You might get this error when you create or update Kubernetes online deployments because the images can't be downloaded from the container registry, which results in an image pull failure. Check the cluster network policy and the workspace container registry to see if the cluster can pull images from the container registry.

ERROR: DeploymentCrashLoopBackOff

You might get this error when you create or update Kubernetes online deployments because the user container crashed when initializing. There are two possible reasons for this error:

- The user script score.py has a syntax error or import error that raises exceptions during initialization.

- The deployment pod needs more memory than its limit.

To mitigate this error, first check the deployment logs for any exceptions in user scripts. If the error persists, try to extend the resource/instance type memory limit.

ERROR: KubernetesCrashLoopBackOff

You might get this error when you create or update Kubernetes online endpoints or deployments for one of the following reasons:

- One or more pods is stuck in CrashLoopBackoff status. Check if the deployment log exists and there are error messages in the log.

- There's an error in score.py and the container crashed when initializing your score code. Follow instructions under ERROR: ResourceNotReady.

- Your scoring process needs more memory than your deployment configuration limit. You can try to update the deployment with a larger memory limit.

ERROR: NamespaceNotFound

You might get this error when you create or update Kubernetes online endpoints because the namespace your Kubernetes compute used is unavailable in your cluster. Check the Kubernetes compute in your workspace portal and check the namespace in your Kubernetes cluster. If the namespace isn't available, detach the legacy compute and reattach to create a new one, specifying a namespace that already exists in your cluster.

ERROR: UserScriptInitFailed

You might get this error when you create or update Kubernetes online deployments because the init function in your uploaded score.py file raised an exception. Check the deployment logs to see the exception message in detail, and fix the exception.

ERROR: UserScriptImportError

You might get this error when you create or update Kubernetes online deployments because the score.py file that you uploaded imports unavailable packages. Check the deployment logs to see the exception message in detail, and fix the exception.

ERROR: UserScriptFunctionNotFound

You might get this error when you create or update Kubernetes online deployments because the score.py file that you uploaded doesn't have a function named init() or run(). Check your code and add the function.

ERROR: EndpointNotFound

You might get this error when you create or update Kubernetes online deployments because the system can't find the endpoint resource for the deployment in the cluster. Create the deployment in an existing endpoint or create the endpoint first in your cluster.

ERROR: EndpointAlreadyExists

You might get this error when you create a Kubernetes online endpoint because the endpoint already exists in your cluster. The endpoint name should be unique per workspace and per cluster, so create an endpoint with another name.

ERROR: ScoringFeUnhealthy

You might get this error when you create or update a Kubernetes online endpoint or deployment because the azureml-fe system service that runs in the cluster isn't found or is unhealthy. To mitigate this issue, reinstall or update the Azure Machine Learning extension in your cluster.

ERROR: ValidateScoringFailed

You might get this error when you create or update Kubernetes online deployments because the scoring request URL validation failed when processing the model. Check the endpoint URL and then try to redeploy.

ERROR: InvalidDeploymentSpec

You might get this error when you create or update Kubernetes online deployments because the deployment spec is invalid. Check the error message to make sure the instance count is valid. If you enabled autoscaling, make sure the minimum instance count and maximum instance count are both valid.

ERROR: PodUnschedulable

You might get this error when you create or update Kubernetes online endpoints or deployments for one of the following reasons:

- The system can't schedule the pod to nodes due to insufficient resources in your cluster.

- No node matches the node affinity selector.

To mitigate this error, follow these steps:

- Check the node selector definition of the instance_type you used, and the node label configuration of your cluster nodes.

- Check the instance_type and the node SKU size for the AKS cluster or the node resource for the Azure Arc-enabled Kubernetes cluster.

  - If the cluster is under-resourced, reduce the instance type resource requirement or use another instance type with smaller resource requirements.

  - If the cluster has no more resources to meet the requirement of the deployment, delete some deployments to release resources.

ERROR: PodOutOfMemory

You might get this error when you create or update an online deployment because the memory limit you specified for the deployment is insufficient. To mitigate this error, set the memory limit to a larger value or use a bigger instance type.

ERROR: InferencingClientCallFailed

You might get this error when you create or update Kubernetes online endpoints or deployments because the k8s-extension of the Kubernetes cluster can't be reached. In this case, detach and then reattach your compute.

When you reattach the compute, use the same configuration as the detached compute, such as the compute name and namespace, to avoid other errors. If the problem persists, ask an administrator who can access the cluster to run kubectl get po -n azureml to check whether the relay server pods are running.

Model consumption issues

Common model consumption errors resulting from the endpoint invoke operation status include bandwidth limit issues, CORS policy, and various HTTP status codes.

Bandwidth limit issues

Managed online endpoints have bandwidth limits for each endpoint. You can find the limit configuration in limits for online endpoints. If your bandwidth usage exceeds the limit, your request is delayed.

To monitor the bandwidth delay, use the metric Network bytes to understand the current bandwidth usage. For more information, see Monitor managed online endpoints.

Two response trailers are returned if the bandwidth limit is enforced:

- ms-azureml-bandwidth-request-delay-ms is the delay time in milliseconds it took for the request stream transfer.

- ms-azureml-bandwidth-response-delay-ms is the delay time in milliseconds it took for the response stream transfer.

Blocked by CORS policy

V2 online endpoints don't support Cross-Origin Resource Sharing (CORS) natively. If your web application tries to invoke the endpoint without properly handling the CORS preflight requests, you can get the following error message:

Access to fetch at 'https://{your-endpoint-name}.{your-region}.inference.ml.azure.com/score' from origin http://{your-url} has been blocked by CORS policy: Response to preflight request doesn't pass access control check. No 'Access-control-allow-origin' header is present on the request resource. If an opaque response serves your needs, set the request's mode to 'no-cors' to fetch the resource with the CORS disabled.

You can use Azure Functions, Azure Application Gateway, or another service as an interim layer to handle CORS preflight requests.
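As a rough illustration of what such an interim layer has to do, the following Python sketch builds the response headers for a CORS preflight (OPTIONS) request. The function name, the allow-list, and the header values are assumptions made for this example. They aren't part of any Azure Machine Learning API, and a real proxy would also need to forward the actual scoring request to the endpoint.

```python
from typing import Dict, Iterable, Optional

# Hypothetical allow-list; replace with the origins of your own web apps.
ALLOWED_ORIGINS = {"https://www.contoso.com"}


def build_preflight_headers(
    origin: Optional[str],
    allowed_origins: Iterable[str] = ALLOWED_ORIGINS,
) -> Optional[Dict[str, str]]:
    """Return CORS headers for a preflight response, or None to reject it."""
    if origin is None or origin not in set(allowed_origins):
        return None
    return {
        # Echo back only origins that are on the allow-list.
        "Access-Control-Allow-Origin": origin,
        "Access-Control-Allow-Methods": "POST, OPTIONS",
        "Access-Control-Allow-Headers": "Authorization, Content-Type",
        # Let the browser cache the preflight result for one hour.
        "Access-Control-Max-Age": "3600",
    }
```

The interim layer would return these headers for the OPTIONS request and then add Access-Control-Allow-Origin to the proxied scoring response as well.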

HTTP status codes

When you access online endpoints with REST requests, the returned status codes adhere to the standards for HTTP status codes. The following sections present details about how endpoint invocation and prediction errors map to HTTP status codes.

Common error codes for managed online endpoints

The following table contains common error codes when REST requests consume managed online endpoints:

| Status code | Reason | Description |

|---|---|---|

| 200 | OK | Your model executed successfully within your latency bounds. |

| 401 | Unauthorized | You don't have permission to do the requested action, such as score, or your token is expired or in the wrong format. For more information, see Authentication for managed online endpoints and Authenticate clients for online endpoints. |

| 404 | Not found | The endpoint doesn't have any valid deployment with positive weight. |

| 408 | Request timeout | The model execution took longer than the timeout supplied in request_timeout_ms under request_settings of your model deployment config. |

| 424 | Model error | If your model container returns a non-200 response, Azure returns a 424. Check the Model Status Code dimension under the Requests Per Minute metric on your endpoint's Azure Monitor Metric Explorer. Or check response headers ms-azureml-model-error-statuscode and ms-azureml-model-error-reason for more information. If 424 comes with liveness or readiness probe failing, consider adjusting ProbeSettings to allow more time to probe container liveness or readiness. |

| 429 | Too many pending requests | Your model is currently getting more requests than it can handle. To guarantee smooth operation, Azure Machine Learning permits a maximum of 2 * max_concurrent_requests_per_instance * instance_count requests to process in parallel at any given time. Requests that exceed this maximum are rejected. You can review your model deployment configuration under the request_settings and scale_settings sections to verify and adjust these settings. Also ensure that the environment variable WORKER_COUNT is correctly passed, as outlined in RequestSettings. If you get this error when you're using autoscaling, your model is getting requests faster than the system can scale up. Consider resending requests with an exponential backoff to give the system time to adjust. You could also increase the number of instances by using code to calculate instance count. Combine these steps with setting autoscaling to help ensure that your model is ready to handle the influx of requests. |

| 429 | Rate-limited | The number of requests per second reached the managed online endpoints limits. |

| 500 | Internal server error | Azure Machine Learning-provisioned infrastructure is failing. |
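The 429 guidance in the preceding table suggests resending requests with an exponential backoff. The following Python sketch shows one way to do that. The send_request callable is a hypothetical stand-in for whatever client code you use to score the endpoint, and the attempt count and delay values are illustrative assumptions rather than recommended settings.

```python
import random
import time
from typing import Callable, Tuple


def invoke_with_backoff(
    send_request: Callable[[], Tuple[int, str]],
    max_attempts: int = 5,
    base_delay_s: float = 0.5,
    max_delay_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, str]:
    """Call send_request, retrying on HTTP 429 with exponential backoff.

    send_request is a hypothetical callable that returns (status_code, body).
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    status, body = send_request()
    for attempt in range(1, max_attempts):
        if status != 429:
            break
        # The delay doubles on each retry (0.5 s, 1 s, 2 s, ...), up to a cap.
        delay = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
        # A little random jitter keeps many clients from retrying in lockstep.
        sleep(delay + random.uniform(0, delay / 10))
        status, body = send_request()
    return status, body
```

If every attempt returns 429, the function returns the last 429 response so the caller can decide whether to give up or scale out the deployment.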

Common error codes for Kubernetes online endpoints

The following table contains common error codes when REST requests consume Kubernetes online endpoints:

| Status code | Error | Description |

|---|---|---|

| 409 | Conflict error | When an operation is already in progress, any new operation on that same online endpoint responds with a 409 conflict error. For example, if a create or update online endpoint operation is in progress, triggering a new delete operation throws an error. |

| 502 | Exception or crash in the run() method of the score.py file | When there's an error in score.py, for example an imported package that doesn't exist in the conda environment, a syntax error, or a failure in the init() method, see ERROR: ResourceNotReady to debug the file. |

| 503 | Large spikes in requests per second | The autoscaler is designed to handle gradual changes in load. If you receive large spikes in requests per second, clients might receive HTTP status code 503. Even though the autoscaler reacts quickly, it takes AKS a significant amount of time to create more containers. See How to prevent 503 status code errors. |

| 504 | Request times out | A 504 status code indicates that the request timed out. The default timeout setting is 5 seconds. You can increase the timeout or try to speed up the endpoint by modifying score.py to remove unnecessary calls. If these actions don't correct the problem, the code might be in a nonresponsive state or an infinite loop. Follow ERROR: ResourceNotReady to debug the score.py file. |

| 500 | Internal server error | Azure Machine Learning-provisioned infrastructure is failing. |

How to prevent 503 status code errors

Kubernetes online deployments support autoscaling, which allows replicas to be added to support extra load. For more information, see Azure Machine Learning inference router. The decision to scale up or down is based on utilization of the current container replicas.

Two actions can help prevent 503 status code errors: Changing the utilization level for creating new replicas, or changing the minimum number of replicas. You can use these approaches individually or in combination.

- Change the utilization target at which autoscaling creates new replicas by setting the autoscale_target_utilization to a lower value. This change doesn't cause replicas to be created faster, but at a lower utilization threshold. For example, changing the value to 30% causes replicas to be created when 30% utilization occurs instead of waiting until the service is 70% utilized.

- Change the minimum number of replicas to provide a larger pool that can handle the incoming spikes.

How to calculate instance count

To increase the number of instances, you can calculate the required replicas as follows:

from math import ceil

# target requests per second

target_rps = 20

# time to process the request (in seconds, choose appropriate percentile)

request_process_time = 10

# Maximum concurrent requests per instance

max_concurrent_requests_per_instance = 1

# The target CPU usage of the model container. 70% in this example

target_utilization = .7

concurrent_requests = target_rps * request_process_time / target_utilization

# Required number of instances

instance_count = ceil(concurrent_requests / max_concurrent_requests_per_instance)
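If you need to repeat this estimate for several load scenarios, the same arithmetic can be wrapped in a function. The following is a minimal sketch. The function name and the input validation are additions for illustration, and the formula is the one from the preceding calculation.

```python
from math import ceil


def required_instance_count(
    target_rps: float,
    request_process_time_s: float,
    max_concurrent_requests_per_instance: int,
    target_utilization: float = 0.7,
) -> int:
    """Estimate how many instances are needed to serve a target load."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in the range (0, 1]")
    if max_concurrent_requests_per_instance < 1:
        raise ValueError("max_concurrent_requests_per_instance must be >= 1")
    # Requests in flight at once, padded so instances run below full load.
    concurrent_requests = target_rps * request_process_time_s / target_utilization
    return ceil(concurrent_requests / max_concurrent_requests_per_instance)


# Same inputs as the preceding calculation: 20 * 10 / 0.7 is about 285.7.
print(required_instance_count(20, 10, 1))  # 286
# Allowing four concurrent requests per instance reduces the estimate.
print(required_instance_count(20, 10, 4))  # 72
```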

Note

If you receive request spikes larger than the new minimum replicas can handle, you might receive 503 again. For example, as traffic to your endpoint increases, you might need to increase the minimum replicas.

If the Kubernetes online endpoint is already using the current max replicas and you still get 503 status codes, increase the autoscale_max_replicas value to increase the maximum number of replicas.

Network isolation issues

This section provides information about common network isolation issues.

Online endpoint creation fails with a message about v1 legacy mode

Managed online endpoints are a feature of the Azure Machine Learning v2 API platform. If your Azure Machine Learning workspace is configured for v1 legacy mode, the managed online endpoints don't work. Specifically, if the v1_legacy_mode workspace setting is set to true, v1 legacy mode is turned on, and there's no support for v2 APIs.

To see how to turn off v1 legacy mode, see Network isolation change with our new API platform on Azure Resource Manager.

Important

Check with your network security team before you set v1_legacy_mode to false, because v1 legacy mode might be turned on for a reason.

Online endpoint creation with key-based authentication fails

Use the following command to list the network rules of the Azure key vault for your workspace. Replace <key-vault-name> with the name of your key vault.

az keyvault network-rule list -n <key-vault-name>

The response for this command is similar to the following JSON code:

{

"bypass": "AzureServices",

"defaultAction": "Deny",

"ipRules": [],

"virtualNetworkRules": []

}

If the value of bypass isn't AzureServices, use the guidance in Configure Azure Key Vault networking settings to set it to AzureServices.
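If you run this check from a script, you can parse the command output instead of reading it by eye. The following Python sketch assumes that the output has the same shape as the preceding sample response. The function name is hypothetical.

```python
import json


def bypass_allows_azure_services(network_rules_json: str) -> bool:
    """Return True if the key vault network rules bypass Azure services."""
    rules = json.loads(network_rules_json)
    # A missing bypass field is treated the same as a wrong value.
    return rules.get("bypass") == "AzureServices"


# Sample response copied from the preceding example.
sample_response = """
{
  "bypass": "AzureServices",
  "defaultAction": "Deny",
  "ipRules": [],
  "virtualNetworkRules": []
}
"""
print(bypass_allows_azure_services(sample_response))  # True
```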

Online deployments fail with an image download error

Note

This issue applies when you use the legacy network isolation method for managed online endpoints. In this method, Azure Machine Learning creates a managed virtual network for each deployment under an endpoint.

Check whether the egress-public-network-access flag has a value of disabled for the deployment. If this flag is enabled, and the visibility of the container registry is private, this failure is expected.

Use the following command to check the status of the private endpoint connection. Replace <registry-name> with the name of the Azure Container Registry for your workspace:

az acr private-endpoint-connection list -r <registry-name> --query "[?privateLinkServiceConnectionState.description=='Egress for Microsoft.MachineLearningServices/workspaces/onlineEndpoints'].{ID:id, status:privateLinkServiceConnectionState.status}"

In the response code, verify that the status field is set to Approved. If the value isn't Approved, use the following command to approve the connection. Replace <private-endpoint-connection-ID> with the ID that the preceding command returns.

az network private-endpoint-connection approve --id <private-endpoint-connection-ID> --description "Approved"
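When a registry has several private endpoint connections, a short script can pick out the ones that still need approval. The following Python sketch assumes that the list command returns a JSON array of objects with the ID and status keys defined in its --query expression. The function name and the sample IDs are placeholders.

```python
import json
from typing import List


def connections_needing_approval(query_output_json: str) -> List[str]:
    """Return the IDs of connections whose status isn't Approved."""
    connections = json.loads(query_output_json)
    return [c["ID"] for c in connections if c.get("status") != "Approved"]


# Placeholder output; real IDs are full Azure resource IDs.
sample_output = """
[
  {"ID": "<connection-id-1>", "status": "Approved"},
  {"ID": "<connection-id-2>", "status": "Pending"}
]
"""
print(connections_needing_approval(sample_output))  # ['<connection-id-2>']
```

Each returned ID can then be passed to the approve command as <private-endpoint-connection-ID>.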

Scoring endpoint can't be resolved

Verify that the client issuing the scoring request is in a virtual network that can access the Azure Machine Learning workspace.

Use the nslookup command on the endpoint host name to retrieve the IP address information:

nslookup <endpoint-name>.<endpoint-region>.inference.ml.azure.com

For example, your command might look similar to the following one:

nslookup endpointname.westcentralus.inference.ml.azure.com

The response contains an address that should be in the range provided by the virtual network.

Note

- For Kubernetes online endpoint, the endpoint host name should be the CName (domain name) that's specified in your Kubernetes cluster.

- If the endpoint uses HTTP, the IP address is contained in the endpoint URI, which you can get from the studio UI.

- For more ways to get the IP address of the endpoint, see Update your DNS with an FQDN.

If the nslookup command doesn't resolve the host name, take the actions in one of the following sections.
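The range check described in the nslookup step can also be scripted. The following Python sketch uses only the standard library. The function names are hypothetical, and 10.0.0.0/16 is a made-up range that stands in for the address space of your virtual network.

```python
import ipaddress
import socket


def resolve_host(host_name: str) -> str:
    """Resolve a host name to an IPv4 address, similar to a basic nslookup."""
    return socket.gethostbyname(host_name)


def address_in_range(ip_address: str, address_range: str) -> bool:
    """Check whether an IP address falls inside a CIDR range."""
    return ipaddress.ip_address(ip_address) in ipaddress.ip_network(address_range)


# Hypothetical values: an address inside the range, and one outside it.
print(address_in_range("10.0.1.5", "10.0.0.0/16"))    # True
print(address_in_range("20.42.0.10", "10.0.0.0/16"))  # False
```

To test a real endpoint, pass the result of resolve_host for your endpoint host name as the first argument. If the name doesn't resolve, socket.gethostbyname raises socket.gaierror.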

Managed online endpoints

Use the following command to check whether an A record exists in the private Domain Name System (DNS) zone for the virtual network.

az network private-dns record-set list -z privatelink.api.azureml.ms -o tsv --query [].name

The results should contain an entry similar to *.<GUID>.inference.<region>.

If no inference value is returned, delete the private endpoint for the workspace and then re-create it. For more information, see How to configure a private endpoint.

If the workspace with a private endpoint uses a custom DNS server, run the following command to verify that the resolution from the custom DNS server works correctly:

dig <endpoint-name>.<endpoint-region>.inference.ml.azure.com

Kubernetes online endpoints

Check the DNS configuration in the Kubernetes cluster.

Check whether the Azure Machine Learning inference router, azureml-fe, works as expected. To perform this check, take the following steps:

Run the following command in the azureml-fe pod:

kubectl exec -it deploy/azureml-fe -- /bin/bash

Run one of the following commands:

curl -vi -k https://localhost:<port>/api/v1/endpoint/<endpoint-name>/swagger.json

"Swagger not found"

For HTTP, use the following command:

curl http://localhost:<port>/api/v1/endpoint/<endpoint-name>/swagger.json

"Swagger not found"

If the curl HTTPS command fails or times out but the HTTP command works, check whether the certificate is valid.

If the preceding process fails to resolve to the A record, use the following command to verify whether the resolution works from the Azure DNS virtual public IP address, 168.63.129.16:

dig @168.63.129.16 <endpoint-name>.<endpoint-region>.inference.ml.azure.com

If the preceding command succeeds, troubleshoot the conditional forwarder for Azure Private Link on a custom DNS.

Online deployments can't be scored

Run the following command to see the status of a deployment that can't be scored:

az ml online-deployment show -e <endpoint-name> -n <deployment-name> --query '{name:name,state:provisioning_state}'

A value of Succeeded for the state field indicates a successful deployment.

For a successful deployment, use the following command to check that traffic is assigned to the deployment:

az ml online-endpoint show -n <endpoint-name> --query traffic

The response from this command should list the percentage of traffic that's assigned to each deployment.

Tip

This step isn't necessary if you use the azureml-model-deployment header in your request to target this deployment.

If the traffic assignments or deployment header are set correctly, use the following command to get the logs for the endpoint:

az ml online-deployment get-logs -e <endpoint-name> -n <deployment-name>

Review the logs to see whether there's a problem running the scoring code when you submit a request to the deployment.
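The traffic check in the preceding steps can be automated when you manage many endpoints. The following Python sketch assumes that the traffic query returns a JSON object that maps deployment names to traffic percentages. The function name and the sample deployment names are hypothetical.

```python
import json
from typing import Dict, List


def deployments_receiving_traffic(traffic_json: str) -> List[str]:
    """Return the names of deployments that have a positive traffic weight."""
    traffic: Dict[str, int] = json.loads(traffic_json)
    return [name for name, percent in traffic.items() if percent > 0]


# Hypothetical output: all traffic goes to the blue deployment.
sample_traffic = '{"blue": 100, "green": 0}'
print(deployments_receiving_traffic(sample_traffic))  # ['blue']
```

An empty result means that no deployment has a positive weight, so requests that don't set the azureml-model-deployment header have no deployment to route to.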

Inference server issues

This section provides basic troubleshooting tips for the Azure Machine Learning inference HTTP server.

Check installed packages

Follow these steps to address issues with installed packages:

Gather information about installed packages and versions for your Python environment.

In your environment file, check the version of the azureml-inference-server-http Python package that's specified. In the Azure Machine Learning inference HTTP server startup logs, check the version of the inference server that's displayed. Confirm that the two versions match.

In some cases, the pip dependency resolver installs unexpected package versions. You might need to run pip to correct installed packages and versions.

If you specify Flask or its dependencies in your environment, remove these items.

- Dependent packages include flask, jinja2, itsdangerous, werkzeug, markupsafe, and click.

- The flask package is listed as a dependency in the inference server package. The best approach is to allow the inference server to install the flask package.

- When the inference server is configured to support new versions of Flask, the inference server automatically receives the package updates as they become available.
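To gather the installed versions in one step, you can query package metadata from Python. The following sketch uses the standard library importlib.metadata module, which is available in Python 3.8 and later. The function name is hypothetical, and the package list is taken from the packages named in this section.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional

# The inference server package and the Flask-related packages named above.
PACKAGES_TO_CHECK = (
    "azureml-inference-server-http",
    "flask",
    "jinja2",
    "itsdangerous",
    "werkzeug",
    "markupsafe",
    "click",
)


def installed_versions(
    packages: Iterable[str] = PACKAGES_TO_CHECK,
) -> Dict[str, Optional[str]]:
    """Map each package name to its installed version, or None if missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions().items():
    print(f"{name}: {version or 'not installed'}")
```

Run the script in the same Python environment that the inference server uses, and compare the reported server version with the one in your environment file.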

Check the inference server version

The azureml-inference-server-http server package is published to PyPI. The PyPI page lists the changelog and all versions of the package.

If you use an early package version, update your configuration to the latest version. The following table summarizes stable versions, common issues, and recommended adjustments:

| Package version | Description | Issue | Resolution |

|---|---|---|---|

| 0.4.x | Bundled in training images dated 20220601 or earlier and azureml-defaults package versions 0.1.34 through 1.43. Latest stable version is 0.4.13. | For server versions earlier than 0.4.11, you might encounter Flask dependency issues, such as can't import name Markup from jinja2. | Upgrade to version 0.4.13 or 1.4.x, the latest version, if possible. |

| 0.6.x | Preinstalled in inferencing images dated 20220516 and earlier. Latest stable version is 0.6.1. | N/A | N/A |

| 0.7.x | Supports Flask 2. Latest stable version is 0.7.7. | N/A | N/A |

| 0.8.x | Uses an updated log format. Ends support for Python 3.6. | N/A | N/A |

| 1.0.x | Ends support for Python 3.7. | N/A | N/A |

| 1.1.x | Migrates to pydantic 2.0. | N/A | N/A |

| 1.2.x | Adds support for Python 3.11. Updates gunicorn to version 22.0.0. Updates werkzeug to version 3.0.3 and later versions. | N/A | N/A |

| 1.3.x | Adds support for Python 3.12. Upgrades certifi to version 2024.7.4. Upgrades flask-cors to version 5.0.0. Upgrades the gunicorn and pydantic packages. | N/A | N/A |

| 1.4.x | Upgrades waitress to version 3.0.1. Ends support for Python 3.8. Removes the compatibility layer that prevents the Flask 2.0 upgrade from breaking request object code. | If you depend on the compatibility layer, your request object code might not work. | Migrate your score script to Flask 2. |

Check package dependencies

The most relevant dependent packages for the azureml-inference-server-http server package include:

- flask

- opencensus-ext-azure

- inference-schema

If you specify the azureml-defaults package in your Python environment, the azureml-inference-server-http package is a dependent package. The dependency is installed automatically.

Tip

If you use the Azure Machine Learning SDK for Python v1 and don't explicitly specify the azureml-defaults package in your Python environment, the SDK might automatically add the package. However, the package version is locked relative to the SDK version. For example, if the SDK version is 1.38.0, the azureml-defaults==1.38.0 entry is added to the environment's pip requirements.

TypeError during inference server startup

You might encounter the following TypeError during inference server startup:

TypeError: register() takes 3 positional arguments but 4 were given

File "/var/azureml-server/aml_blueprint.py", line 251, in register

super(AMLBlueprint, self).register(app, options, first_registration)

TypeError: register() takes 3 positional arguments but 4 were given

This error occurs when you have Flask 2 installed in your Python environment, but your azureml-inference-server-http package version doesn't support Flask 2. Support for Flask 2 is available in the azureml-inference-server-http 0.7.0 package and later versions, and the azureml-defaults 1.44 package and later versions.
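The version threshold in the preceding paragraph can be checked with a simple comparison. The following Python sketch handles plain dotted release versions such as 0.7.7 and assumes there are no prerelease suffixes. The function names are hypothetical.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Parse a dotted release version such as '0.7.7' into a tuple of ints."""
    parts = tuple(int(part) for part in version.split("."))
    # Pad short versions such as '0.7' so they compare as (0, 7, 0).
    return parts + (0,) * (3 - len(parts))


def server_supports_flask2(server_version: str) -> bool:
    """Return True for azureml-inference-server-http 0.7.0 and later."""
    return parse_version(server_version) >= (0, 7, 0)


print(server_supports_flask2("0.4.13"))  # False
print(server_supports_flask2("0.7.0"))   # True
print(server_supports_flask2("1.4.0"))   # True
```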

- If you don't use the Flask 2 package in an Azure Machine Learning Docker image, use the latest version of the azureml-inference-server-http or azureml-defaults package.

- If you use the Flask 2 package in an Azure Machine Learning Docker image, confirm that the image build version is July 2022 or later.

You can find the image version in the container logs. For example, see the following log statements:

2022-08-22T17:05:02,147738763+00:00 | gunicorn/run | AzureML Container Runtime Information

2022-08-22T17:05:02,161963207+00:00 | gunicorn/run | ###############################################

2022-08-22T17:05:02,168970479+00:00 | gunicorn/run |

2022-08-22T17:05:02,174364834+00:00 | gunicorn/run |

2022-08-22T17:05:02,187280665+00:00 | gunicorn/run | AzureML image information: openmpi4.1.0-ubuntu20.04, Materialization Build:20220708.v2

2022-08-22T17:05:02,188930082+00:00 | gunicorn/run |

2022-08-22T17:05:02,190557998+00:00 | gunicorn/run |

The build date of the image appears after the Materialization Build notation. In the preceding example, the image version is 20220708, or July 8, 2022. The image in this example is compatible with Flask 2.

If you don't see a similar message in your container log, your image is out-of-date and should be updated. If you use a Compute Unified Device Architecture (CUDA) image and you can't find a newer image, check the AzureML-Containers repo to see whether your image is deprecated. You can find designated replacements for deprecated images.
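If you collect container logs programmatically, you can extract the build date with a regular expression. The following Python sketch assumes the log format shown in the preceding example and interprets "July 2022 or later" as a build date on or after July 1, 2022. The function names are hypothetical.

```python
import re
from datetime import date
from typing import Optional

# Matches the eight-digit date that follows the Materialization Build notation.
BUILD_PATTERN = re.compile(r"Materialization Build:(\d{4})(\d{2})(\d{2})")


def image_build_date(log_line: str) -> Optional[date]:
    """Extract the image build date from a container log line, if present."""
    match = BUILD_PATTERN.search(log_line)
    if match is None:
        return None
    year, month, day = (int(group) for group in match.groups())
    return date(year, month, day)


def image_supports_flask2(log_line: str) -> bool:
    """Return True if the build date is July 1, 2022 or later (assumed cutoff)."""
    build = image_build_date(log_line)
    return build is not None and build >= date(2022, 7, 1)


# Log line taken from the preceding example.
sample_line = (
    "2022-08-22T17:05:02,187280665+00:00 | gunicorn/run | AzureML image information: "
    "openmpi4.1.0-ubuntu20.04, Materialization Build:20220708.v2"
)
print(image_build_date(sample_line))       # 2022-07-08
print(image_supports_flask2(sample_line))  # True
```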

If you use the inference server with an online endpoint, you can also find the logs in Azure Machine Learning studio. On the page for your endpoint, select the Logs tab.

If you deploy with the SDK v1 and don't explicitly specify an image in your deployment configuration, the inference server applies the openmpi4.1.0-ubuntu20.04 package with a version that matches your local SDK toolset. However, the installed version might not be the latest available version of the image.

For SDK version 1.43, the inference server installs the openmpi4.1.0-ubuntu20.04:20220616 package version by default, but this package version isn't compatible with SDK 1.43. Make sure you use the latest SDK for your deployment.

If you can't update the image, you can temporarily avoid the issue by pinning the azureml-defaults==1.43 or azureml-inference-server-http~=0.4.13 entries in your environment file. These entries direct the inference server to install the older version with flask 1.0.x.

ImportError or ModuleNotFoundError during inference server startup

You might encounter an ImportError or ModuleNotFoundError on specific modules, such as opencensus, jinja2, markupsafe, or click, during inference server startup. The following example shows the error message:

ImportError: cannot import name 'Markup' from 'jinja2'

The import and module errors occur when you use version 0.4.10 or earlier versions of the inference server that don't pin the Flask dependency to a compatible version. To prevent the issue, install a later version of the inference server.

Other common issues

Other common online endpoint issues are related to conda installation and autoscaling.

Conda installation issues

Issues with MLflow deployment generally stem from issues with the installation of the user environment specified in the conda.yml file.

To debug conda installation problems, try the following steps:

- Check the conda installation logs. If the container crashed or took too long to start up, the conda environment update probably failed to resolve correctly.

- Install the mlflow conda file locally with the command conda env create -n userenv -f <CONDA_ENV_FILENAME>.

- If there are errors locally, try resolving the conda environment and creating a functional one before redeploying.

- If the container crashes even if it resolves locally, the SKU size used for deployment might be too small.

- Conda package installation occurs at runtime, so if the SKU size is too small to accommodate all the packages in the conda.yml environment file, the container might crash.

- A Standard_F4s_v2 VM is a good starting SKU size, but you might need larger VMs depending on the dependencies the conda file specifies.

- For Kubernetes online endpoints, the Kubernetes cluster must have a minimum of four vCPU cores and 8 GB of memory.

Autoscaling issues

If you have trouble with autoscaling, see Troubleshoot Azure Monitor autoscale.

For Kubernetes online endpoints, the Azure Machine Learning inference router is a front-end component that handles autoscaling for all model deployments on the Kubernetes cluster. For more information, see Autoscale Kubernetes inference routing.